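On the national statistics website, China's administrative division data is laid out like a set of Russian nesting dolls.

The levels are nested one inside another, from the province and city level all the way down to the village (or residents' committee) level.

The data set has more than 670,000 rows (P.S. that is the scale of our country's administrative divisions; a populous country indeed).

I found a good blogger online who also used this nested approach and crawled the data down to the county level, so I borrowed that code and adapted it to get what I wanted. (I could not find that blogger again afterwards; if you know who it is, please post the link in the comments. Thanks.)

The result collects all the data for province, city, county, township, and village, stored in separate tables, one table per province.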
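The division codes on the site are 12 digits long, and the crawler in this post slices them at 2, 4, 6, and 9 digits to get the province, city, county, and township prefixes. A minimal sketch of that decomposition, assuming a full 12-digit code as input; `split_code` is a hypothetical helper for illustration and is not part of the original crawler:

```python
def split_code(code):
    """Split a 12-digit division code into its cumulative level prefixes."""
    if len(code) != 12 or not code.isdigit():
        raise ValueError("expected a 12-digit code, got %r" % (code,))
    return {
        "province": code[:2],   # first 2 digits
        "city": code[:4],       # first 4 digits
        "county": code[:6],     # first 6 digits
        "town": code[:9],       # first 9 digits
        "village": code,        # all 12 digits
    }


parts = split_code("420102001001")  # sample code made up for illustration
print(parts["county"], parts["town"])
```

Because every prefix is contained in the longer codes below it, a row's parent at any level can be recovered from its code alone.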

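After repeated optimization and improvement, the code ended up as follows.

Code notes:

1. My program uses a for loop and crawls everything in one run (less effort, but it takes a long time). You can pull the provinces apart and start several processes, which will be much faster. (I don't know how to use multiprocessing in code; if you do, give it a try.)

2. Try to run it on a reasonably well-equipped Linux server. It was extremely slow when I ran it on Windows, so in the end I had to move to Linux.

3. Because the data volume is so large, some crawls will inevitably fail for all sorts of reasons (mostly failed network requests), so I added a lot of key logging; whatever gets logged is what you failed to crawl. The code for re-crawling the failures is further down, and you will certainly need it.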
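The multi-process idea in note 1 could be sketched as follows. This is only an outline under assumptions, not something the original program does: `split_indices`, `crawl_chunk`, and `run_parallel` are hypothetical names, and `crawl_chunk` is a placeholder where the per-province work (opening that province's CSV and log file, then crawling it) would go.

```python
from multiprocessing import Process


def split_indices(total, workers):
    """Deal province indices 0..total-1 round-robin into `workers` lists."""
    return [list(range(w, total, workers)) for w in range(workers)]


def crawl_chunk(indices):
    """Placeholder worker: replace the print with the per-province crawl."""
    for i in indices:
        print("would crawl province", i)


def run_parallel(total, workers):
    """Start one process per chunk and wait for all of them to finish."""
    procs = [Process(target=crawl_chunk, args=(chunk,))
             for chunk in split_indices(total, workers)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
```

`run_parallel(total, workers)` should be called under an `if __name__ == '__main__':` guard, with `total` set to the number of province cells on the index page. Since each province gets its own CSV and log file, the processes do not need to share any files. A small number of workers is probably wise, because more parallel requests also means more load on the site and likely more failed requests.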

    # -*- coding:utf-8 -*-
    import json
    import time

    import requests
    from lxml import etree

    # Request headers copied from a browser session, sent with every request.
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.204 Safari/537.36',
        'Cookie': 'AD_RS_COOKIE=20080918; _trs_uv=kahvgie3_6_fc6v',
    }

    # Province level
    def province(index):
        # Fetch the index page and pick the province cell at position `index`.
        response = requests.get('http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/index.html', headers=headers, timeout=30)
        response.encoding = 'gbk'
        html = etree.HTML(response.text)
        # Each province is one <td> inside the rows with class "provincetr".
        tds = html.xpath('//tr[@class="provincetr"]/td')
        if index >= len(tds):
            print('province index out of range', index)
            log_file.write('%s,%s\n' % ('province index out of range', index))
            return
        td = tds[index]
        try:
            province_name = td.xpath('./a/text()')[0]
            page = td.xpath('./a/@href')[0]
            province_code = page[:2]  # the link starts with the 2-digit province code
            city_url = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/' + page
            fp.write('%s,%s\t,%s\n' % (province_name, province_code, province_name))
        except Exception:
            print('province write failed ', index)
            log_file.write('%s,%s\n' % ('province write failed ', index))
            return
        try:
            time.sleep(0.2)
            city(city_url)
        except Exception:
            print("city failed", city_url)
            log_file.write('%s,%s\n' % ("city failed", city_url))

    # City level
    def city(province_url):
        time.sleep(5)
        response2 = requests.get(province_url, headers=headers, timeout=30)
        response2.encoding = 'gbk'
        html2 = etree.HTML(response2.text)
        trs = html2.xpath('//tr[@class="citytr"]')
        for tr in trs:
            try:
                page = tr.xpath('./td[1]/a/@href')[0]
                page_list = page.split('/')
                # Despite its name, city_code holds the first path segment of the
                # link (the province directory, e.g. '42'); the lower levels need
                # it to rebuild absolute URLs.
                city_code = page_list[0]
                country_url = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/' + page
                city_id = tr.xpath('./td[1]/a/text()')[0]
                city_name = tr.xpath('./td[2]/a/text()')[0]
                fp.write('%s,%s\t,%s\n' % (city_name, city_id[:4], city_name))
            except Exception:
                print('city write failed:', province_url)
                log_file.write('%s,%s\t\n' % ('city write failed:', province_url))
                continue
            try:
                time.sleep(0.2)
                country(country_url, city_code)
            except Exception:
                print('country failed ', country_url, city_code)
                log_file.write('%s,%s,%s\t\n' % ('country failed ', country_url, city_code))

    # County level
    def country(country_url, city_code):
        print(country_url)
        time.sleep(5)
        response3 = requests.get(country_url, headers=headers, timeout=30)
        response3.encoding = 'gbk'
        html3 = etree.HTML(response3.text)
        trs = html3.xpath('//tr[@class="countytr"]')
        if not trs:
            # No county rows: this page lists towns directly. Write a placeholder
            # county row ('市辖区', municipal district) and parse the page as towns.
            fp.write('%s,%s\t,%s\n' % ('市辖区', country_url[-9:-5] + '00', '市辖区'))
            town(country_url, '00', city_code)
            return
        for tr in trs:
            try:
                page = tr.xpath('./td[1]/a/@href')[0]
                page_list = page.split('/')
                country_code = page_list[0]
                town_url = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/' + city_code + '/' + page
                country_id = tr.xpath('./td[1]/a/text()')[0]
                print(country_id)
                country_name = tr.xpath('./td[2]/a/text()')[0]
                print(country_name)
                fp.write('%s,%s\t,%s\n' % (country_name, country_id[:6], country_name))
            except Exception:
                # Rows without a link have no lower level; read the plain text instead.
                try:
                    country_id = tr.xpath('./td[1]/text()')[0]
                    country_name = tr.xpath('./td[2]/text()')[0]
                    fp.write('%s,%s\t,%s\n' % (country_name, country_id[:6], country_name))
                    print("no lower level: " + country_name)
                    log_file.write('%s,%s\n' % ("no lower level:", country_name))
                except Exception:
                    print('country write failed', country_url)
                    log_file.write('%s,%s\n' % ('country write failed', country_url))
                continue
            try:
                time.sleep(0.2)
                town(town_url, country_code, city_code)
            except Exception:
                print('town failed ', town_url, country_code, city_code)
                log_file.write('%s,%s,%s\t,%s\t\n' % ('town failed ', town_url, country_code, city_code))

    # Town level
    def town(town_url, country_code, city_code):
        time.sleep(5)
        response4 = requests.get(town_url, headers=headers, timeout=30)
        response4.encoding = 'gbk'
        html4 = etree.HTML(response4.text)
        trs = html4.xpath('//tr[@class="towntr"]')
        # Directory that the links on this page are relative to. The placeholder
        # county code '00' marks a page reached without a county level, whose
        # links hang directly off city_code.
        if country_code == '00':
            prefix = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/' + city_code + '/'
        else:
            prefix = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/' + city_code + '/' + country_code + '/'
        for tr in trs:
            try:
                page = tr.xpath('./td[1]/a/@href')[0]
                village_url = prefix + page
                town_id = tr.xpath('./td[1]/a/text()')[0]
                town_name = tr.xpath('./td[2]/a/text()')[0]
                fp.write('%s,%s\t,%s\n' % (town_name, town_id[:9], town_name))
            except Exception:
                # Rows without a link have no lower level; read the plain text instead.
                try:
                    town_id = tr.xpath('./td[1]/text()')[0]
                    town_name = tr.xpath('./td[2]/text()')[0]
                    fp.write('%s,%s\t,%s\n' % (town_name, town_id[:9], town_name))
                    log_file.write('%s,%s\n' % ("no lower level:", town_name))
                except Exception:
                    print('town write failed:', town_url)
                    log_file.write('%s,%s\n' % ('town write failed:', town_url))
                continue
            try:
                time.sleep(0.2)
                village(village_url)
            except Exception:
                print('village failed', village_url)
                log_file.write('%s,%s\n' % ('village failed', village_url))

    # Village level
    def village(village_url):
        time.sleep(5)
        response5 = requests.get(village_url, headers=headers, timeout=30)
        response5.encoding = 'gbk'
        html5 = etree.HTML(response5.text)
        trs = html5.xpath('//tr[@class="villagetr"]')
        for tr in trs:
            try:
                # The first cell holds the division code, the third cell the name.
                village_id = tr.xpath('./td[1]/text()')[0]
                village_name = tr.xpath('./td[3]/text()')[0]
                # Writing rows makes no network request, so no delay is needed here.
                fp.write('%s,%s\t\n' % (village_name, village_id))
            except Exception:
                print('village write failed', village_url)
                log_file.write('%s,%s\n' % ('village write failed', village_url))

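    if __name__ == '__main__':
        # One CSV file and one error log per province index.
        for i in range(0, 32):
            csv_name = "quhua_" + str(i) + ".csv"
            err_name = "error" + str(i) + ".txt"
            log_file = open(err_name, 'a')
            log_file.write('%s\n' % (csv_name))
            print(csv_name)
            fp = open(csv_name, 'a')
            fp.write('%s,%s\n' % ('区划名称', '区划代码'))  # header: division name, division code
            try:
                province(i)
            except Exception as exc:
                # Keep going so the files are still closed and later provinces still run.
                print('province failed', i, exc)
                log_file.write('%s,%s\n' % ('province failed', i))
            time.sleep(30)
            fp.close()
            time.sleep(5)
            log_file.close()
            time.sleep(30)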

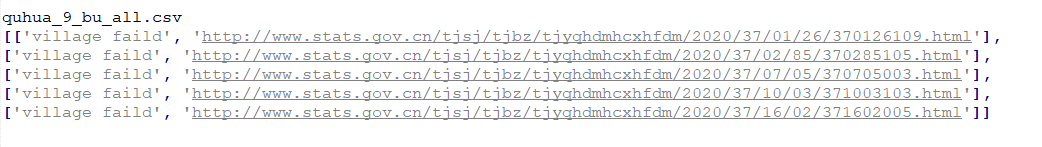
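Since most failures are failed network requests, one option is to retry each request a few times before logging it as failed. The following is a minimal sketch of that idea, not what the crawler above does: `fetch_with_retry` is a hypothetical helper, and the `fetch` argument is any callable that takes a URL and raises on failure.

```python
import time


def fetch_with_retry(fetch, url, retries=3, delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff when it raises."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_error = None
    for attempt in range(retries):
        try:
            return fetch(url)
        except Exception as exc:
            last_error = exc
            # Wait delay, 2*delay, 4*delay, ... between attempts,
            # but not after the final one.
            if attempt < retries - 1:
                sleep(delay * (2 ** attempt))
    # Every attempt failed: re-raise the last error so the caller can log it.
    raise last_error
```

With the crawler's own names, a call might look like `fetch_with_retry(lambda u: requests.get(u, headers=headers, timeout=10), url)`. The existing `except` blocks would then only log a URL after all attempts have failed, which should shorten the list of pages to re-crawl.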

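If some data for a single province was not crawled, I log it, as in the screenshot. (On Windows it prints as a tuple, on Linux as several strings; you need to turn it into the list form shown below. Failures from different provinces can go together, as long as each province's failures sit in their own list inside the outer list; the nesting in my code makes this clear.)

Add a dispatch function and modify the main function. The code for re-crawling the failures is below. (I suggest running one province at a time; otherwise, if something fails again, you may not be able to tell which failure belongs to which province.)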
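Converting the log by hand gets tedious for long logs, so the conversion could be scripted. A rough sketch, assuming the lines come from the `error*.txt` files written by the crawler (comma-separated, with stray tabs and spaces around the fields); `parse_error_log` is a hypothetical helper, and it only keeps the three kinds of line that the retry code understands:

```python
def parse_error_log(lines):
    """Turn logged failure lines into the records expected by the retry code."""
    # Number of comma-separated fields each retryable line should have.
    expected_fields = {"country": 3, "town": 4, "village": 2}
    records = []
    for line in lines:
        fields = [f.strip() for f in line.split(",")]
        words = fields[0].split()
        # Keep only "<level> fail..." lines; lines such as "... write failed"
        # or the CSV name are not in the retry format and are skipped.
        if len(words) < 2 or not words[1].startswith("fail"):
            continue
        level = words[0]
        if expected_fields.get(level) != len(fields):
            continue
        if not fields[1].startswith("http"):
            continue
        records.append([level + " failed"] + fields[1:])
    return records


sample = [
    "quhua_16.csv\n",
    "country faild ,http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/42/4201.html,42\t\n",
]
print(parse_error_log(sample))
```

The records for one province form one inner list of the nested list used by the retry code; only the first word of each record's first element matters there.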

    def single(record):
        # record[0] is the logged message, e.g. 'country failed ';
        # its first word names the level to crawl again.
        url_type = record[0].split(' ')
        print(url_type[0])
        if url_type[0] == 'country':
            country(record[1], record[2])
        elif url_type[0] == 'village':
            village(record[1])
        elif url_type[0] == 'town':
            town(record[1], record[2], record[3])
        else:
            print('invalid record')

    if __name__ == '__main__':
        # Outer list: one entry per province. Inner lists: the failures logged for it.
        list_a = [
            [['country failed ', 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/42/4201.html', '42'],
             ['country failed ', 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/42/4213.html', '42']],
            [['country failed ', 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/43/4301.html', '43'],
             ['country failed ', 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2020/43/4313.html', '43']]
        ]
        for i, list_all in enumerate(list_a):
            print(i)
            print(time.time())
            csv_name = "quhua_" + str(i) + "_bu.csv"
            print(csv_name)
            fp = open(csv_name, 'a')
            # The crawl functions also write to log_file, so it must be open here too.
            log_file = open("error" + str(i) + "_bu.txt", 'a')
            fp.write('%s,%s\n' % ('区划名称', '补充内容'))  # header: division name, supplementary content
            for list_single in list_all:
                single(list_single)
                time.sleep(40)
            fp.close()
            log_file.close()
            print(time.time())

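One entry in the crawled data is Kinmen County (金门县), Quanzhou, Fujian Province. The national division site lists no township-level or village-level data for it; I looked it up later and found that this is because it is administered by Taiwan.

Keep this special case in mind when working with the data.

If you want the data I crawled, you can download it from my resources.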
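One way to spot such cases in a finished CSV is to list the county-level codes that have no township-level rows under them. A rough sketch, assuming the code column has already been read into a list of strings (the crawler writes a trailing tab after each code, which is stripped here); `counties_without_towns` is a hypothetical helper and the sample codes are made up:

```python
def counties_without_towns(codes):
    """Return 6-digit county codes that have no 9-digit town code beneath them."""
    cleaned = [c.strip() for c in codes]
    # A 9-digit town code starts with the 6-digit code of its county.
    town_parents = {c[:6] for c in cleaned if len(c) == 9}
    return [c for c in cleaned if len(c) == 6 and c not in town_parents]


sample_codes = ["42", "4201", "420102\t", "420102001\t", "420199\t"]
print(counties_without_towns(sample_codes))
```

Rows that the site itself lists without a lower level are flagged as well, so the result is a list to check by hand rather than a list of errors.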

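A Crawler for National Administrative Division Data in Practice

This article presents a Python crawler project for collecting China's national administrative division data, covering the province, city, county, township, and village levels. It parses the page structure step by step to crawl the data level by level, and includes optimizations for the large data volume and the unstable network.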
