不借助深度学习框架实现MNIST识别

import numpy as np

import gzip

import _pickle as cPickle

def sigmoid(z):

return 1.0 / (1 + np.exp(-z))

def sigmoid_prime(z):

'''sigmoid函数的导数'''

return sigmoid(z) * (1-sigmoid(z))

def load_data():

f = gzip.open('./mnist.pkl.gz', 'rb')

training_data,validation_data, test_data = cPickle.load(f,encoding='bytes')

f.close()

return (training_data, validation_data, test_data)

def load_data_wrapper():

tr_d, va_d, te_d = load_data()

training_inputs = [np.reshape(x, (784, 1)) for x in tr_d[0]]

training_results = [vectorized_result(y) for y in tr_d[1]]

training_data = list(zip(training_inputs, training_results))

validation_inputs = [np.reshape(x, (784, 1)) for x in va_d[0]]

validation_data = list(zip(validation_inputs, va_d[1]))

test_inputs = [np.reshape(x, (784, 1)) for x in te_d[0]]

test_data = list(zip(test_inputs, te_d[1]))

return training_data, validation_data, test_data

def vectorized_result(j):

e = np.zeros((10, 1))

e[j] = 1.0

return e

class Network(object):

def __init__(self,sizes):

self.num_layers = len(sizes)

self.sizes = sizes

self.biases = [np.random.randn(y, 1) for y in sizes[1:]]

self.weights = [np.random.randn(y,x) for x ,y in zip(sizes[:-1], sizes[1:])]

def feedforward(self, a):

'''返回输入a对应的输出'''

for b,w in zip(self.biases, self.weights):

a = sigmoid(np.dot(w,a) + b)

return a

def SGD(self, training_data, epochs,mini_batch_size, eta,test_data=None):

'''使用随机梯度下降算法训练模型。training_data为(x,y)的形式,代表训练数据及其对应的输出'''

if test_data:

n_test = len(test_data)

n = len(training_data)

for j in range(epochs):

np.random.shuffle(training_data)

mini_batches = [training_data[k: k + mini_batch_size] for k in range(0, n, mini_batch_size)]

for mini_batch in mini_batches:

self.update_mini_batch(mini_batch, eta)

if test_data:

print("Epoch {0}: {1} / {2}".format(j, self.evaluate(test_data), n_test))

else:

print("Epoch {0} complete".format(j))

def update_mini_batch(self, mini_batch, eta):

nabla_b = [np.zeros(b.shape) for b in self.biases]

nabla_w = [np.zeros(w.shape) for w in self.weights]

for x,y in mini_batch:

delta_nabla_b, delta_nabla_w = self.backprop(x,y)

nabla_b = [nb+dnb for nb,dnb in zip(nabla_b, delta_nabla_b)]

nabla_w = [nw+dnw for nw, dnw in zip(nabla_w,delta_nabla_w)]

self.weights = [w - (eta / len(mini_batch)) * nw for w, nw in zip(self.weights, nabla_w)]

self.biases = [b - (eta / len(mini_batch)) * nb for b, nb in zip(self.biases,nabla_b)]

def backprop(self,x, y):

nabla_b = [np.zeros(b.shape) for b in self.biases]

nabla_w = [np.zeros(w.shape) for w in self.weights]

activation = x

activations = [x]

zs = []

'''前向计算'''

for b,w in zip(self.biases, self.weights):

z = np.dot(w, activation) + b

zs.append(z)

activation = sigmoid(z)

activations.append(activation)

delta = self.cost_derivative(activations[-1], y) * sigmoid_prime(zs[-1])

nabla_b[-1] = delta

nabla_w[-1] = np.dot(delta, activations[-2].transpose())

'''误差反向传播'''

for l in range(2, self.num_layers):

z = zs[-l]

sp = sigmoid_prime(z)

delta = np.dot(self.weights[-l+1].transpose(), delta) * sp

nabla_b[-l] = delta

nabla_w[-l] = np.dot(delta,activations[-l-1].transpose())

return (nabla_b,nabla_w)

def evaluate(self, test_data):

test_results = [(np.argmax(self.feedforward(x)), y ) for (x,y) in test_data]

return sum(int(x==y) for (x,y) in test_results)

def cost_derivative(self, output_activations,y):

'''代价函数选取均方差,该函数定义了代价函数的导数'''

return (output_activations - y)

training_data, validation_data, test_data = load_data_wrapper()

net = Network([784, 30, 10])

net.SGD(training_data, 30, 10, 3.0, test_data=test_data)

Epoch 0: 8667 / 10000

Epoch 1: 8685 / 10000

Epoch 2: 8750 / 10000

Epoch 3: 8784 / 10000

Epoch 4: 9007 / 10000

Epoch 5: 9026 / 10000

Epoch 6: 9000 / 10000

Epoch 7: 8700 / 10000

Epoch 8: 8865 / 10000

Epoch 9: 8813 / 10000

Epoch 10: 8874 / 10000

Epoch 11: 9006 / 10000

Epoch 12: 9063 / 10000

Epoch 13: 9152 / 10000

Epoch 14: 9006 / 10000

Epoch 15: 9107 / 10000

Epoch 16: 9100 / 10000

Epoch 17: 9076 / 10000

Epoch 18: 9048 / 10000

Epoch 19: 9139 / 10000

Epoch 20: 9098 / 10000

Epoch 21: 9130 / 10000

Epoch 22: 9016 / 10000

Epoch 23: 9176 / 10000

Epoch 24: 9172 / 10000

Epoch 25: 9155 / 10000

Epoch 26: 9247 / 10000

Epoch 27: 9188 / 10000

Epoch 28: 9093 / 10000

Epoch 29: 9213 / 10000

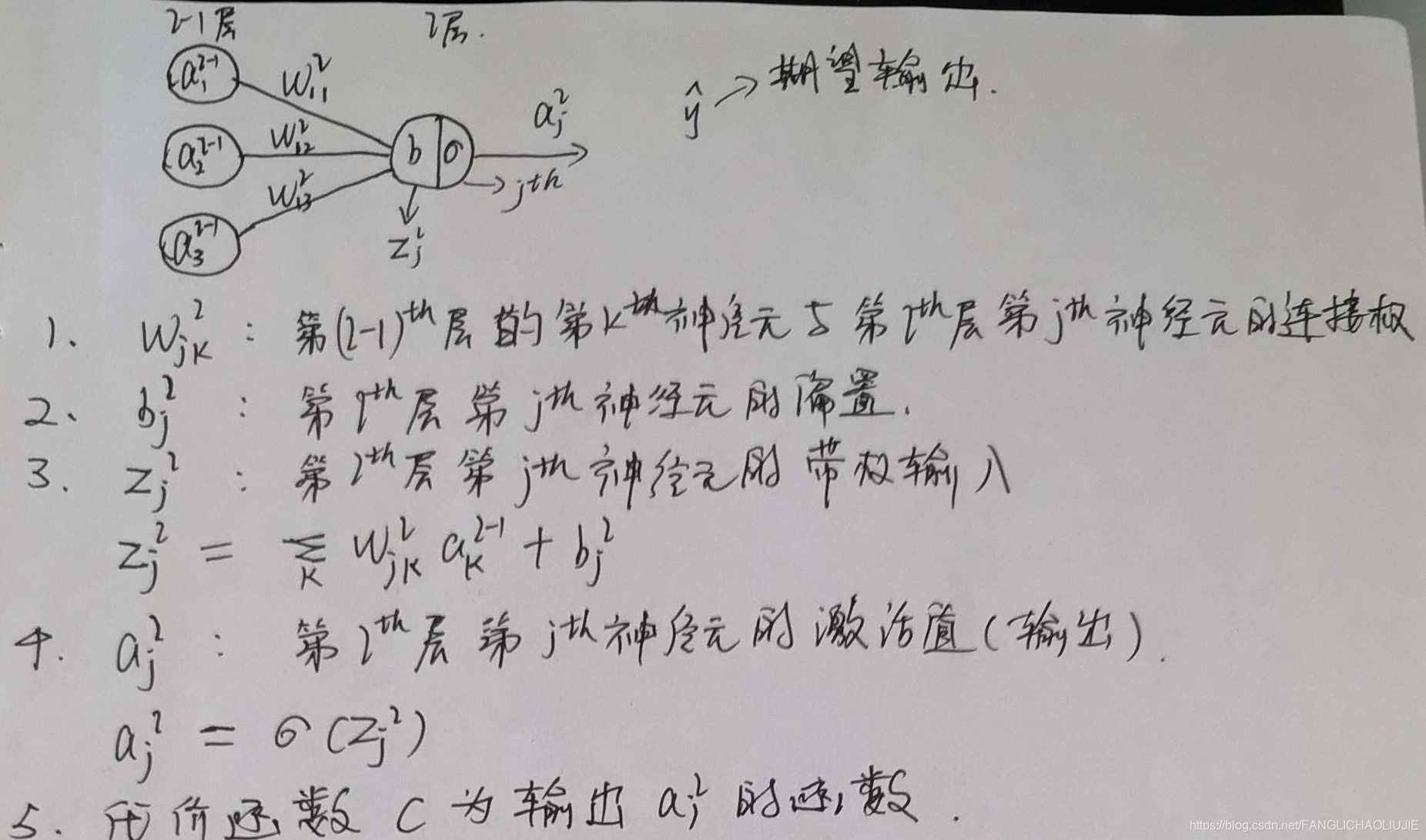

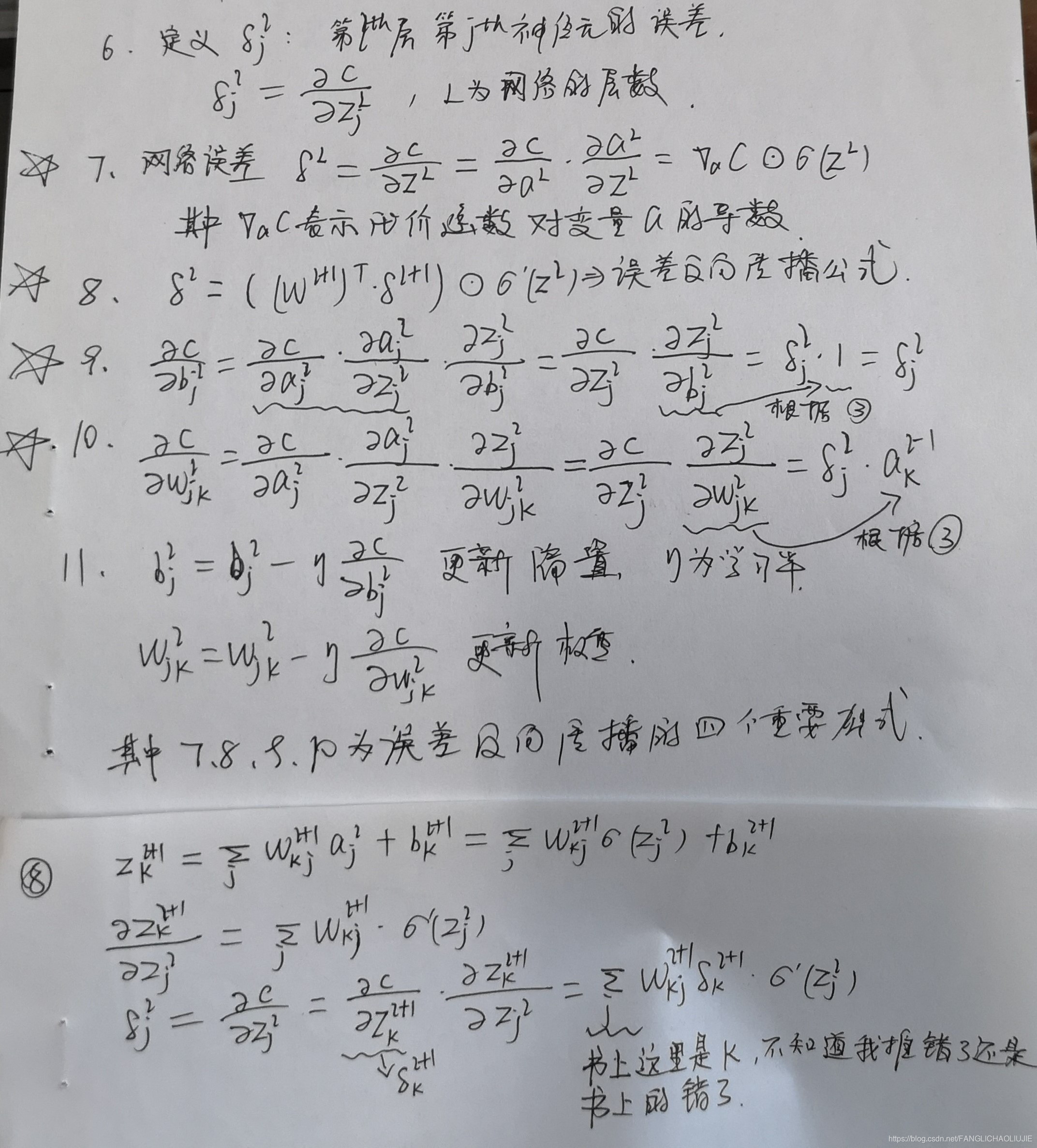

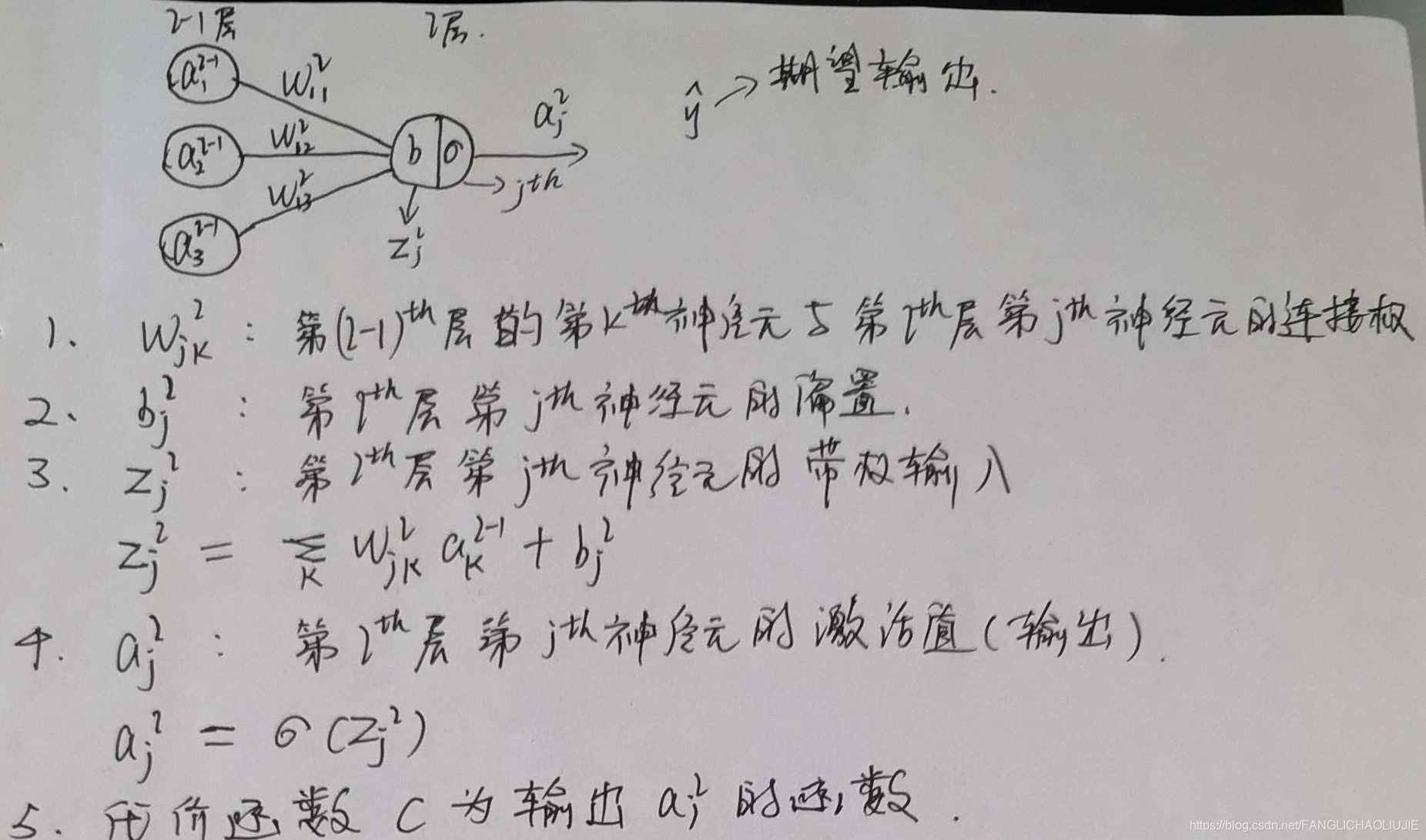

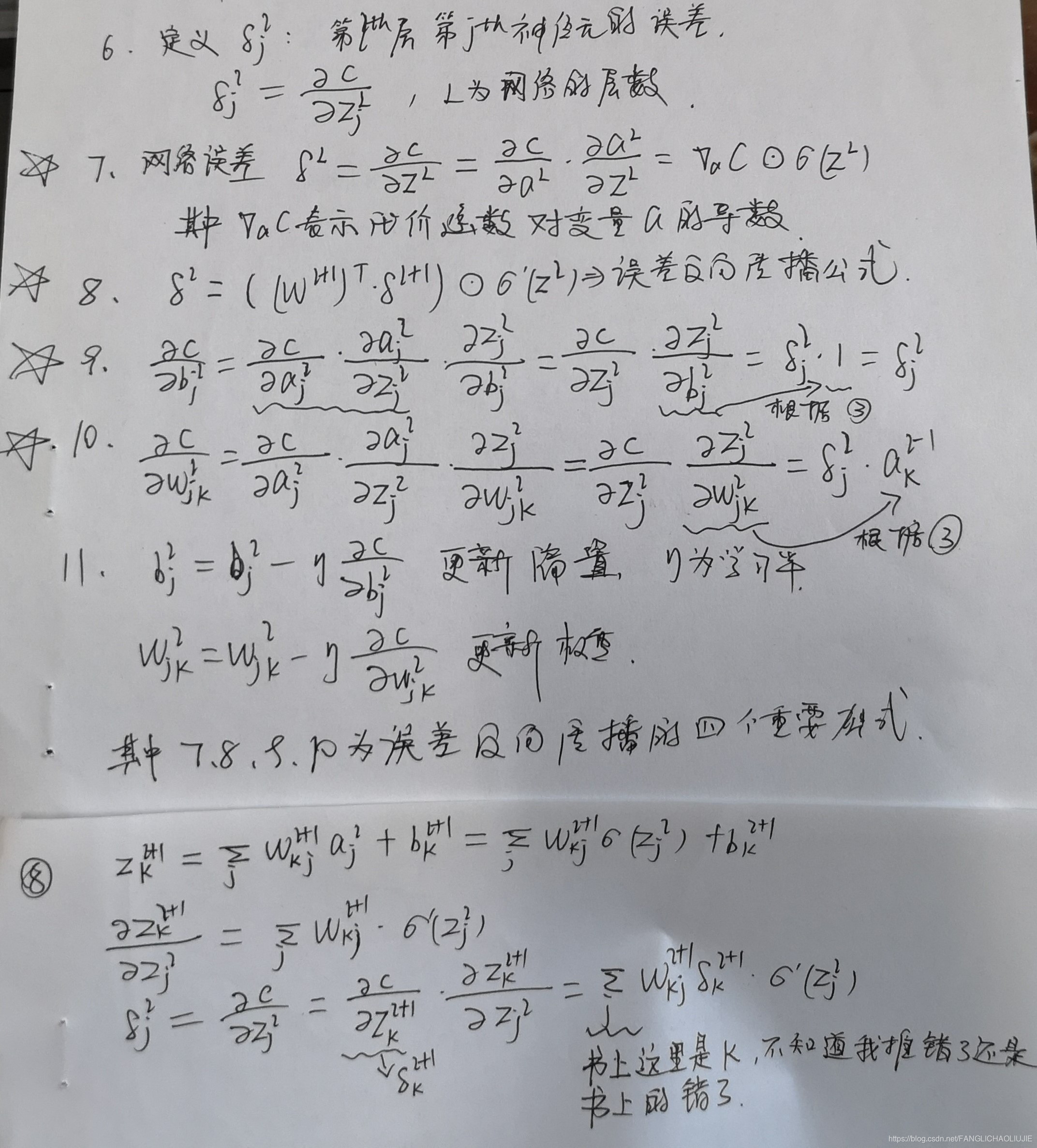

- 上述实现过程中最重要的便是误差反向传播和梯度下降,其中重要的定义和误差反向传播的四大重要公式推导过程如下图所示:

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?