# First, import the required libraries

import requests

from bs4 import BeautifulSoup

import time

for i in range(2,200): #爬取第2页到第200页的壁纸

url = f"http://www.netbian.com/index_{i}.htm"

headers = {

"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:99.0) Gecko/20100101 Firefox/99.0"

}

resp = requests.get(url,headers=headers)

print(url)

resp.encoding = "GBK" #处理乱码

href1 = str("")

href = str("")

#将源码转发给bs4

main_page = BeautifulSoup(resp.text,"html.parser")

alist = main_page.find("div",class_="list").find_all("a") #把范围第一次缩小

print(alist)

for a in alist:

href = (a.get("href"))

if href != "http://pic.netbian.com/":

#print("http://www.netbian.com/"+href)

#拿子页面的源代码

urlmix = requests.get("http://www.netbian.com/"+href)

urlmix.encoding = "GBK"

urlmix_text = urlmix.text

main_page1 = BeautifulSoup(urlmix_text,"html.parser")

urlmix_s = main_page1.find("div",class_="endpage")

img = urlmix_s.find("img")

src = (img.get("src"))

#下载图片

img_resp = requests.get(src)

img_resp.content #拿到的是源代码

img_name = src.split("/")[-1]

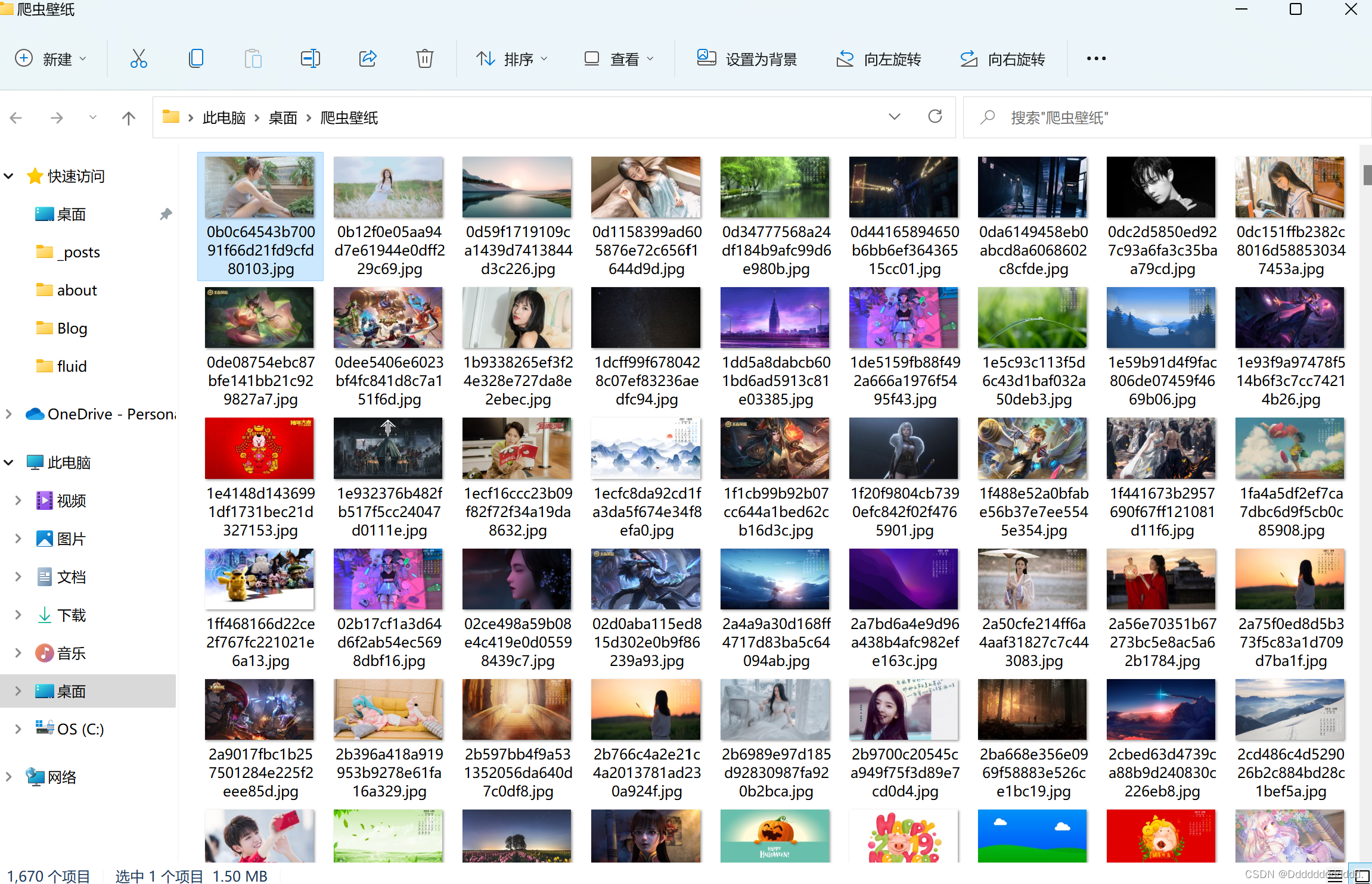

with open(img_name,mode="wb") as f:

f.write(img_resp.content) #图片内容写入文件

time.sleep(1) #这里设置一秒防止ip被过滤
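
The script assembles three strings by hand: the list-page URL, the detail-page URL, and the local file name. These can be pulled into small pure functions, which makes them easy to check without touching the network. What follows is a minimal sketch, not the original author's code: the function names `list_page_url`, `detail_page_url`, and `image_name` are hypothetical, and it assumes, as the script does, that list pages follow the `index_{i}.htm` pattern.

```python
import posixpath
from urllib.parse import urljoin, urlparse

BASE_URL = "http://www.netbian.com/"  # site root used by the script


def list_page_url(page: int) -> str:
    """Return the URL of a numbered list page (hypothetical helper)."""
    return f"{BASE_URL}index_{page}.htm"


def detail_page_url(href: str) -> str:
    """Resolve an href taken from a list page against the site root.

    urljoin copes with root-relative hrefs, plain relative hrefs,
    and hrefs that are already absolute URLs.
    """
    return urljoin(BASE_URL, href)


def image_name(src: str) -> str:
    """Return the last path segment of an image URL, ignoring any query string."""
    return posixpath.basename(urlparse(src).path)
```

Compared with `src.split("/")[-1]`, parsing the URL first keeps a trailing query string out of the file name.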

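
The script calls `requests.get` without a timeout and writes whatever comes back, so a hung connection can stall the crawl and an error page can end up saved as an image. One possible hardening is sketched below under stated assumptions: `fetch_bytes` is a hypothetical helper, the 10-second timeout and the retry count are arbitrary choices, and `session` is expected to be a `requests.Session` (any object with a compatible `get` method also works).

```python
import time


def fetch_bytes(session, url, headers=None, timeout=10, retries=2, delay=1.0):
    """Fetch url and return the body as bytes, or None if every attempt fails.

    session is assumed to be a requests.Session, or any object whose
    get(url, headers=..., timeout=...) returns a response that offers
    raise_for_status() and a .content attribute.
    """
    for attempt in range(retries + 1):
        try:
            resp = session.get(url, headers=headers, timeout=timeout)
            resp.raise_for_status()  # turn 4xx/5xx responses into exceptions
            return resp.content
        except Exception as exc:  # with requests, requests.RequestException is narrower
            print(f"attempt {attempt + 1} failed for {url}: {exc}")
            if attempt < retries:
                time.sleep(delay)  # short pause before retrying
    return None
```

With the real library this might be called as `fetch_bytes(requests.Session(), src, headers=headers)`, and the download step would skip writing the file whenever the result is `None`.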