Topic 1 Word Embeddings and Sentence Embeddings

cs224n-2019

- lecture 1: Introduction and Word Vectors

- lecture 2: Word Vectors 2 and Word Senses

slp - chapter 6: Vector Semantics

ruder.io/word-embeddings - chapter 14: The Representation of Sentence Meaning

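Language is the carrier for transmitting information and knowledge.

Effective communication presupposes that both parties share equivalent knowledge.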


How to represent the meaning of a word?

meaning: signifier(symbol) <=> signified(idea or thing)

common solution: WordNet, a thesaurus containing lists of synonym sets and hypernyms.

Drawbacks: it misses new meanings of words and can't compute accurate word similarity.

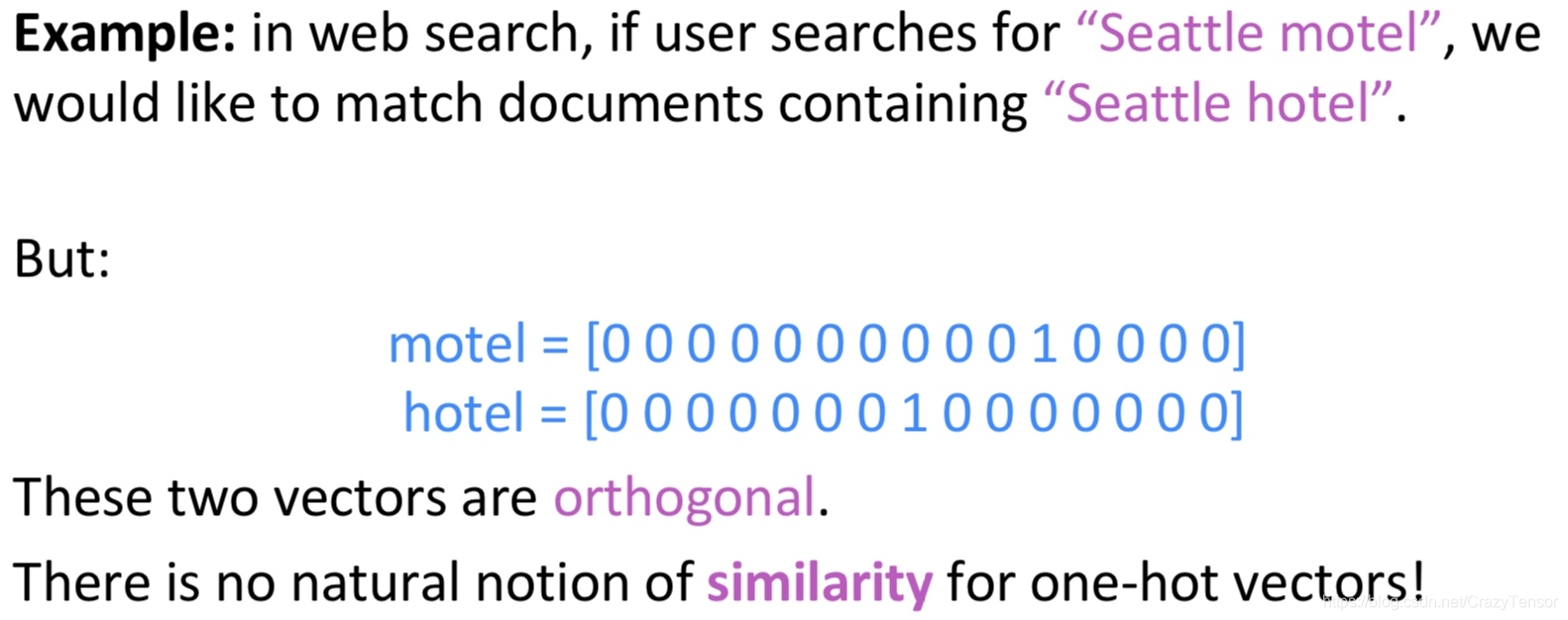

another solution: represent words as discrete symbols (one-hot vectors). This suffers from the curse of dimensionality, and there is no natural notion of similarity: any two distinct one-hot vectors are orthogonal.
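To make the one-hot problem concrete, here is a minimal sketch in plain Python. The three-word vocabulary and the helper names `one_hot` and `dot` are made up for illustration; they are not from the lecture.

```python
# Toy vocabulary (hypothetical; any word list behaves the same way).
vocab = ["motel", "hotel", "cat"]

def one_hot(word, vocab):
    """Return a vector with a single 1 at the word's index in vocab."""
    return [1 if w == word else 0 for w in vocab]

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

motel = one_hot("motel", vocab)
hotel = one_hot("hotel", vocab)

print(dot(motel, hotel))  # distinct words: the dot product is always 0
print(dot(motel, motel))  # a word only "matches" itself
print(len(motel))         # vector length grows with the vocabulary size
```

However related "motel" and "hotel" are in meaning, their one-hot vectors share no nonzero position, so the representation itself carries no similarity signal.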

Representing words by their context

It should learn to encode similarity in the vectors themselves

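The encoding goal of word vectors is to encode word similarity: every optimization objective and every practical use revolves around similarity. By analogy, for any encoder you should be clear about what the encoding goal is!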

Distributional semantics: A word’s meaning is given by the words that frequently appear close-by.

You shall know a word by the company it keeps.
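As a rough illustration of "the company a word keeps", the sketch below collects, for each word, the words that appear within a fixed window around it. The function name `context_counts`, the window size of 2, and the toy sentence are assumptions chosen for the example.

```python
from collections import Counter, defaultdict

def context_counts(tokens, window=2):
    """For each word, count the words appearing within `window` positions of it."""
    counts = defaultdict(Counter)
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # skip the center word itself
                counts[center][tokens[j]] += 1
    return counts

# Toy sentence, tokenized by whitespace (an assumption; real pipelines tokenize more carefully).
tokens = "government debt problems turning into banking crises as happened".split()
counts = context_counts(tokens, window=2)

# The context of "banking" is the two words on each side of it.
print(counts["banking"])
```

Over a large corpus, words that occur in similar contexts end up with similar count profiles, which is the signal that word vectors are meant to capture.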

Word vectors/word embeddings: a dense vector for each word, chosen so that it is similar to vectors of words that appear in similar contexts.
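A small sketch of how similarity is read off dense vectors, using cosine similarity. The three-dimensional vectors below are invented by hand for illustration, not learned embeddings; real word vectors typically have hundreds of dimensions.

```python
import math

def cosine(a, b):
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-picked toy vectors (hypothetical values, not trained).
vectors = {
    "hotel": [0.9, 0.8, 0.1],
    "motel": [0.8, 0.9, 0.2],
    "cat":   [0.1, 0.2, 0.9],
}

sim_hotel_motel = cosine(vectors["hotel"], vectors["motel"])
sim_hotel_cat = cosine(vectors["hotel"], vectors["cat"])

# With these toy values, "hotel" is closer to "motel" than to "cat".
print(sim_hotel_motel > sim_hotel_cat)
```

Unlike one-hot vectors, dense vectors can point in nearly the same direction, so similarity becomes a property of the vectors themselves.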

Word2vec: Overview

Word2vec (Mikolov et al. 2013) is a framework for learning word vectors, main idea

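This article explores how to represent the meaning of a word, from context-based representations to an introduction to the Word2vec framework, including its objective and prediction function. Word vectors represent a word's meaning by capturing relationships between words that appear in similar contexts, which addresses the limitations of traditional methods.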
