Scaling (Scale Up/Down) means increasing or decreasing the number of Pod replicas while the application stays online.

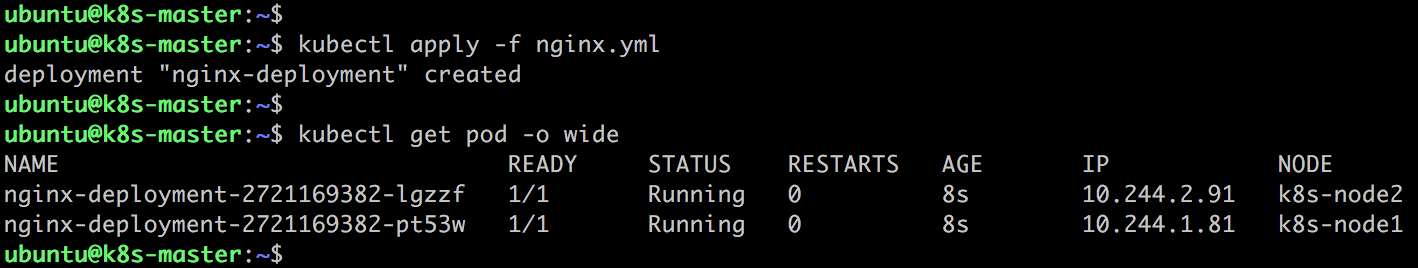

The Deployment nginx-deployment starts with two replicas.

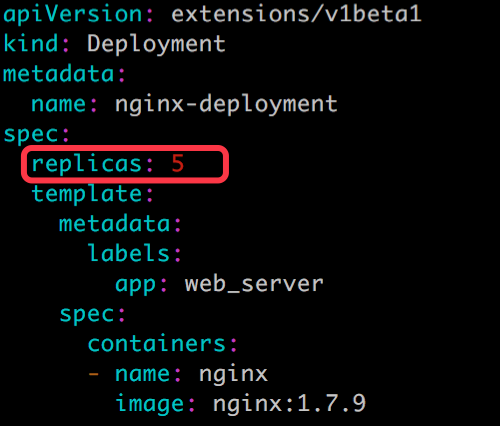

k8s-node1 and k8s-node2 each run one replica. Now edit nginx.yml and change the replica count to 5.

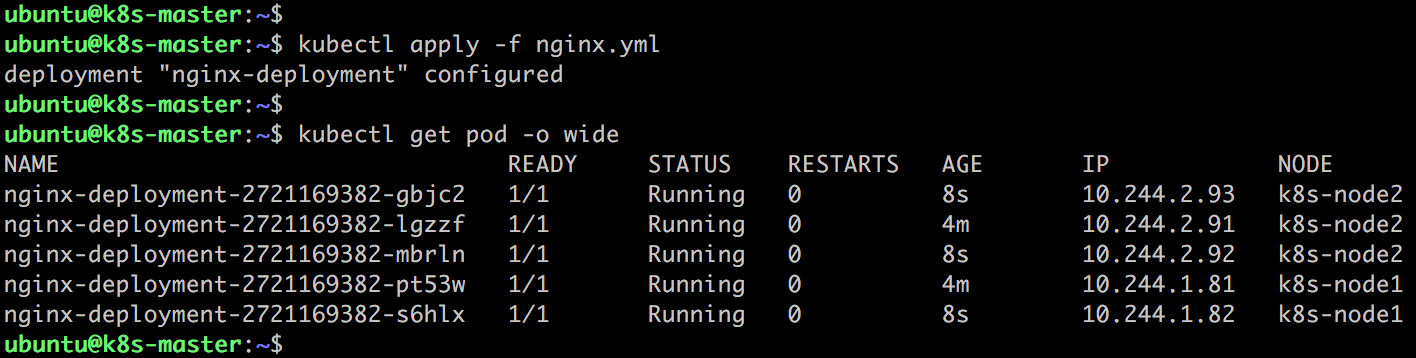
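Because the manifest appears only as a screenshot, here is a minimal sketch of what the edited nginx.yml might contain. Only the Deployment name and `replicas: 5` come from the text. The `apps/v1` API version, the `app: nginx` label, and the `nginx` image are assumptions for illustration. Running the snippet overwrites any nginx.yml in the current directory.

```shell
# Write a hypothetical nginx.yml with the replica count raised to 5.
# Assumptions (not from the article): apiVersion apps/v1, label app=nginx, image "nginx".
cat > nginx.yml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 5          # was 2; the only field the scaling step changes
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
EOF
```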

Run kubectl apply again:

Three new replicas are created and scheduled onto k8s-node1 and k8s-node2.
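The apply-and-check step can be sketched as a small shell helper. The function name `apply_and_list` is hypothetical. It relies only on `kubectl apply -f` and `kubectl get pod -o wide`, and it assumes kubectl is already configured for the cluster.

```shell
# Hypothetical helper: apply a manifest, then list Pods together with their nodes.
apply_and_list() {
  kubectl apply -f "$1" || return 1
  kubectl get pod -o wide   # the NODE column shows where each replica landed
}
```

Calling `apply_and_list nginx.yml` after editing the file should list the replicas and the node each one runs on.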

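For security reasons, the default configuration keeps Kubernetes from scheduling Pods onto the Master node (the node carries a NoSchedule taint). To use k8s-master as a regular Node as well, run the following command: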

kubectl taint node k8s-master node-role.kubernetes.io/master-

To restore the Master-only state, run the following command:

kubectl taint node k8s-master node-role.kubernetes.io/master="":NoSchedule

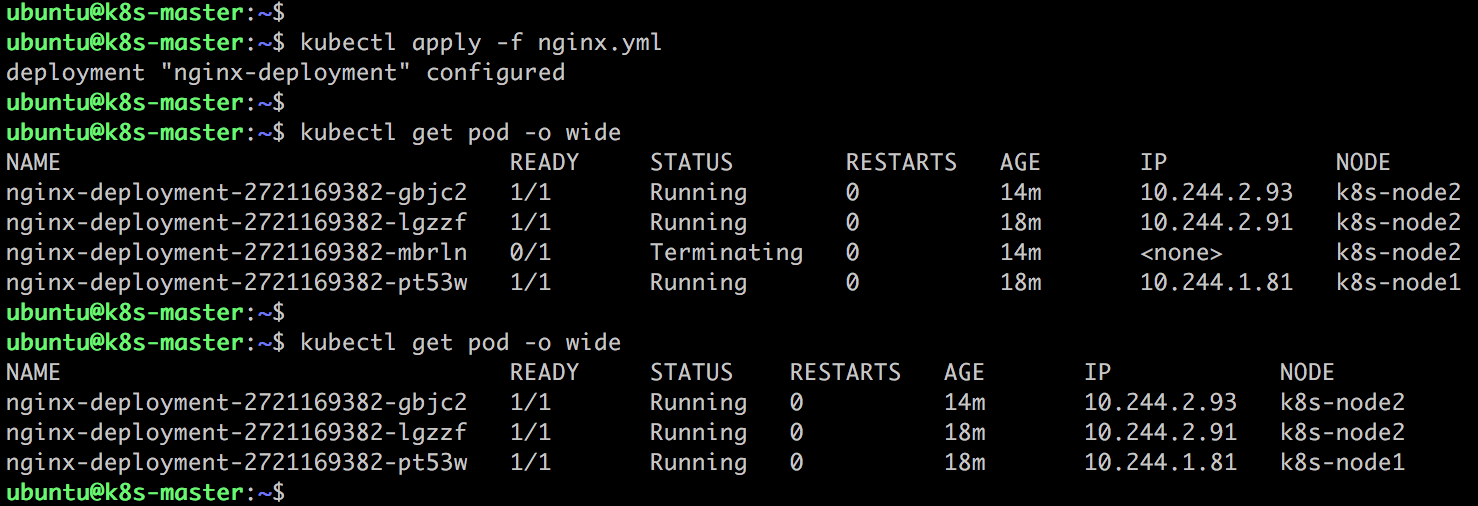
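To check whether the taint is currently present, you can inspect the node description. The sketch below uses a hypothetical helper name, `show_taints`, and filters the `Taints:` line out of the `kubectl describe node` output. On more recent Kubernetes releases the taint key may be `node-role.kubernetes.io/control-plane` rather than `node-role.kubernetes.io/master`, so check this output before running the commands above.

```shell
# Hypothetical helper: print the Taints line for the node named in $1.
show_taints() {
  kubectl describe node "$1" | grep -i '^Taints'
}
```

For example, `show_taints k8s-master` shows the node's current taints, or `<none>` once the taint has been removed.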

Next, edit the configuration file to reduce the replica count to 3, then run kubectl apply again:

Two replicas are deleted, leaving 3 replicas running.
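This walkthrough changes the replica count by editing the file and re-applying it. As an aside, kubectl also provides a `scale` subcommand that sets the replica count directly. The helper below is a sketch under that assumption, and the name `scale_deployment` is hypothetical.

```shell
# Hypothetical helper: set the replica count imperatively, then list the Pods.
# $1 = Deployment name, $2 = desired number of replicas.
scale_deployment() {
  kubectl scale deployment "$1" --replicas="$2" || return 1
  kubectl get pod -o wide
}
```

For example, `scale_deployment nginx-deployment 3`. A later `kubectl apply` of nginx.yml would set the count back to whatever the file specifies, so the file and the live state can drift apart if the two approaches are mixed.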

The next section covers Deployment Failover.

This article shows how to scale Pods in Kubernetes by modifying a Deployment, both increasing and decreasing the replica count, along with the kubectl commands involved.