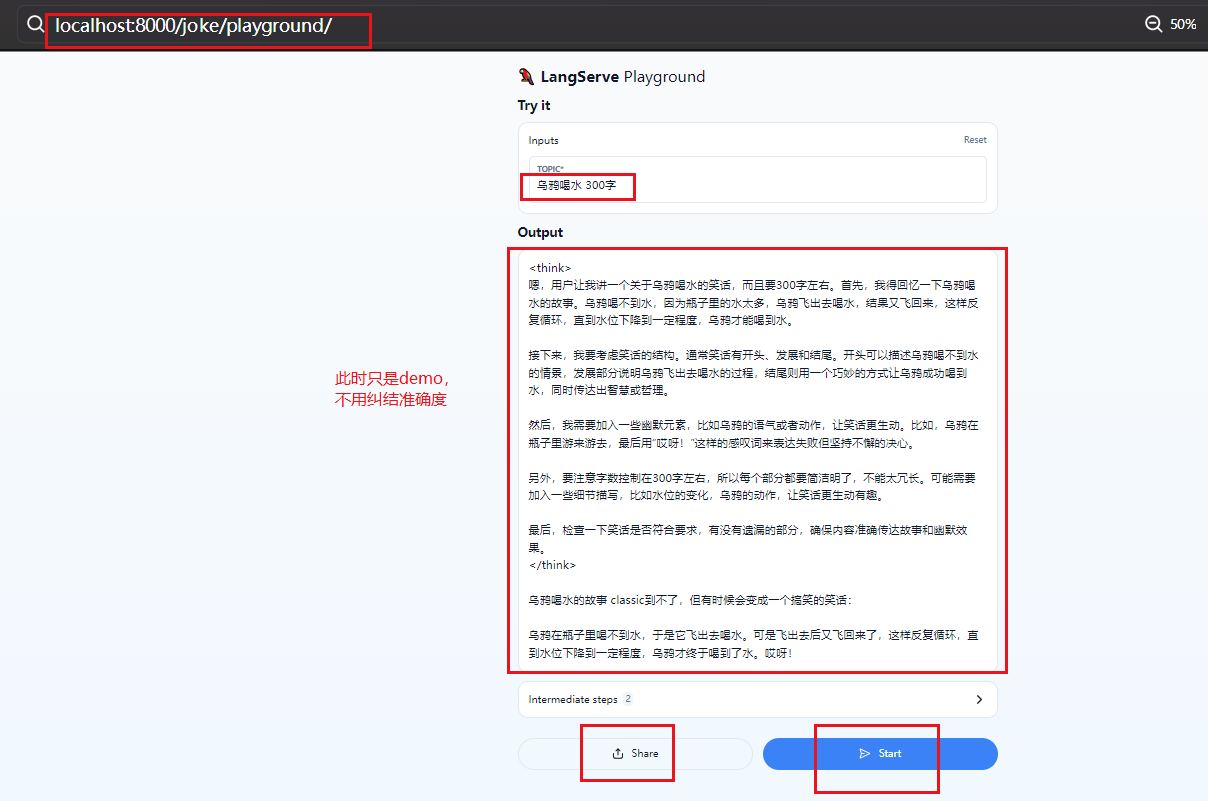

This example uses the deepseek-r1:1.5b model, deployed locally with Ollama.

    from fastapi import FastAPI
    from fastapi.responses import Response
    from langserve import add_routes
    from langchain.prompts import ChatPromptTemplate
    from langchain_community.chat_models import ChatOllama
    from pydantic import BaseModel

    app = FastAPI(
        title="My LangServer",
        version="0.1.0",
        description="Expose LangChain chains as a REST API",
        debug=True,
    )


    # Input schema for the /joke route
    class JokeInput(BaseModel):
        topic: str


    # Chat model served by the local Ollama instance
    chat_model = ChatOllama(
        model="deepseek-r1:1.5b",
        base_url="http://localhost:11434",
        temperature=0,
    )

    prompt = ChatPromptTemplate.from_template("Tell me a joke about {topic}")

    # Pipe the prompt into the model to form the chain
    joke_chain = prompt | chat_model

    # Register the chain's routes under /joke
    add_routes(
        app,
        joke_chain,
        path="/joke",
        input_type=JokeInput,
    )


    # A plain FastAPI route alongside the LangServe routes
    @app.get("/hello")
    async def hello():
        return Response("hello, world")


    if __name__ == "__main__":
        import uvicorn

        uvicorn.run(app, host="0.0.0.0", port=8000)
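To check the server from another process, a small client can call the `/joke/invoke` route that `add_routes` registers under the `/joke` path. The following is a minimal sketch using only the standard library. It assumes the server is reachable at `http://localhost:8000` and that the response's `output` field is a serialized chat message with a `content` key, which is what a chain ending in a chat model normally produces. The helper names `build_invoke_request`, `extract_text`, and `tell_joke` are hypothetical and not part of LangServe.

```python
import json
import urllib.request

# Assumption: the server from this post is running locally on port 8000.
BASE_URL = "http://localhost:8000"


def build_invoke_request(topic: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request for the /joke/invoke route."""
    # LangServe expects the chain input wrapped in an "input" key.
    body = json.dumps({"input": {"topic": topic}}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/joke/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Pull the reply text out of an invoke response.

    Assumes "output" is a serialized message with a "content" field;
    falls back to str() for any other shape.
    """
    output = response_json.get("output")
    if isinstance(output, dict) and "content" in output:
        return output["content"]
    return str(output)


def tell_joke(topic: str) -> str:
    """Send the request and return the joke text (the server must be running)."""
    with urllib.request.urlopen(build_invoke_request(topic), timeout=120) as resp:
        return extract_text(json.load(resp))
```

With the server and Ollama both running, `print(tell_joke("cats"))` should print the model's reply. The request construction is kept separate from the network call so that the payload shape can be inspected without a live server.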
