
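Disclaimer: the SEAM module code was not written entirely independently. Parts of it draw on the improvement series by 魔傀面具, AI小怪兽 and other columns, including "Accuracy booster: improving YOLOv8 performance on small and occluded objects (SEAM, MultiSEAM)": https://cloud.tencent.com/developer/article/2365667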

Adding the code for the new modules

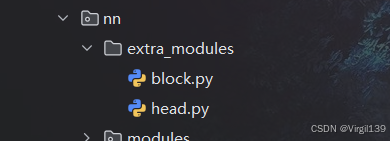

Under ultralytics/nn/, create a folder named extra_modules, and inside it create two files: block.py and head.py.

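head.py: defines the two new detection-head classes Detect_SEAM and Detect_MultiSEAM, plus the helper functions dist2bbox() and make_anchors().

block.py: defines the module classes Residual, SEAM and MultiSEAM, and the function DcovN().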
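The head.py helpers are not shown in this excerpt. Assuming they mirror the same-named functions in Ultralytics' `tal.py` utilities (an assumption, not the author's head.py), they do roughly the following: make_anchors() puts one anchor point at the centre of every grid cell of each feature map, and dist2bbox() turns predicted (left, top, right, bottom) distances from an anchor into boxes.

```python
import torch

# Sketch assuming the helpers mirror Ultralytics' tal.py versions; not the author's head.py.


def make_anchors(feats, strides, grid_cell_offset=0.5):
    """Return (anchor_points, stride_tensor) for a list of NCHW feature maps.

    Each anchor sits at the centre of a grid cell, in feature-map units.
    """
    anchor_points, stride_tensor = [], []
    dtype, device = feats[0].dtype, feats[0].device
    for feat, stride in zip(feats, strides):
        _, _, h, w = feat.shape
        sx = torch.arange(w, dtype=dtype, device=device) + grid_cell_offset  # x centres
        sy = torch.arange(h, dtype=dtype, device=device) + grid_cell_offset  # y centres
        sy, sx = torch.meshgrid(sy, sx, indexing="ij")
        anchor_points.append(torch.stack((sx, sy), -1).view(-1, 2))
        stride_tensor.append(torch.full((h * w, 1), stride, dtype=dtype, device=device))
    return torch.cat(anchor_points), torch.cat(stride_tensor)


def dist2bbox(distance, anchor_points, xywh=True, dim=-1):
    """Convert (left, top, right, bottom) distances from anchor points into boxes."""
    lt, rb = distance.chunk(2, dim)
    x1y1 = anchor_points - lt
    x2y2 = anchor_points + rb
    if xywh:
        return torch.cat(((x1y1 + x2y2) / 2, x2y2 - x1y1), dim)  # centre x, centre y, w, h
    return torch.cat((x1y1, x2y2), dim)  # x1, y1, x2, y2


feats = [torch.zeros(1, 8, 2, 2), torch.zeros(1, 8, 1, 1)]  # a 2x2 and a 1x1 feature map
anchors, strides = make_anchors(feats, [8, 16])
print(anchors.tolist())  # [[0.5, 0.5], [1.5, 0.5], [0.5, 1.5], [1.5, 1.5], [0.5, 0.5]]
box = dist2bbox(torch.tensor([[1.0, 1.0, 2.0, 3.0]]), torch.tensor([[5.0, 5.0]]), xywh=False)
print(box.tolist())  # [[4.0, 4.0, 7.0, 8.0]]
```

In the detection head, the anchor points are typically multiplied by the stride tensor afterwards to move boxes from feature-map units into input-image pixels.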

ultralytics/nn/extra_modules/block.py

Defines the module classes Residual, SEAM and MultiSEAM.

Defines the function DcovN().
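SEAM's DCovN stack and the DcovN() function both pair a 3×3 depthwise convolution (groups=c2) with a 1×1 pointwise convolution instead of using one full 3×3 convolution. A quick parameter count, using an illustrative channel count of 64 (an assumption chosen only for the example), shows why this keeps the module light:

```python
import torch.nn as nn


def n_params(module):
    """Total number of learnable parameters (weights and biases) in a module."""
    return sum(p.numel() for p in module.parameters())


c = 64  # illustrative channel count, not taken from the article
standard = nn.Conv2d(c, c, kernel_size=3, padding=1)             # full 3x3 conv
depthwise = nn.Conv2d(c, c, kernel_size=3, padding=1, groups=c)  # one 3x3 filter per channel
pointwise = nn.Conv2d(c, c, kernel_size=1)                       # 1x1 channel mixing

print(n_params(standard))                         # 36928
print(n_params(depthwise) + n_params(pointwise))  # 4800
```

Ignoring biases, the depthwise-separable pair needs about 1/9 + 1/c of the weights of a full 3×3 convolution, which is roughly 13% at c = 64.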

```python
import torch
import torch.nn as nn

__all__ = ['SEAM', 'MultiSEAM']


def autopad(k, p=None, d=1):  # kernel, padding, dilation
    """Pad to 'same' shape outputs."""
    if d > 1:
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p


######################################## SEAM start ########################################

class Residual(nn.Module):
    """Wraps a module fn and returns fn(x) + x."""

    def __init__(self, fn):
        super(Residual, self).__init__()
        self.fn = fn

    def forward(self, x):
        return self.fn(x) + x


class SEAM(nn.Module):
    """Channel-attention block; the output has the same shape as the input."""

    def __init__(self, c1, c2, n, reduction=16):
        super(SEAM, self).__init__()
        if c1 != c2:
            c2 = c1  # SEAM never changes the channel count
        # n depthwise-separable blocks: 3x3 depthwise conv (with residual) + 1x1 pointwise conv
        self.DCovN = nn.Sequential(
            *[nn.Sequential(
                Residual(nn.Sequential(
                    nn.Conv2d(in_channels=c2, out_channels=c2, kernel_size=3, stride=1, padding=1, groups=c2),
                    nn.GELU(),
                    nn.BatchNorm2d(c2)
                )),
                nn.Conv2d(in_channels=c2, out_channels=c2, kernel_size=1, stride=1, padding=0, groups=1),
                nn.GELU(),
                nn.BatchNorm2d(c2)
            ) for i in range(n)]
        )
        self.avg_pool = torch.nn.AdaptiveAvgPool2d(1)
        # Squeeze-and-excitation style bottleneck that produces one weight per channel
        self.fc = nn.Sequential(
            nn.Linear(c2, c2 // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(c2 // reduction, c2, bias=False),
            nn.Sigmoid()
        )
        self._initialize_weights()
        # self.initialize_layer(self.avg_pool)
        self.initialize_layer(self.fc)  # no-op: self.fc is an nn.Sequential, not a Conv2d/Linear

    def forward(self, x):
        b, c, _, _ = x.size()
        y = self.DCovN(x)
        y = self.avg_pool(y).view(b, c)
        y = self.fc(y).view(b, c, 1, 1)
        y = torch.exp(y)  # sigmoid output in (0, 1) -> channel scale in (1, e)
        return x * y.expand_as(x)

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.xavier_uniform_(m.weight, gain=1)
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def initialize_layer(self, layer):
        if isinstance(layer, (nn.Conv2d, nn.Linear)):
            torch.nn.init.normal_(layer.weight, mean=0., std=0.001)
            if layer.bias is not None:
                torch.nn.init.constant_(layer.bias, 0)


def DcovN(c1, c2, depth, kernel_size=3, patch_size=3):
    # Non-overlapping patch_size x patch_size conv, then `depth` depthwise-separable blocks
    dcovn = nn.Sequential(
        nn.Conv2d(c1, c2, kernel_size=patch_size, stride=patch_size),
        nn.SiLU(),
        nn.BatchNorm2d(c2),
        *[nn.Sequential(
            Residual(nn.Sequential(
                # autopad() keeps the spatial size for any odd kernel_size, so the residual add stays valid
                nn.Conv2d(in_channels=c2, out_channels=c2, kernel_size=kernel_size, stride=1,
                          padding=autopad(kernel_size), groups=c2),
                nn.SiLU(),
                nn.BatchNorm2d(c2)
            )),
            nn.Conv2d(in_channels=c2, out_channels=c2, kernel_size=1, stride=1, padding=0, groups=1),
            nn.SiLU(),
            nn.BatchNorm2d(c2)
        ) for i in range(depth)]
    )
    return dcovn


class MultiSEAM(nn.Module):
    def __init__(self, c1, c2, depth, kernel_size=3, patch_size=[3, 5, 7], reduction=16):
        super(MultiSEAM, self).__init__()
        # (the remainder of this class is not included in this excerpt)
```
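The excerpt stops inside MultiSEAM.__init__. Below is a hypothetical completion, not the author's code: it assumes MultiSEAM runs one DcovN branch per patch size (3, 5 and 7), averages their globally pooled descriptors together with the pooled input, and then applies the same FC, Sigmoid and exp reweighting as SEAM. Residual and DcovN are repeated so the sketch runs on its own.

```python
import math

import torch
import torch.nn as nn


class Residual(nn.Module):
    """Returns fn(x) + x (same as in block.py)."""

    def __init__(self, fn):
        super().__init__()
        self.fn = fn

    def forward(self, x):
        return self.fn(x) + x


def DcovN(c1, c2, depth, kernel_size=3, patch_size=3):
    """Patch conv followed by `depth` depthwise-separable blocks (as in block.py)."""
    return nn.Sequential(
        nn.Conv2d(c1, c2, kernel_size=patch_size, stride=patch_size),
        nn.SiLU(),
        nn.BatchNorm2d(c2),
        *[nn.Sequential(
            Residual(nn.Sequential(
                nn.Conv2d(c2, c2, kernel_size, stride=1, padding=kernel_size // 2, groups=c2),
                nn.SiLU(),
                nn.BatchNorm2d(c2),
            )),
            nn.Conv2d(c2, c2, kernel_size=1, stride=1, padding=0),
            nn.SiLU(),
            nn.BatchNorm2d(c2),
        ) for _ in range(depth)]
    )


class MultiSEAM(nn.Module):
    # HYPOTHETICAL completion: the branch fusion (averaging pooled branch outputs
    # with the pooled input) is an assumption, not the author's code.
    def __init__(self, c1, c2, depth, kernel_size=3, patch_size=(3, 5, 7), reduction=16):
        super().__init__()
        c2 = c1  # like SEAM, keep the channel count unchanged
        self.branches = nn.ModuleList(
            DcovN(c1, c2, depth, kernel_size=kernel_size, patch_size=p) for p in patch_size
        )
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Sequential(
            nn.Linear(c2, c2 // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(c2 // reduction, c2, bias=False),
            nn.Sigmoid(),
        )

    def forward(self, x):
        b, c, _, _ = x.size()
        # Global descriptor of each multi-scale branch, plus one of the raw input
        pooled = [self.avg_pool(branch(x)).view(b, c) for branch in self.branches]
        pooled.append(self.avg_pool(x).view(b, c))
        y = torch.stack(pooled, dim=0).mean(dim=0)
        # Sigmoid output lies in (0, 1), so exp() gives channel scales in (1, e)
        y = torch.exp(self.fc(y)).view(b, c, 1, 1)
        return x * y.expand_as(x)


torch.manual_seed(0)
m = MultiSEAM(32, 32, depth=1).eval()
x = torch.rand(2, 32, 28, 28) + 0.5  # strictly positive input so out / x is well defined
with torch.no_grad():
    out = m(x)
print(out.shape)  # torch.Size([2, 32, 28, 28])
scale = out / x
print(scale.min().item() > 1.0, scale.max().item() < math.e)  # True True
```

Because the exp is applied to a sigmoid output, this style of attention only ever amplifies channels (by a factor between 1 and e) and never suppresses them, which is the same behaviour as the SEAM.forward() shown above.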
