1. With Flume already started in CDH, I ran the following command on Linux:

```shell
flume-ng agent --conf conf --conf-file conf/flume-conf.properties --name a1 -Dflume.root.logger=INFO,console
```

Config file 1: flume-conf.properties

```properties
a1.sources = r1
a1.sinks = s1
a1.channels = c1

#sources: message production
a1.sources.r1.type = spooldir
a1.sources.r1.channels = c1
a1.sources.r1.spoolDir = /data/flume/flume_dir //directory where the collected logs are placed
a1.sources.r1.fileHeader = false
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = timestamp

#channels: message transfer
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

#sinks: message consumption
a1.sinks.s1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.s1.brokerList = node01:9092 //connect to Kafka
a1.sinks.s1.topics = from_flume//Kafka topic that the logs collected by Flume are delivered to
a1.sinks.s1.requiredAcks = 1
a1.sinks.s1.batchSize = 20
a1.sinks.s1.channel = c1 //note: the key here is channel, not channels
```
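Sources and sinks use different keys to name their channels. A source lists its channels under the plural key `channels`. Each sink names exactly one channel under the singular key `channel`, which is what the note on the sink's last line is about.

The sketch below is a hypothetical checker; `parse_properties` and `check_wiring` are illustrative names, not part of Flume. It reports any source or sink whose channel is not declared in `<agent>.channels`. That appears to be the kind of mismatch Flume's validation reports as `Channel ... not in active set`.

The parser is deliberately simplified. It handles only `key = value` lines and full-line comments. Like `java.util.Properties`, it keeps trailing whitespace in values.

```python
# Hypothetical helper, not part of Flume: checks source/sink -> channel wiring.
# Simplified .properties parsing: "key = value" lines and full-line "#"/"!"
# comments only (no ":" separators, line continuations or escapes).


def parse_properties(text):
    props = {}
    for raw in text.splitlines():
        line = raw.lstrip()
        if not line or line[0] in "#!":
            continue  # blank line or full-line comment
        key, sep, value = line.partition("=")
        if not sep:
            continue  # lines without "=" are ignored by this sketch
        # Like java.util.Properties: leading whitespace of the value is
        # dropped, trailing whitespace is kept as part of the value.
        props[key.strip()] = value.lstrip()
    return props


def check_wiring(props, agent):
    problems = []
    declared = set(props.get(f"{agent}.channels", "").split())
    for src in props.get(f"{agent}.sources", "").split():
        # Sources use the plural key "channels" (space-separated list).
        for ch in props.get(f"{agent}.sources.{src}.channels", "").split():
            if ch not in declared:
                problems.append(f"source {src}: channel {ch!r} not declared")
    for sink in props.get(f"{agent}.sinks", "").split():
        # Sinks use the singular key "channel" and take exactly one channel.
        ch = props.get(f"{agent}.sinks.{sink}.channel")
        if ch is None:
            problems.append(f"sink {sink}: no 'channel' key")
        elif ch not in declared:
            problems.append(f"sink {sink}: channel {ch!r} not declared")
    return problems


good = """
a1.sources = r1
a1.sinks = s1
a1.channels = c1
a1.sources.r1.channels = c1
a1.sinks.s1.channel = c1
"""
print(check_wiring(parse_properties(good), "a1"))  # []
print(check_wiring(parse_properties(good.replace("s1.channel =", "s1.channels =")), "a1"))
# ["sink s1: no 'channel' key"]
```

Because the sink value is compared verbatim, a value such as `c1 //note` or `c1 ` (with a trailing space) is reported as an undeclared channel.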

Error output (the two `Error: Could not find or load main class ... GetJavaProperty` lines appear on every startup and do not affect anything):

```text
[root@node01 flume-ng]# flume-ng agent --conf conf --conf-file conf/flume-conf.properties --name a1 -Dflume.root.logger=INFO,console
Info: Including Hadoop libraries found via (/bin/hadoop) for HDFS access
Info: Including HBASE libraries found via (/opt/cloudera/parcels/CDH-6.0.1-1.cdh6.0.1.p0.590678/lib/hbase/bin/hbase) for HBASE access
Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release
Error: Could not find or load main class org.apache.flume.tools.GetJavaProperty
Error: Could not find or load main class org.apache.hadoop.hbase.util.GetJavaProperty
Info: Including Hive libraries found via () for Hive access
org.apache.flume.conf.ConfigurationException: Channel c1 not in active set.
at org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.validateSinks(FlumeConfiguration.java:685)
at org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.isValid(FlumeConfiguration.java:347)
at org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.access$000(FlumeConfiguration.java:212)
at org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:126)
at org.apache.flume.conf.FlumeConfiguration.<init>(FlumeConfiguration.java:108)
at org.apache.flume.node.PropertiesFileConfigurationProvider.getFlumeConfiguration(PropertiesFileConfigurationProvider.java:194)
at org.apache.flume.node.AbstractConfigurationProvider.getConfiguration(AbstractConfigurationProvider.java:93)
at org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:145)
at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)
at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)
at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
at java.lang.Thread.run(Thread.java:748)
2019-03-13 18:45:11,822 (conf-file-poller-0) [INFO - org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:140)] Post-validation flume configuration contains configuration for agents: [a1]
2019-03-13 18:45:11,822 (conf-file-poller-0) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:147)] Creating channels
2019-03-13 18:45:11,835 (conf-file-poller-0) [INFO - org.apache.flume.channel.DefaultChannelFactory.create(DefaultChannelFactory.java:42)] Creating instance of channel c1 type memory
2019-03-13 18:45:11,838 (conf-file-poller-0) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:201)] Created channel c1
2019-03-13 18:45:11,839 (conf-file-poller-0) [INFO - org.apache.flume.source.DefaultSourceFactory.create(DefaultSourceFactory.java:41)] Creating instance of source r1, type spooldir
2019-03-13 18:45:11,844 (conf-file-poller-0) [ERROR - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:147)] Failed to load configuration data. Exception follows.
org.apache.flume.FlumeException: Unable to load source type: spooldir , class: spooldir
at org.apache.flume.source.DefaultSourceFactory.getClass(DefaultSourceFactory.java:68)
at org.apache.flume.source.DefaultSourceFactory.create(DefaultSourceFactory.java:42)
at org.apache.flume.node.AbstractConfigurationProvider.loadSources(AbstractConfigurationProvider.java:323)
at org.apache.flume.node.AbstractConfigurationProvider.getConfiguration(AbstractConfigurationProvider.java:101)
at org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:145)
at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)
at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)
at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.ClassNotFoundException: spooldir
at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)
at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
at java.lang.Class.forName0(Native Method)
at java.lang.Class.forName(Class.java:264)
at org.apache.flume.source.DefaultSourceFactory.getClass(DefaultSourceFactory.java:66)
... 11 more
```
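`java.util.Properties` skips blank lines, so the blank lines alone may not fully explain these errors. One hedged reading of the log is that some values carried hidden extra characters.

The evidence is in the message `Unable to load source type: spooldir , class: spooldir`, which has a space before the comma. That suggests the configured value was `spooldir ` with trailing whitespace, perhaps left over from copying the file off a web page. A value like that presumably no longer matches the built-in `spooldir` alias. Flume then tries to load it as a class name, which the `Caused by: java.lang.ClassNotFoundException` line confirms.

In the same way, `.properties` files have no inline comments. Any `//` annotation on a line such as `a1.sinks.s1.channel = c1 //...` would become part of the value if it were really in the file.

Below is a hypothetical linter (not a Flume tool) that flags both cases.

```python
# Hypothetical linter, not part of Flume: flags .properties lines whose value
# would silently carry extra characters. java.util.Properties keeps trailing
# whitespace and has no inline comments, so both end up inside the value.


def lint_properties(text):
    warnings = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.lstrip()
        if not line or line[0] in "#!":
            continue  # blank lines and full-line comments are harmless
        if raw != raw.rstrip():
            # str.rstrip() also removes non-breaking and full-width spaces,
            # which are common when text is copied from a web page.
            warnings.append((lineno, "trailing whitespace"))
        if "//" in line:
            # Heuristic only: legitimate values such as hdfs:// URLs also match.
            warnings.append((lineno, "possible inline // comment"))
    return warnings


sample = "a1.sources.r1.type = spooldir \n\na1.sinks.s1.channel = c1 //note\n# full-line comment\n"
for lineno, problem in lint_properties(sample):
    print(lineno, problem)
# 1 trailing whitespace
# 3 possible inline // comment
```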

After removing the blank lines, a different error appeared:

```text
2019-03-18 16:08:38,428 (kafka-producer-network-thread | producer-1) [WARN - org.apache.kafka.common.utils.LogContext$KafkaLogger.warn(LogContext.java:246)] [Producer clientId=producer-1] Error while fetching metadata with correlation id 1 : {default-flume-topic=LEADER_NOT_AVAILABLE}
2019-03-18 16:08:38,543 (kafka-producer-network-thread | producer-1) [WARN - org.apache.kafka.common.utils.LogContext$KafkaLogger.warn(LogContext.java:246)] [Producer clientId=producer-1] Error while fetching metadata with correlation id 3 : {default-flume-topic=LEADER_NOT_AVAILABLE}
```
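The topic in these warnings is `default-flume-topic`, not `from_flume`, so the sink never picked up the configured topic. `LEADER_NOT_AVAILABLE` itself is typically a transient warning while Kafka auto-creates a topic that has just been requested. The real clue is the topic name.

This assumes Flume 1.7 or later, where the Kafka sink works as follows:
- It reads `kafka.topic`.
- It still accepts the older `topic` key as a deprecated alias.
- It falls back to `default-flume-topic` when neither key is set.
- It does not read a key named `topics` at all.

The function below is a simplified, illustrative model of that lookup. It ignores the per-event `topic` header that can also override the destination.

```python
# Simplified, illustrative model of how Flume's Kafka sink (assumed 1.7+)
# chooses its destination topic. Per-event "topic" header overrides are
# deliberately left out.

DEFAULT_TOPIC = "default-flume-topic"


def effective_topic(sink_props):
    """sink_props: the sink's keys with the '<agent>.sinks.<name>.' prefix removed."""
    if "kafka.topic" in sink_props:      # current property name
        return sink_props["kafka.topic"]
    if "topic" in sink_props:            # deprecated alias, still accepted
        return sink_props["topic"]
    return DEFAULT_TOPIC                 # "topics" and other unknown keys are ignored


print(effective_topic({"topics": "from_flume"}))  # default-flume-topic
print(effective_topic({"topic": "from_flume"}))   # from_flume
```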

```shell
flume-ng agent --conf conf --conf-file conf/flume-conf01.properties --name tier1 -Dflume.root.logger=INFO,console
```

Config file 2, flume-conf01.properties, runs without problems with this command (even after changing tier1 to another name). The source, channel and sink all start normally, but nothing can be consumed from Kafka.

```properties
tier1.sources=r1
tier1.sinks=k1
tier1.channels=c1

tier1.sources.r1.type=spooldir
tier1.sources.r1.spoolDir=/data/flume/flume_dir/
tier1.sources.r1.channels=c1
tier1.sources.r1.fileHeader=false

tier1.sinks.k1.type=org.apache.flume.sink.kafka.KafkaSink
tier1.sinks.k1.brokerList=node01:9092
tier1.sinks.k1.topics=from_flume
tier1.sinks.k1.requiredAcks=1
tier1.sinks.k1.channel=c1
#tier1.sinks.k1.hdfs.fileType=DataStream
#tier1.sinks.k1.hdfs.writeFormat=TEXT
#tier1.sinks.k1.hdfs.rollInterval=60
#tier1.sinks.k1.channel=c1

tier1.channels.c1.type=memory
tier1.channels.c1.capacity=1000
tier1.channels.c1.transactionCapacity=100
#tier1.channels.c1.checkpointDir=./file_channel/checkpoint
#tier1.channels.c1.dataDirs=./file_channel/data
```

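Cause: `tier1.sinks.k1.topic` had been written as `tier1.sinks.k1.topics`. After fixing that, one more problem remained. `tier1` is the default agent name used by the Flume service in CDH, and running with `--name tier1` failed with `(No such file or directory)` because the monitored directory could not be found. After I changed `tier1` to another name, for example `a2`, the agent ran normally.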

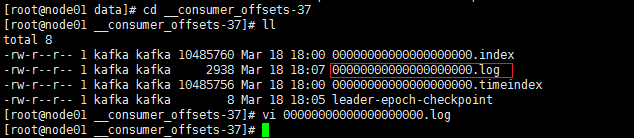
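Renaming the agent means two things: changing the prefix of every key in the file, and passing the same name to `--name`. If the two do not match, the agent presumably starts without any of the configured components.

The sketch below does the rename mechanically, using hypothetical helper names (`rename_agent`, `agent_names`). It rewrites only active `tier1.` keys and leaves commented-out lines alone. It then lists which agent names the file declares, so you can compare them with the `--name` argument.

```python
# Hypothetical helpers for renaming a Flume agent in a .properties file.
# Only active "<old>." keys are rewritten; comment lines are left as they are.


def rename_agent(text, old, new):
    out = []
    for line in text.splitlines():
        body = line.lstrip()
        if body.startswith(old + "."):
            indent = line[: len(line) - len(body)]
            line = indent + new + body[len(old):]
        out.append(line)
    return "\n".join(out) + "\n"


def agent_names(text):
    """Agent prefixes that declare sources, sinks or channels."""
    names = set()
    for line in text.splitlines():
        body = line.strip()
        if not body or body[0] in "#!" or "=" not in body:
            continue
        parts = body.split("=", 1)[0].strip().split(".")
        if len(parts) == 2 and parts[1] in ("sources", "sinks", "channels"):
            names.add(parts[0])
    return names


conf = "tier1.sources=r1\ntier1.sinks=k1\ntier1.channels=c1\n#tier1.channels.c1.checkpointDir=./cp\n"
renamed = rename_agent(conf, "tier1", "a2")
print(sorted(agent_names(renamed)))  # ['a2']
```

The renamed file would then be started with `--name a2`.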

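PS: Along the way I also deleted Kafka's data, including the `__consumer_offsets` directories. `__consumer_offsets` is Kafka's internal, partitioned offsets topic, with one directory per partition. CM configures 50 partitions for it, so there are 50 such directories. That count belongs to `__consumer_offsets` itself (`offsets.topic.num.partitions`, default 50). The 50 directories therefore appear even when a topic you create yourself is given only 1 partition.

After this, Kafka reported errors in CM; simply restarting Kafka fixed them.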
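Those 50 directories are shared among all consumer groups. Kafka assigns each group to one partition of `__consumer_offsets` by hashing the group id, roughly `abs(groupId.hashCode()) % offsets.topic.num.partitions`.

The sketch below reproduces that calculation in Python for illustration, including Java's `String.hashCode` and Kafka's special handling of `Integer.MIN_VALUE` in its `abs` helper. It is not code taken from Kafka, and the default of 50 partitions is an assumption matching the CM setting above.

```python
# Illustrative re-implementation of how Kafka maps a consumer group id to a
# partition of the internal __consumer_offsets topic:
#   abs(groupId.hashCode()) % offsets.topic.num.partitions


def java_string_hash(s):
    """Java String.hashCode(): h = 31*h + c over UTF-16 code units, signed 32-bit."""
    data = s.encode("utf-16-be")
    h = 0
    for i in range(0, len(data), 2):
        unit = int.from_bytes(data[i:i + 2], "big")
        h = (31 * h + unit) & 0xFFFFFFFF
    return h - (1 << 32) if h >= (1 << 31) else h


def offsets_partition(group_id, num_partitions=50):
    h = java_string_hash(group_id)
    # Kafka's Utils.abs() returns 0 for Integer.MIN_VALUE instead of overflowing.
    h = 0 if h == -(1 << 31) else abs(h)
    return h % num_partitions


print(java_string_hash("hello"))  # 99162322
print(offsets_partition("ab"))    # 5
```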

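This post describes the Flume configuration errors I ran into, covering the blank-line problem and the source, channel and sink configuration. The agent was started with flume-ng and an explicit configuration file, and a misconfigured topic property on the KafkaSink kept the data from being consumed. The fixes were changing `topics` to `topic` and avoiding CDH's preset agent name, which otherwise led to a path-not-found error. In addition, after deleting Kafka's `__consumer_offsets` partition files, Kafka may need a restart.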
