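There are plenty of learning resources online, both free and paid. When I get hold of a fairly complete set, I don't rush into the first lesson. I first assess whether the set is worth studying, and sometimes ask more senior students for their opinion. If it passes, I draw up a study plan for it, which mainly consists of a roadmap and a progress sheet.

Here is a set of free video material I picked up. The quality is decent, and you can follow along with it.

Learning material is everywhere online, but if what you learn never forms a coherent system, and you only scratch the surface of each problem without digging deeper, real technical improvement is hard to achieve.

One person can move fast, but a group goes further. Whether you are a veteran already working in IT or a newcomer interested in the field, you are welcome to join our circle (technical discussion, learning resources, workplace venting, referrals to big tech companies, interview coaching) and learn and grow together.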

        self.branch1_conv1_bn = nn.BatchNorm2d(32)

        # Branch 2: 3x3 max pooling followed by a 1x1 convolution.
        self.branch2_pool = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
        self.branch2_conv1 = nn.Conv2d(in_channels=128, out_channels=32, kernel_size=1, stride=1)
        self.branch2_conv1_bn = nn.BatchNorm2d(32)

        # Branch 3: 1x1 convolution followed by one 3x3 convolution.
        self.branch3_conv1 = nn.Conv2d(in_channels=128, out_channels=24, kernel_size=1, stride=1)
        self.branch3_conv1_bn = nn.BatchNorm2d(24)
        self.branch3_conv2 = nn.Conv2d(in_channels=24, out_channels=32, kernel_size=3, stride=1, padding=1)
        self.branch3_conv2_bn = nn.BatchNorm2d(32)

        # Branch 4: 1x1 convolution followed by two 3x3 convolutions.
        self.branch4_conv1 = nn.Conv2d(in_channels=128, out_channels=24, kernel_size=1, stride=1)
        self.branch4_conv1_bn = nn.BatchNorm2d(24)
        self.branch4_conv2 = nn.Conv2d(in_channels=24, out_channels=32, kernel_size=3, stride=1, padding=1)
        self.branch4_conv2_bn = nn.BatchNorm2d(32)
        self.branch4_conv3 = nn.Conv2d(in_channels=32, out_channels=32, kernel_size=3, stride=1, padding=1)
        self.branch4_conv3_bn = nn.BatchNorm2d(32)

    def forward(self, x):
        x1 = self.branch1_conv1_bn(self.branch1_conv1(x))
        x2 = self.branch2_conv1_bn(self.branch2_conv1(self.branch2_pool(x)))
        x3 = self.branch3_conv2_bn(self.branch3_conv2(self.branch3_conv1_bn(self.branch3_conv1(x))))
        x4 = self.branch4_conv3_bn(self.branch4_conv3(self.branch4_conv2_bn(self.branch4_conv2(self.branch4_conv1_bn(self.branch4_conv1(x))))))
        # Concatenate the four 32-channel branch outputs along the channel axis.
        out = torch.cat([x1, x2, x3, x4], dim=1)
        return out
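
The channel counts of the four branches above can be checked with a small bookkeeping sketch. It only mirrors the `in_channels`/`out_channels` values from the listing and does not need PyTorch. The names `BRANCHES`, `branch_out_channels` and `concat_channels` are hypothetical helpers, and the `branch1` entry is an assumption: its `Conv2d` is not shown in this excerpt, only its `BatchNorm2d(32)`, so a 128 -> 32 convolution is assumed.

```python
# Channel bookkeeping for the module above, written as (in_channels, out_channels)
# pairs per convolution. Pooling and batch norm keep the channel count unchanged.
MODULE_IN_CHANNELS = 128

BRANCHES = {
    "branch1": [(128, 32)],  # assumption: conv not shown in the excerpt
    "branch2": [(128, 32)],
    "branch3": [(128, 24), (24, 32)],
    "branch4": [(128, 24), (24, 32), (32, 32)],
}


def branch_out_channels(layers, in_channels):
    """Walk one branch and return its output channels, checking every layer input."""
    channels = in_channels
    for layer_in, layer_out in layers:
        if layer_in != channels:
            raise ValueError(f"layer expects {layer_in} channels, got {channels}")
        channels = layer_out
    return channels


def concat_channels(branches, in_channels):
    """Channels after torch.cat(..., dim=1) over all branch outputs."""
    return sum(branch_out_channels(layers, in_channels) for layers in branches.values())


total = concat_channels(BRANCHES, MODULE_IN_CHANNELS)
print(total)  # 128
```

Because the concatenated output has the same 128 channels as the input, several of these modules can be stacked back to back in an `nn.Sequential`, as the model definition does.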

class FaceBoxes(nn.Module):

    def __init__(self, num_classes, phase):
        super(FaceBoxes, self).__init__()
        self.phase = phase
        self.num_classes = num_classes

        # Conv2dCReLU doubles its out_channels (24 -> 48, 64 -> 128).
        self.RapidlyDigestedConvolutionalLayers = nn.Sequential(
            Conv2dCReLU(in_channels=3, out_channels=24, kernel_size=7, stride=4, padding=3),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
            Conv2dCReLU(in_channels=48, out_channels=64, kernel_size=5, stride=2, padding=2),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )

        self.MultipleScaleConvolutionalLayers = nn.Sequential(
            InceptionModules(),
            InceptionModules(),
            InceptionModules(),
        )

        # Two extra stages, each halving the spatial size.
        self.conv3_1 = nn.Conv2d(in_channels=128, out_channels=128, kernel_size=1, stride=1)
        self.conv3_2 = nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=2, padding=1)
        self.conv4_1 = nn.Conv2d(in_channels=256, out_channels=128, kernel_size=1, stride=1)
        self.conv4_2 = nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=2, padding=1)

        # Detection heads: 21 anchors per cell on the first map, 1 on the other two.
        self.loc_layer1 = nn.Conv2d(in_channels=128, out_channels=21*4, kernel_size=3, stride=1, padding=1)
        self.conf_layer1 = nn.Conv2d(in_channels=128, out_channels=21*num_classes, kernel_size=3, stride=1, padding=1)
        self.loc_layer2 = nn.Conv2d(in_channels=256, out_channels=4, kernel_size=3, stride=1, padding=1)
        self.conf_layer2 = nn.Conv2d(in_channels=256, out_channels=num_classes, kernel_size=3, stride=1, padding=1)
        self.loc_layer3 = nn.Conv2d(in_channels=256, out_channels=4, kernel_size=3, stride=1, padding=1)
        self.conf_layer3 = nn.Conv2d(in_channels=256, out_channels=num_classes, kernel_size=3, stride=1, padding=1)

        if self.phase == 'test':
            self.softmax = nn.Softmax(dim=-1)
        elif self.phase == 'train':
            # Xavier initialization for convolutions, identity-like batch norm.
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    if m.bias is not None:
                        nn.init.xavier_normal_(m.weight.data)
                        nn.init.constant_(m.bias, 0)
                    else:
                        nn.init.xavier_normal_(m.weight.data)
                elif isinstance(m, nn.BatchNorm2d):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)

    def forward(self, x):
        x = self.RapidlyDigestedConvolutionalLayers(x)
        out1 = self.MultipleScaleConvolutionalLayers(x)
        out2 = self.conv3_2(self.conv3_1(out1))
        out3 = self.conv4_2(self.conv4_1(out2))

        loc1 = self.loc_layer1(out1)
        conf1 = self.conf_layer1(out1)
        loc2 = self.loc_layer2(out2)
        conf2 = self.conf_layer2(out2)
        loc3 = self.loc_layer3(out3)
        conf3 = self.conf_layer3(out3)

        # Move channels last, then flatten each map to (batch, -1) before concatenating.
        locs = torch.cat([loc1.permute(0, 2, 3, 1).contiguous().view(loc1.size(0), -1),
                          loc2.permute(0, 2, 3, 1).contiguous().view(loc2.size(0), -1),
                          loc3.permute(0, 2, 3, 1).contiguous().view(loc3.size(0), -1)], dim=1)

        confs = torch.cat([conf1.permute(0, 2, 3, 1).contiguous().view(conf1.size(0), -1),
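
To get a feel for how long the flattened `locs` tensor becomes, the sketch below traces the spatial size through the layers defined above and counts the anchors. It uses the standard output-size formula for `Conv2d`/`MaxPool2d` (dilation 1, floor rounding) and needs no PyTorch. The 1024 x 1024 input and `NUM_CLASSES = 2` are assumptions, as neither is stated in this section, and the helper names are hypothetical. The `confs` length is computed on the assumption that the truncated `confs` line is flattened the same way as `locs`.

```python
def conv_out_size(size, kernel_size, stride, padding):
    """Output length along one spatial axis for Conv2d/MaxPool2d (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel_size) // stride + 1


# (kernel_size, stride, padding) of the four layers in RapidlyDigestedConvolutionalLayers.
RDCL = [(7, 4, 3), (3, 2, 1), (5, 2, 2), (3, 2, 1)]
DOWNSAMPLE = (3, 2, 1)         # conv3_2 and conv4_2
ANCHORS_PER_CELL = [21, 1, 1]  # out_channels / 4 of loc_layer1, loc_layer2, loc_layer3


def feature_map_sizes(input_size):
    """Side lengths of out1, out2 and out3 for a square input."""
    size = input_size
    for kernel_size, stride, padding in RDCL:
        size = conv_out_size(size, kernel_size, stride, padding)
    # The Inception modules and the 1x1 convolutions keep the spatial size.
    sizes = [size]
    for _ in range(2):
        size = conv_out_size(size, *DOWNSAMPLE)
        sizes.append(size)
    return sizes


def total_anchors(input_size):
    """Number of anchors summed over the three detection feature maps."""
    sizes = feature_map_sizes(input_size)
    return sum(n * s * s for n, s in zip(ANCHORS_PER_CELL, sizes))


INPUT_SIZE = 1024  # assumption: square 1024 x 1024 input
NUM_CLASSES = 2    # assumption: face vs. background

anchors = total_anchors(INPUT_SIZE)
print(feature_map_sizes(INPUT_SIZE))  # [32, 16, 8]
print(anchors)                        # 21824
print(anchors * 4)                    # 87296 values per image in locs
print(anchors * NUM_CLASSES)          # 43648 values per image in confs
```

Under these assumptions the first feature map contributes 21 * 32 * 32 = 21504 of the 21824 anchors, which is why `loc_layer1` is much wider than the other two heads.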

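Learning Python well pays off whether you want a job or a side income, but it still takes a study plan. To finish, here is a full set of Python learning materials that may help anyone who wants to learn Python.

1. Learning roadmap for all Python directions

The roadmap collects the commonly used Python techniques into a summary of key topics for each field. You can use it to look up learning resources topic by topic, so that what you learn is reasonably complete.

2. Learning software

To do a good job, you first have to sharpen your tools. The development software commonly used for learning Python is all collected here, which saves a lot of time.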

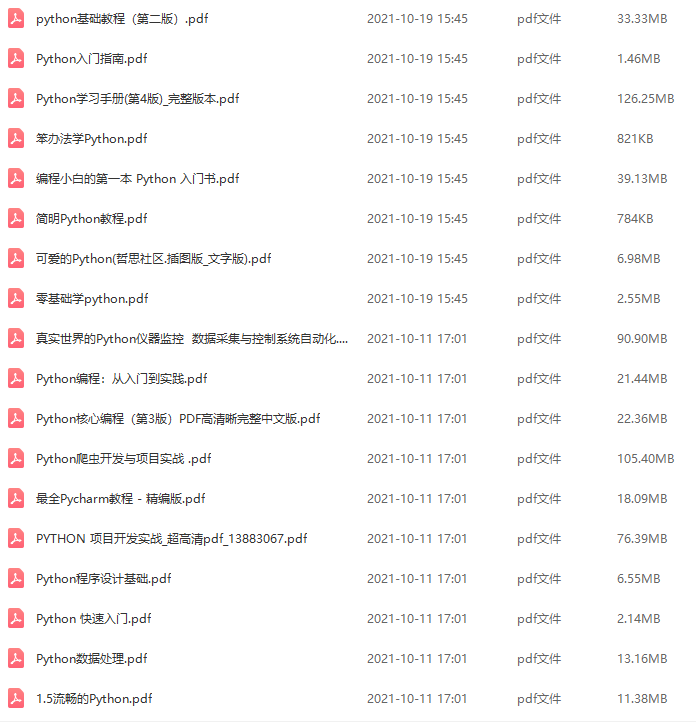

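3. Full set of PDF e-books

The advantage of books is that they are authoritative and systematic. When starting out you can rely on videos or on one person's lectures, but once you have finished and feel you have mastered the material, it is still worth going through the books. Reading authoritative technical books is a path every programmer has to take.

4. Introductory videos

When learning from videos, don't just watch and think without getting hands-on. A sounder approach is to apply what you have learned once you understand it, and practice projects are a good fit for that.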

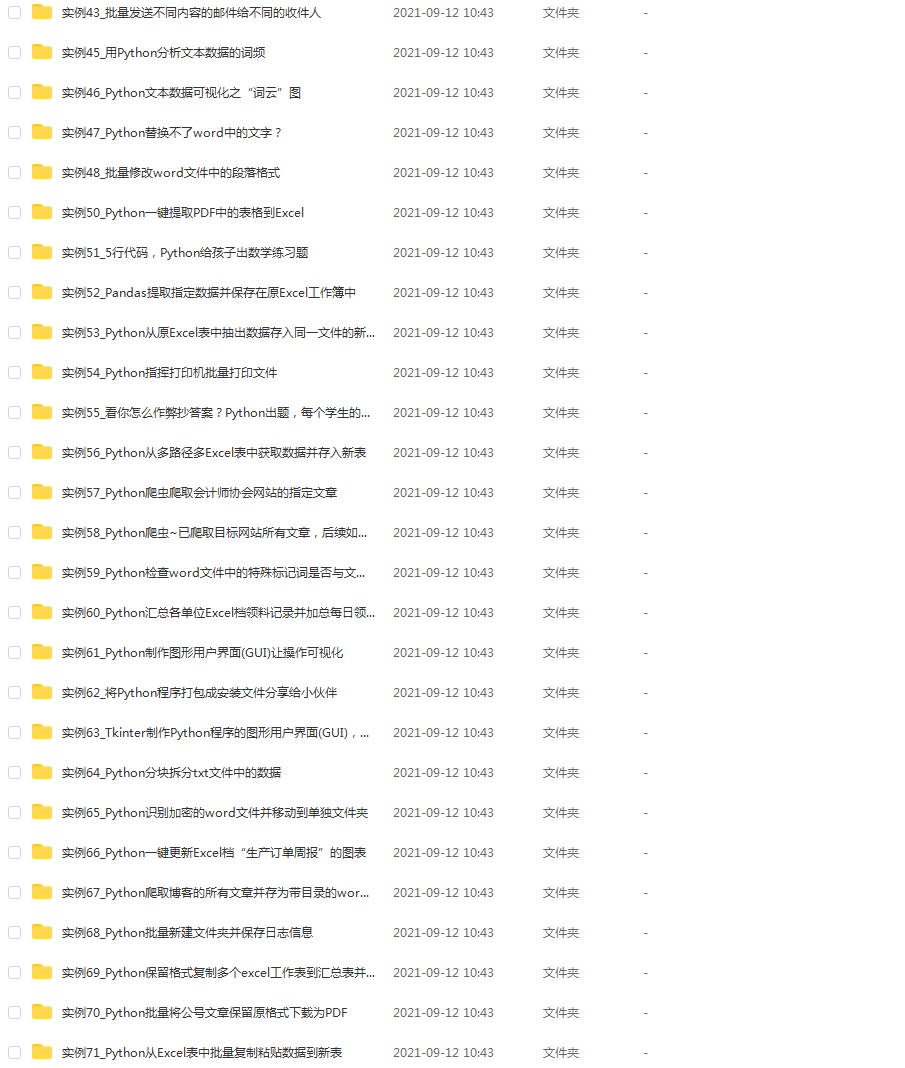

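5. Hands-on case studies

Theory alone is of little use. Type along and practice hands-on so that you can apply what you have learned, and working through case studies is a good way to do that.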

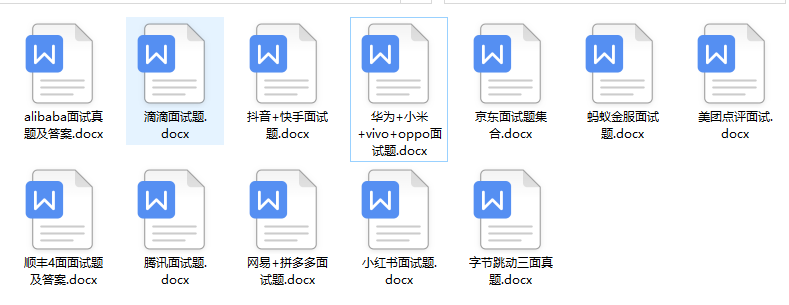

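6. Interview materials

Many of us learn Python to land a well-paid job. The interview questions below come from recent interview materials at major internet companies such as Alibaba, Tencent, and ByteDance, with answers provided by an Alibaba engineer. I believe working through the set will help you find a job you are happy with.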
