
Preface

These notes record a quick way to add the CBAM attention mechanism to YOLOv8.

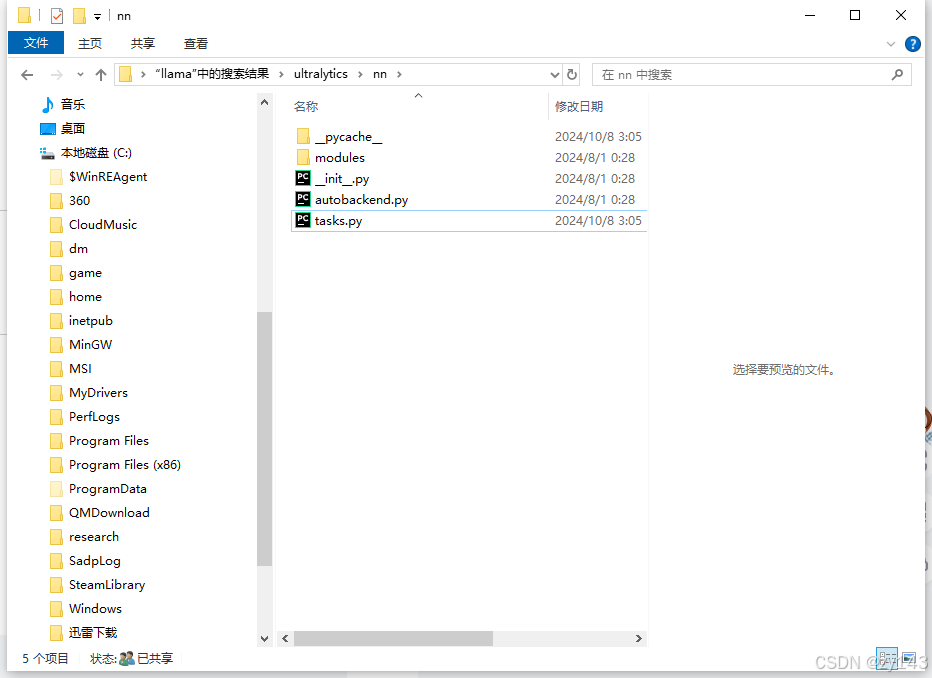
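Because the next step overwrites a file inside the installed package, it can help to find that file programmatically and keep a copy of the original first. The following is a minimal sketch, assuming `ultralytics` is installed as a regular Python package with `tasks.py` under its `nn` directory; the helper names `find_tasks_py` and `backup_file` are hypothetical and not part of ultralytics.

```python
import importlib.util
import shutil
from pathlib import Path
from typing import Optional


def find_tasks_py(package: str = "ultralytics") -> Optional[Path]:
    """Return <package>/nn/tasks.py, or None if the package or file is missing."""
    try:
        # find_spec locates a top-level package without importing it
        spec = importlib.util.find_spec(package)
    except (ImportError, ValueError):
        return None
    if spec is None or not spec.submodule_search_locations:
        return None
    package_dir = Path(list(spec.submodule_search_locations)[0])
    candidate = package_dir / "nn" / "tasks.py"  # assumed layout
    return candidate if candidate.is_file() else None


def backup_file(path: Path, suffix: str = ".bak") -> Path:
    """Copy `path` to `path + suffix` (once) and return the backup path."""
    backup = path.with_name(path.name + suffix)
    if not backup.exists():  # never overwrite the first, pristine backup
        shutil.copy2(path, backup)
    return backup
```

Calling `backup_file(find_tasks_py())` before editing leaves a `tasks.py.bak` next to the original, so the change can be undone by copying the backup over the modified file.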

1. Modify tasks.py

The file's location is shown in the screenshot above. Replace the contents of tasks.py with the following:

# Ultralytics YOLO 🚀, AGPL-3.0 license

import contextlib
from copy import deepcopy
from pathlib import Path

import torch
import torch.nn as nn

from ultralytics.nn.modules import (
    AIFI,
    C1,
    C2,
    C3,
    C3TR,
    ELAN1,
    OBB,
    PSA,
    SPP,
    SPPELAN,
    SPPF,
    AConv,
    ADown,
    Bottleneck,
    BottleneckCSP,
    C2f,
    C2fAttn,
    C2fCIB,
    C3Ghost,
    C3x,
    CBFuse,
    CBLinear,
    Classify,
    Concat,
    Conv,
    Conv2,
    ConvTranspose,
    CBAM,
    Detect,
    DWConv,
    DWConvTranspose2d,
    Focus,
    GhostBottleneck,
    GhostConv,
    HGBlock,
    HGStem,
    ImagePoolingAttn,
    Pose,
    RepC3,
    RepConv,
    RepNCSPELAN4,
    RepVGGDW,
    ResNetLayer,
    RTDETRDecoder,
    SCDown,
    Segment,
    WorldDetect,
    v10Detect,
)
from ultralytics.utils import DEFAULT_CFG_DICT, DEFAULT_CFG_KEYS, LOGGER, colorstr, emojis, yaml_load
from ultralytics.utils.checks import check_requirements, check_suffix, check_yaml
from ultralytics.utils.loss import (
    E2EDetectLoss,
    v8ClassificationLoss,
    v8DetectionLoss,
    v8OBBLoss,
    v8PoseLoss,
    v8SegmentationLoss,
)
from ultralytics.utils.ops import make_divisible
from ultralytics.utils.plotting import feature_visualization
from ultralytics.utils.torch_utils import (
    fuse_conv_and_bn,
    fuse_deconv_and_bn,
    initialize_weights,
    intersect_dicts,
    model_info,
    scale_img,
    time_sync,
)

try:
    import thop
except ImportError:
    thop = None

class BaseModel(nn.Module):
    """The BaseModel class serves as a base class for all the models in the Ultralytics YOLO family."""

    def forward(self, x, *args, **kwargs):
        """
        Forward pass of the model on a single scale. Wrapper for `_forward_once` method.

        Args:
            x (torch.Tensor | dict): The input image tensor or a dict including image tensor and gt labels.

        Returns:
            (torch.Tensor): The output of the network.
        """
        if isinstance(x, dict):  # for cases of training and validating while training.
            return self.loss(x, *args, **kwargs)
        return self.predict(x, *args, **kwargs)

    def predict(self, x, profile=False, visualize=False, augment=False, embed=None):
        """
        Perform a forward pass through the network.

        Args:
            x (torch.Tensor): The input tensor to the model.
            profile (bool): Print the computation time of each layer if True, defaults to False.
            visualize (bool): Save the feature maps of the model if True, defaults to False.
            augment (bool): Augment image during prediction, defaults to False.
            embed (list, optional): A list of feature vectors/embeddings to return.

        Returns:
            (torch.Tensor): The last output of the model.
        """
        if augment:
            return self._predict_augment(x)
        return self._predict_once(x, profile, visualize, embed)

    def _predict_once(self, x, profile=False, visualize=False, embed=None):
        """
        Perform a forward pass through the network.

        Args:
            x (torch.Tensor): The input tensor to the model.
            profile (bool): Print the computation time of each layer if True, defaults to False.
            visualize (bool): Save the feature maps of the model if True, defaults to False.
            embed (list, optional): A list of feature vectors/embeddings to return.

        Returns:
            (torch.Tensor): The last output of the model.
        """
        y, dt, embeddings = [], [], []  # outputs
        for m in self.model:
            if m.f != -1:  # if not from previous layer
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers
            if profile:
                self._profile_one_layer(m, x, dt)
            x = m(x)  # run
            y.append(x if m.i in self.save else None)  # save output
            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)
            if embed and m.i in embed:
                embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1))  # flatten
                if m.i == max(embed):
                    return torch.unbind(torch.cat(embeddings, 1), dim=0)
        return x

    def _predict_augment(self, x):
        """Perform augmentations on input image x and return augmented inference."""
        LOGGER.warning(
            f"WARNING ⚠️ {self.__class__.__name__} does not support 'augment=True' prediction. "
            f"Reverting to single-scale prediction."
        )
        return self._predict_once(x)

    def _profile_one_layer(self, m, x, dt):
        """
        Profile the computation time and FLOPs of a single layer of the model on a given input. Appends the results to
        the provided list.

        Args:
            m (nn.Module): The layer to be profiled.
            x (torch.Tensor): The input data to the layer.
            dt (list): A list to store the computation time of the layer.

        Returns:
            None
        """
        c = m == self.model[-1] and isinstance(x, list)  # is final layer list, copy input as inplace fix
        flops = thop.profile(m, inputs=[x.copy() if c else x], verbose=False)[0] / 1e9 * 2 if thop else 0  # GFLOPs
        t = time_sync()
        for _ in range(10):
            m(x.copy() if c else x)
        dt.append((time_sync() - t) * 100)
        if m == self.model[0]:
            LOGGER.info(f"{'time (ms)':>10s} {'GFLOPs':>10s} {'params':>10s} module")
        LOGGER.info(f"{dt[-1]:10.2f} {flops:10.2f} {m.np:10.0f} {m.type}")
        if c:
            LOGGER.info(f"{sum(dt):10.2f} {'-':>10s} {'-':>10s} Total")

    def fuse(self, verbose=True):
        """
        Fuse the `Conv2d()` and `BatchNorm2d()` layers of the model into a single layer, in order to improve the
        computation efficiency.

        Returns:
            (nn.Module): The fused model is returned.
        """
        if not self.is_fused():
            for m in self.model.modules():
                if isinstance(m, (Conv, Conv2, DWConv)) and hasattr(m, "bn"):
                    if isinstance(m, Conv2):
                        m.fuse_convs()
                    m.conv = fuse_conv_and_bn(m.conv, m.bn)  # update conv
                    delattr(m, "bn")  # remove batchnorm
                    m.forward = m.forward_fuse  # update forward
                if isinstance(m, ConvTranspose) and hasattr(m, "bn"):
                    m.conv_transpose = fuse_deconv_and_bn(m.conv_transpose, m.bn)
                    delattr(m, "bn")  # remove batchnorm
                    m.forward = m.forward_fuse  # update forward
                if isinstance(m, RepConv):
                    m.fuse_convs()
                    m.forward = m.forward_fuse  # update forward
                if isinstance(m, RepVGGDW):
                    m.fuse()
                    m.forward = m.forward_fuse
            self.info(verbose=verbose)

        return self

    def is_fused(self, thresh=10):
        """
        Check if the model has less than a certain threshold of BatchNorm layers.

        Args:
            thresh (int, optional): The threshold number of BatchNorm layers. Default is 10.

        Returns:
            (bool): True if the number of BatchNorm layers in the model is less than the threshold, False otherwise.
        """
        bn = tuple(v for k, v in nn.__dict__.items() if "Norm" in k)  # normalization layers, i.e. BatchNorm2d()
        return sum(isinstance(v, bn) for v in self.modules()) < thresh  # True if < 'thresh' BatchNorm layers in model

    def info(self, detailed=False, verbose=True, imgsz=640):
        """
        Prints model information.

        Args:
            detailed (bool): if True, prints out detailed information about the model. Defaults to False
            verbose (bool): if True, prints out the model information. Defaults to False
            imgsz (int): the size of the image that the model will be trained on. Defaults to 640
        """
        return model_info(self, detailed=detailed, verbose=verbose, imgsz=imgsz)

    def _apply(self, fn):
        """
        Applies a function to all the tensors in the model that are not parameters or registered buffers.

        Args:
            fn (function): the function to apply to the model

        Returns:
            (BaseModel): An updated BaseModel object.
        """
        self = super()._apply(fn)
        m = self.model[-1]  # Detect()
        if isinstance(m, Detect):  # includes all Detect subclasses like Segment, Pose, OBB, WorldDetect
            m.stride = fn(m.stride)
            m.anchors = fn(m.anchors)
            m.strides = fn(m.strides)
        return self

    def load(self, weights, verbose=True):
        """
        Load the weights into the model.

        Args:
            weights (dict | torch.nn.Module): The pre-trained weights to be loaded.
            verbose (bool, optional): Whether to log the transfer progress. Defaults to True.
        """
        model = weights["model"] if isinstance(weights, dict) else weights  # torchvision models are not dicts
        csd = model.float().state_dict()  # checkpoint state_dict as FP32
        csd = intersect_dicts(csd, self.state_dict())  # intersect
        self.load_state_dict(csd, strict=False)  # load
        if verbose:
            LOGGER.info(f"Transferred {len(csd)}/{len(self.model.state_dict())} item
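The `_predict_once` loop in the listing decides each layer's input from its `f` ("from") attribute, which matters when a new layer such as CBAM is inserted and later indices shift. The sketch below replays only that routing logic on plain numbers so it can be run without PyTorch. `ToyLayer` and `run` are hypothetical names invented for this illustration; in the real model the layers and the `save` list come from the parsed model definition.

```python
from typing import Callable, List, Sequence, Set, Union

From = Union[int, List[int]]


class ToyLayer:
    """Hypothetical stand-in for a parsed layer: i = own index, f = source index(es)."""

    def __init__(self, i: int, f: From, fn: Callable):
        self.i, self.f, self.fn = i, f, fn

    def __call__(self, x):
        return self.fn(x)


def run(layers: Sequence[ToyLayer], save: Set[int], x):
    """Replay the input-routing logic of `_predict_once` on plain Python values."""
    y = []  # cached outputs; None for layers that no later layer reads
    for m in layers:
        if m.f != -1:  # input is not simply the previous layer's output
            x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]
        x = m(x)  # run the layer
        y.append(x if m.i in save else None)  # keep only outputs needed later
    return x


layers = [
    ToyLayer(0, -1, lambda v: v + 1),         # reads the network input
    ToyLayer(1, -1, lambda v: v * 10),        # reads layer 0 (the previous layer)
    ToyLayer(2, [-1, 0], lambda vs: sum(vs)),  # Concat-like: previous output plus layer 0
]

result = run(layers, save={0}, x=1)
print(result)  # (1 + 1) * 10 + (1 + 1) = 22
```

Layer 2 can read layer 0 only because index 0 is in `save`; with an empty `save` set the cached value would be `None` and the sum would fail. Absolute indices in `f` are therefore the part to recheck after adding a layer to a model definition.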
