PCIe devices are commonplace, so this article does not cover PCIe basics and only describes the solution.

--------------------------------------------------------------------------------------------------------------------------------------

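Feature: if the SSD is disconnected unexpectedly while it is in use (reading or writing), the kernel recovers it automatically, without a system reboot and without destabilizing the system.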

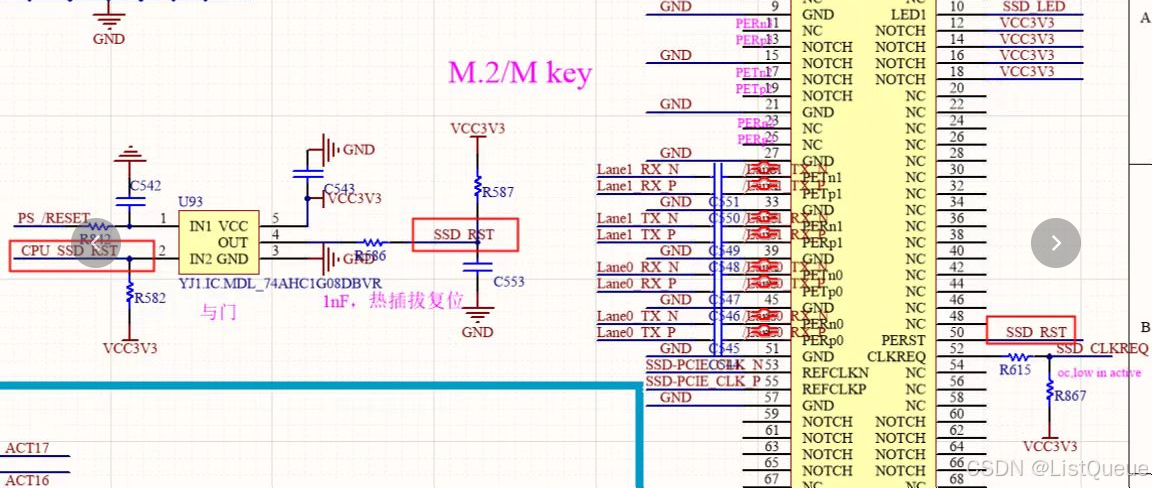

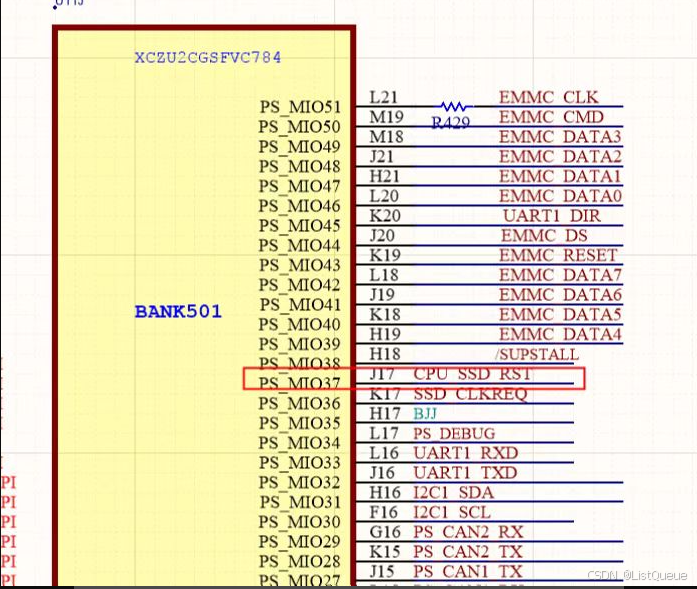

Hardware schematic:

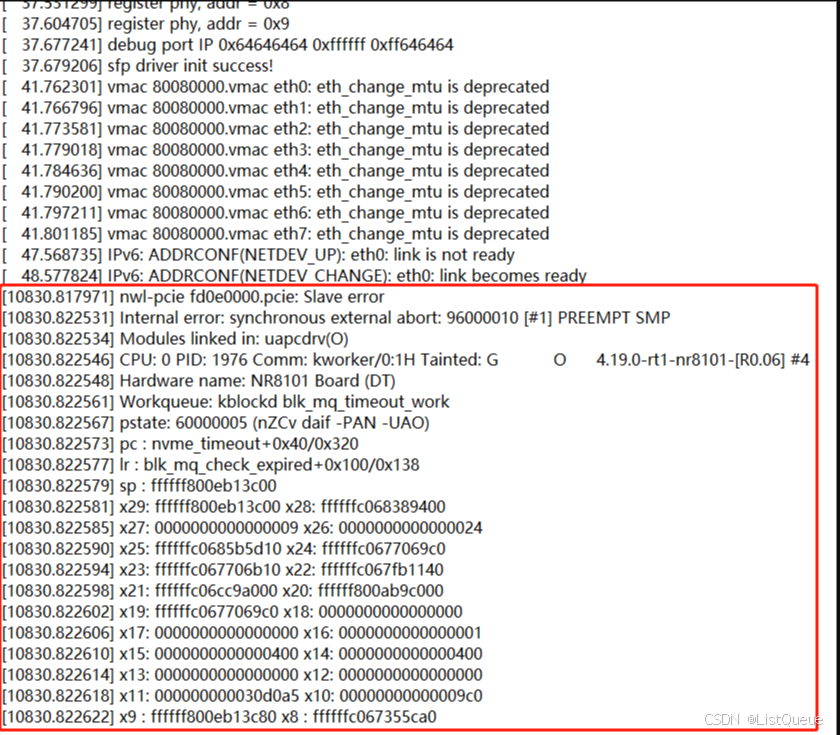

Error log:

To remove the PCIe device and rescan the bus manually, use commands like these:

```shell
echo 1 > /sys/bus/pci/devices/0000\:01\:00.0/remove
echo 1 > /sys/bus/pci/rescan
```
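The same two sysfs writes can also be issued from C, for example by a small recovery daemon. This is a minimal sketch and not part of the patch: `pci_sysfs_path()`, `sysfs_write_one()` and `pci_remove_and_rescan()` are hypothetical helper names, and the PCI address is written without the backslashes, which are only shell escaping and not part of the sysfs path.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: build "/sys/bus/pci/devices/<bdf>/<attr>" into buf.
 * Returns 0 on success, -1 if the result does not fit. */
int pci_sysfs_path(char *buf, size_t size, const char *bdf, const char *attr)
{
	int n;

	assert(buf != NULL && bdf != NULL && attr != NULL);
	n = snprintf(buf, size, "/sys/bus/pci/devices/%s/%s", bdf, attr);
	return (n < 0 || (size_t)n >= size) ? -1 : 0;
}

/* Hypothetical helper: the C equivalent of `echo 1 > path`.
 * Returns 0 on success, -1 on any open/write/close error. */
int sysfs_write_one(const char *path)
{
	FILE *f = fopen(path, "w");

	if (f == NULL)
		return -1;
	if (fputs("1\n", f) == EOF) {
		fclose(f);
		return -1;
	}
	/* stdio buffers the write; a sysfs error surfaces when fclose() flushes it */
	return fclose(f) == 0 ? 0 : -1;
}

/* Remove one PCI function, then rescan all buses (needs root). */
int pci_remove_and_rescan(const char *bdf)
{
	char path[256];

	if (pci_sysfs_path(path, sizeof(path), bdf, "remove") != 0)
		return -1;
	if (sysfs_write_one(path) != 0)
		return -1;
	return sysfs_write_one("/sys/bus/pci/rescan");
}
```

Called as root, `pci_remove_and_rescan("0000:01:00.0")` should have the same effect as running the two `echo` commands in sequence.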

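Solution: the patch below does the equivalent of these two commands inside the `pcie-xilinx-nwl` host driver. A link-down interrupt removes the device and force-unmounts its partitions; a polling kernel thread notices when the link comes back, pulses the SSD reset GPIO, and rescans the bus.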

```diff
---
 arch/arm64/boot/dts/xilinx/zynqmp-nr8101.dts |   4 +
 drivers/pci/controller/pcie-xilinx-nwl.c     | 104 ++++++++++++++++++-
 2 files changed, 106 insertions(+), 2 deletions(-)

diff --git a/arch/arm64/boot/dts/xilinx/zynqmp-nr8101.dts b/arch/arm64/boot/dts/xilinx/zynqmp-nr8101.dts
index f3225ed..784d2d6 100644
--- a/arch/arm64/boot/dts/xilinx/zynqmp-nr8101.dts
+++ b/arch/arm64/boot/dts/xilinx/zynqmp-nr8101.dts
@@ -640,3 +640,7 @@
 &xlnx_dpdma {
 	status = "okay";
 };
+
+&pcie {
+	ssd-reset-gpios = <&gpio 37 GPIO_ACTIVE_HIGH>;
+};
```
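The property name is what ties this DTS fragment to the driver: the patch calls `devm_gpiod_get(pcie->dev, "ssd-reset", ...)`, and the gpiolib device-tree lookup turns that connection ID into `ssd-reset-gpios` (falling back to the older `ssd-reset-gpio`). The model below spells out that naming rule with hypothetical function names; it is not the kernel's own code. It also shows why `GPIO_ACTIVE_HIGH` matters little here: the thread drives the pin with `gpiod_set_raw_value_cansleep()`, which ignores the active-low flag, so the flag only affects the initial level requested by `GPIOD_OUT_HIGH` at probe time.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical model of the gpiolib OF lookup order for a connection ID:
 * attempt 0 -> "<con_id>-gpios", attempt 1 -> legacy "<con_id>-gpio".
 * Returns 0 on success, -1 for an invalid attempt or a too-small buffer. */
int gpio_dt_property_name(char *buf, size_t size, const char *con_id, int attempt)
{
	static const char *const suffixes[] = { "gpios", "gpio" };
	int n;

	if (attempt < 0 || attempt >= 2)
		return -1;
	if (con_id != NULL)
		n = snprintf(buf, size, "%s-%s", con_id, suffixes[attempt]);
	else
		n = snprintf(buf, size, "%s", suffixes[attempt]);
	return (n < 0 || (size_t)n >= size) ? -1 : 0;
}

/* Hypothetical model of the physical line level: the "logical" setters
 * invert the value for an active-low GPIO, the "raw" setters never do. */
int gpio_line_level(int value, bool active_low, bool raw)
{
	value = !!value;
	return (raw || !active_low) ? value : !value;
}
```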

```diff
diff --git a/drivers/pci/controller/pcie-xilinx-nwl.c b/drivers/pci/controller/pcie-xilinx-nwl.c
index 449c8bc..93e1b2b 100644
--- a/drivers/pci/controller/pcie-xilinx-nwl.c
+++ b/drivers/pci/controller/pcie-xilinx-nwl.c
@@ -21,9 +21,14 @@
 #include <linux/pci.h>
 #include <linux/platform_device.h>
 #include <linux/irqchip/chained_irq.h>
-
+#include <linux/gpio/consumer.h>
+#include <linux/kthread.h>
+#include <linux/syscalls.h>
+#include "../../../../fs/mount.h"
 #include "../pci.h"
 
+#define NVME_SSD_PATH_PREFIX "/dev/nvme0n"
+
 /* Bridge core config registers */
 #define BRCFG_PCIE_RX0 0x00000000
 #define BRCFG_INTERRUPT 0x00000010
@@ -181,6 +186,11 @@ struct nwl_pcie {
 	struct irq_domain *legacy_irq_domain;
 	struct clk *clk;
 	raw_spinlock_t leg_mask_lock;
+	bool link_status;
+	struct gpio_desc *gpio_ssd_reset;
+	struct task_struct *link_detect_thread;
+	struct work_struct link_down_cleanup_work;
+	struct work_struct link_up_rescan_work;
 };
 
 static inline u32 nwl_bridge_readl(struct nwl_pcie *pcie, u32 off)
@@ -272,6 +282,78 @@ static struct pci_ops nwl_pcie_ops = {
 	.write = pci_generic_config_write,
 };
 
+static void force_umount_nvme_partitions(struct nwl_pcie *pcie)
+{
+	struct mnt_namespace *ns = current->nsproxy->mnt_ns;
+	struct mount *mnt, *tmp;
+	char *kbuf = kmalloc(PATH_MAX, GFP_KERNEL);
+	char *mount_point_path;
+
+	if (kbuf) {
+		list_for_each_entry_safe(mnt, tmp, &ns->list, mnt_list) {
+			if (!strncmp(mnt->mnt_devname, NVME_SSD_PATH_PREFIX, strlen(NVME_SSD_PATH_PREFIX))) {
+				const struct path mnt_path = { .dentry = mnt->mnt.mnt_root, .mnt = &mnt->mnt };
+				memset(kbuf, 0, PATH_MAX);
+				mount_point_path = d_absolute_path(&mnt_path, kbuf, PATH_MAX);
+				if (!IS_ERR(mount_point_path)) {	/* d_absolute_path() returns ERR_PTR on failure */
+					dev_info(pcie->dev, "Force umounting %s : %s\n", mnt->mnt_devname, mount_point_path);
+					if (ksys_umount(mount_point_path, MNT_DETACH|MNT_FORCE)) {
+						dev_info(pcie->dev, "Failed to umount %s\n", mnt->mnt_devname);
+					}
+				}
+			}
+		}
+		kfree(kbuf);
+	} else {
+		dev_info(pcie->dev, "Failed to alloc memory\n");
+	}
+}
+
+static void link_up_rescan_fn(struct work_struct *work)
+{
+	struct nwl_pcie *pcie = container_of(work, struct nwl_pcie, link_up_rescan_work);
+	struct pci_bus *self = pci_find_bus(0, 0);
+	pci_lock_rescan_remove();	/* pci_rescan_bus() must run under this lock */
+	if (self != NULL)
+		pci_rescan_bus(self);
+	pci_unlock_rescan_remove();
+	pcie->link_status = nwl_pcie_link_up(pcie);
+}
+
+static void link_down_cleanup_fn(struct work_struct *work)
+{
+	struct nwl_pcie *pcie = container_of(work, struct nwl_pcie, link_down_cleanup_work);
+	struct pci_dev *pdev = pci_get_domain_bus_and_slot(0, 1, 0);
+	if (pdev != NULL) {
+		pci_disable_device(pdev);
+		pci_stop_and_remove_bus_device_locked(pdev);
+		pci_dev_put(pdev);	/* drop the reference taken by pci_get_domain_bus_and_slot() */
+		force_umount_nvme_partitions(pcie);
+	}
+	pcie->link_status = false;
+}
+
+static int pcie_link_detect_thread_fn(void *data)
+{
+	struct nwl_pcie *pcie = data;
+	while (!kthread_should_stop()) {
+		if (!pcie->link_status && nwl_pcie_link_up(pcie)) {
+			dev_info(pcie->dev, "Link is UP\n");
+			msleep_interruptible(500);
+			gpiod_set_raw_value_cansleep(pcie->gpio_ssd_reset, 0);
+			msleep_interruptible(100);
+			gpiod_set_raw_value_cansleep(pcie->gpio_ssd_reset, 1);
+			msleep_interruptible(1000);
+			dev_info(pcie->dev, "Rescan devices\n");
+			schedule_work(&pcie->link_up_rescan_work);
+			msleep_interruptible(2000);
+		} else {
+			msleep_interruptible(1000);
+		}
+	}
+	return 0;
+}
+
 static irqreturn_t nwl_pcie_misc_handler(int irq, void *data)
 {
 	struct nwl_pcie *pcie = data;
```
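`force_umount_nvme_partitions()` walks the mount list, matches device names against `NVME_SSD_PATH_PREFIX`, and lazily force-unmounts the hits. Inside the kernel this relies on the private `fs/mount.h` header and on `ksys_umount()`, an internal syscall helper that is not exported to modules, and it walks `ns->list` without taking the namespace lock. Where the cleanup can be left to userspace, the same matching can be done with standard calls. The sketch below is such an alternative, not the author's method: `find_nvme_mounts()` and `force_umount_nvme()` are hypothetical names, `getmntent()` reads a mount table such as `/proc/self/mounts`, and `umount2()` is given the same `MNT_DETACH | MNT_FORCE` flags as the patch.

```c
#include <assert.h>
#include <mntent.h>
#include <stdio.h>
#include <string.h>
#include <sys/mount.h>

#define NVME_SSD_PATH_PREFIX "/dev/nvme0n"	/* same prefix as the patch */

/* Hypothetical helper: copy into dirs[] the mount points whose device name
 * starts with NVME_SSD_PATH_PREFIX. Returns the number of matches stored. */
int find_nvme_mounts(FILE *mtab, char dirs[][256], int max)
{
	struct mntent *ent;
	size_t plen = strlen(NVME_SSD_PATH_PREFIX);
	int n = 0;

	while (n < max && (ent = getmntent(mtab)) != NULL) {
		if (strncmp(ent->mnt_fsname, NVME_SSD_PATH_PREFIX, plen) != 0)
			continue;
		snprintf(dirs[n], 256, "%s", ent->mnt_dir);
		n++;
	}
	return n;
}

/* Hypothetical helper: lazily force-unmount every matching mount point
 * (needs CAP_SYS_ADMIN). Returns the number of umount2() failures, or -1. */
int force_umount_nvme(void)
{
	char dirs[32][256];
	FILE *mtab = setmntent("/proc/self/mounts", "r");
	int n, i, failures = 0;

	if (mtab == NULL)
		return -1;
	n = find_nvme_mounts(mtab, dirs, 32);
	endmntent(mtab);

	/* Go in reverse table order so child mounts are handled before their
	 * parents; a lazy unmount of a parent would already detach them. */
	for (i = n - 1; i >= 0; i--) {
		if (umount2(dirs[i], MNT_DETACH | MNT_FORCE) != 0) {
			perror(dirs[i]);
			failures++;
		}
	}
	return failures;
}
```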

```diff
@@ -287,6 +369,11 @@ static irqreturn_t nwl_pcie_misc_handler(int irq, void *data)
 
 	if (misc_stat & MSGF_MISC_SR_RXMSG_OVER)
 		dev_err(dev, "Received Message FIFO Overflow\n");
+	if ((misc_stat & MSGF_MISC_SR_LINK_DOWN) && pcie->link_status) {
+		dev_info(pcie->dev, "Link is DOWN\n");
+		schedule_work(&pcie->link_down_cleanup_work);
+	}
+
 
 	if (misc_stat & MSGF_MISC_SR_SLAVE_ERR)
 		dev_err(dev, "Slave error\n");
@@ -733,7 +820,8 @@ static int nwl_pcie_bridge_init(struct nwl_pcie *pcie)
 	ecam_val |= (pcie->last_busno << E_ECAM_SIZE_SHIFT);
 	writel(ecam_val, (pcie->ecam_base + PCI_PRIMARY_BUS));
 
-	if (nwl_pcie_link_up(pcie))
+	pcie->link_status = nwl_pcie_link_up(pcie);
+	if (pcie->link_status)
 		dev_info(dev, "Link is UP\n");
 	else
 		dev_info(dev, "Link is DOWN\n");
@@ -919,6 +1007,18 @@ static int nwl_pcie_probe(struct platform_device *pdev)
 	list_for_each_entry(child, &bus->children, node)
 		pcie_bus_configure_settings(child);
 	pci_bus_add_devices(bus);
+
+	INIT_WORK(&pcie->link_down_cleanup_work, link_down_cleanup_fn);
+	INIT_WORK(&pcie->link_up_rescan_work, link_up_rescan_fn);
+
+	pcie->gpio_ssd_reset = devm_gpiod_get(pcie->dev, "ssd-reset", GPIOD_OUT_HIGH);
+	if (IS_ERR(pcie->gpio_ssd_reset)) {
+		dev_err(&pdev->dev, "Failed to get ssd-reset pin.\n");
+		pcie->gpio_ssd_reset = NULL;	/* gpiod_set_*() on a NULL descriptor is a no-op */
+	}
+	pcie->link_detect_thread = kthread_run(pcie_link_detect_thread_fn, pcie, "nwl-pcie-detect");
+	if (IS_ERR(pcie->link_detect_thread))
+		dev_err(pcie->dev, "Could not start kernel thread.\n");
 	return 0;
 
 error:
```
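The recovery logic rests on the `link_status` flag: the misc interrupt handler queues the cleanup work only while the flag says the link is up, and the polling thread starts the reset pulse and rescan only while the flag says down but `nwl_pcie_link_up()` reports up. The userspace model below replays a sequence of link samples through those two rules. It is a simplification with hypothetical names: it ignores the sleeps and assumes each queued work item finishes, with the link still up after a rescan, before the next sample, which the real thread only approximates with its 2 s wait. Since `INIT_WORK()` runs only at the end of probe, it is also worth confirming that no link-down interrupt can be handled before that point.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Hypothetical model of the two rules the patch builds around link_status. */
enum link_action { ACT_NONE, ACT_CLEANUP, ACT_RESCAN };

/* One step: *link_status is the driver's recorded state, link_up is what the
 * hardware reports now. Updates *link_status the way the work items would,
 * assuming each one completes before the next step. */
enum link_action link_step(bool *link_status, bool link_up)
{
	if (*link_status && !link_up) {
		/* misc IRQ: MSGF_MISC_SR_LINK_DOWN while recorded up -> cleanup work */
		*link_status = false;
		return ACT_CLEANUP;
	}
	if (!*link_status && link_up) {
		/* polling thread: recorded down but link up -> reset pulse + rescan */
		*link_status = true;
		return ACT_RESCAN;
	}
	return ACT_NONE;
}

/* Replay n samples; out[i] is '.', 'C' (cleanup) or 'R' (rescan).
 * out must have room for n + 1 characters. */
void link_replay(const bool *samples, int n, bool initial, char *out)
{
	bool status = initial;
	int i;

	for (i = 0; i < n; i++) {
		enum link_action a = link_step(&status, samples[i]);

		out[i] = a == ACT_CLEANUP ? 'C' : a == ACT_RESCAN ? 'R' : '.';
	}
	out[n] = '\0';
}
```

For example, a link that is up at boot, drops for two samples and then returns produces exactly one cleanup followed by exactly one rescan, no matter how long it stays in either state.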
