Let me take you back to a time when our backend was on the edge of collapsing.

Everything started simple. We had a working payment system, users were growing, and we were pushing new features almost every week. On the surface, everything looked great. Then one day, users started complaining that they were being charged but not receiving any confirmation. Some even paid twice. Others didn’t get receipts. Our support team was overwhelmed with messages like, “I paid, but nothing happened.”

At first, we assumed the issue was temporary. Maybe a server was slow or some third-party API was misbehaving. But as more users started reporting the same thing, we knew it was deeper than that.

The Problem Was in How We Designed It

We sat down and walked through our entire payment flow. And what we saw made everything clear.

Every time a user made a payment, our backend was doing all of this in one go:

- Verifying the transaction with the payment gateway

- Updating the database with payment status

- Sending the confirmation email

- Pushing a mobile notification

- Logging the data for fraud checks

- Updating internal dashboards and reports

All of this was happening in a single thread, one after the other.

If even one of these steps slowed down, say the email service taking an extra second, the entire flow was delayed. If something crashed, the request failed completely. That meant the user got charged but never got a confirmation. The frontend then retried the request, and some people got charged multiple times.
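To make the failure mode concrete, here is a minimal sketch of that kind of inline flow. It is not our production code: the function names, the amount, and the two-attempt retry loop are all hypothetical, and the steps are reduced to three.

```python
class EmailServiceDown(Exception):
    """Raised by the hypothetical email step when its provider is unavailable."""


charges = []  # every successful charge against the user's card


def charge_card():
    charges.append(500)  # the gateway has taken the money at this point


def send_confirmation_email():
    raise EmailServiceDown("email provider timed out")


def push_notification():
    pass  # never reached while the email step keeps failing


def handle_payment_sync():
    """Run every step inline; an exception anywhere fails the whole request."""
    charge_card()
    send_confirmation_email()
    push_notification()
    return "confirmed"


# The frontend sees an error and retries once (hypothetical retry policy).
status = None
for attempt in range(2):
    try:
        status = handle_payment_sync()
        break
    except EmailServiceDown:
        status = None

print(charges, status)  # [500, 500] None
```

The user ends up charged twice and still has no confirmation, even though the only thing that actually broke was the email step.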

We had built a chain reaction system where one delay caused ten other problems. It wasn’t a bug. It was a design issue. Our system had no space to breathe.

We Knew We Had to Decouple

The biggest realization we had was this: we needed to break the chain.

We couldn’t let the core payment processing depend on everything else. The core flow, verifying and confirming the payment, should be fast, reliable, and independent.

That’s when one of our engineers suggested using Kafka.

At that time, not everyone in the team was familiar with Kafka. Some thought it was overkill. Others felt it was too complex. But the more we read, the more it made sense.

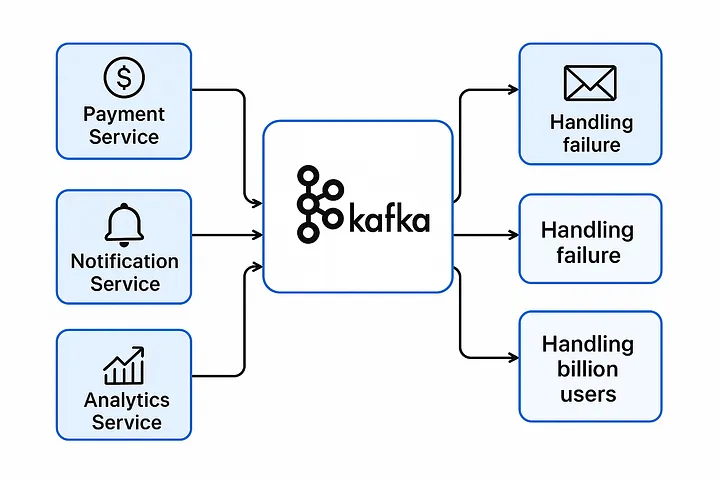

Kafka wasn’t just another service or a fancy new library. It was a message broker: a way to pass events from one part of the system to another without forcing them to run together.

Kafka, In Our Words

What Kafka gave us was very simple.

Imagine after a successful payment, instead of immediately sending an email, pushing notifications, and updating logs, we just do one thing:

We publish a message to Kafka saying:

“Payment successful for user A, amount ₹500”

That’s it.

Now all the other services (email, notification, fraud detection, analytics) just listen for that message. When they see it, they act. But if they are down, busy, or slow, the main flow doesn’t care. The user’s payment is already done. The core transaction is complete.
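The idea can be sketched without a broker at all. The snippet below uses a tiny in-memory stand-in for a topic, so `Topic`, `Consumer`, and `complete_payment` are illustrative names and not the Kafka client API. It only shows the shape of the flow: the payment path appends one event and returns, and each listener reads the log at its own pace.

```python
import json


class Topic:
    """Append-only list of serialized events, standing in for a Kafka topic."""

    def __init__(self):
        self.log = []

    def publish(self, event):
        self.log.append(json.dumps(event))
        return len(self.log) - 1  # offset of the new record


class Consumer:
    """Reads the topic from its own offset, independently of other consumers."""

    def __init__(self, topic, handler):
        self.topic = topic
        self.handler = handler
        self.offset = 0

    def poll(self):
        handled = 0
        while self.offset < len(self.topic.log):
            self.handler(json.loads(self.topic.log[self.offset]))
            self.offset += 1
            handled += 1
        return handled


payment_events = Topic()


def complete_payment(user, amount):
    """Core flow: publish one event and return; no email or push calls here."""
    payment_events.publish(
        {"type": "payment_success", "user": user, "amount": amount}
    )
    return "confirmed"


emails_sent, notifications_sent = [], []
email_service = Consumer(payment_events, lambda e: emails_sent.append(e["user"]))
notification_service = Consumer(
    payment_events, lambda e: notifications_sent.append(e["user"])
)

status = complete_payment("A", 500)     # returns before any listener has run
emails_before_poll = len(emails_sent)   # still 0 at this point

email_service.poll()                    # listeners act whenever they get to it
notification_service.poll()
```

The payment is confirmed while `emails_sent` is still empty; the email and notification only appear once their consumers poll the log.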

This simple idea changed everything.

The First Time We Tried It

The first thing we moved to Kafka was the email confirmation.

We updated our code: once a payment was complete, we wouldn’t directly call the email service anymore. Instead, we would publish a payment_success event to Kafka.

The email service was now a consumer of that event. It would pick it up, process it, and send the mail.

The result? Even if the email service went down, payments still worked. No more retries. No more blocking.
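Here is a rough sketch of why an email outage stopped hurting payments, assuming the consumer only advances its position after a mail has actually gone out. The list-based log, the `mail_server_up` flag, and the second user are hypothetical stand-ins, not our real service.

```python
log = []                # stand-in for the payment_success topic
mail_server_up = False  # simulate an email outage
sent = []


def send_mail(event):
    if not mail_server_up:
        raise ConnectionError("mail server unreachable")
    sent.append(event["user"])


def run_email_consumer(committed):
    """Process events from the committed offset; stop at the first failure."""
    while committed < len(log):
        try:
            send_mail(log[committed])
        except ConnectionError:
            break        # leave the offset where it is and try again later
        committed += 1   # advance only after the mail went out
    return committed


committed = 0

# Payments keep succeeding during the outage: they only append events.
log.append({"type": "payment_success", "user": "A", "amount": 500})
log.append({"type": "payment_success", "user": "B", "amount": 200})

committed = run_email_consumer(committed)
offset_during_outage = committed  # nothing sent, offset has not moved

# The mail server recovers and the consumer catches up from where it stopped.
mail_server_up = True
committed = run_email_consumer(committed)
```

One caveat with this pattern: a consumer that crashes after sending but before advancing its offset will send the same mail again on restart, so handlers are usually written to tolerate duplicates.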

Slowly, we moved everything else to this model: notifications, fraud checks, logging, and analytics all became independent consumers of the Kafka event.

The Difference Was Instant

Suddenly, our system became faster. The payment API returned responses almost instantly. Users no longer had to wait. Our support tickets dropped overnight. Even better, when we wanted to add new services (like sending SMS or triggering cashback), we didn’t need to change the core code.

All we did was add a new Kafka consumer that listened to payment_success.
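A small sketch of what "just add a consumer" means in practice. Each consumer group keeps its own read position on the same log, so a new group can be attached without touching the publisher. The in-memory `log`, `offsets`, and `handlers` below are illustrative stand-ins; starting a new group from offset 0 is an assumption, similar in spirit to a new Kafka consumer group configured with `auto.offset.reset=earliest`.

```python
log = []        # shared payment_success log
offsets = {}    # consumer group name -> next offset to read
handlers = {}   # consumer group name -> handler function


def publish(event):
    """The payment service's only job; it never changes when groups are added."""
    log.append(event)


def subscribe(group, handler):
    handlers[group] = handler
    offsets[group] = 0  # assumption: a new group starts from the beginning


def deliver():
    """Let every group catch up from its own offset."""
    for group, handler in handlers.items():
        while offsets[group] < len(log):
            handler(log[offsets[group]])
            offsets[group] += 1


emails, sms = [], []

subscribe("email", lambda e: emails.append(e["user"]))
publish({"type": "payment_success", "user": "A", "amount": 500})
deliver()

# Later, a new SMS service is plugged in. publish() is untouched.
subscribe("sms", lambda e: sms.append(e["user"]))
publish({"type": "payment_success", "user": "B", "amount": 200})
deliver()
```

Because the SMS group has its own offset, it sees both events, while the email group only receives the one it had not processed yet.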

That’s it. No extra load on the payment service. No new failure modes in the payment path. Just plug-and-play.

We were finally thinking like a scalable system — where services don’t block each other, but work like a team, each doing their part without slowing the others down.

Kafka Made Us Ready for Growth

As our user base grew from millions to tens of millions, Kafka handled it easily. We were publishing thousands of payment events per second — and Kafka didn’t even blink.

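We could scale each consumer service separately. Need to process more notifications? Add more consumers to that service’s group, up to the number of partitions on the topic. Email service slow? No problem, Kafka keeps the messages for as long as the retention settings allow, so the service can catch up. Want to replay old events for audit or backup? Kafka supports that too, because consumers can re-read from an earlier offset.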
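The scaling and replay behaviour can be sketched roughly as follows. This is a simplified model, not Kafka's actual partitioner or group assignment protocol: the CRC32 hash, the partition count of 4, and the round-robin `assign` function are assumptions chosen to keep the example short.

```python
import zlib

NUM_PARTITIONS = 4  # hypothetical partition count for the topic
partitions = [[] for _ in range(NUM_PARTITIONS)]


def publish(user_id, event):
    """Route by key so one user's events always land in the same partition."""
    p = zlib.crc32(user_id.encode()) % NUM_PARTITIONS  # stand-in hash
    partitions[p].append(event)
    return p


def assign(num_consumers):
    """Map each partition to one consumer in the group, round-robin."""
    return {p: p % num_consumers for p in range(NUM_PARTITIONS)}


def replay(partition, from_offset=0):
    """Re-read retained events from an earlier offset, e.g. for an audit."""
    return list(partitions[partition][from_offset:])


first = {"type": "payment_success", "user": "A", "amount": 500}
second = {"type": "payment_success", "user": "A", "amount": 120}
p1 = publish("A", first)
p2 = publish("A", second)

one_consumer = assign(1)     # a single consumer owns every partition
two_consumers = assign(2)    # adding a consumer splits the partitions
eight_consumers = assign(8)  # only 4 of the 8 get a partition; the rest idle

audit_copy = replay(p1)      # both events for user A, in publish order
```

Adding consumers spreads the partitions across them until there is one consumer per partition; beyond that, extra consumers in the same group have nothing to read, which is why the partition count sets the ceiling on parallelism.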

We had moved from a fragile setup to a robust event-driven system. All because we stopped trying to do everything in one place.

What Kafka Really Gave Us

It gave us speed, yes. It gave us reliability. It made scaling easier. But the biggest thing Kafka gave us? Peace of mind.

We were no longer scared to push changes. We no longer feared “what if email fails again?”. Each service had its space. Each part of the system was free to do its job.

And that freedom, to build confidently, to scale without fear, and to sleep better at night, was the real win.

Final Words

If you’re working on a payment system, or any system where many things happen after a user action, please don’t try to handle it all at once. You don’t need to write all the logic in one big API.

Take a step back. Let Kafka or any event-streaming platform help you. Publish one event. Let other services listen and act on it. Build cleanly, scale safely.

We learned this the hard way.

But you don’t have to.

Kafka didn’t just save our system.

It helped us grow. It helped us breathe.

And it made us feel ready for 10 million users and beyond.
