1. Install and configure hadoop-2.6.0 ($HADOOP_HOME must be set).

2. Download the mysql-5.6.22.tar.gz source code from http://dev.mysql.com/downloads/mysql/

#tar xf mysql-5.6.22.tar.gz

#cd mysql-5.6.22

#cmake .

#make

#export MYSQL_DIR=/path/to/mysql-5.6.22
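The later build steps rely on both $HADOOP_HOME and $MYSQL_DIR, so it may save time to check them before running cmake. A minimal sketch; the function name require_dir_var is made up for illustration and is not part of Hadoop or MySQL:

```shell
# require_dir_var NAME: succeed only if the variable called NAME is set
# and points at an existing directory. (Hypothetical helper.)
require_dir_var() {
    name=$1
    # Indirect lookup of the variable whose name is in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
        echo "$name is not set" >&2
        return 1
    fi
    if [ ! -d "$value" ]; then
        echo "$name=$value is not a directory" >&2
        return 1
    fi
    echo "$name=$value"
}

# Example usage:
#   require_dir_var HADOOP_HOME && require_dir_var MYSQL_DIR
```

If either check fails, fix the export first; otherwise the problem only shows up later in the applier build.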

3. Download mysql-connector-c-6.1.5-src.tar.gz from http://dev.mysql.com/downloads/connector/c/#downloads

#tar xf mysql-connector-c-6.1.5-src.tar.gz

#cd mysql-connector-c-6.1.5-src

#cmake .

#make

#make install

4. Download MySQL Hadoop Applier from http://labs.mysql.com.

#tar xf mysql-hadoop-applier-0.1.0-alpha.tar.gz

#cd mysql-hadoop-applier-0.1.0-alpha

5. Download FindHDFS.cmake from https://github.com/cloudera/impala/blob/master/cmake_modules/FindHDFS.cmake

#mv FindHDFS.cmake /path/to/mysql-hadoop-applier-0.1.0-alpha/MyCMake

#cmake . -DENABLE_DOWNLOADS=1

#make

#make install

The library 'libreplication', which Hadoop Applier uses, is in the lib directory.

Another way is:

#mkdir build

#cd build

#cmake .. -DCMAKE_MODULE_PATH:String=../MyCMake -DENABLE_DOWNLOADS=1

#make

#make install

#export PATH=$HADOOP_HOME/bin:$PATH

#cd build/examples/mysql2hdfs

#make

This produces an error:

[ 77%] Built target replication_static

Linking CXX executable happlier

/usr/bin/ld: warning: libmawt.so, needed by /opt/jdk1.7.0_51/jre/lib/amd64/libjawt.so, not found (try using -rpath or -rpath-link)

/opt/jdk1.7.0_51/jre/lib/amd64/libjawt.so: undefined reference to `awt_Unlock@SUNWprivate_1.1'

/opt/jdk1.7.0_51/jre/lib/amd64/libjawt.so: undefined reference to `awt_GetComponent@SUNWprivate_1.1'

/opt/jdk1.7.0_51/jre/lib/amd64/libjawt.so: undefined reference to `awt_Lock@SUNWprivate_1.1'

/opt/jdk1.7.0_51/jre/lib/amd64/libjawt.so: undefined reference to `awt_GetDrawingSurface@SUNWprivate_1.1'

/opt/jdk1.7.0_51/jre/lib/amd64/libjawt.so: undefined reference to `awt_FreeDrawingSurface@SUNWprivate_1.1'

collect2: ld returned 1 exit status

make[2]: *** [examples/mysql2hdfs/happlier] Error 1

make[1]: *** [examples/mysql2hdfs/CMakeFiles/happlier.dir/all] Error 2

make: *** [all] Error 2

The suggested solution is

export LD_LIBRARY_DIR=${JAVA_HOME}/jre/lib/amd64/xawt:${LD_LIBRARY_DIR}

but the error still occurs.

(I found that the variable name above is wrong. The correct one is

export LD_LIBRARY_PATH=${JAVA_HOME}/jre/lib/amd64/xawt:${LD_LIBRARY_PATH}

)
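The linker warning names libmawt.so, so it may be worth confirming which JRE directory actually holds that file before exporting anything. As far as I know the linker reads LD_LIBRARY_PATH and nothing looks at LD_LIBRARY_DIR, which would explain why the first attempt had no effect. A rough sketch, assuming a JDK 7 layout like the one in the log; prepend_lib_dir is a hypothetical helper name:

```shell
# prepend_lib_dir DIR: put DIR at the front of LD_LIBRARY_PATH,
# skipping it if it is already listed. (Hypothetical helper.)
prepend_lib_dir() {
    dir=$1
    case ":${LD_LIBRARY_PATH}:" in
        *":${dir}:"*)
            # Already present, nothing to do.
            ;;
        *)
            # Add a ':' separator only when the old value is non-empty.
            LD_LIBRARY_PATH="${dir}${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}"
            ;;
    esac
    export LD_LIBRARY_PATH
}

# Example usage, assuming JAVA_HOME points at the JDK from the log:
#   find "$JAVA_HOME/jre/lib" -name 'libmawt.so'
#   prepend_lib_dir "$JAVA_HOME/jre/lib/amd64/xawt"
```

Unlike a plain `dir:${LD_LIBRARY_PATH}` export, this avoids a trailing colon when the variable was empty, and it does not add the same directory twice.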

Running happlier then gives another error:

[root@dmining05 mysql2hdfs]# ./happlier

The default data warehouse directory in HDFS will be set to /usr/hive/warehouse

Change the default data warehouse directory? (Y or N) n

Enter either Y or N:N

loadFileSystems error:

(unable to get stack trace for java.lang.NoClassDefFoundError exception: ExceptionUtils::getStackTrace error.)

hdfsBuilderConnect(forceNewInstance=0, nn=default, port=0, kerbTicketCachePath=(NULL), userName=(NULL)) error:

(unable to get stack trace for java.lang.NoClassDefFoundError exception: ExceptionUtils::getStackTrace error.)

Couldnot connect to HDFS file system

The error may be due to the cause described here:

http://stackoverflow.com/questions/21064140/hadoop-c-hdfs-test-running-exception

#echo $CLASSPATH

/root/hadoop-2.6.0/etc/hadoop:/root/hadoop-2.6.0/share/hadoop/common/lib/*:/root/hadoop-2.6.0/share/hadoop/common/*:/root/hadoop-2.6.0/share/hadoop/hdfs:/root/hadoop-2.6.0/share/hadoop/hdfs/lib/*:/root/hadoop-2.6.0/share/hadoop/hdfs/*:/root/hadoop-2.6.0/share/hadoop/yarn/lib/*:/root/hadoop-2.6.0/share/hadoop/yarn/*:/root/hadoop-2.6.0/share/hadoop/mapreduce/lib/*:/root/hadoop-2.6.0/share/hadoop/mapreduce/*:/root/hadoop-2.6.0/contrib/capacity-scheduler/*.jar

For Hadoop versions 2.0.0 and above, the classpath doesn't support wildcard characters. If you add the jars explicitly to the CLASSPATH, your app will work.

Can JNI be made to honour wildcard expansion in the classpath?

One way is to create a file named setclass.sh:

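#!/bin/bash

# Build an explicit CLASSPATH from the output of 'hadoop classpath'.

hcp=$(hadoop classpath)

# Split on ':'. The expansion is left unquoted on purpose, so the shell expands wildcard entries such as .../lib/* into file names.

arr=(${hcp//:/ })

# Index of the last element.

len=${#arr[@]}

let len-=1

echo $len

j=0

export CLASSPATH=/etc/hadoop/conf

for i in ${arr[@]}

do

if [ $j -eq 0 ]; then

# The first element replaces the initial value.

export CLASSPATH=$i

elif [ $j -eq $len ]; then

# The last element is only printed, not appended.

echo $i

else

export CLASSPATH=$CLASSPATH:$i

fi

let j+=1

done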

Then source it instead of executing it, so that the exported CLASSPATH stays in the current shell:

#source setclass.sh

#echo $CLASSPATH

This works in the current session. To make it permanent, append an export line to ~/.bashrc:

#echo "export CLASSPATH=$CLASSPATH" >> ~/.bashrc

#source ~/.bashrc

Open another console to check it.
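The wildcard expansion can also be written as a small function that takes the classpath as an argument, which makes it easier to try on a sample string first. This is only a sketch under a few assumptions: expand_classpath is a hypothetical name, paths contain no spaces or colons, and only files ending in .jar are picked up for a "dir/*" entry.

```shell
# expand_classpath CP: print CP with every "dir/*" entry replaced by the
# .jar files found in dir. All other entries are kept unchanged.
# (Hypothetical helper, not part of Hadoop.)
expand_classpath() {
    result=""
    while IFS= read -r entry; do
        case $entry in
            */\*)
                # Strip the trailing '*' and let the shell list the jars.
                for jar in "${entry%\*}"*.jar; do
                    if [ -e "$jar" ]; then
                        result="${result:+$result:}$jar"
                    fi
                done
                ;;
            *)
                result="${result:+$result:}$entry"
                ;;
        esac
    done <<EOF
$(printf '%s' "$1" | tr ':' '\n')
EOF
    printf '%s\n' "$result"
}

# Example usage:
#   export CLASSPATH=$(expand_classpath "$(hadoop classpath)")
```

Some Hadoop releases also document a `hadoop classpath --glob` option that does the expansion itself. Whether your installation has it is worth checking with `hadoop classpath --help` before relying on it.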

The MySQL configuration in /etc/my.cnf looks like this:

[mysqld]

basedir = /usr/local/mysql

datadir = /var/lib/mysql

port = 3306

#socket = /var/lib/mysql/mysql.sock

user=mysql

bind_address = ::

#bin log conf

log_bin = masterbin_log

binlog_checksum = NONE

binlog_format = ROW

server-id = 2
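A typo in the binlog part of my.cnf is easy to miss, so a quick check of the file against the settings listed above can help. The sketch below is deliberately naive: check_binlog_conf is a hypothetical helper, it ignores [section] headers and !include directives, and it does not treat '-' and '_' as equivalent the way MySQL does.

```shell
# check_binlog_conf FILE: verify that FILE contains the binary-log settings
# listed above. Plain line matching only. (Hypothetical helper.)
check_binlog_conf() {
    file=$1
    status=0
    for setting in 'log_bin' 'binlog_checksum=NONE' 'binlog_format=ROW' 'server-id'; do
        # Strip all whitespace so "a = b" and "a=b" compare equal, then
        # require a line starting with the setting. Lines commented out
        # with '#' do not match.
        if ! sed 's/[[:space:]]//g' "$file" | grep -q "^${setting}"; then
            echo "missing: $setting" >&2
            status=1
        fi
    done
    return $status
}

# Example usage:
#   check_binlog_conf /etc/my.cnf && echo "binlog settings look fine"
```

On a running server the effective values can also be inspected from the mysql client with SHOW VARIABLES LIKE 'binlog%';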

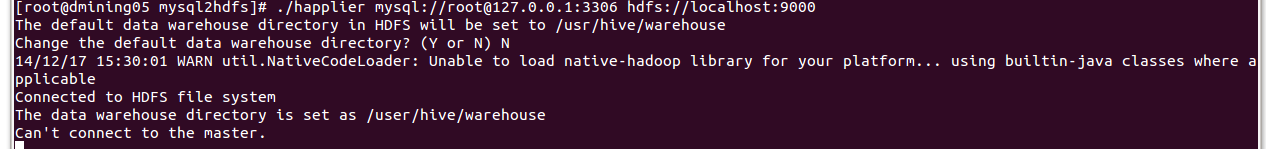

#./happlier mysql://root@127.0.0.1:3306 hdfs://localhost:9000

The message is

The right way is

#./happlier mysql://root:123456@127.0.0.1:3306 hdfs://localhost:9000

(You must provide the password to access MySQL.)

But this warning still appears:

WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
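This warning is printed when the JVM cannot load the native libhadoop library and falls back to the Java implementations. A first thing to look at is whether the library is present at all. A small sketch, assuming the usual $HADOOP_HOME/lib/native location; find_native_hadoop is a hypothetical helper name:

```shell
# find_native_hadoop DIR: print the libhadoop shared objects found in DIR
# and fail if there are none. (Hypothetical helper.)
find_native_hadoop() {
    found=1
    for lib in "$1"/libhadoop.so*; do
        if [ -e "$lib" ]; then
            echo "$lib"
            found=0
        fi
    done
    return $found
}

# Example usage:
#   find_native_hadoop "$HADOOP_HOME/lib/native" || echo "no native library found"
#   hadoop checknative -a
```

If the file exists but the warning remains, a mismatch between the library and the platform (for example 32-bit versus 64-bit) is one possible cause to check.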

