1. Audio HAL Architecture Analysis

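Android's audio architecture defines how audio functionality is implemented and points to the relevant source code involved in the implementation.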

Application framework

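The application framework includes the app code, which uses the android.media APIs to interact with the audio hardware. Internally, this code goes through JNI to reach the native code that interacts with the hardware.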

JNI

The JNI code associated with android.media calls into native code to access the audio hardware.

Native framework

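The native framework provides Binder IPC proxies for accessing the audio-specific services of the media server. It exposes two API classes, AudioRecord and AudioTrack, which handle audio capture and audio output on the Android platform.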

Binder IPC

Binder IPC enables communication across process boundaries.

Media server

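The media server contains the audio services, which are the code that actually interacts with the HAL implementation. AudioFlinger is the engine of the audio system: it manages the system's input and output audio streams, mixes audio data, and reads from and writes to the audio hardware to carry out input and output. AudioPolicyService is the policy control center of the audio system: it controls device selection and switching, volume control, and related functions.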
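To make "mixing" concrete, here is a minimal sketch of what a mixer does at its core: sum several PCM tracks sample by sample, apply a per-track gain, and saturate instead of letting values wrap around. It is not AudioFlinger's real mixer (which works on float buffers and also resamples and ramps volume); the function name `mixTracks` and the assumption that all tracks share sample rate and channel layout are illustrative only.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: mix 16-bit PCM tracks (same rate and channel layout)
// into one buffer. A missing gain defaults to 1.0; shorter tracks contribute
// silence once they run out.
std::vector<int16_t> mixTracks(const std::vector<std::vector<int16_t>>& tracks,
                               const std::vector<float>& gains) {
    std::size_t length = 0;
    for (const auto& t : tracks) length = std::max(length, t.size());

    std::vector<int16_t> out(length, 0);
    for (std::size_t i = 0; i < length; ++i) {
        // Accumulate in float so the intermediate sum cannot overflow int16_t.
        float acc = 0.0f;
        for (std::size_t t = 0; t < tracks.size(); ++t) {
            if (i < tracks[t].size()) {
                const float gain = t < gains.size() ? gains[t] : 1.0f;
                acc += gain * static_cast<float>(tracks[t][i]);
            }
        }
        // Saturate to the int16_t range rather than wrapping around.
        acc = std::max(-32768.0f, std::min(acc, 32767.0f));
        out[i] = static_cast<int16_t>(acc);
    }
    return out;
}
```

Saturation is the simplest way to keep loud overlapping tracks from producing wrap-around distortion; a production mixer would more likely keep float samples and clamp only at the final conversion.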

HAL

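The HAL defines the standard interface that the audio services call into; you must implement this interface for your audio hardware to function correctly.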

Kernel driver

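The audio driver interacts with the hardware and with the HAL implementation. You can use the Advanced Linux Sound Architecture (ALSA), the Open Sound System (OSS), or a custom driver.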

2. Audio HAL Source Code Analysis

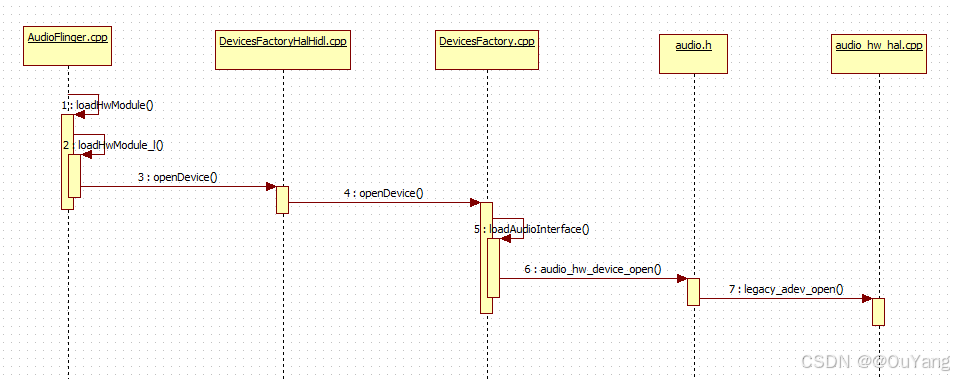

2.1 Opening the audio device

xref: /frameworks/av/services/audioflinger/AudioFlinger.cpp

```cpp
audio_module_handle_t AudioFlinger::loadHwModule(const char *name)
{
    if (name == NULL) {
        return AUDIO_MODULE_HANDLE_NONE;
    }
    if (!settingsAllowed()) {
        return AUDIO_MODULE_HANDLE_NONE;
    }
    Mutex::Autolock _l(mLock);
    return loadHwModule_l(name);
}

// loadHwModule_l() must be called with AudioFlinger::mLock held
audio_module_handle_t AudioFlinger::loadHwModule_l(const char *name)
{
    // Check whether mAudioHwDevs already holds an opened AudioHwDevice for this module
    for (size_t i = 0; i < mAudioHwDevs.size(); i++) {
        if (strncmp(mAudioHwDevs.valueAt(i)->moduleName(), name, strlen(name)) == 0) {
            ALOGW("loadHwModule() module %s already loaded", name);
            return mAudioHwDevs.keyAt(i);
        }
    }

    sp<DeviceHalInterface> dev;

    // mDevicesFactoryHal is the audio HIDL client; AudioFlinger talks to the HAL through it
    int rc = mDevicesFactoryHal->openDevice(name, &dev);
    if (rc) {
        ALOGE("loadHwModule() error %d loading module %s", rc, name);
        return AUDIO_MODULE_HANDLE_NONE;
    }

    mHardwareStatus = AUDIO_HW_INIT;
    rc = dev->initCheck();
    mHardwareStatus = AUDIO_HW_IDLE;
    if (rc) {
        ALOGE("loadHwModule() init check error %d for module %s", rc, name);
        return AUDIO_MODULE_HANDLE_NONE;
    }

    // Check and cache this HAL's level of support for master mute and master
    // volume. If this is the first HAL opened, and it supports the get
    // methods, use the initial values provided by the HAL as the current
    // master mute and volume settings.

    AudioHwDevice::Flags flags = static_cast<AudioHwDevice::Flags>(0);
    { // scope for auto-lock pattern
        AutoMutex lock(mHardwareLock);

        if (0 == mAudioHwDevs.size()) {
            mHardwareStatus = AUDIO_HW_GET_MASTER_VOLUME;
            float mv;
            if (OK == dev->getMasterVolume(&mv)) {
                mMasterVolume = mv;
            }

            mHardwareStatus = AUDIO_HW_GET_MASTER_MUTE;
            bool mm;
            if (OK == dev->getMasterMute(&mm)) {
                mMasterMute = mm;
            }
        }

        mHardwareStatus = AUDIO_HW_SET_MASTER_VOLUME;
        if (OK == dev->setMasterVolume(mMasterVolume)) {
            flags = static_cast<AudioHwDevice::Flags>(flags |
                    AudioHwDevice::AHWD_CAN_SET_MASTER_VOLUME);
        }

        mHardwareStatus = AUDIO_HW_SET_MASTER_MUTE;
        if (OK == dev->setMasterMute(mMasterMute)) {
            flags = static_cast<AudioHwDevice::Flags>(flags |
                    AudioHwDevice::AHWD_CAN_SET_MASTER_MUTE);
        }

        mHardwareStatus = AUDIO_HW_IDLE;
    }
    if (strcmp(name, AUDIO_HARDWARE_MODULE_ID_MSD) == 0) {
        // An MSD module is inserted before hardware modules in order to mix encoded streams.
        flags = static_cast<AudioHwDevice::Flags>(flags | AudioHwDevice::AHWD_IS_INSERT);
    }

    // Save the successfully opened device
    audio_module_handle_t handle = (audio_module_handle_t) nextUniqueId(AUDIO_UNIQUE_ID_USE_MODULE);
    mAudioHwDevs.add(handle, new AudioHwDevice(handle, name, dev, flags));

    ALOGI("loadHwModule() Loaded %s audio interface, handle %d", name, handle);

    return handle;
}
```

The function first searches mAudioHwDevs for a module that is already open and, if one is found, returns its handle directly. Otherwise it asks mDevicesFactoryHal to open the device and stores the result in mAudioHwDevs. dev is a DeviceHalInterface that ultimately represents the audio_hw_device built by the HAL; it is wrapped in an AudioHwDevice and added to mAudioHwDevs under a newly allocated handle.
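The find-or-open logic of loadHwModule_l can be reduced to a small registry, sketched below under stated assumptions: `HwModuleRegistry`, `ModuleHandle`, and the `opener` callback are hypothetical stand-ins for mAudioHwDevs, audio_module_handle_t, and the openDevice/initCheck pair, and a simple counter replaces nextUniqueId(). Note that the original compares names with strncmp over strlen(name), which is effectively a prefix match; the sketch uses exact equality for simplicity.

```cpp
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical handle type; 0 plays the role of AUDIO_MODULE_HANDLE_NONE.
using ModuleHandle = int;
constexpr ModuleHandle kModuleHandleNone = 0;

class HwModuleRegistry {
public:
    // opener returns true if the named HAL module was opened and initialized.
    explicit HwModuleRegistry(std::function<bool(const std::string&)> opener)
        : mOpener(std::move(opener)) {}

    ModuleHandle load(const std::string& name) {
        // Reuse an already opened module instead of opening it a second time.
        for (const auto& entry : mModules) {
            if (entry.second == name) return entry.first;
        }
        if (!mOpener(name)) return kModuleHandleNone;  // open or init check failed
        const ModuleHandle handle = mNextId++;          // stands in for nextUniqueId()
        mModules[handle] = name;
        return handle;
    }

    std::size_t size() const { return mModules.size(); }

private:
    std::function<bool(const std::string&)> mOpener;
    std::map<ModuleHandle, std::string> mModules;  // handle -> module name
    ModuleHandle mNextId = 1;
};
```

Keying by handle (as mAudioHwDevs does) keeps lookups by handle cheap for later calls such as openOutput_l, at the cost of a linear scan when searching by name, which is fine for the handful of modules a device has.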

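mDevicesFactoryHal can interact with the HAL in one of two ways: across processes via HIDL (DevicesFactoryHalHidl), or in-process via the traditional hw_get_module_xxx functions that load the HAL audio module directly (DevicesFactoryHalLocal). We analyze the HIDL path here.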

xref: /frameworks/av/media/libaudiohal/impl/DevicesFactoryHalHidl.cpp

```cpp
#if MAJOR_VERSION == 2
static IDevicesFactory::Device idFromHal(const char *name, status_t* status) {
    *status = OK;
    if (strcmp(name, AUDIO_HARDWARE_MODULE_ID_PRIMARY) == 0) {
        return IDevicesFactory::Device::PRIMARY;
    } else if(strcmp(name, AUDIO_HARDWARE_MODULE_ID_A2DP) == 0) {
        return IDevicesFactory::Device::A2DP;
    } else if(strcmp(name, AUDIO_HARDWARE_MODULE_ID_USB) == 0) {
        return IDevicesFactory::Device::USB;
    } else if(strcmp(name, AUDIO_HARDWARE_MODULE_ID_REMOTE_SUBMIX) == 0) {
        return IDevicesFactory::Device::R_SUBMIX;
    } else if(strcmp(name, AUDIO_HARDWARE_MODULE_ID_STUB) == 0) {
        return IDevicesFactory::Device::STUB;
    }
    ALOGE("Invalid device name %s", name);
    *status = BAD_VALUE;
    return {};
}
#elif MAJOR_VERSION >= 4
static const char* idFromHal(const char *name, status_t* status) {
    *status = OK;
    return name;
}
#endif

status_t DevicesFactoryHalHidl::openDevice(const char *name, sp<DeviceHalInterface> *device) {
    if (mDeviceFactories.empty()) return NO_INIT;
    status_t status;
    // Convert the module name into the HIDL device identifier
    auto hidlId = idFromHal(name, &status);
    if (status != OK) return status;
    Result retval = Result::NOT_INITIALIZED;
    // Try every device factory and open the device that matches hidlId
    for (const auto& factory : mDeviceFactories) {
        Return<void> ret = factory->openDevice(
                hidlId,
                [&](Result r, const sp<IDevice>& result) {
                    retval = r;
                    if (retval == Result::OK) {
                        *device = new DeviceHalHidl(result);
                    }
                });
        if (!ret.isOk()) return FAILED_TRANSACTION;
        switch (retval) {
            // Device was found and was initialized successfully.
            case Result::OK: return OK;
            // Device was found but failed to initialize.
            case Result::NOT_INITIALIZED: return NO_INIT;
            // Otherwise continue iterating.
            default: ;
        }
    }
    ALOGW("The specified device name is not recognized: \"%s\"", name);
    return BAD_VALUE;
}
```

openDevice is then invoked over HIDL to open the device on the server (HAL service) side. idFromHal converts the module name into the HIDL identifier: an IDevicesFactory::Device enum value in HAL version 2, and the name string itself from version 4 on. Each registered factory is tried in turn: Result::OK ends the search successfully, Result::NOT_INITIALIZED ends it with NO_INIT, a transport failure returns FAILED_TRANSACTION, and any other result moves on to the next factory.
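The factory loop above follows a "first definitive answer wins" rule. A minimal sketch of that control flow, with the HIDL transport removed: `Result`, `Status`, and `Factory` are hypothetical mirrors of the HIDL Result codes, AudioFlinger's status_t values, and IDevicesFactory, and `deviceId` stands in for the returned IDevice.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical mirrors of the HIDL Result codes and of the status_t values used above.
enum class Result { OK, NOT_INITIALIZED, INVALID_ARGUMENTS };
enum class Status { Ok, NoInit, BadValue };

// A factory tries to open the named module and, on success, fills *deviceId.
using Factory = std::function<Result(const std::string& name, int* deviceId)>;

Status openDevice(const std::vector<Factory>& factories, const std::string& name, int* deviceId) {
    if (factories.empty()) return Status::NoInit;
    for (const auto& factory : factories) {
        switch (factory(name, deviceId)) {
            case Result::OK:              return Status::Ok;      // found and initialized
            case Result::NOT_INITIALIZED: return Status::NoInit;  // found, but init failed
            default:                      break;                  // not this factory's module
        }
    }
    return Status::BadValue;  // no factory recognized the name
}
```

Stopping on NOT_INITIALIZED rather than continuing matters: the module exists but is broken, so trying other factories would only mask the real error.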

xref: /hardware/interfaces/audio/core/all-versions/default/DevicesFactory.cpp

```cpp
template <class DeviceShim, class Callback>
Return<void> DevicesFactory::openDevice(const char* moduleName, Callback _hidl_cb) {
    audio_hw_device_t* halDevice;
    Result retval(Result::INVALID_ARGUMENTS);
    sp<DeviceShim> result;
    // Load the audio interface (HAL module)
    int halStatus = loadAudioInterface(moduleName, &halDevice);
    if (halStatus == OK) {
        result = new DeviceShim(halDevice);
        retval = Result::OK;
    } else if (halStatus == -EINVAL) {
        retval = Result::NOT_INITIALIZED;
    }
    _hidl_cb(retval, result);
    return Void();
}

// static
int DevicesFactory::loadAudioInterface(const char* if_name, audio_hw_device_t** dev) {
    const hw_module_t* mod;
    int rc;

    // Load the HAL audio module
    rc = hw_get_module_by_class(AUDIO_HARDWARE_MODULE_ID, if_name, &mod);
    if (rc) {
        ALOGE("%s couldn't load audio hw module %s.%s (%s)", __func__, AUDIO_HARDWARE_MODULE_ID,
              if_name, strerror(-rc));
        goto out;
    }
    // Open the HAL audio device
    rc = audio_hw_device_open(mod, dev);
    if (rc) {
        ALOGE("%s couldn't open audio hw device in %s.%s (%s)", __func__, AUDIO_HARDWARE_MODULE_ID,
              if_name, strerror(-rc));
        goto out;
    }
    if ((*dev)->common.version < AUDIO_DEVICE_API_VERSION_MIN) {
        ALOGE("%s wrong audio hw device version %04x", __func__, (*dev)->common.version);
        rc = -EINVAL;
        audio_hw_device_close(*dev);
        goto out;
    }
    return OK;

out:
    *dev = NULL;
    return rc;
}
```

In the hw_get_module_by_class call above, AUDIO_HARDWARE_MODULE_ID is "audio" and if_name is the module name, such as primary, a2dp, or usb.
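Combining the class ID with the module name yields a name such as `audio.primary`, and HAL libraries are conventionally named `<class>.<module>.<variant>.so` (for example `audio.primary.default.so`). The sketch below only illustrates that naming scheme; the variant list is a hypothetical input, and the real loader's property lookups, search directories, and probing order are not modeled.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: list the library file names a loader would probe for
// a HAL module, trying each non-empty variant first and "default" last.
std::vector<std::string> candidateLibraryNames(const std::string& classId,
                                               const std::string& instance,
                                               const std::vector<std::string>& variants) {
    // "audio" + "primary" -> "audio.primary"; without an instance, just "audio".
    const std::string base = instance.empty() ? classId : classId + "." + instance;
    std::vector<std::string> names;
    for (const auto& variant : variants) {
        if (!variant.empty()) names.push_back(base + "." + variant + ".so");
    }
    names.push_back(base + ".default.so");  // generic fallback
    return names;
}
```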

xref: /hardware/libhardware/include/hardware/audio.h

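```cpp
static inline int audio_hw_device_open(const struct hw_module_t* module,
                                       struct audio_hw_device** device)
{
    return module->methods->open(module, AUDIO_HARDWARE_INTERFACE,
                                 TO_HW_DEVICE_T_OPEN(device));
}
```

audio_hw_device_open simply calls the open function registered in the module's methods table, so the real implementation lives wherever the platform defines its audio module structure, and it differs from platform to platform. Here we use the legacy_adev_open implementation as the example.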
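The dispatch through `module->methods->open`, together with the trick of embedding the generic device struct as the first member of a larger device struct, can be sketched with simplified stand-ins. All `toy_*` types and functions are hypothetical mirrors of hw_module_t, hw_module_methods_t, hw_device_t, and audio_hw_device; only the interface name string mirrors AUDIO_HARDWARE_INTERFACE.

```cpp
#include <cerrno>
#include <cstring>
#include <new>

struct toy_hw_module;

// Generic device header, like hw_device_t.
struct toy_hw_device {
    const toy_hw_module* module;
    int (*close)(toy_hw_device* dev);
};

// Methods table, like hw_module_methods_t.
struct toy_module_methods {
    int (*open)(const toy_hw_module* module, const char* id, toy_hw_device** device);
};

// Module descriptor, like hw_module_t.
struct toy_hw_module {
    const char* name;
    const toy_module_methods* methods;
};

// The audio device embeds the generic header as its FIRST member, so the
// toy_hw_device* handed back to callers can be cast to toy_audio_device*.
struct toy_audio_device {
    toy_hw_device common;
    float master_volume;
};

static int toy_close(toy_hw_device* dev) {
    delete reinterpret_cast<toy_audio_device*>(dev);
    return 0;
}

static int toy_open(const toy_hw_module* module, const char* id, toy_hw_device** device) {
    if (std::strcmp(id, "audio_hw_if") != 0) return -EINVAL;  // wrong interface requested
    auto* adev = new (std::nothrow) toy_audio_device{};
    if (adev == nullptr) return -ENOMEM;
    adev->common.module = module;
    adev->common.close = toy_close;
    adev->master_volume = 1.0f;
    *device = &adev->common;  // hand out the embedded header only
    return 0;
}

static const toy_module_methods kToyMethods = {toy_open};
const toy_hw_module kToyModule = {"toy audio HAL", &kToyMethods};

// Counterpart of audio_hw_device_open(): dispatch through the methods table.
int toy_audio_device_open(const toy_hw_module* module, toy_audio_device** device) {
    toy_hw_device* common = nullptr;
    const int rc = module->methods->open(module, "audio_hw_if", &common);
    *device = rc == 0 ? reinterpret_cast<toy_audio_device*>(common) : nullptr;
    return rc;
}
```

The cast back from the header to the full struct is only valid because the header is the first member of a standard-layout struct; legacy_adev_open relies on the same layout when it returns `&ladev->device.common`.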

xref: /hardware/libhardware_legacy/audio/audio_hw_hal.cpp

```cpp
static int legacy_adev_open(const hw_module_t* module, const char* name,
                            hw_device_t** device)
{
    struct legacy_audio_device *ladev;
    int ret;

    if (strcmp(name, AUDIO_HARDWARE_INTERFACE) != 0)
        return -EINVAL;

    ladev = (struct legacy_audio_device *)calloc(1, sizeof(*ladev));
    if (!ladev)
        return -ENOMEM;

    ladev->device.common.tag = HARDWARE_DEVICE_TAG;
    ladev->device.common.version = AUDIO_DEVICE_API_VERSION_2_0;
    ladev->device.common.module = const_cast<hw_module_t*>(module);
    ladev->device.common.close = legacy_adev_close;

    ladev->device.init_check = adev_init_check;
    ladev->device.set_voice_volume = adev_set_voice_volume;
    ladev->device.set_master_volume = adev_set_master_volume;
    ladev->device.get_master_volume = adev_get_master_volume;
    ladev->device.set_mode = adev_set_mode;
    ladev->device.set_mic_mute = adev_set_mic_mute;
    ladev->device.get_mic_mute = adev_get_mic_mute;
    ladev->device.set_parameters = adev_set_parameters;
    ladev->device.get_parameters = adev_get_parameters;
    ladev->device.get_input_buffer_size = adev_get_input_buffer_size;
    ladev->device.open_output_stream = adev_open_output_stream;
    ladev->device.close_output_stream = adev_close_output_stream;
    ladev->device.open_input_stream = adev_open_input_stream;
    ladev->device.close_input_stream = adev_close_input_stream;
    ladev->device.dump = adev_dump;

    ladev->hwif = createAudioHardware();
    if (!ladev->hwif) {
        ret = -EIO;
        goto err_create_audio_hw;
    }

    *device = &ladev->device.common;

    return 0;

err_create_audio_hw:
    free(ladev);
    return ret;
}

static struct hw_module_methods_t legacy_audio_module_methods = {
        open: legacy_adev_open
};

struct legacy_audio_module HAL_MODULE_INFO_SYM = {
    module: {
        common: {
            tag: HARDWARE_MODULE_TAG,
            module_api_version: AUDIO_MODULE_API_VERSION_0_1,
            hal_api_version: HARDWARE_HAL_API_VERSION,
            id: AUDIO_HARDWARE_MODULE_ID,
            name: "LEGACY Audio HW HAL",
            author: "The Android Open Source Project",
            methods: &legacy_audio_module_methods,
            dso : NULL,
            reserved : {0},
        },
    },
};
```

legacy_adev_open in audio_hw_hal.cpp allocates a legacy_audio_device structure. legacy_audio_device embeds an audio_hw_device structure that holds the various function pointers, most importantly open_output_stream and open_input_stream. AudioFlinger wraps the resulting device in an AudioHwDevice object and stores it in mAudioHwDevs.

The AudioHardware object created by createAudioHardware() is implemented in vendor-supplied source code, and the HAL accesses the hardware through it.

audio_hw_device: the unified interface the HAL exposes upward, called by AudioFlinger.

audio_stream_out: the audio_stream_out structure embedded in legacy_stream_out; it is the output (playback) interface the vendor exposes upward.

audio_stream_in: the audio_stream_in structure embedded in legacy_stream_in; it is the recording interface the vendor exposes upward and represents the input function.

2.2 Writing data

xref: /frameworks/av/services/audioflinger/AudioFlinger.cpp

```cpp
sp<AudioFlinger::ThreadBase> AudioFlinger::openOutput_l(audio_module_handle_t module,
                                                        audio_io_handle_t *output,
                                                        audio_config_t *config,
                                                        audio_devices_t devices,
                                                        const String8& address,
                                                        audio_output_flags_t flags)
{
    AudioHwDevice *outHwDev = findSuitableHwDev_l(module, devices);
    if (outHwDev == NULL) {
        return 0;
    }

    if (*output == AUDIO_IO_HANDLE_NONE) {
        *output = nextUniqueId(AUDIO_UNIQUE_ID_USE_OUTPUT);
    } else {
        // Audio Policy does not currently request a specific output handle.
        // If this is ever needed, see openInput_l() for example code.
        ALOGE("openOutput_l requested output handle %d is not AUDIO_IO_HANDLE_NONE", *output);
        return 0;
    }

    mHardwareStatus = AUDIO_HW_OUTPUT_OPEN;

    // FOR TESTING ONLY:
    // This if statement allows overriding the audio policy settings
    // and forcing a specific format or channel mask to the HAL/Sink device for testing.
    if (!(flags & (AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD | AUDIO_OUTPUT_FLAG_DIRECT))) {
        // Check only for Normal Mixing mode
        if (kEnableExtendedPrecision) {
            // Specify format (uncomment one below to choose)
            //config->format = AUDIO_FORMAT_PCM_FLOAT;
            //config->format = AUDIO_FORMAT_PCM_24_BIT_PACKED;
            //config->format = AUDIO_FORMAT_PCM_32_BIT;
            //config->format = AUDIO_FORMAT_PCM_8_24_BIT;
            // ALOGV("openOutput_l() upgrading format to %#08x", config->format);
        }
        if (kEnableExtendedChannels) {
            // Specify channel mask (uncomment one below to choose)
            //config->channel_mask = audio_channel_out_mask_from_count(4);  // for USB 4ch
            //config->channel_mask = audio_channel_mask_from_representation_and_bits(
            //        AUDIO_CHANNEL_REPRESENTATION_INDEX, (1 << 4) - 1);  // another 4ch example
        }
    }

    AudioStreamOut *outputStream = NULL;
    status_t status = outHwDev->openOutputStream(
            &outputStream,
            *output,
            devices,
            flags,
            config,
            address.string());

    mHardwareStatus = AUDIO_HW_IDLE;

    if (status == NO_ERROR) {
        // Create a different kind of thread depending on the output flags from the configuration
        if (flags & AUDIO_OUTPUT_FLAG_MMAP_NOIRQ) {
            sp<MmapPlaybackThread> thread =
                    new MmapPlaybackThread(this, *output, outHwDev, outputStream,
                                           devices, AUDIO_DEVICE_NONE, mSystemReady);
            mMmapThreads.add(*output, thread);
            ALOGV("openOutput_l() created mmap playback thread: ID %d thread %p",
                  *output, thread.get());
            return thread;
        } else {
            sp<PlaybackThread> thread;
            if (flags & AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD) {
                thread = new OffloadThread(this, outputStream, *output, devices, mSystemReady);
                ALOGV("openOutput_l() created offload output: ID %d thread %p",
                      *output, thread.get());
            } else if ((flags & AUDIO_OUTPUT_FLAG_DIRECT)
                    || !isValidPcmSinkFormat(config->format)
                    || !isValidPcmSinkChannelMask(config->channel_mask)) {
                thread = new DirectOutputThread(this, outputStream, *output, devices, mSystemReady);
                ALOGV("openOutput_l() created direct output: ID %d thread %p",
                      *output, thread.get());
            } else {
                thread = new MixerThread(this, outputStream, *output, devices, mSystemReady);
                ALOGV("openOutput_l() created mixer output: ID %d thread %p",
                      *output, thread.get());
            }
            mPlaybackThreads.add(*output, thread);
            mPatchPanel.notifyStreamOpened(outHwDev, *output);
            return thread;
        }
    }

    return 0;
}
```

In AudioFlinger::openOutput_l, the output stream opened through the HAL device's open_output_stream is wrapped in a playback thread: a MixerThread in the normal case, or an MmapPlaybackThread, OffloadThread, or DirectOutputThread depending on the flags and on whether the format and channel mask are valid for the mixer. Each thread is registered under its output handle (in mPlaybackThreads, or mMmapThreads for MMAP threads), so every playback thread corresponds to exactly one output stream.
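The thread selection in openOutput_l is an ordered rule chain in which the first match wins. The sketch below restates it as a pure function; the flag bit values are hypothetical stand-ins (not the real audio_output_flags_t values), and the two booleans replace isValidPcmSinkFormat() and isValidPcmSinkChannelMask().

```cpp
#include <cstdint>

// Hypothetical flag bits standing in for AUDIO_OUTPUT_FLAG_* values.
enum OutputFlag : uint32_t {
    kFlagDirect          = 1u << 0,
    kFlagCompressOffload = 1u << 1,
    kFlagMmapNoIrq       = 1u << 2,
};

enum class ThreadKind { Mmap, Offload, Direct, Mixer };

// Same precedence as openOutput_l(): MMAP, then offload, then direct, else mixer.
ThreadKind selectPlaybackThread(uint32_t flags, bool validPcmSinkFormat,
                                bool validPcmSinkChannelMask) {
    if (flags & kFlagMmapNoIrq) return ThreadKind::Mmap;
    if (flags & kFlagCompressOffload) return ThreadKind::Offload;
    // A format or channel mask the mixer cannot handle also forces a direct thread.
    if ((flags & kFlagDirect) || !validPcmSinkFormat || !validPcmSinkChannelMask) {
        return ThreadKind::Direct;
    }
    return ThreadKind::Mixer;
}
```

The order matters: an output that is both offloaded and direct becomes an OffloadThread, because the offload test runs first.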

xref: /frameworks/av/services/audioflinger/Threads.cpp

```cpp
ssize_t AudioFlinger::PlaybackThread::threadLoop_write()
{
    LOG_HIST_TS();
    mInWrite = true;
    ssize_t bytesWritten;
    const size_t offset = mCurrentWriteLength - mBytesRemaining;

    // If an NBAIO sink is present, use it to write the normal mixer's submix
    if (mNormalSink != 0) {

        const size_t count = mBytesRemaining / mFrameSize;

        ATRACE_BEGIN("write");
        // update the setpoint when AudioFlinger::mScreenState changes
        uint32_t screenState = AudioFlinger::mScreenState;
        if (screenState != mScreenState) {
            mScreenState = screenState;
            MonoPipe *pipe = (MonoPipe *)mPipeSink.get();
            if (pipe != NULL) {
                pipe->setAvgFrames((mScreenState & 1) ?
                        (pipe->maxFrames() * 7) / 8 : mNormalFrameCount * 2);
            }
        }
        // Write the data out for playback
        ssize_t framesWritten = mNormalSink->write((char *)mSinkBuffer + offset, count);
        ATRACE_END();
        if (framesWritten > 0) {
            bytesWritten = framesWritten * mFrameSize;
#ifdef TEE_SINK
            mTee.write((char *)mSinkBuffer + offset, framesWritten);
#endif
        } else {
            bytesWritten = framesWritten;
        }
    // otherwise use the HAL / AudioStreamOut directly
    } else {
        // Direct output and offload threads

        if (mUseAsyncWrite) {
            ALOGW_IF(mWriteAckSequence & 1, "threadLoop_write(): out of sequence write request");
            mWriteAckSequence += 2;
            mWriteAckSequence |= 1;
            ALOG_ASSERT(mCallbackThread != 0);
            mCallbackThread->setWriteBlocked(mWriteAckSequence);
        }
        // FIXME We should have an implementation of timestamps for direct output threads.
        // They are used e.g for multichannel PCM playback over HDMI.
        bytesWritten = mOutput->write((char *)mSinkBuffer + offset, mBytesRemaining);

        if (mUseAsyncWrite &&
                ((bytesWritten < 0) || (bytesWritten == (ssize_t)mBytesRemaining))) {
            // do not wait for async callback in case of error of full write
            mWriteAckSequence &= ~1;
            ALOG_ASSERT(mCallbackThread != 0);
            mCallbackThread->setWriteBlocked(mWriteAckSequence);
        }
    }

    mNumWrites++;
    mInWrite = false;
    mStandby = false;
    return bytesWritten;
}
```

Mixer threads write the mixed data through mNormalSink->write, while direct and offload threads call mOutput->write directly. Either way, the data eventually reaches the write function of the audio_stream_out structure returned by adev_open_output_stream.
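The offset arithmetic in threadLoop_write (`offset = mCurrentWriteLength - mBytesRemaining`, frames in, bytes out) exists because a sink may accept fewer frames than offered. The sketch below folds that bookkeeping into one loop, assuming the caller subtracts each write's byte count from the remaining total; `ShortWriteSink` and `drainBuffer` are hypothetical names, and the sink deliberately accepts at most a few frames per call to force short writes.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical sink that accepts at most maxFramesPerWrite frames per call,
// standing in for a HAL stream or pipe that may perform short writes.
struct ShortWriteSink {
    std::size_t maxFramesPerWrite;
    std::vector<uint8_t> received;

    // Returns the number of frames consumed.
    std::ptrdiff_t write(const uint8_t* data, std::size_t frames, std::size_t frameSize) {
        const std::size_t n = std::min(frames, maxFramesPerWrite);
        received.insert(received.end(), data, data + n * frameSize);
        return static_cast<std::ptrdiff_t>(n);
    }
};

// Drains one mixed buffer using threadLoop_write()-style bookkeeping.
// Returns how many write calls were needed.
int drainBuffer(const std::vector<uint8_t>& sinkBuffer, std::size_t frameSize,
                ShortWriteSink& sink) {
    const std::size_t currentWriteLength = sinkBuffer.size();
    std::size_t bytesRemaining = currentWriteLength;
    int writes = 0;
    while (bytesRemaining > 0) {
        const std::size_t offset = currentWriteLength - bytesRemaining;  // bytes already sent
        const std::size_t count = bytesRemaining / frameSize;            // whole frames left
        const std::ptrdiff_t framesWritten =
                sink.write(sinkBuffer.data() + offset, count, frameSize);
        ++writes;
        if (framesWritten <= 0) break;  // error or no progress: stop rather than spin
        bytesRemaining -= static_cast<std::size_t>(framesWritten) * frameSize;
    }
    return writes;
}
```

For example, a 40-byte buffer of 4-byte frames (10 frames) drained into a sink that takes 3 frames per call needs four writes: 3, 3, 3, then 1 frame.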

xref: /hardware/libhardware_legacy/audio/audio_hw_hal.cpp

```cpp
static int adev_open_output_stream(struct audio_hw_device *dev,
                                   audio_io_handle_t handle,
                                   audio_devices_t devices,
                                   audio_output_flags_t flags,
                                   struct audio_config *config,
                                   struct audio_stream_out **stream_out,
                                   const char *address __unused)
{
    struct legacy_audio_device *ladev = to_ladev(dev);
    status_t status;
    struct legacy_stream_out *out;
    int ret;

    out = (struct legacy_stream_out *)calloc(1, sizeof(*out));
    if (!out)
        return -ENOMEM;

    devices = convert_audio_device(devices, HAL_API_REV_2_0, HAL_API_REV_1_0);

    out->legacy_out = ladev->hwif->openOutputStreamWithFlags(devices, flags,
                                                             (int *) &config->format,
                                                             &config->channel_mask,
                                                             &config->sample_rate, &status);
    if (!out->legacy_out) {
        ret = status;
        goto err_open;
    }

    out->stream.common.get_sample_rate = out_get_sample_rate;
    out->stream.common.set_sample_rate = out_set_sample_rate;
    out->stream.common.get_buffer_size = out_get_buffer_size;
    out->stream.common.get_channels = out_get_channels;
    out->stream.common.get_format = out_get_format;
    out->stream.common.set_format = out_set_format;
    out->stream.common.standby = out_standby;
    out->stream.common.dump = out_dump;
    out->stream.common.set_parameters = out_set_parameters;
    out->stream.common.get_parameters = out_get_parameters;
    out->stream.common.add_audio_effect = out_add_audio_effect;
    out->stream.common.remove_audio_effect = out_remove_audio_effect;
    out->stream.get_latency = out_get_latency;
    out->stream.set_volume = out_set_volume;
    out->stream.write = out_write;
    out->stream.get_render_position = out_get_render_position;
    out->stream.get_next_write_timestamp = out_get_next_write_timestamp;

    // Hand &out->stream back through *stream_out, i.e. as the audio_stream_out
    *stream_out = &out->stream;
    return 0;

err_open:
    free(out);
    *stream_out = NULL;
    return ret;
}
```

&out->stream is handed back through the *stream_out pointer as the audio_stream_out. When the application plays audio, out_write is eventually called to write the data.

```cpp
static ssize_t out_write(struct audio_stream_out *stream, const void* buffer,
                         size_t bytes)
{
    struct legacy_stream_out *out =
            reinterpret_cast<struct legacy_stream_out *>(stream);
    return out->legacy_out->write(buffer, bytes);
}
```

out_write forwards the call to the write method of the library supplied by the vendor platform (out->legacy_out->write).
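out_write is a trampoline: a C function pointer that recovers the wrapper struct from the `audio_stream_out*` it receives and forwards to a C++ object. A minimal sketch of that adapter, where `toy_stream_out`, `toy_legacy_stream_out`, `VendorStreamOut`, and `RecordingStream` are hypothetical stand-ins for audio_stream_out, legacy_stream_out, the vendor's AudioStreamOut, and a fake vendor implementation.

```cpp
#include <cstddef>
#include <string>

// Hypothetical C++ vendor stream interface (role of AudioStreamOut behind hwif).
class VendorStreamOut {
public:
    virtual ~VendorStreamOut() = default;
    virtual std::ptrdiff_t write(const void* buffer, std::size_t bytes) = 0;
};

// Hypothetical C-style stream interface (role of audio_stream_out).
struct toy_stream_out {
    std::ptrdiff_t (*write)(toy_stream_out* stream, const void* buffer, std::size_t bytes);
};

// The C struct comes first, so a toy_stream_out* can be cast back to the
// wrapper, just as out_write() casts audio_stream_out* to legacy_stream_out*.
struct toy_legacy_stream_out {
    toy_stream_out stream;
    VendorStreamOut* legacy_out;
};

static std::ptrdiff_t toy_out_write(toy_stream_out* stream, const void* buffer,
                                    std::size_t bytes) {
    auto* out = reinterpret_cast<toy_legacy_stream_out*>(stream);
    return out->legacy_out->write(buffer, bytes);  // forward to the C++ object
}

// Wire a wrapper to its C++ implementation, like adev_open_output_stream does.
void bindStream(toy_legacy_stream_out* wrapper, VendorStreamOut* impl) {
    wrapper->stream.write = toy_out_write;
    wrapper->legacy_out = impl;
}

// Fake vendor stream that records everything written to it.
class RecordingStream : public VendorStreamOut {
public:
    std::ptrdiff_t write(const void* buffer, std::size_t bytes) override {
        data.append(static_cast<const char*>(buffer), bytes);
        return static_cast<std::ptrdiff_t>(bytes);
    }
    std::string data;
};
```

The framework only ever sees the C struct, which keeps the HAL ABI free of C++ types while still letting the vendor implement streams as C++ classes.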

2.3 Reading data

xref: /hardware/libhardware_legacy/audio/audio_hw_hal.cpp

```cpp
static int adev_open_input_stream(struct audio_hw_device *dev,
                                  audio_io_handle_t handle,
                                  audio_devices_t devices,
                                  struct audio_config *config,
                                  struct audio_stream_in **stream_in,
                                  audio_input_flags_t flags __unused,
                                  const char *address __unused,
                                  audio_source_t source __unused)
{
    struct legacy_audio_device *ladev = to_ladev(dev);
    status_t status;
    struct legacy_stream_in *in;
    int ret;

    in = (struct legacy_stream_in *)calloc(1, sizeof(*in));
    if (!in)
        return -ENOMEM;

    devices = convert_audio_device(devices, HAL_API_REV_2_0, HAL_API_REV_1_0);

    // Open the vendor input stream through ladev->hwif and keep it in in->legacy_in
    in->legacy_in = ladev->hwif->openInputStream(devices, (int *) &config->format,
                                                 &config->channel_mask, &config->sample_rate,
                                                 &status, (AudioSystem::audio_in_acoustics)0);
    if (!in->legacy_in) {
        ret = status;
        goto err_open;
    }

    in->stream.common.get_sample_rate = in_get_sample_rate;
    in->stream.common.set_sample_rate = in_set_sample_rate;
    in->stream.common.get_buffer_size = in_get_buffer_size;
    in->stream.common.get_channels = in_get_channels;
    in->stream.common.get_format = in_get_format;
    in->stream.common.set_format = in_set_format;
    in->stream.common.standby = in_standby;
    in->stream.common.dump = in_dump;
    in->stream.common.set_parameters = in_set_parameters;
    in->stream.common.get_parameters = in_get_parameters;
    in->stream.common.add_audio_effect = in_add_audio_effect;
    in->stream.common.remove_audio_effect = in_remove_audio_effect;
    in->stream.set_gain = in_set_gain;
    in->stream.read = in_read;
    in->stream.get_input_frames_lost = in_get_input_frames_lost;

    // Hand &in->stream back through *stream_in, linking the audio_stream_in
    // to the stream opened via ladev->hwif
    *stream_in = &in->stream;
    return 0;

err_open:
    free(in);
    *stream_in = NULL;
    return ret;
}
```

When recording starts, adev_open_input_stream is called in the HAL.

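```cpp
static ssize_t in_read(struct audio_stream_in *stream, void* buffer,
                       size_t bytes)
{
    struct legacy_stream_in *in =
            reinterpret_cast<struct legacy_stream_in *>(stream);
    return in->legacy_in->read(buffer, bytes);
}
```

During recording, the HAL's in_read is called. Each call fills a void *buffer whose size depends on the capture sample rate, the buffer duration, the bit depth, and the channel count (usually two channels). On Qualcomm platforms, hwif is AudioHardwareALSA and read is AudioStreamInALSA::read, which ultimately calls pcm_read to read the data.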
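As a worked example of that dependency: frames = sample rate × duration, and bytes = frames × channels × bytes per sample. At 48000 Hz, 20 ms, stereo, 16-bit this gives 960 frames × 2 × 2 = 3840 bytes. The helper below is a hypothetical illustration of the arithmetic only; real HALs report their size through get_input_buffer_size and often round to the hardware period size, and formats whose container is wider than the sample (such as 8.24) are not handled.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical helper: bytes needed for durationMs of interleaved PCM capture.
std::size_t captureBufferBytes(uint32_t sampleRateHz, uint32_t durationMs,
                               uint32_t channels, uint32_t bitsPerSample) {
    // frames = sampleRate * duration; integer division truncates partial frames.
    const std::size_t frames = static_cast<std::size_t>(sampleRateHz) * durationMs / 1000;
    return frames * channels * (bitsPerSample / 8);
}
```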
