This post optimizes how the work is scheduled so that memory copies overlap with computation.

Single-stream synchronous calls

```cpp
/*
Single stream, synchronous
*/
void CallKmeansSync()
{
    // TODO: init host memory
    float* h_Src/*[Coords][SrcCount]*/,
        * h_Clusters/*[ClusterCount][Coords]*/;
    int* h_MemberShip/*[kSrcCount]*/,
        * h_MemberCount/*[kClusterCount]*/;
    h_Src = (float*)malloc(kSrcCount * kCoords * sizeof(float));
    h_Clusters = (float*)malloc(kClusterCount * kCoords * sizeof(float));/*[kClusterCount][kCoords]*/
    // Initial centroids: every coordinate of cluster i is 100 * i + 10
    for (size_t i = 0; i < kClusterCount; i++)
    {
        for (int j = 0; j < kCoords; ++j)
        {
            h_Clusters[i * kCoords + j] = float(100 * i + 10);
        }
    }
    // Test data: kSrcCount / kClusterCount points per group, group i placed at i * 100 + 0.5
    for (size_t i = 0; i < kClusterCount; i++)
    {
        for (size_t j = 0; j < (kSrcCount / kClusterCount); j++)
        {
            for (size_t iDim = 0; iDim < kCoords; iDim++)
            {
                h_Src[iDim * kSrcCount + i * (kSrcCount / kClusterCount) + j] = i * 100 + 0.5f;// -(float)((rand() % 100) / 100);
            }
        }
    }
    h_MemberShip = (int*)malloc(kSrcCount * sizeof(int));
    // memset writes the byte 9 into every byte, so each int becomes 0x09090909,
    // which acts as "unassigned" as long as kClusterCount is smaller than that
    memset(h_MemberShip, 9, kSrcCount * sizeof(int));
    h_MemberCount = (int*)malloc(kClusterCount * sizeof(int));
    memset(h_MemberCount, 0, kClusterCount * sizeof(int));

    // TODO: init device memory
    float* d_pSrc/*[Coords][SrcCount]*/,
        * d_pClusters/*[ClusterCount][Coords]*/;
    int* d_pChanged/*[1]*/,
        * d_pMemberShip/*[kSrcCount]*/,
        * d_pMemberCount/*[kClusterCount]*/;
    cudaMalloc(&d_pSrc, kSrcCount * kCoords * sizeof(float));
    cudaMemcpy(d_pSrc, h_Src, kSrcCount * kCoords * sizeof(float), cudaMemcpyHostToDevice);
    cudaMalloc(&d_pClusters, kClusterCount * kCoords * sizeof(float));
    cudaMalloc(&d_pMemberShip, kSrcCount * sizeof(int));
    cudaMemcpy(d_pMemberShip, h_MemberShip, kSrcCount * sizeof(int), cudaMemcpyHostToDevice);
    cudaMalloc(&d_pMemberCount, kClusterCount * sizeof(int));
    cudaMemcpy(d_pMemberCount, h_MemberCount, kClusterCount * sizeof(int), cudaMemcpyHostToDevice);
    cudaMalloc(&d_pChanged, sizeof(int));
    // cudaMalloc does not zero memory, so clear the change counter before the first kernel
    cudaMemset(d_pChanged, 0, sizeof(int));

    // TODO: find the points
    int itCount = 0;
    int iChanged = 0;
    // Streams and events are created but not used in this synchronous version
    cudaStream_t sMember, sNewCluster;
    cudaStreamCreate(&sMember);
    cudaStreamCreate(&sNewCluster);
    cudaEvent_t eMember, eNewCluster;
    cudaEventCreate(&eMember);
    cudaEventCreate(&eNewCluster);
    do
    {
        {
            const int UnrollScale = 8;
            // Step 1 (s1): upload centroids and classify every point into a cluster
            cudaMemcpy(d_pClusters, h_Clusters, kClusterCount * kCoords * sizeof(float), cudaMemcpyHostToDevice);//s1
            cudaMemset(d_pMemberCount, 0, kClusterCount * sizeof(int));//s1
            kKmeansClassifyMembershipMath<kCoords, UnrollScale, kClusterCount, kClusterUnroll><<<kGridCount * 1, kBlockSize / UnrollScale, kClusterCount * kCoords * sizeof(float)>>>
                (kSrcCount, kCoords, kClusterCount, d_pSrc, d_pMemberShip, d_pClusters, d_pChanged);
            checkCUDARtn(cudaDeviceSynchronize());
            cudaMemcpy(&iChanged, d_pChanged, sizeof(int), cudaMemcpyDeviceToHost);//s1
            cudaMemset(d_pChanged, 0, sizeof(int));//s1
            // Step 2 (s2): accumulate per-cluster coordinate sums and member counts
            cudaMemset(d_pClusters, 0, kClusterCount * kCoords * sizeof(float));//s2
            const int kUnrollAdvUNROLLSCALE = 16;
            kkKmeansUnrollAdv<kBlockSize / kExecuteScale, kUnrollAdvUNROLLSCALE, kCoords, kClusterCount><<<kGridCount * kExecuteScale / kUnrollAdvUNROLLSCALE, kBlockSize / kExecuteScale, (kBlockSize * kCoords + kBlockSize) * sizeof(float) / kExecuteScale>>>
                (kSrcCount, kCoords, kClusterCount, d_pSrc, d_pMemberShip, d_pClusters, d_pMemberCount);//s2
            checkCUDARtn(cudaDeviceSynchronize());
            cudaMemcpy(h_MemberCount, d_pMemberCount, kClusterCount * sizeof(int), cudaMemcpyDeviceToHost);//s2
            cudaMemcpy(h_Clusters, d_pClusters, kClusterCount * kCoords * sizeof(float), cudaMemcpyDeviceToHost);//s2
            // New centroid = coordinate sum / member count; empty clusters are set to 0
            for (size_t i = 0; i < kClusterCount; i++)//s2
            {
                for (int j = 0; j < kCoords; ++j)
                {
                    h_Clusters[i * kCoords + j] = (h_MemberCount[i] == 0) ? 0 : (h_Clusters[i * kCoords + j] / h_MemberCount[i]);
                }
            }
        }
    } while (/*(0 != iChanged) &&*/ itCount++ < 8); // 9 iterations; the convergence test is disabled
    std::cout << "it count " << itCount << std::endl;

    cudaEventDestroy(eMember);
    cudaEventDestroy(eNewCluster);
    cudaStreamDestroy(sMember);
    cudaStreamDestroy(sNewCluster);
    free(h_Src);
    free(h_Clusters);
    free(h_MemberShip);
    free(h_MemberCount);
    cudaFree(d_pSrc);
    cudaFree(d_pClusters);
    cudaFree(d_pChanged);
    cudaFree(d_pMemberShip);
    cudaFree(d_pMemberCount);
}
```

```cpp
void CallFunckmeans(int FuncIndex)
{
    const int kSingleStm = 0;
    const int kMultStm = 1;
    const int kPrevCopy = 2;
    const int kNonSync = 3;
    const int kSync = 4;
    if (FuncIndex == kSingleStm)
    {
        CallKmeansSingleStm();
    }
    else if (FuncIndex == kMultStm)
    {
        CallKmeansMultOverlap();
    }
    else if (FuncIndex == kPrevCopy)
    {
        CallKmeansMultPrevCopy();
    }
    else if (FuncIndex == kNonSync)
    {
        CallKmeansMultNonSync();
    }
    else if (FuncIndex == kSync)
    {
        CallKmeansSync();
    }
    return;
}
```

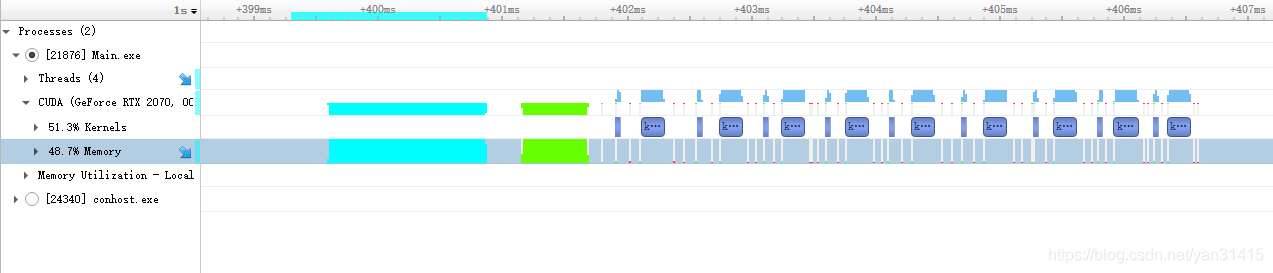

Scheduling timeline

Total time: 7 ms. The kernels do not run back to back because of the synchronization between them.

Single-stream asynchronous calls

```cpp
/*
Single stream, asynchronous
*/
void CallKmeansSingleStm()
{
    // TODO: init host memory
    float* h_Src/*[Coords][SrcCount]*/,
        * h_Clusters/*[ClusterCount][Coords]*/;
    int* h_MemberShip/*[kSrcCount]*/,
        * h_MemberCount/*[kClusterCount]*/;
    //h_Src = (float*)malloc(kSrcCount * kCoords * sizeof(float));
    // Page-locked (pinned) host memory: needed for cudaMemcpyAsync to be truly
    // asynchronous and to overlap with kernel execution
    cudaMallocHost(&h_Src, kSrcCount * kCoords * sizeof(float));
    h_Clusters = (float*)malloc(kClusterCount * kCoords * sizeof(float));/*[kClusterCount][kCoords]*/
    for (size_t i = 0; i < kClusterCount; i++)
    {
        for (int j = 0; j < kCoords; ++j)
        {
            h_Clusters[i * kCoords + j] = float(100 * i + 10);
        }
    }
    for (size_t i = 0; i < kClusterCount; i++)
    {
        for (size_t j = 0; j < (kSrcCount / kClusterCount); j++)
        {
            for (size_t iDim = 0; iDim < kCoords; iDim++)
            {
                h_Src[iDim * kSrcCount + i * (kSrcCount / kClusterCount) + j] = i * 100 + 0.5f;// -(float)((rand() % 100) / 100);
            }
        }
    }
    //h_MemberShip = (int*)malloc(kSrcCount * sizeof(int));
    cudaMallocHost(&h_MemberShip, kSrcCount * sizeof(int));
    memset(h_MemberShip, 9, kSrcCount * sizeof(int));
    h_MemberCount = (int*)malloc(kClusterCount * sizeof(int));
    memset(h_MemberCount, 0, kClusterCount * sizeof(int));

    // TODO: init stream
    cudaStream_t stm;
    cudaStreamCreate(&stm);
    const int EventNum = 10;
    cudaEvent_t event[EventNum];
    for (size_t i = 0; i < EventNum; i++)
    {
        cudaEventCreate(&event[i]);
    }

    // TODO: init device memory
    float* d_pSrc/*[Coords][SrcCount]*/,
        * d_pClusters/*[ClusterCount][Coords]*/;
    int* d_pChanged/*[1]*/,
        * d_pMemberShip/*[kSrcCount]*/,
        * d_pMemberCount/*[kClusterCount]*/;
    cudaMalloc(&d_pSrc, kSrcCount * kCoords * sizeof(float));
    cudaMalloc(&d_pClusters, kClusterCount * k
```

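This article examines four GPU scheduling schemes: single-stream synchronous calls, single-stream asynchronous calls, multi-stream execution (OpenMP starts 4 threads, each holding its own stream), and multi-stream execution without event synchronization. Using a KMeans implementation, it compares their execution times and how well memory copies overlap with computation. The results show that multi-stream execution with event synchronization effectively improves execution efficiency, although the stability of its results still needs improvement.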
