Using grok in an ingest pipeline with Filebeat

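I didn't want to run Logstash and preferred the lazy route of using Filebeat on its own. Newer versions of Filebeat can send events through an Elasticsearch ingest pipeline, which supports grok.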

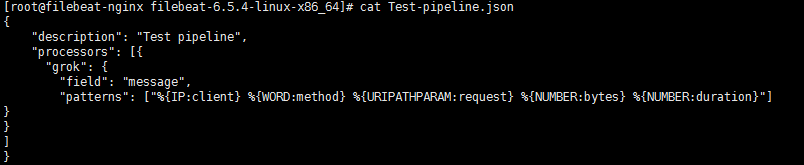

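First, create a JSON file (`Test-pipeline.json`, the name used in the curl command below) containing the pipeline definition: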

| { "description": "Test pipeline", "processors": [{ "grok": { "field": "message", "patterns": ["%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}"] } } ] } |

Then register the pipeline with Elasticsearch:

curl -H 'Content-Type: application/json' -XPUT 'http://10.6.11.176:9200/_ingest/pipeline/Test-pipeline' -d@Test-pipeline.json

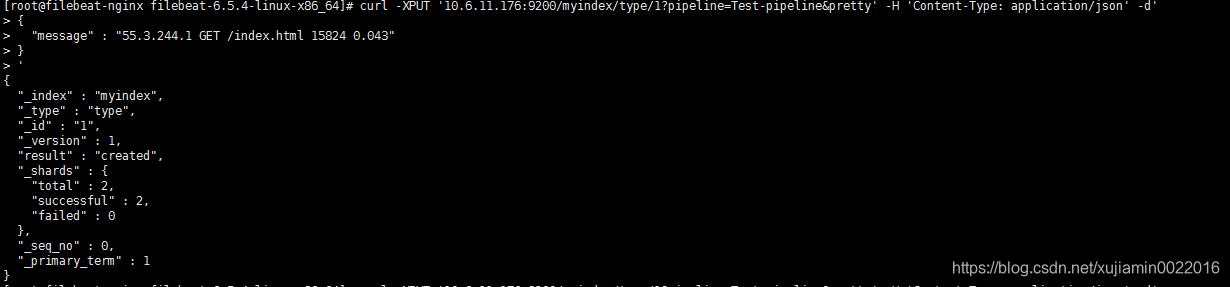

Next, index a test document through the pipeline:

curl -XPUT '10.6.11.176:9200/myindex/type/1?pipeline=Test-pipeline&pretty' -H 'Content-Type: application/json' -d' { "message" : "55.3.244.1 GET /index.html 15824 0.043" } '

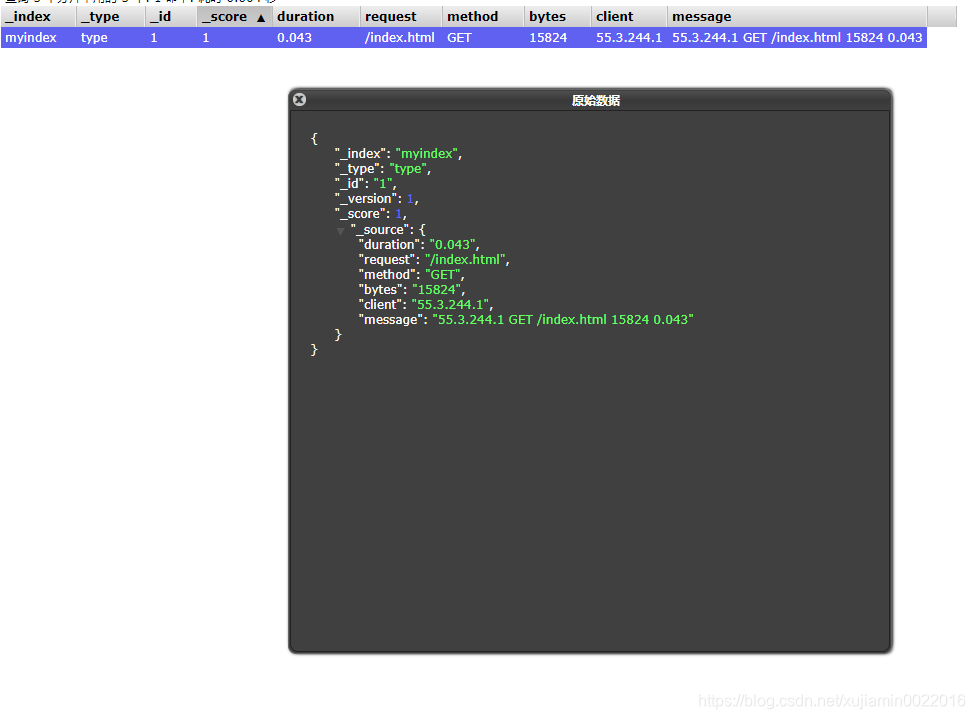

The resulting data in Elasticsearch.
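
The grok pattern can also be checked without indexing anything, by running a sample document through the ingest simulate API. The following is a minimal sketch, assuming the `Test-pipeline` pipeline has already been registered as shown earlier and that the cluster is reachable at the address used in this post. The `ES_URL` variable and the `simulate-body.json` file name are introduced here purely for illustration.

```shell
#!/usr/bin/env bash
# Assumption: ES_URL defaults to the cluster address used in this post; override as needed.
ES_URL="${ES_URL:-http://10.6.11.176:9200}"

# Request body for the simulate API: one sample document carrying the same
# message that was indexed in the previous step.
cat > simulate-body.json <<'EOF'
{
  "docs": [
    { "_source": { "message": "55.3.244.1 GET /index.html 15824 0.043" } }
  ]
}
EOF

# Run the sample through the stored pipeline; nothing is written to any index.
curl -sS --max-time 5 -H 'Content-Type: application/json' \
  -XPOST "$ES_URL/_ingest/pipeline/Test-pipeline/_simulate?pretty" \
  -d @simulate-body.json \
  || echo "Could not reach Elasticsearch at $ES_URL" >&2
```

If the pattern matches, the `_source` of the returned document should contain `client`, `method`, `request`, `bytes` and `duration` next to the original `message`. If it does not match, the response reports a grok error for that document instead.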

If you want to use grok to process nginx logs that are not in JSON format, you can try the following pipeline:

| { "description": "nginx pipeline", "processors": [{ "grok": { "field": "message", "patterns": ["%{TIMESTAMP_ISO8601:timestamp} %{TIMESTAMP_ISO8601:time} %{IP:remote_addr} %{USER:remote_user} %{NUMBER:body_bytes_sent} %{NUMBER:request_time} %{NUMBER:status} %{IP:host} %{URIPATHPARAM:request} %{WORD:request_method} %{URIPATHPARAM:url} %{URIPATHPARAM:http_referrer} %{NUMBER:body_bytes_sent} %{URIPATHPARAM:http_x_forwarded_for} %{URIPATHPARAM:http_user_agent} "] } } ] } |
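
To have Filebeat send its events through such a pipeline, the pipeline ID is set on the Elasticsearch output in `filebeat.yml`. The fragment below is a minimal sketch, assuming the nginx pipeline above has been registered (with the same kind of `PUT _ingest/pipeline/...` call as before) under the hypothetical ID `nginx-pipeline`, and that Elasticsearch is at the address used earlier in this post. Input configuration is omitted because it depends on the Filebeat version and on where the nginx logs live.

```yaml
# filebeat.yml (fragment)
output.elasticsearch:
  # Assumption: same Elasticsearch node as in the curl examples above.
  hosts: ["10.6.11.176:9200"]
  # Hypothetical pipeline ID; it must match the name used when registering the pipeline.
  pipeline: "nginx-pipeline"
```

With this in place, each log line arrives in the `message` field and is parsed by the grok processor on the Elasticsearch side before it is indexed.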

References

https://www.elastic.co/guide/en/beats/filebeat/current/configuring-ingest-node.html

http://www.axiaoxin.com/article/236/

https://www.felayman.com/articles/2017/11/24/1511527532643.html#b3_solo_h4_9

https://www.elastic.co/guide/en/elasticsearch/reference/current/grok-processor.html

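This article describes how to use grok in an Elasticsearch ingest pipeline together with Filebeat to parse nginx logs that are not in JSON format, including creating a custom pipeline, configuring it, and usage examples. It is aimed at readers who want to simplify their log-processing workflow.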
