最近小红书开源的Dots.OCR火出圈了!作为专为中文场景优化的表格/文档识别模型,精度吊打一众开源方案。今天就手把手教你用Docker一键部署Dots.OCR API服务,开箱即用,支持PDF/图片批量识别,还能直接返回合并后的Markdown格式(含base64的图片)~

📋 部署前准备

环境要求

- Docker环境(20.10+)

- NVIDIA显卡(显存≥10G,建议16G+)

- NVIDIA Container Toolkit(GPU支持必备)

- 网络通畅(需要拉取镜像和模型)

核心文件清单

先新建一个项目目录(比如dots-ocr-api),创建以下4个文件:

1. Dockerfile(核心镜像构建)

FROM python:3.12-slim

# 禁用交互+预设时区+初始化环境变量

ENV DEBIAN_FRONTEND=noninteractive \

TZ=Etc/UTC \

LD_LIBRARY_PATH="" \

PYOPENGL_PLATFORM=osmesa

# 安装Debian 12原生依赖

RUN apt-get update --fix-missing && \

apt-get install -y --no-install-recommends \

git gcc g++ pkg-config poppler-utils \

&& apt-get clean && \

rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

# Python环境变量配置

ENV PYTHONUNBUFFERED=1 \

PIP_NO_CACHE_DIR=1 \

PIP_DEFAULT_TIMEOUT=100 \

PYTHONPATH=/app/dots.ocr \

HF_TRUST_REMOTE_CODE=1 \

TRANSFORMERS_CACHE=/app/models \

VLLM_CACHE=/app/models \

MAX_JOBS=8

# 克隆源码+安装核心依赖

RUN git clone https://github.com/rednote-hilab/dots.ocr.git /app/dots.ocr

RUN pip install torch==2.7.0 torchvision==0.22.0 torchaudio==2.7.0 --index-url https://download.pytorch.org/whl/cu128

RUN pip install flash-attn==2.8.0.post2 --no-build-isolation || echo "flash-attn安装失败,核心功能不受影响"

RUN pip install pyopengl pyopengl-accelerate pymupdf

# 安装FastAPI依赖(需提前创建requirements.txt)

COPY requirements.txt /app/

RUN pip install -r /app/requirements.txt

# 安装Dots.OCR

RUN cd /app/dots.ocr && pip install -e . || echo "Dots.OCR核心依赖已安装"

# 复制启动文件

COPY api_server.py /app/

COPY start.sh /app/

RUN chmod +x /app/start.sh

# 暴露端口

EXPOSE 8000 8001

# 启动命令

CMD ["/app/start.sh"]

2. start.sh(启动脚本)

set -e

# 配置参数

MAX_MODEL_LEN=9000

VLLM_PORT=8001

FASTAPI_PORT=8000

# 清理GPU缓存

echo "🧹 清理GPU缓存..."

python -c "import torch; torch.cuda.empty_cache()" 2>/dev/null || true

# 环境验证

echo -e "\n📝 环境验证"

echo "Python版本: $(python --version | awk '{print $2}')"

python -c "import torch; print(f'CUDA可用: {torch.cuda.is_available()}')" || echo "⚠️ CUDA检查警告"

echo "配置:max-model-len=${MAX_MODEL_LEN} | VLLM端口=${VLLM_PORT} | FastAPI端口=${FASTAPI_PORT}"

# 启动vLLM服务(后台运行)

echo -e "\n🚀 启动vLLM服务..."

vllm serve rednote-hilab/dots.ocr \

--trust-remote-code \

--async-scheduling \

--gpu-memory-utilization 0.55 \

--max-model-len ${MAX_MODEL_LEN} \

--disable-nccl \

--tensor-parallel-size 1 \

--port ${VLLM_PORT} \

--host 0.0.0.0 \

> /app/vllm.log 2>&1 &

# 启动FastAPI服务(前台运行)

echo -e "\n🚀 启动FastAPI服务..."

cd /app

exec uvicorn api_server:app --host 0.0.0.0 --port ${FASTAPI_PORT} --log-level info

3. api_server.py(API接口服务)

import os

import sys

import json

import tempfile

import torch

import time

import subprocess

from fastapi import FastAPI, UploadFile, File, HTTPException

from fastapi.responses import JSONResponse

from pathlib import Path

from types import SimpleNamespace

import requests

# 基础配置

parser_module_dir = Path("/app/dots.ocr/dots_ocr")

sys.path.insert(0, str(parser_module_dir.absolute()))

IMAGE_EXTENSIONS = ['.png', '.jpg', '.jpeg', '.tiff', '.bmp', '.gif']

OUTPUT_DIR = "/app/output"

VLLM_HEALTH_URL = "http://localhost:8001/health"

VLLM_WAIT_TIMEOUT = 120

# 初始化FastAPI

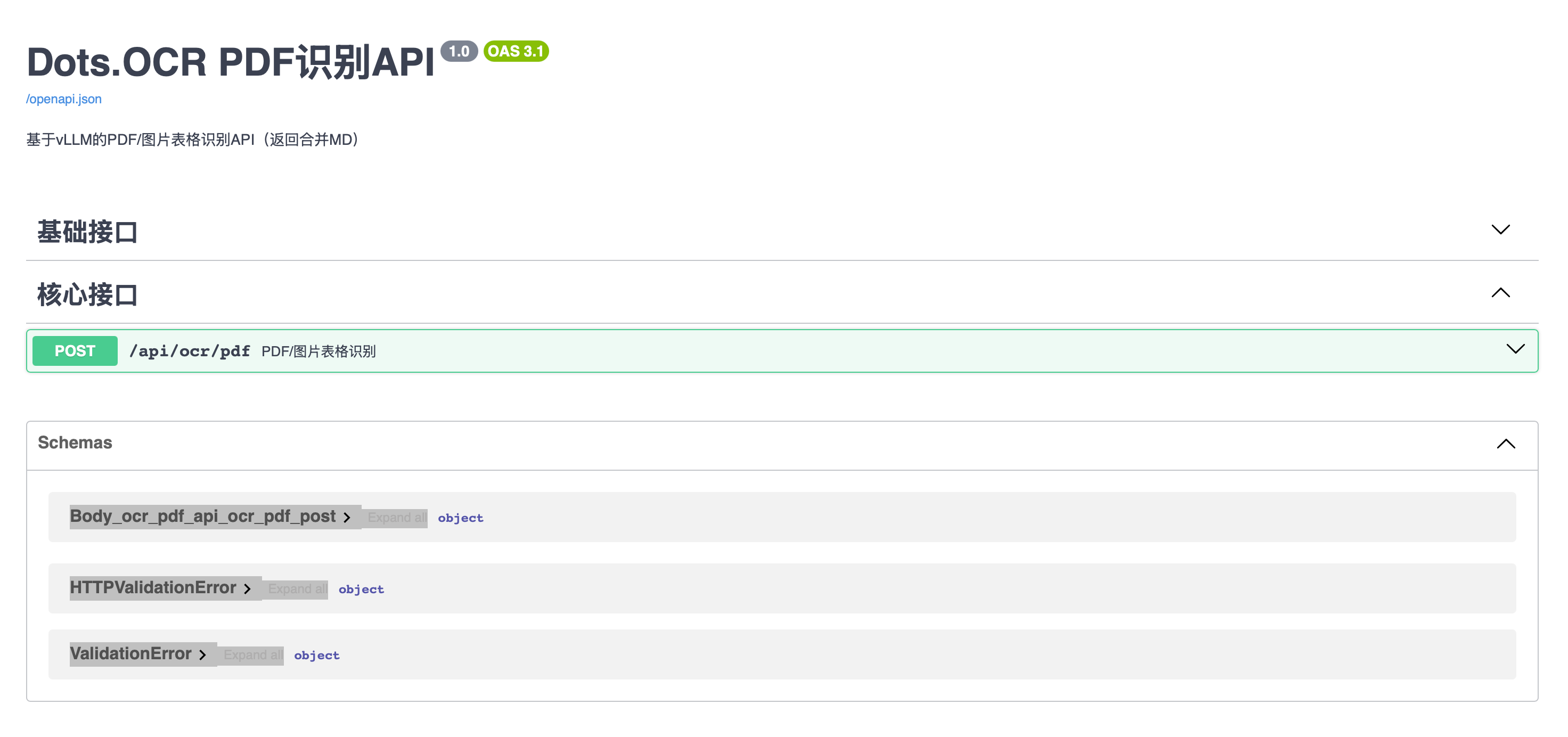

app = FastAPI(

title="Dots.OCR PDF识别API",

version="1.0",

description="基于vLLM的PDF/图片表格识别API(返回合并MD)"

)

# 创建输出目录

os.makedirs(OUTPUT_DIR, exist_ok=True)

# 配置参数

args = SimpleNamespace(

protocol="http",

ip="localhost",

port=8001,

model_name="rednote-hilab/dots.ocr",

temperature=0.0,

top_p=1.0,

max_completion_tokens=8192,

num_thread=4,

dpi=300,

output=OUTPUT_DIR,

min_pixels=100,

max_pixels=1000000,

use_hf=False,

)

# 初始化OCR解析器

ocr_parser = None

def init_ocr_parser():

global ocr_parser

try:

from parser import DotsOCRParser, MIN_PIXELS

if args.min_pixels < MIN_PIXELS:

args.min_pixels = MIN_PIXELS

ocr_parser = DotsOCRParser(

protocol=args.protocol, ip=args.ip, port=args.port,

model_name=args.model_name, temperature=args.temperature,

top_p=args.top_p, max_completion_tokens=args.max_completion_tokens,

num_thread=args.num_thread, dpi=args.dpi, output_dir=args.output,

min_pixels=args.min_pixels, max_pixels=args.max_pixels, use_hf=args.use_hf

)

print("✅ DotsOCRParser初始化成功!")

return True

except Exception as e:

raise RuntimeError(f"❌ 初始化失败:{str(e)}")

# 等待vLLM就绪

def wait_vllm_ready():

print("🚀 等待vLLM服务就绪...")

start_time = time.time()

while time.time() - start_time < VLLM_WAIT_TIMEOUT:

try:

response = requests.get(VLLM_HEALTH_URL, timeout=5)

if response.status_code == 200:

print("✅ vLLM服务已就绪!")

return True

except:

pass

time.sleep(2)

raise TimeoutError(f"❌ vLLM启动超时")

# 合并MD文件

def merge_md_files(page_results):

merged_md = []

for page_item in page_results:

page_no = page_item["page_no"] + 1

md_path = page_item["md_content_path"]

with open(md_path, "r", encoding="utf-8") as f:

page_md = f.read().strip()

merged_md.append(f"\n--- 第{page_no}页 ---\n")

merged_md.append(page_md)

return "".join(merged_md).lstrip("\n")

# 启动钩子

@app.on_event("startup")

async def startup_event():

try:

wait_vllm_ready()

init_ocr_parser()

print("🎉 API服务启动成功!")

except Exception as e:

print(f"❌ 服务启动失败:{e}")

sys.exit(1)

# 健康检查

@app.get("/health", summary="健康检查", tags=["基础接口"])

async def health_check():

vllm_healthy = False

try:

response = requests.get(VLLM_HEALTH_URL, timeout=5)

vllm_healthy = (response.status_code == 200)

except:

pass

return {

"api_status": "healthy",

"timestamp": time.strftime("%Y-%m-%d %H:%M:%S"),

"cuda_available": torch.cuda.is_available(),

"ocr_parser_initialized": ocr_parser is not None,

"vllm_healthy": vllm_healthy

}

# 核心OCR接口

@app.post("/api/ocr/pdf", summary="PDF/图片表格识别", tags=["核心接口"])

async def ocr_pdf(

file: UploadFile = File(..., description="支持PDF/图片格式"),

prompt_mode: str = "prompt_layout_all_en",

fitz_preprocess: bool = False,

output_dir: str = ""

):

if ocr_parser is None:

raise HTTPException(status_code=500, detail="DotsOCRParser未初始化!")

filename = file.filename

file_ext = os.path.splitext(filename)[1].lower()

if file_ext not in ['.pdf'] + IMAGE_EXTENSIONS:

raise HTTPException(status_code=400, detail=f"不支持的格式:{file_ext}")

temp_path = None

try:

# 保存临时文件

with tempfile.NamedTemporaryFile(delete=False, suffix=file_ext, dir="/tmp") as f:

f.write(await file.read())

temp_path = f.name

# 解析文件

page_results = ocr_parser.parse_file(

input_path=temp_path,

output_dir=output_dir or OUTPUT_DIR,

prompt_mode=prompt_mode,

fitz_preprocess=fitz_preprocess

)

# 合并MD

merged_md_content = merge_md_files(page_results)

merged_md_filename = f"{os.path.splitext(filename)[0]}_merged.md"

merged_md_path = os.path.join(output_dir or OUTPUT_DIR, merged_md_filename)

with open(merged_md_path, "w", encoding="utf-8") as f:

f.write(merged_md_content)

# 返回结果

return JSONResponse(content={

"status": "success",

"code": 200,

"filename": filename,

"merged_md_content": merged_md_content,

"merged_md_path": merged_md_path,

"page_count": len(page_results),

"page_details": page_results

})

except Exception as e:

raise HTTPException(status_code=500, detail=f"解析失败:{str(e)}")

finally:

if temp_path and Path(temp_path).exists():

os.unlink(temp_path)

if __name__ == "__main__":

import uvicorn

uvicorn.run(app, host="0.0.0.0", port=8000, log_level="info")

4. requirements.txt(依赖清单)

# ===== 仅新增的依赖(官方setup.py未包含)=====

# FastAPI API框架

fastapi>=0.110.0

uvicorn>=0.29.0

python-multipart>=0.0.9

httpx>=0.27.0

# vLLM(官方启动命令所需)

vllm>=0.9.0

🚀 开始部署

步骤1:构建Docker镜像

cd dots-ocr-api

docker build -t dots-ocr-api:latest .

⚠️ 注意:构建过程会下载PyTorch、vLLM等依赖,首次构建可能需要10-20分钟,耐心等待~

步骤2:启动容器

docker run -d \

--gpus all \

--name dots-ocr-server \

-p 8000:8000 -p 8001:8001 \

-v $(pwd)/models:/app/models \

-v $(pwd)/output:/app/output \

dots-ocr-api:latest

参数说明:

--gpus all:启用所有GPU-p 8000:8000:FastAPI接口端口-p 8001:8001:vLLM服务端口-v $(pwd)/models:/app/models:挂载模型缓存目录(避免重复下载)-v $(pwd)/output:/app/output:挂载输出目录(保存识别结果)

步骤3:检查服务状态

# 查看容器日志

docker logs -f dots-ocr-server

# 健康检查

curl http://localhost:8000/health

如果返回{"api_status":"healthy",...},说明服务启动成功!

📝 接口使用示例

方式1:Swagger UI可视化调用

直接访问:http://localhost:8000/docs

找到/api/ocr/pdf接口,点击「Try it out」,上传PDF/图片文件,点击「Execute」即可看到识别结果~

方式2:curl命令调用

curl -X POST "http://localhost:8000/api/ocr/pdf" \

-H "accept: application/json" \

-H "Content-Type: multipart/form-data" \

-F "file=@你的文件.pdf;type=application/pdf"

方式3:Python代码调用

import requests

url = "http://localhost:8000/api/ocr/pdf"

files = {"file": open("test.pdf", "rb")}

response = requests.post(url, files=files)

result = response.json()

# 打印合并后的Markdown内容

print(result["merged_md_content"])

✨ 核心特性

- 🎯 高精度:专为中文表格/文档优化,识别准确率远超传统OCR

- 🚀 高性能:基于vLLM加速,推理速度提升5-10倍

- 📄 多格式支持:支持PDF、PNG、JPG等主流格式

- 📝 一键合并:自动合并多页识别结果,添加页码分割线

- 🐳 容器化部署:隔离环境,跨平台兼容,一键启停

❌ 常见问题解决

- vLLM启动超时:检查GPU显存是否充足(至少10G),降低

--gpu-memory-utilization参数 - CUDA错误:确保NVIDIA驱动和Container Toolkit安装正确

- 模型下载慢:可以手动下载模型到

models目录,或配置HF镜像源 - 识别乱码:检查文件编码,确保是UTF-8格式

🎉 总结

这套部署方案基于Docker容器化,完美解决了环境依赖、版本兼容等问题,5分钟就能搞定Dots.OCR API服务。不管是个人使用还是集成到项目中,都非常方便~

如果觉得有用,记得点赞收藏!有任何问题欢迎评论区交流~

#DotsOCR #OCR识别 #小红书开源 #PDF识别 #表格识别 #Docker部署 #API开发 #人工智能

3568

3568

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?