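# Please make the following changes: 1. build the model name list programmatically from the models managed by Ollama; 2. make "llama3.2-vision" the default model; 3. change everything else as little as possible, or not at all.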

import sys
import os
import time
import base64
import threading
import cv2
import numpy as np
from PIL import Image
import speech_recognition as sr
import pyttsx3
from PyQt5.QtWidgets import (QApplication, QMainWindow, QTabWidget, QWidget, QVBoxLayout, QHBoxLayout,
                             QTextEdit, QPushButton, QLineEdit, QLabel, QSlider, QProgressBar,
                             QFileDialog, QComboBox, QGroupBox, QCheckBox)
from PyQt5.QtGui import QPixmap, QImage, QFont
from PyQt5.QtCore import Qt, QTimer, QThread, pyqtSignal
import ollama
from io import BytesIO  # required by ModelWorker.image_to_base64

class ModelWorker(QThread):
    responseSignal = pyqtSignal(str)
    progressSignal = pyqtSignal(int)
    completed = pyqtSignal()

    def __init__(self, prompt, model, images=None, max_tokens=4096, temperature=0.7):
        super().__init__()
        self.prompt = prompt
        self.model = model
        self.images = images
        self.max_tokens = max_tokens
        self.temperature = temperature
        self._is_running = True

    def run(self):
        try:
            options = {
                'num_predict': self.max_tokens,
                'temperature': self.temperature
            }
            if self.images:
                images_base64 = [self.image_to_base64(img) for img in self.images]
                response = ollama.chat(
                    model=self.model,
                    messages=[{
                        'role': 'user',
                        'content': self.prompt,
                        'images': images_base64
                    }],
                    stream=True,
                    options=options
                )
            else:
                response = ollama.chat(
                    model=self.model,
                    messages=[{'role': 'user', 'content': self.prompt}],
                    stream=True,
                    options=options
                )
            full_response = ""
            for chunk in response:
                if not self._is_running:
                    break
                content = chunk.get('message', {}).get('content', '')
                if content:
                    full_response += content
                    self.responseSignal.emit(full_response)
                    self.progressSignal.emit(25)
            self.completed.emit()
        except Exception as e:
            self.responseSignal.emit(f"错误: {str(e)}")
            self.completed.emit()

    def image_to_base64(self, image_path):
        with Image.open(image_path) as img:
            img = img.convert('RGB')
            buffered = BytesIO()
            img.save(buffered, format="JPEG")
            return base64.b64encode(buffered.getvalue()).decode('utf-8')

    def stop(self):
        self._is_running = False

class SpeechWorker(QThread):
    finished = pyqtSignal(str)

    def run(self):
        r = sr.Recognizer()
        try:
            # Open the microphone inside the try block so that a missing
            # device is reported through the signal instead of raising
            with sr.Microphone() as source:
                audio = r.listen(source)
            text = r.recognize_google(audio, language='zh-CN')
            self.finished.emit(text)
        except Exception as e:
            self.finished.emit(f"语音识别错误: {str(e)}")

class MultiModalApp(QMainWindow):
    def __init__(self):
        super().__init__()
        self.setWindowTitle("多模态智能助手 - Gemma3:27b")
        self.setGeometry(100, 100, 1200, 800)
        self.setFont(QFont("Microsoft YaHei", 10))
        # Main layout
        self.tabs = QTabWidget()
        self.setCentralWidget(self.tabs)
        # Create the feature tabs
        self.create_chat_tab()
        self.create_image_tab()
        self.create_voice_tab()
        self.create_video_tab()
        self.create_settings_tab()
        # State variables
        self.current_images = []
        self.model_worker = None
        self.speech_worker = None
        self.video_capture = None
        self.video_timer = QTimer()
        self.video_timer.timeout.connect(self.update_video_frame)
        # Initialize the text-to-speech engine
        self.engine = pyttsx3.init()
        self.engine.setProperty('rate', 150)
        self.engine.setProperty('volume', 0.9)
        self.engine.setProperty('voice', 'zh')  # request a Chinese voice
        # Check the Ollama connection
        self.check_ollama_connection()

    def create_chat_tab(self):
        tab = QWidget()
        layout = QVBoxLayout()
        # Chat history
        self.chat_history = QTextEdit()
        self.chat_history.setReadOnly(True)
        self.chat_history.setStyleSheet("background-color: #f0f8ff;")
        # Parameter controls
        param_group = QGroupBox("模型参数")
        param_layout = QHBoxLayout()
        self.max_tokens = QSlider(Qt.Horizontal)
        self.max_tokens.setRange(128, 8192)
        self.max_tokens.setValue(2048)
        self.max_tokens_label = QLabel("最大Token数: 2048")
        self.temperature = QSlider(Qt.Horizontal)
        self.temperature.setRange(0, 100)
        self.temperature.setValue(70)
        self.temperature_label = QLabel("温度: 0.7")
        param_layout.addWidget(QLabel("最大Token:"))
        param_layout.addWidget(self.max_tokens)
        param_layout.addWidget(self.max_tokens_label)
        param_layout.addWidget(QLabel("温度:"))
        param_layout.addWidget(self.temperature)
        param_layout.addWidget(self.temperature_label)
        param_group.setLayout(param_layout)
        # Input area
        input_layout = QHBoxLayout()
        self.input_field = QLineEdit()
        self.input_field.setPlaceholderText("输入您的问题...")
        self.send_button = QPushButton("发送")
        self.clear_button = QPushButton("清空对话")
        input_layout.addWidget(self.input_field, 4)
        input_layout.addWidget(self.send_button, 1)
        input_layout.addWidget(self.clear_button, 1)
        # Progress bar
        self.progress_bar = QProgressBar()
        self.progress_bar.setVisible(False)
        # Assemble the layout
        layout.addWidget(self.chat_history, 6)
        layout.addWidget(param_group)
        layout.addLayout(input_layout)
        layout.addWidget(self.progress_bar)
        tab.setLayout(layout)
        self.tabs.addTab(tab, "文本对话")
        # Signal connections
        self.send_button.clicked.connect(self.send_chat)
        self.clear_button.clicked.connect(self.clear_chat)
        self.input_field.returnPressed.connect(self.send_chat)
        self.max_tokens.valueChanged.connect(self.update_max_tokens_label)
        self.temperature.valueChanged.connect(self.update_temperature_label)

    def create_image_tab(self):
        tab = QWidget()
        layout = QVBoxLayout()
        # Image display
        image_layout = QHBoxLayout()
        self.image_label = QLabel()
        self.image_label.setAlignment(Qt.AlignCenter)
        self.image_label.setStyleSheet("border: 2px solid gray; min-height: 300px;")
        self.image_label.setText("图片预览区域")
        image_layout.addWidget(self.image_label)
        # Image action buttons
        btn_layout = QHBoxLayout()
        self.load_image_btn = QPushButton("加载图片")
        self.analyze_image_btn = QPushButton("分析图片")
        self.generate_image_btn = QPushButton("生成图片描述")
        btn_layout.addWidget(self.load_image_btn)
        btn_layout.addWidget(self.analyze_image_btn)
        btn_layout.addWidget(self.generate_image_btn)
        # Result area
        self.image_result = QTextEdit()
        self.image_result.setReadOnly(True)
        self.image_result.setStyleSheet("background-color: #fffaf0;")
        # Assemble the layout
        layout.addLayout(image_layout, 3)
        layout.addLayout(btn_layout)
        layout.addWidget(self.image_result, 2)
        tab.setLayout(layout)
        self.tabs.addTab(tab, "图像分析")
        # Signal connections
        self.load_image_btn.clicked.connect(self.load_image)
        self.analyze_image_btn.clicked.connect(self.analyze_image)
        self.generate_image_btn.clicked.connect(self.generate_image_description)

    def create_voice_tab(self):
        tab = QWidget()
        layout = QVBoxLayout()
        # Voice controls
        voice_layout = QHBoxLayout()
        self.record_btn = QPushButton("开始录音")
        self.play_btn = QPushButton("播放回复")
        self.stop_btn = QPushButton("停止")
        voice_layout.addWidget(self.record_btn)
        voice_layout.addWidget(self.play_btn)
        voice_layout.addWidget(self.stop_btn)
        # Speech recognition result
        self.voice_input = QTextEdit()
        self.voice_input.setPlaceholderText("语音识别结果将显示在这里...")
        # Speech synthesis settings
        tts_group = QGroupBox("语音设置")
        tts_layout = QHBoxLayout()
        self.voice_speed = QSlider(Qt.Horizontal)
        self.voice_speed.setRange(100, 300)
        self.voice_speed.setValue(150)
        self.voice_speed_label = QLabel("语速: 150")
        self.voice_volume = QSlider(Qt.Horizontal)
        self.voice_volume.setRange(0, 100)
        self.voice_volume.setValue(90)
        self.voice_volume_label = QLabel("音量: 90%")
        tts_layout.addWidget(QLabel("语速:"))
        tts_layout.addWidget(self.voice_speed)
        tts_layout.addWidget(self.voice_speed_label)
        tts_layout.addWidget(QLabel("音量:"))
        tts_layout.addWidget(self.voice_volume)
        tts_layout.addWidget(self.voice_volume_label)
        tts_group.setLayout(tts_layout)
        # Assemble the layout
        layout.addLayout(voice_layout)
        layout.addWidget(self.voice_input, 2)
        layout.addWidget(tts_group)
        tab.setLayout(layout)
        self.tabs.addTab(tab, "语音交互")
        # Signal connections
        self.record_btn.clicked.connect(self.start_recording)
        self.play_btn.clicked.connect(self.play_response)
        self.stop_btn.clicked.connect(self.stop_audio)
        self.voice_speed.valueChanged.connect(self.update_voice_speed)
        self.voice_volume.valueChanged.connect(self.update_voice_volume)

    def create_video_tab(self):
        tab = QWidget()
        layout = QVBoxLayout()
        # Video controls
        video_control = QHBoxLayout()
        self.load_video_btn = QPushButton("加载视频")
        self.play_video_btn = QPushButton("播放")
        self.pause_video_btn = QPushButton("暂停")
        self.analyze_video_btn = QPushButton("分析视频")
        video_control.addWidget(self.load_video_btn)
        video_control.addWidget(self.play_video_btn)
        video_control.addWidget(self.pause_video_btn)
        video_control.addWidget(self.analyze_video_btn)
        # Video display
        video_layout = QHBoxLayout()
        self.video_label = QLabel()
        self.video_label.setAlignment(Qt.AlignCenter)
        self.video_label.setStyleSheet("border: 2px solid gray; min-height: 300px;")
        self.video_label.setText("视频预览区域")
        video_layout.addWidget(self.video_label)
        # Analysis result
        self.video_result = QTextEdit()
        self.video_result.setReadOnly(True)
        self.video_result.setStyleSheet("background-color: #f5f5f5;")
        # Assemble the layout
        layout.addLayout(video_control)
        layout.addLayout(video_layout, 3)
        layout.addWidget(self.video_result, 2)
        tab.setLayout(layout)
        self.tabs.addTab(tab, "视频分析")
        # Signal connections
        self.load_video_btn.clicked.connect(self.load_video)
        self.play_video_btn.clicked.connect(self.play_video)
        self.pause_video_btn.clicked.connect(self.pause_video)
        self.analyze_video_btn.clicked.connect(self.analyze_video)

    def create_settings_tab(self):
        tab = QWidget()
        layout = QVBoxLayout()
        # Ollama settings
        ollama_group = QGroupBox("Ollama设置")
        ollama_layout = QVBoxLayout()
        self.ollama_host = QLineEdit("http://localhost:11434")
        self.ollama_model = QComboBox()
        self.ollama_model.addItems(["Gemma3:27b", "llama3", "mistral"])
        ollama_layout.addWidget(QLabel("服务器地址:"))
        ollama_layout.addWidget(self.ollama_host)
        ollama_layout.addWidget(QLabel("模型选择:"))
        ollama_layout.addWidget(self.ollama_model)
        ollama_group.setLayout(ollama_layout)
        # System settings
        system_group = QGroupBox("系统设置")
        system_layout = QVBoxLayout()
        self.auto_play = QCheckBox("自动播放语音回复")
        self.stream_output = QCheckBox("启用流式输出")
        self.stream_output.setChecked(True)
        self.save_logs = QCheckBox("保存对话日志")
        system_layout.addWidget(self.auto_play)
        system_layout.addWidget(self.stream_output)
        system_layout.addWidget(self.save_logs)
        system_group.setLayout(system_layout)
        # Assemble the layout
        layout.addWidget(ollama_group)
        layout.addWidget(system_group)
        layout.addStretch()
        tab.setLayout(layout)
        self.tabs.addTab(tab, "系统设置")
        # Signal connections
        self.ollama_host.textChanged.connect(self.update_ollama_host)
        self.ollama_model.currentIndexChanged.connect(self.update_ollama_model)

    # Implementations of the methods that were omitted earlier
    def update_max_tokens_label(self, value):
        self.max_tokens_label.setText(f"最大Token数: {value}")

    def update_temperature_label(self, value):
        self.temperature_label.setText(f"温度: {value / 100:.1f}")

    def update_voice_speed(self, value):
        self.voice_speed_label.setText(f"语速: {value}")
        self.engine.setProperty('rate', value)

    def update_voice_volume(self, value):
        self.voice_volume_label.setText(f"音量: {value}%")
        self.engine.setProperty('volume', value / 100)

    def send_chat(self):
        prompt = self.input_field.text().strip()
        if not prompt:
            return
        self.progress_bar.setVisible(True)
        self.progress_bar.setRange(0, 0)  # indeterminate progress
        self.chat_history.append(f"<b>用户:</b> {prompt}")
        self.input_field.clear()
        # Create the model worker thread
        self.model_worker = ModelWorker(
            prompt=prompt,
            model=self.ollama_model.currentText(),
            max_tokens=self.max_tokens.value(),
            temperature=self.temperature.value() / 100
        )
        self.model_worker.responseSignal.connect(self.update_chat_response)
        self.model_worker.completed.connect(self.on_chat_completed)
        self.model_worker.start()

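    def update_chat_response(self, response):
        # Replace only the reply to the latest user message, so that
        # earlier turns of the conversation are left intact
        current_text = self.chat_history.toPlainText()
        last_user = current_text.rfind("用户:")
        last_reply = current_text.rfind("助手:")
        if last_reply > last_user:
            current_text = current_text[:last_reply]
        else:
            current_text += "\n"
        # setPlainText shows markup literally, so no HTML tags are used here
        self.chat_history.setPlainText(f"{current_text}助手: {response}")
        self.chat_history.moveCursor(self.chat_history.textCursor().End)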

    def on_chat_completed(self):
        self.progress_bar.setVisible(False)
        if self.auto_play.isChecked():
            self.play_response()

    def clear_chat(self):
        self.chat_history.clear()

    def load_image(self):
        file_path, _ = QFileDialog.getOpenFileName(
            self, "选择图片", "",
            "图片文件 (*.png *.jpg *.jpeg *.bmp)"
        )
        if file_path:
            self.current_images = [file_path]
            pixmap = QPixmap(file_path)
            pixmap = pixmap.scaled(600, 400, Qt.KeepAspectRatio)
            self.image_label.setPixmap(pixmap)

    def analyze_image(self):
        if not self.current_images:
            self.image_result.setText("请先加载图片")
            return
        self.progress_bar.setVisible(True)
        self.progress_bar.setRange(0, 0)
        prompt = "详细分析这张图片的内容,包括场景、对象、颜色和可能的含义"
        self.model_worker = ModelWorker(
            prompt=prompt,
            model=self.ollama_model.currentText(),
            images=self.current_images,
            max_tokens=self.max_tokens.value(),
            temperature=self.temperature.value() / 100
        )
        self.model_worker.responseSignal.connect(self.update_image_result)
        self.model_worker.completed.connect(lambda: self.progress_bar.setVisible(False))
        self.model_worker.start()

    def update_image_result(self, response):
        self.image_result.setText(response)

    def generate_image_description(self):
        if not self.current_images:
            self.image_result.setText("请先加载图片")
            return
        self.progress_bar.setVisible(True)
        self.progress_bar.setRange(0, 0)
        prompt = "为这张图片生成一个简洁的描述,适合用于社交媒体分享"
        self.model_worker = ModelWorker(
            prompt=prompt,
            model=self.ollama_model.currentText(),
            images=self.current_images,
            max_tokens=self.max_tokens.value(),
            temperature=self.temperature.value() / 100
        )
        self.model_worker.responseSignal.connect(self.update_image_result)
        self.model_worker.completed.connect(lambda: self.progress_bar.setVisible(False))
        self.model_worker.start()

    def start_recording(self):
        self.record_btn.setEnabled(False)
        self.voice_input.setText("正在录音...")
        self.speech_worker = SpeechWorker()
        self.speech_worker.finished.connect(self.on_speech_recognition_complete)
        self.speech_worker.start()

    def on_speech_recognition_complete(self, text):
        self.record_btn.setEnabled(True)
        self.voice_input.setText(text)
        # If auto-play is enabled, send the recognized text straight to the chat
        if self.auto_play.isChecked():
            self.input_field.setText(text)
            self.send_chat()

    def play_response(self):
        if not self.chat_history.toPlainText():
            return
        # Take the assistant's most recent reply
        full_text = self.chat_history.toPlainText()
        last_response = full_text.split("助手:")[-1].strip()
        # Speak it aloud
        self.engine.say(last_response)
        self.engine.runAndWait()

    def stop_audio(self):
        self.engine.stop()

    def load_video(self):
        file_path, _ = QFileDialog.getOpenFileName(
            self, "选择视频", "",
            "视频文件 (*.mp4 *.avi *.mov)"
        )
        if file_path:
            self.video_path = file_path
            self.video_capture = cv2.VideoCapture(file_path)
            self.play_video()

    def play_video(self):
        # video_capture starts out as None, so test for that rather than hasattr
        if self.video_capture is None or not self.video_capture.isOpened():
            return
        self.video_timer.start(30)  # roughly 30 fps

    def pause_video(self):
        self.video_timer.stop()

    def update_video_frame(self):
        ret, frame = self.video_capture.read()
        if not ret:
            self.video_timer.stop()
            return
        # Convert the OpenCV BGR frame to a Qt RGB image
        frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        h, w, ch = frame.shape
        bytes_per_line = ch * w
        q_img = QImage(frame.data, w, h, bytes_per_line, QImage.Format_RGB888)
        pixmap = QPixmap.fromImage(q_img)
        pixmap = pixmap.scaled(800, 450, Qt.KeepAspectRatio)
        self.video_label.setPixmap(pixmap)

    def analyze_video(self):
        if not hasattr(self, 'video_path'):
            self.video_result.setText("请先加载视频")
            return
        self.progress_bar.setVisible(True)
        self.progress_bar.setRange(0, 0)
        prompt = "分析这段视频的主要内容,包括场景、关键动作和可能的主题"
        # Note: only the text prompt is sent; no video frames reach the model
        self.model_worker = ModelWorker(
            prompt=prompt,
            model=self.ollama_model.currentText(),
            max_tokens=self.max_tokens.value(),
            temperature=self.temperature.value() / 100
        )
        self.model_worker.responseSignal.connect(self.update_video_result)
        self.model_worker.completed.connect(lambda: self.progress_bar.setVisible(False))
        self.model_worker.start()

    def update_video_result(self, response):
        self.video_result.setText(response)

    def update_ollama_host(self, host):
        os.environ["OLLAMA_HOST"] = host
        self.check_ollama_connection()

    def update_ollama_model(self, index):
        # Model switching logic
        pass

    def check_ollama_connection(self):
        try:
            client = ollama.Client(host=self.ollama_host.text())
            client.list()  # raises if the server cannot be reached
            self.statusBar().showMessage("Ollama连接成功", 3000)
            return True
        except Exception:
            self.statusBar().showMessage("无法连接Ollama服务器,请检查设置", 5000)
            return False

if __name__ == "__main__":
    app = QApplication(sys.argv)
    window = MultiModalApp()
    window.show()
    sys.exit(app.exec_())
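
The note at the top of the script asks for two things: a model list read from Ollama rather than hard-coded, and "llama3.2-vision" as the default. The following is a minimal sketch of one way to do that, not a tested patch. The helper names `extract_model_names` and `pick_default` are hypothetical, and the sketch assumes that the value returned by the client's `list()` call exposes a `models` collection whose entries carry the name under `model` (newer clients) or `name` (older clients).

```python
from typing import Any, List

DEFAULT_MODEL = "llama3.2-vision"  # default requested in the note at the top


def extract_model_names(listing: Any) -> List[str]:
    """Collect model names from the value returned by the client's list() call.

    Assumption: `listing` is a dict or an object with a "models" collection,
    and each entry stores its name under "model" or "name".
    """
    if isinstance(listing, dict):
        entries = listing.get("models", [])
    else:
        entries = getattr(listing, "models", [])
    names: List[str] = []
    for entry in entries or []:
        if isinstance(entry, dict):
            name = entry.get("model") or entry.get("name")
        else:
            name = getattr(entry, "model", None) or getattr(entry, "name", None)
        if name and name not in names:  # skip empty and duplicate names
            names.append(name)
    return names


def pick_default(names: List[str], preferred: str = DEFAULT_MODEL) -> str:
    """Choose the entry to preselect in the combo box.

    Order: exact match, then a tagged variant such as
    "llama3.2-vision:latest", then the first installed model.
    Falls back to `preferred` itself when nothing is installed.
    """
    for name in names:
        if name == preferred:
            return name
    for name in names:
        if name.split(":", 1)[0] == preferred:
            return name
    return names[0] if names else preferred


if __name__ == "__main__":
    # Hand-written sample data standing in for a real list() response
    sample = {"models": [{"model": "gemma3:27b"},
                         {"model": "llama3.2-vision:latest"}]}
    installed = extract_model_names(sample)
    print(installed, "->", pick_default(installed))
```

In `create_settings_tab`, the hard-coded `addItems([...])` call could then be replaced by something along the lines of `names = extract_model_names(ollama.Client(host=self.ollama_host.text()).list())`, followed by `self.ollama_model.addItems(names or [DEFAULT_MODEL])` and `self.ollama_model.setCurrentText(pick_default(names))`. The `list()` call should be wrapped in a `try` block so that the tab still builds when the server is unreachable.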

最新发布

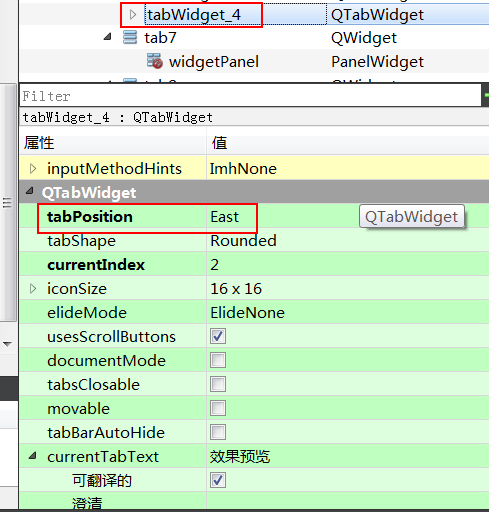

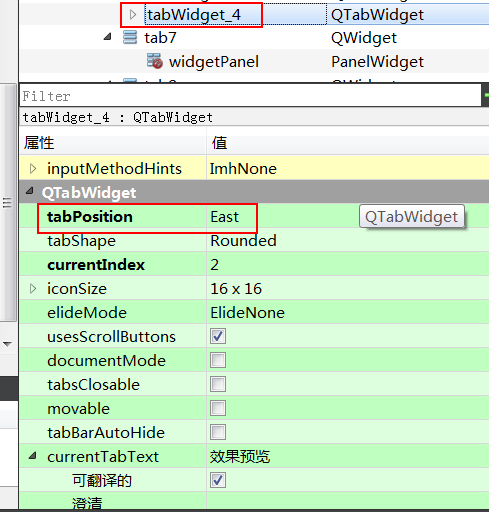

Qt题目之Tab选项卡

Qt题目之Tab选项卡

博客提及Qt题目4中的Tab选项卡相关内容,聚焦于信息技术领域的Qt开发方面。

博客提及Qt题目4中的Tab选项卡相关内容,聚焦于信息技术领域的Qt开发方面。

3326

3326

1604

1604

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?