java版spark使用faltmap时报空指针错误,错误如下:

20/08/28 09:41:44 INFO DAGScheduler: ResultStage 0 (count at TestJob.java:252) failed in 3.500 s due to Job aborted due to stage failure: Task 299 in stage 0.0 failed 1 times, most recent failure: Lost task 299.0 in stage 0.0 (TID 299, localhost, executor driver): java.lang.NullPointerException

at scala.collection.convert.Wrappers$JIteratorWrapper.hasNext(Wrappers.scala:42)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:439)

at org.apache.spark.util.Utils$.getIteratorSize(Utils.scala:1817)

at org.apache.spark.rdd.RDD$$anonfun$count$1.apply(RDD.scala:1168)

at org.apache.spark.rdd.RDD$$anonfun$count$1.apply(RDD.scala:1168)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2113)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2113)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$11.apply(Executor.scala:407)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:413)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

20/08/28 09:41:44 INFO DAGScheduler: Job 0 failed: count at TestJob.java:252, took 3.555955 s

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 299 in stage 0.0 failed 1 times, most recent failure: Lost task 299.0 in stage 0.0 (TID 299, localhost, executor driver): java.lang.NullPointerException

at scala.collection.convert.Wrappers$JIteratorWrapper.hasNext(Wrappers.scala:42)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:439)

at org.apache.spark.util.Utils$.getIteratorSize(Utils.scala:1817)

at org.apache.spark.rdd.RDD$$anonfun$count$1.apply(RDD.scala:1168)

at org.apache.spark.rdd.RDD$$anonfun$count$1.apply(RDD.scala:1168)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2113)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2113)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$11.apply(Executor.scala:407)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:413)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1890)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1878)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1877)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1877)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:929)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:929)

at scala.Option.foreach(Option.scala:257)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:929)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2111)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2060)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2049)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:740)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2073)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2094)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2113)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2138)

at org.apache.spark.rdd.RDD.count(RDD.scala:1168)

at org.apache.spark.api.java.JavaRDDLike$class.count(JavaRDDLike.scala:455)

at org.apache.spark.api.java.AbstractJavaRDDLike.count(JavaRDDLike.scala:45)

at com.TestJob.main(TestJob.java:252)

Caused by: java.lang.NullPointerException

at scala.collection.convert.Wrappers$JIteratorWrapper.hasNext(Wrappers.scala:42)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:439)

at org.apache.spark.util.Utils$.getIteratorSize(Utils.scala:1817)

at org.apache.spark.rdd.RDD$$anonfun$count$1.apply(RDD.scala:1168)

at org.apache.spark.rdd.RDD$$anonfun$count$1.apply(RDD.scala:1168)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2113)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2113)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$11.apply(Executor.scala:407)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:413)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

20/08/28 09:41:44 INFO SparkContext: Invoking stop() from shutdown hook

20/08/28 09:41:44 INFO SparkUI: Stopped Spark web UI at http://DESKTOP-LSJJA44.mshome.net:4040

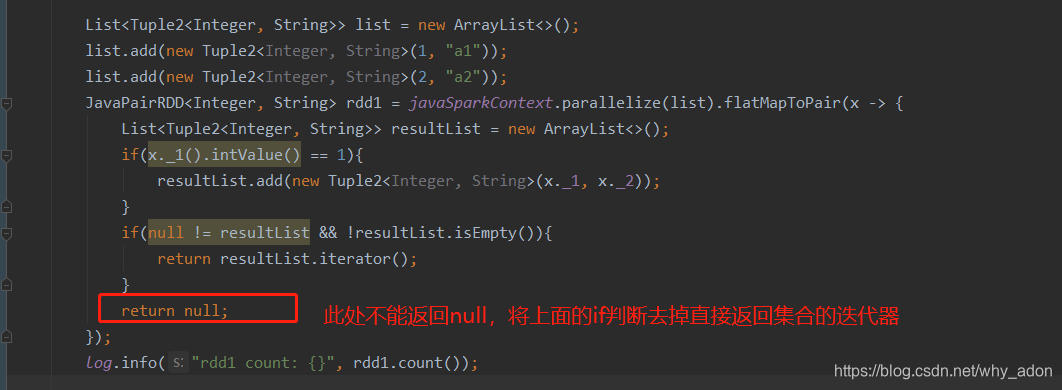

问题代码如下:

List<Tuple2<Integer, String>> list = new ArrayList<>();

list.add(new Tuple2<Integer, String>(1, "a1"));

list.add(new Tuple2<Integer, String>(2, "a2"));

JavaPairRDD<Integer, String> rdd1 = javaSparkContext.parallelize(list).flatMapToPair(x -> {

List<Tuple2<Integer, String>> resultList = new ArrayList<>();

if(x._1().intValue() == 1){

resultList.add(new Tuple2<Integer, String>(x._1, x._2));

}

if(null != resultList && !resultList.isEmpty()){

return resultList.iterator();

}

return null;

});

log.info("rdd1 count: {}", rdd1.count());

问题原因:

因为flatmap系列的函数默认需要返回一个集合(即使集合为空也要返回一个非空集合的迭代器对象),如果此处有返回null则会报空指针错误

改正后代码:

List<Tuple2<Integer, String>> list = new ArrayList<>();

list.add(new Tuple2<Integer, String>(1, "a1"));

list.add(new Tuple2<Integer, String>(2, "a2"));

JavaPairRDD<Integer, String> rdd1 = javaSparkContext.parallelize(list).flatMapToPair(x -> {

List<Tuple2<Integer, String>> resultList = new ArrayList<>();

if(x._1().intValue() == 1){

resultList.add(new Tuple2<Integer, String>(x._1, x._2));

}

return resultList.iterator();

});

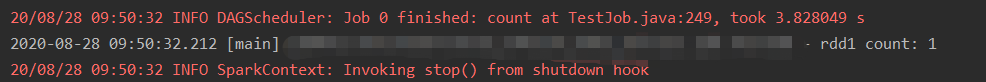

log.info("rdd1 count: {}", rdd1.count());

执行结果:

203

203

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?