GRU + LSTM + ConvLSTM: Understanding Them Through Their Formulas

1. Understanding GRU (formulas + hand-written code)

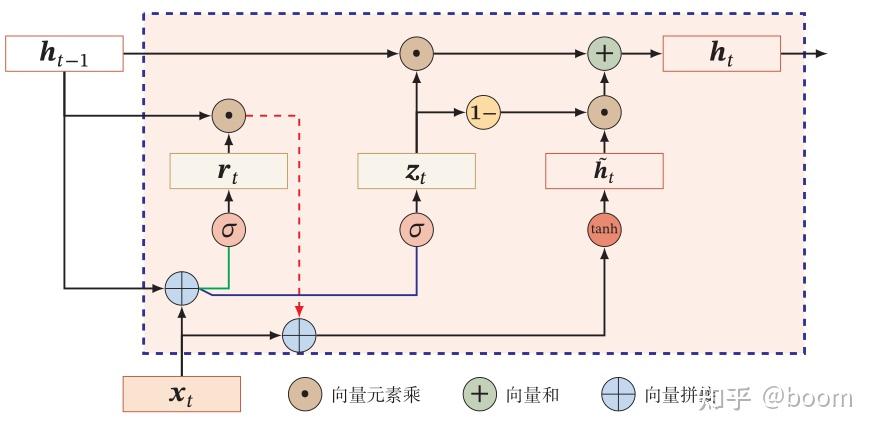

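1.1 GRU diagram

1.2 GRU formulas (with the dimensions of each variable)

Note: * is the element-wise (Hadamard) product, and · is matrix multiplication.

(1) Reset gate:

Xt: input vector, (inputdim, 1)

Ht-1: output vector of the previous GRU step, (hiddendim, 1), where hiddendim is the output dimension of the previous step

Wxr: (hiddendim, inputdim)

Whr: (hiddendim, hiddendim)

br: (hiddendim, 1)

rt: (hiddendim, 1)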

$$
r_t = \mathrm{sigmoid}(W_{xr} \cdot X_t + W_{hr} \cdot H_{t-1} + b_r)
$$
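The dimension bookkeeping for the reset gate can be checked numerically. The following is a minimal NumPy sketch; the sizes `input_dim = 4` and `hidden_dim = 3` and the random weights are illustrative assumptions, not values from the post.

```python
import numpy as np

def sigmoid(x):
    # Logistic function, applied element-wise.
    return 1.0 / (1.0 + np.exp(-x))

input_dim, hidden_dim = 4, 3                      # assumed sizes for illustration
rng = np.random.default_rng(0)

X_t = rng.standard_normal((input_dim, 1))         # Xt:   (inputdim, 1)
H_prev = rng.standard_normal((hidden_dim, 1))     # Ht-1: (hiddendim, 1)
W_xr = rng.standard_normal((hidden_dim, input_dim))    # Wxr: (hiddendim, inputdim)
W_hr = rng.standard_normal((hidden_dim, hidden_dim))   # Whr: (hiddendim, hiddendim)
b_r = np.zeros((hidden_dim, 1))                   # br:   (hiddendim, 1)

# "·" in the formula is matrix multiplication, written "@" in NumPy.
r_t = sigmoid(W_xr @ X_t + W_hr @ H_prev + b_r)
print(r_t.shape)  # (3, 1)
```

Both matrix products produce a (hiddendim, 1) column, so the sum with br is well defined and rt has one entry in (0, 1) per hidden unit.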

(2) Update gate:

Wxz: (hiddendim, inputdim)

Whz: (hiddendim, hiddendim)

bz: (hiddendim, 1)

zt: (hiddendim, 1)

$$
z_t = \mathrm{sigmoid}(W_{xz} \cdot X_t + W_{hz} \cdot H_{t-1} + b_z)
$$

(3) Candidate hidden state, hat:

Wxh: (hiddendim, inputdim)

Whh: (hiddendim, hiddendim)

bn: (hiddendim, 1)

hat: (hiddendim, 1)

$$
\mathrm{hat} = \tanh(W_{xh} \cdot X_t + W_{hh} \cdot (r_t * H_{t-1}) + b_n)
$$

(4) Output:

Ht: (hiddendim, 1)

$$
H_t = z_t * H_{t-1} + (1 - z_t) * \mathrm{hat}
$$

1.3 GRU by hand

https://gitee.com/Hz092811/neural-network/blob/master/%E6%89%8B%E5%86%99GRU.ipynb
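As a complement to the linked notebook, here is a minimal NumPy sketch of one GRU step that follows formulas (1) to (4) of section 1.2 term by term. It is not the notebook's code: the helper names `init_gru_params` and `gru_step`, the parameter dictionary layout, the small random initialization, and the sizes are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru_params(input_dim, hidden_dim, seed=0):
    # Hypothetical helper: small random weights, zero biases.
    rng = np.random.default_rng(seed)
    p = {}
    for gate in ("r", "z", "h"):
        p["W_x" + gate] = 0.1 * rng.standard_normal((hidden_dim, input_dim))
        p["W_h" + gate] = 0.1 * rng.standard_normal((hidden_dim, hidden_dim))
    p["b_r"] = np.zeros((hidden_dim, 1))
    p["b_z"] = np.zeros((hidden_dim, 1))
    p["b_n"] = np.zeros((hidden_dim, 1))
    return p

def gru_step(X_t, H_prev, p):
    # (1) reset gate
    r_t = sigmoid(p["W_xr"] @ X_t + p["W_hr"] @ H_prev + p["b_r"])
    # (2) update gate
    z_t = sigmoid(p["W_xz"] @ X_t + p["W_hz"] @ H_prev + p["b_z"])
    # (3) candidate state "hat": the reset gate scales H_prev element-wise
    H_hat = np.tanh(p["W_xh"] @ X_t + p["W_hh"] @ (r_t * H_prev) + p["b_n"])
    # (4) output: element-wise blend of the old state and the candidate
    return z_t * H_prev + (1.0 - z_t) * H_hat

input_dim, hidden_dim = 4, 3                      # assumed sizes
params = init_gru_params(input_dim, hidden_dim)
H = np.zeros((hidden_dim, 1))                     # initial hidden state
sequence = np.random.default_rng(1).standard_normal((5, input_dim, 1))
for X_t in sequence:                              # unroll over 5 time steps
    H = gru_step(X_t, H, params)
print(H.shape)  # (3, 1)
```

Because Ht is a convex combination of Ht-1 and a tanh output, every entry stays inside (-1, 1) when the initial state is zero.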

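2. Understanding LSTM (formulas + hand-written code)

2.1 LSTM diagram

2.2 LSTM formulas

(1) Forget gate:

Xt: input vector, (inputdim, 1)

Ht-1: output vector of the previous LSTM step, (hiddendim, 1), where hiddendim is the output dimension of the previous step

Wf: (hiddendim, inputdim + hiddendim)

bf: (hiddendim, 1)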

$$
f_t = \mathrm{sigmoid}(W_f \cdot [X_t, H_{t-1}] + b_f)
$$
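The bracket [Xt, Ht-1] stacks the two column vectors into one (inputdim + hiddendim, 1) vector, which is why Wf has inputdim + hiddendim columns. This is the same computation as the GRU-style form with two separate matrices. A small NumPy sketch shows the equivalence; the names `W_xf` and `W_hf` for the two column blocks of Wf, and the sizes, are assumptions introduced here.

```python
import numpy as np

input_dim, hidden_dim = 4, 3                      # assumed sizes
rng = np.random.default_rng(0)
X_t = rng.standard_normal((input_dim, 1))
H_prev = rng.standard_normal((hidden_dim, 1))
W_f = rng.standard_normal((hidden_dim, input_dim + hidden_dim))

# [Xt, Ht-1]: vertical stacking of the two column vectors.
concat = np.vstack([X_t, H_prev])                 # (inputdim + hiddendim, 1)

# Split Wf into the block acting on Xt and the block acting on Ht-1.
W_xf, W_hf = W_f[:, :input_dim], W_f[:, input_dim:]

lhs = W_f @ concat                                # concatenated form
rhs = W_xf @ X_t + W_hf @ H_prev                  # two-matrix form
print(np.allclose(lhs, rhs))  # True
```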

(2) Input gate (update gate):

Xt: input vector, (inputdim, 1)

Ht-1: output vector of the previous LSTM step, (hiddendim, 1)

Wi: (hiddendim, inputdim + hiddendim)

bi: (hiddendim, 1)

$$
i_t = \mathrm{sigmoid}(W_i \cdot [X_t, H_{t-1}] + b_i)
$$

(3) Output gate:

Xt: input vector, (inputdim, 1)

Ht-1: output vector of the previous LSTM step, (hiddendim, 1)

Wo: (hiddendim, inputdim + hiddendim)

bo: (hiddendim, 1)

$$
o_t = \mathrm{sigmoid}(W_o \cdot [X_t, H_{t-1}] + b_o)
$$

(4) Cell state vector:

ft: (hiddendim, 1)

ct-1: (hiddendim, 1)

it: (hiddendim, 1)

ct: (hiddendim, 1)

$$
c_t = f_t * c_{t-1} + i_t * \tanh(W_c \cdot [X_t, H_{t-1}] + b_c)
$$

(5) Output:

ht: (hiddendim, 1)

ot: (hiddendim, 1)

ct: (hiddendim, 1)

$$
h_t = o_t * \tanh(c_t)
$$
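The five LSTM formulas above can be put together into a single time step. The following is a minimal NumPy sketch under stated assumptions: the helper names `init_lstm_params` and `lstm_step`, the dictionary layout, the initialization, and the sizes are illustrative and do not come from the post.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_lstm_params(input_dim, hidden_dim, seed=0):
    # Hypothetical helper: one (hiddendim, inputdim + hiddendim) matrix per gate.
    rng = np.random.default_rng(seed)
    p = {}
    for gate in ("f", "i", "o", "c"):
        p["W_" + gate] = 0.1 * rng.standard_normal((hidden_dim, input_dim + hidden_dim))
        p["b_" + gate] = np.zeros((hidden_dim, 1))
    return p

def lstm_step(X_t, H_prev, c_prev, p):
    xh = np.vstack([X_t, H_prev])                      # [Xt, Ht-1]
    f_t = sigmoid(p["W_f"] @ xh + p["b_f"])            # (1) forget gate
    i_t = sigmoid(p["W_i"] @ xh + p["b_i"])            # (2) input gate
    o_t = sigmoid(p["W_o"] @ xh + p["b_o"])            # (3) output gate
    # (4) cell state: keep part of the old state, add gated new content
    c_t = f_t * c_prev + i_t * np.tanh(p["W_c"] @ xh + p["b_c"])
    h_t = o_t * np.tanh(c_t)                           # (5) output
    return h_t, c_t

input_dim, hidden_dim = 4, 3                           # assumed sizes
params = init_lstm_params(input_dim, hidden_dim)
h = np.zeros((hidden_dim, 1))
c = np.zeros((hidden_dim, 1))
sequence = np.random.default_rng(1).standard_normal((5, input_dim, 1))
for X_t in sequence:                                   # unroll over 5 time steps
    h, c = lstm_step(X_t, h, c, params)
print(h.shape, c.shape)  # (3, 1) (3, 1)
```

Unlike the GRU, the step carries two vectors forward: the cell state ct and the output ht.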

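3. Understanding ConvLSTM (formulas)

Key point: ConvLSTM replaces the matrix multiplications of LSTM with convolutions. Its input is a three-dimensional tensor, and convolution is used to extract spatial features.

** denotes the convolution operation, and W holds the convolution kernel parameters. As before, * is the element-wise product.

(1) Forget gate:

Xt: input tensor, (n, m, inputdim)

Ht-1: (n, m, hiddendim)

Wf: convolution kernel

bf: (n, m, hiddendim)

ft: (n, m, hiddendim)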

$$
f_t = \mathrm{sigmoid}(W_f \mathbin{**} [X_t, H_{t-1}] + b_f)
$$

(2) Input gate (update gate):

Xt: input tensor, (n, m, inputdim)

Ht-1: (n, m, hiddendim)

Wi: convolution kernel

bi: (n, m, hiddendim)

it: (n, m, hiddendim)

$$
i_t = \mathrm{sigmoid}(W_i \mathbin{**} [X_t, H_{t-1}] + b_i)
$$

(3) Output gate:

Xt: input tensor, (n, m, inputdim)

Ht-1: (n, m, hiddendim)

Wo: convolution kernel

bo: (n, m, hiddendim)

ot: (n, m, hiddendim)

$$
o_t = \mathrm{sigmoid}(W_o \mathbin{**} [X_t, H_{t-1}] + b_o)
$$

(4) Cell state:

ft: (n, m, hiddendim)

ct-1: (n, m, hiddendim)

it: (n, m, hiddendim)

ct: (n, m, hiddendim)

$$
c_t = f_t * c_{t-1} + i_t * \tanh(W_c \mathbin{**} [X_t, H_{t-1}] + b_c)
$$

(5) Output:

ht: (n, m, hiddendim)

ot: (n, m, hiddendim)

ct: (n, m, hiddendim)

$$
h_t = o_t * \tanh(c_t)
$$
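The ConvLSTM formulas can be sketched the same way as the LSTM ones, with each matrix product replaced by a convolution over the channel-wise concatenation of Xt and Ht-1. The code below is a minimal NumPy sketch under several assumptions that the post does not specify: a 3x3 kernel of shape (k, k, inputdim + hiddendim, hiddendim), zero padding so that the n x m spatial size is preserved, and convolution implemented as cross-correlation (the convention deep-learning frameworks use). The helper names `conv2d_same`, `init_convlstm_params`, and `convlstm_step` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x, w):
    """Zero-padded cross-correlation that keeps the spatial size.
    x: (n, m, c_in); w: (k, k, c_in, c_out) with odd k; returns (n, m, c_out)."""
    k = w.shape[0]
    pad = k // 2
    n, m, _ = x.shape
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))   # pad only the spatial axes
    out = np.zeros((n, m, w.shape[3]))
    for di in range(k):
        for dj in range(k):
            # Shifted window (n, m, c_in) times kernel slice (c_in, c_out).
            out += xp[di:di + n, dj:dj + m, :] @ w[di, dj]
    return out

def init_convlstm_params(n, m, input_dim, hidden_dim, k=3, seed=0):
    rng = np.random.default_rng(seed)
    p = {}
    for gate in ("f", "i", "o", "c"):
        p["W_" + gate] = 0.1 * rng.standard_normal(
            (k, k, input_dim + hidden_dim, hidden_dim))
        p["b_" + gate] = np.zeros((n, m, hidden_dim))  # bias shape as listed in the post
    return p

def convlstm_step(X_t, H_prev, c_prev, p):
    xh = np.concatenate([X_t, H_prev], axis=-1)        # [Xt, Ht-1] along channels
    f_t = sigmoid(conv2d_same(xh, p["W_f"]) + p["b_f"])    # (1) forget gate
    i_t = sigmoid(conv2d_same(xh, p["W_i"]) + p["b_i"])    # (2) input gate
    o_t = sigmoid(conv2d_same(xh, p["W_o"]) + p["b_o"])    # (3) output gate
    c_t = f_t * c_prev + i_t * np.tanh(conv2d_same(xh, p["W_c"]) + p["b_c"])  # (4)
    h_t = o_t * np.tanh(c_t)                               # (5) output
    return h_t, c_t

n, m, input_dim, hidden_dim = 6, 5, 2, 3               # assumed sizes
params = init_convlstm_params(n, m, input_dim, hidden_dim)
h = np.zeros((n, m, hidden_dim))
c = np.zeros((n, m, hidden_dim))
frames = np.random.default_rng(1).standard_normal((4, n, m, input_dim))
for X_t in frames:                                     # unroll over 4 frames
    h, c = convlstm_step(X_t, h, c, params)
print(h.shape, c.shape)  # (6, 5, 3) (6, 5, 3)
```

The gate logic is identical to the LSTM step; only the way the pre-activations are computed changes, so every gate and state keeps the (n, m, hiddendim) layout.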
