
I. What Is Hadoop?

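Hadoop is a distributed-systems infrastructure developed by the Apache Software Foundation. It lets users write distributed programs without knowing the low-level details of distribution, and makes full use of a cluster for high-speed computation and storage. Hadoop implements a distributed file system, the Hadoop Distributed File System (HDFS). HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware. It provides high-throughput access to application data and suits applications with very large data sets. HDFS relaxes some POSIX requirements so that data in the file system can be read as a stream (streaming access). The core of the Hadoop framework is HDFS plus MapReduce: HDFS provides storage for massive amounts of data, and MapReduce provides computation over that data.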
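To make the storage/computation split concrete, the MapReduce idea can be modelled in a few lines of plain Java: a map step turns each input line into (word, 1) pairs, and a reduce step groups the pairs by word and sums them. This is a single-process sketch with hypothetical class and method names. It is not Hadoop's Mapper/Reducer API, which runs these phases in parallel across the cluster.

```java
import java.util.AbstractMap;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Single-process model of MapReduce word counting (no Hadoop classes involved)
class WordCountSketch {

    // Map phase: split one input line into (word, 1) pairs
    static List<Map.Entry<String, Integer>> map(String line) {
        return Arrays.stream(line.trim().split("\\s+"))
                .filter(word -> !word.isEmpty())
                .<Map.Entry<String, Integer>>map(word -> new AbstractMap.SimpleEntry<>(word, 1))
                .collect(Collectors.toList());
    }

    // Shuffle + reduce phase: group the pairs by word and sum the counts
    static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            counts.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return counts;
    }

    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = lines.stream()
                .flatMap(line -> map(line).stream())
                .collect(Collectors.toList());
        return reduce(pairs);
    }

    public static void main(String[] args) {
        System.out.println(wordCount(Arrays.asList("hello world", "hello hadoop")));
        // prints {hadoop=1, hello=2, world=1}
    }
}
```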

II. Steps

1. Building an HDFS API project in IDEA with Maven

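1. Download Maven and extract it.
2. Configure the system environment variables (add Maven's `bin` directory to `Path`).
3. Verify the installation (`mvn -v`).
4. Set `localRepository` in Maven's `settings.xml`.
5. Test: run `mvn help:system`.
6. Associate Maven with IDEA.
7. Change the location of the `settings` file that IDEA uses.
8. Use Maven to create a standard Java project.
9. Start IDEA and import the project you just created.
10. Edit the project's `pom.xml` and add the Hadoop dependency.
11. Run `mvn clean install` again.
12. Download three configuration files from the Hadoop cluster and put them into the IDEA project.
13. Edit `hdfs-site.xml`, add content between the tags, and upload the file to the Hadoop cluster.
14. Edit the local hosts file in `C:\Windows\System32\drivers\etc`.
15. Add a system environment variable `HADOOP_USER_NAME` with the value `admin` (the user that logs in to Hadoop).
16. Change the OS login user name to the Hadoop login user name.
17. On the Hadoop cluster, change the HDFS permissions: `hadoop fs -chmod 777`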
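Steps 15 and 16 both control which user name the HDFS client presents. With simple (non-Kerberos) authentication, Hadoop's `UserGroupInformation` takes the name from the `HADOOP_USER_NAME` environment variable, then from a JVM system property of the same name, and otherwise falls back to the operating-system login user. The sketch below models that order in plain Java; `effectiveUser` is a hypothetical helper, not Hadoop code. It also suggests a per-program alternative to steps 15 and 16: calling `System.setProperty("HADOOP_USER_NAME", "admin")` before the first `FileSystem.get(...)`.

```java
import java.util.Map;
import java.util.Properties;

class HadoopUserSketch {

    // Models the simple-auth lookup: HADOOP_USER_NAME environment variable first,
    // then a JVM system property of the same name, then the OS login user
    static String effectiveUser(Map<String, String> env, Properties sysProps, String osUser) {
        String user = env.get("HADOOP_USER_NAME");
        if (user == null) {
            user = sysProps.getProperty("HADOOP_USER_NAME");
        }
        return user != null ? user : osUser;
    }

    public static void main(String[] args) {
        System.out.println(effectiveUser(System.getenv(), System.getProperties(),
                System.getProperty("user.name")));
    }
}
```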

2. Basic HDFS Operations

(1) The setUp and tearDown methods of the unit test

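```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream; // used by the @Test methods
import org.apache.hadoop.fs.FileStatus;         // used by the @Test methods
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class HDFSApp {

    public static final String HDFS_PATH = "hdfs://192.168.10.111:9000";

    Configuration configuration = null;
    FileSystem fileSystem = null;

    @Before
    public void setUp() throws Exception {
        System.out.println("HDFSApp.setUp()");
        // Assign the field (declaring a local variable here would shadow it)
        configuration = new Configuration();
        configuration.set("fs.defaultFS", HDFS_PATH);
        fileSystem = FileSystem.get(configuration);
    }

    @After
    public void tearDown() throws Exception {
        // Release the client before dropping the references
        if (fileSystem != null) {
            fileSystem.close();
        }
        fileSystem = null;
        configuration = null;
        System.out.println("HDFSApp.tearDown()");
    }

    // The @Test methods in the next section belong inside this class
}
```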
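`setUp()` points `fs.defaultFS` at the NameNode, so paths in the tests work with or without the `hdfs://` prefix. A path without a scheme borrows the scheme and authority from `fs.defaultFS`, and a relative path is placed under the user's HDFS home directory, `/user/<user name>` (one reason `HADOOP_USER_NAME` matters). The plain-Java sketch below models that rule with `java.net.URI`; `qualify` is a hypothetical helper, not Hadoop's `Path.makeQualified`.

```java
import java.net.URI;

class PathQualifySketch {

    // Hypothetical helper modelling how a client completes a path:
    // scheme/authority come from fs.defaultFS, relative paths go under /user/<user>
    static URI qualify(String defaultFs, String user, String path) {
        URI uri = URI.create(path);
        if (uri.getScheme() != null) {
            return uri;                          // already fully qualified
        }
        URI home = URI.create(defaultFs + "/user/" + user + "/");
        return home.resolve(uri);                // "/x" keeps its path, "x" lands under home
    }

    public static void main(String[] args) {
        String fs = "hdfs://192.168.10.111:9000";
        System.out.println(qualify(fs, "admin", "/user/test"));
        // prints hdfs://192.168.10.111:9000/user/test
        System.out.println(qualify(fs, "admin", "zhanguoqiang6.seq"));
        // prints hdfs://192.168.10.111:9000/user/admin/zhanguoqiang6.seq
    }
}
```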

(2) Operating on HDFS files with the Java API

```java
/* Create a directory */
@Test
public void mkdir() throws Exception {
    fileSystem.mkdirs(new Path("hdfs://192.168.10.111:9000/user/test"));
}
```

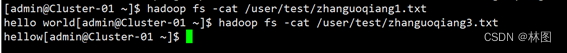

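```java
/* Create a file */
@Test
public void create() throws Exception {
    // try-with-resources closes the stream even if write() throws
    try (FSDataOutputStream output = fileSystem.create(
            new Path("hdfs://192.168.10.111:9000/user/test/zhanguoqiang1.txt"))) {
        // Encode explicitly instead of relying on the platform default charset
        output.write("hello world".getBytes(java.nio.charset.StandardCharsets.UTF_8));
    }
}
```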
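Because `FSDataOutputStream` is an ordinary `java.io.OutputStream` (it extends `DataOutputStream`), the write logic in `create()` can be pulled into a helper and checked locally against a `ByteArrayOutputStream`, without a cluster. A sketch; `writeText` is a hypothetical name. In the test it would be called as `writeText(fileSystem.create(path), "hello world")`.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

class StreamWriteSketch {

    // Accepts any OutputStream; FSDataOutputStream qualifies because it
    // extends java.io.DataOutputStream
    static void writeText(OutputStream out, String text) throws IOException {
        try (OutputStream o = out) {                        // closed even if write() throws
            o.write(text.getBytes(StandardCharsets.UTF_8)); // explicit charset, not the platform default
        }
    }

    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        writeText(buffer, "hello world");
        System.out.println(buffer.size()); // prints 11
    }
}
```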

```java
/* Rename a file */
@Test
public void rename() throws Exception {
    Path oldPath = new Path("hdfs://192.168.10.111:9000/user/test/zhanguoqiang1.txt");
    Path newPath = new Path("hdfs://192.168.10.111:9000/user/test/zhanguoqiang2.txt");
    // rename() reports many failures (e.g. a missing source) by returning false
    System.out.println(fileSystem.rename(oldPath, newPath));
}
```

```java
/* Upload a local file to HDFS */
@Test
public void copyFromLocalFile() throws Exception {
    Path src = new Path("D:/hadoop实战/zhanguoqiang3.txt");         // local Windows file
    Path dst = new Path("hdfs://192.168.10.111:9000/user/test/"); // target HDFS directory
    fileSystem.copyFromLocalFile(src, dst);
}
```

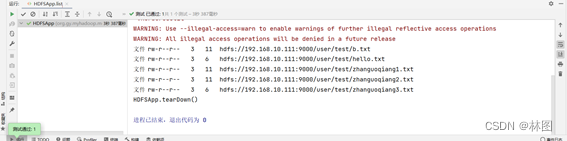

```java
/* List all files in a directory */
@Test
public void list() throws Exception {
    FileStatus[] listStatus = fileSystem.listStatus(new Path("hdfs://192.168.10.111:9000/user/test"));
    for (FileStatus fileStatus : listStatus) {
        String isDir = fileStatus.isDirectory() ? "directory" : "file"; // file or directory
        String permission = fileStatus.getPermission().toString();      // permissions
        short replication = fileStatus.getReplication();                // replication factor
        long len = fileStatus.getLen();                                  // length in bytes
        String path = fileStatus.getPath().toString();                  // full path
        System.out.println(isDir + "\t" + permission + "\t" + replication + "\t" + len + "\t" + path);
    }
}
```
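The permission column printed by `list()` uses the `rwx` form of `FsPermission.toString()`, while `hadoop fs -chmod 777` in step 17 uses octal. The sketch below converts between the two; `toSymbolic` is a hypothetical helper and ignores the sticky bit. `777` becomes `rwxrwxrwx`, i.e. read, write and execute for owner, group and others.

```java
class PermissionSketch {

    // Convert an octal mode such as 0777 or 0755 into rwx form (e.g. "rwxr-xr-x")
    static String toSymbolic(int octalMode) {
        String symbols = "rwx";
        StringBuilder sb = new StringBuilder(9);
        for (int shift = 6; shift >= 0; shift -= 3) {      // owner, group, other
            int bits = (octalMode >> shift) & 7;
            for (int i = 0; i < 3; i++) {
                // bit 4 = read, 2 = write, 1 = execute
                sb.append((bits & (4 >> i)) != 0 ? symbols.charAt(i) : '-');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(toSymbolic(0777)); // rwxrwxrwx
        System.out.println(toSymbolic(0755)); // rwxr-xr-x
        System.out.println(toSymbolic(0644)); // rw-r--r--
    }
}
```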

Run screenshot:

3. SequenceFile

(1) Writing a SequenceFile

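```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.SequenceFile;
import org.apache.hadoop.io.Text;

public class SequenceFileWriter {

    private static Configuration configuration = new Configuration();
    private static String url = "hdfs://192.168.10.111:9000";
    private static String[] data = {"a,b,c,d,e,f,g", "e,f,g,h,i,j,k", "l,m,n,o,p,q,r,s", "t,u,v,w,x,y,z"};

    public static void main(String[] args) throws Exception {
        FileSystem fs = FileSystem.get(URI.create(url), configuration);
        // Relative path: resolved under the HDFS home directory /user/<user name>.
        // Same name the reader below opens (the original wrote "zhangguoqiang6.seq").
        Path outputPath = new Path("zhanguoqiang6.seq");
        IntWritable key = new IntWritable();
        Text value = new Text();
        // Uncompressed writer (this overload is deprecated in newer Hadoop but still available)
        SequenceFile.Writer writer = SequenceFile.createWriter(fs, configuration, outputPath,
                IntWritable.class, Text.class, SequenceFile.CompressionType.NONE);
        try {
            for (int i = 0; i < 10; i++) {
                key.set(10 - i);
                value.set(data[i % data.length]);
                writer.append(key, value);
            }
        } finally {
            IOUtils.closeStream(writer);
        }
        System.out.println("ok");
    }
}
```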
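Because `key.set(10 - i)` counts down, the file receives keys 10 down to 1 while the values cycle through the strings in `data`. A SequenceFile keeps records in append order and does not require sorted keys, so reading it back yields exactly this order. This plain-Java sketch (hypothetical names) rebuilds the record list without touching HDFS.

```java
import java.util.ArrayList;
import java.util.List;

class SequenceRecordsSketch {

    // Rebuild, as "key<TAB>value" strings, the records the writer loop appends
    static List<String> records(String[] data, int count) {
        List<String> out = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            out.add((count - i) + "\t" + data[i % data.length]);   // key counts down from `count`
        }
        return out;
    }

    public static void main(String[] args) {
        String[] data = {"a,b,c,d,e,f,g", "e,f,g,h,i,j,k", "l,m,n,o,p,q,r,s", "t,u,v,w,x,y,z"};
        records(data, 10).forEach(System.out::println);
    }
}
```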

Run screenshot:

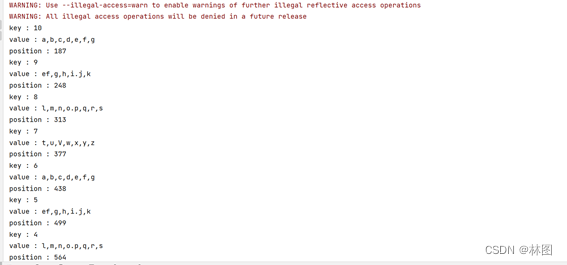

(2) Reading a SequenceFile

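```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.SequenceFile;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.util.ReflectionUtils;

public class SequenceFileReader {

    private static Configuration configuration = new Configuration();
    private static String url = "hdfs://192.168.10.111:9000";

    public static void main(String[] args) throws Exception {
        FileSystem fs = FileSystem.get(URI.create(url), configuration);
        Path inputPath = new Path("zhanguoqiang6.seq");
        SequenceFile.Reader reader = new SequenceFile.Reader(fs, inputPath, configuration);
        try {
            // Instantiate the key/value types recorded in the file header
            Writable key = (Writable) ReflectionUtils.newInstance(reader.getKeyClass(), configuration);
            Writable value = (Writable) ReflectionUtils.newInstance(reader.getValueClass(), configuration);
            // next() refills the same objects for each record
            while (reader.next(key, value)) {
                System.out.println("key : " + key);
                System.out.println("value : " + value);
            }
        } finally {
            IOUtils.closeStream(reader);
        }
    }
}
```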
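The reader does not need to know `IntWritable`/`Text` in advance: a SequenceFile header stores the key and value class names, and `ReflectionUtils.newInstance` creates an empty instance of each through its no-argument constructor. That is also why custom `Writable` types need a no-argument constructor. The plain-JDK sketch below models only the instantiation step; `newInstanceOf` is a hypothetical helper, and Hadoop's version additionally passes the `Configuration` to configurable objects.

```java
class HeaderTypesSketch {

    // Turn a class name (as stored in a file header) into an empty, reusable
    // instance via its no-argument constructor
    static Object newInstanceOf(String className) throws ReflectiveOperationException {
        Class<?> type = Class.forName(className);
        return type.getDeclaredConstructor().newInstance();
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        Object value = newInstanceOf("java.lang.StringBuilder");
        System.out.println(value.getClass().getName()); // prints java.lang.StringBuilder
    }
}
```

A class with no such constructor (for example `java.lang.Integer`) makes `getDeclaredConstructor()` throw `NoSuchMethodException`.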

Run screenshot:

(3) Writing a SequenceFile with compression

```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.SequenceFile;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.io.compress.BZip2Codec;
import org.apache.hadoop.util.ReflectionUtils;

public class SequenceFileCompression {

    private static Configuration configuration = new Configuration();
    private static String url = "hdfs://192.168.10.111:9000";
    private static String[] data = {"a,b,c,d,e,f,g", "e,f,g,h,i,j,k", "l,m,n,o,p,q,r,s", "t,u,v,w,x,y,z"};

    public static void main(String[] args) throws Exception {
        FileSystem fs = FileSystem.get(URI.create(url), configuration);
        Path path = new Path("zhanguoqiang5.seq");

        // Write with RECORD compression: each value is compressed on its own with BZip2
        IntWritable key = new IntWritable();
        Text value = new Text();
        // newInstance() also hands the Configuration to the codec
        BZip2Codec codec = ReflectionUtils.newInstance(BZip2Codec.class, configuration);
        SequenceFile.Writer writer = SequenceFile.createWriter(fs, configuration, path,
                IntWritable.class, Text.class, SequenceFile.CompressionType.RECORD, codec);
        try {
            for (int i = 0; i < 10; i++) {
                key.set(10 - i);
                value.set(data[i % data.length]);
                writer.append(key, value);
            }
        } finally {
            IOUtils.closeStream(writer);
        }

        // Read it back; the codec is recorded in the file header
        SequenceFile.Reader reader = new SequenceFile.Reader(fs, path, configuration);
        try {
            Writable k = (Writable) ReflectionUtils.newInstance(reader.getKeyClass(), configuration);
            Writable v = (Writable) ReflectionUtils.newInstance(reader.getValueClass(), configuration);
            while (reader.next(k, v)) {
                System.out.println("key : " + k);
                System.out.println("value : " + v);
                System.out.println("position : " + reader.getPosition());
            }
        } finally {
            IOUtils.closeStream(reader);
        }
    }
}
```
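`CompressionType.RECORD` compresses each value separately and leaves keys uncompressed, while `CompressionType.BLOCK` buffers many records and compresses keys and values in blocks. With records as small as these, per-record compression mostly adds overhead. The sketch below shows the effect on values alone, using the JDK's `Deflater` as a stand-in for `BZip2Codec` (an assumption made so it runs without Hadoop); all names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.Deflater;

class CompressionTypeSketch {

    // Compress one byte array with java.util.zip's Deflater; return the compressed size
    static int deflatedSize(byte[] input) {
        Deflater deflater = new Deflater();
        deflater.setInput(input);
        deflater.finish();
        byte[] buf = new byte[256];
        int total = 0;
        while (!deflater.finished()) {
            total += deflater.deflate(buf);
        }
        deflater.end();
        return total;
    }

    // RECORD-style: every value compressed on its own
    static int recordStyle(String[] values) {
        int total = 0;
        for (String v : values) {
            total += deflatedSize(v.getBytes(StandardCharsets.UTF_8));
        }
        return total;
    }

    // BLOCK-style: all values buffered and compressed together
    static int blockStyle(String[] values) {
        StringBuilder block = new StringBuilder();
        for (String v : values) {
            block.append(v);
        }
        return deflatedSize(block.toString().getBytes(StandardCharsets.UTF_8));
    }

    // Cycle through `data` the way the writer loop does
    static String[] cycle(String[] data, int count) {
        String[] out = new String[count];
        for (int i = 0; i < count; i++) {
            out[i] = data[i % data.length];
        }
        return out;
    }

    public static void main(String[] args) {
        String[] values = cycle(new String[]{"a,b,c,d,e,f,g", "e,f,g,h,i,j,k",
                "l,m,n,o,p,q,r,s", "t,u,v,w,x,y,z"}, 10);
        System.out.println("record-style bytes: " + recordStyle(values));
        System.out.println("block-style bytes:  " + blockStyle(values));
    }
}
```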

Run screenshot:

4. MapFile

(1) Writing a MapFile

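```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.MapFile;
import org.apache.hadoop.io.Text;

public class MapFileWriter {

    static Configuration configuration = new Configuration();
    private static String url = "hdfs://192.168.10.111:9000";

    public static void main(String[] args) throws Exception {
        FileSystem fs = FileSystem.get(URI.create(url), configuration);
        // A MapFile is a directory holding a sorted "data" SequenceFile and an "index" file
        Path outPath = new Path("hdfs://192.168.10.111:9000/user/test/zhanguoqiang4.map");
        Text key = new Text("mymapkey");
        Text value = new Text("mymapvalue");
        MapFile.Writer writer = new MapFile.Writer(configuration, fs,
                outPath.toString(), Text.class, Text.class);
        try {
            // Keys must be appended in ascending order
            writer.append(key, value);
        } finally {
            IOUtils.closeStream(writer);
        }
    }
}
```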
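Only one key is written here, so ordering never comes up. With more keys, `MapFile.Writer.append` rejects a key that sorts before the previous one with an `IOException` ("key out of order"); equal keys are accepted. The SequenceFile loop with keys 10 down to 1 would therefore fail against a MapFile. A plain-Java sketch of that check, assuming ASCII keys (Hadoop compares `Text` as UTF-8 bytes, which can differ from `String.compareTo` for other characters); the class is hypothetical and throws `IllegalArgumentException` instead of `IOException`.

```java
class SortedAppendSketch {

    private String lastKey;   // null until the first append

    // Mirror of the MapFile rule: each key must not sort before the previous one
    void append(String key) {
        if (lastKey != null && key.compareTo(lastKey) < 0) {
            throw new IllegalArgumentException("key out of order: " + key + " after " + lastKey);
        }
        lastKey = key;
    }

    public static void main(String[] args) {
        SortedAppendSketch writer = new SortedAppendSketch();
        writer.append("a");
        writer.append("b");
        writer.append("b");          // equal keys are allowed
        try {
            writer.append("a");      // smaller than "b": rejected
        } catch (IllegalArgumentException e) {
            System.out.println(e.getMessage()); // prints key out of order: a after b
        }
    }
}
```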

Run screenshot:

(2) Reading a MapFile

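```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.MapFile;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.util.ReflectionUtils;

public class MapFileReader {

    static Configuration configuration = new Configuration();
    private static String url = "hdfs://192.168.10.111:9000";

    public static void main(String[] args) throws Exception {
        FileSystem fs = FileSystem.get(URI.create(url), configuration);
        // Use the same fully qualified path as MapFileWriter; the original relative
        // name "zhanguoqiang4.map" would not point at /user/test
        Path inPath = new Path(url + "/user/test/zhanguoqiang4.map");
        MapFile.Reader reader = new MapFile.Reader(fs, inPath.toString(), configuration);
        try {
            WritableComparable key = (WritableComparable) ReflectionUtils.newInstance(reader.getKeyClass(), configuration);
            Writable value = (Writable) ReflectionUtils.newInstance(reader.getValueClass(), configuration);
            while (reader.next(key, value)) {
                System.out.println(key);
                System.out.println(value);
            }
        } finally {
            IOUtils.closeStream(reader);
        }
    }
}
```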
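Besides scanning with `next()`, `MapFile.Reader` can look a key up directly with `get(key, value)`. This works because the index file holds every N-th key (the default index interval is 128) together with its offset in the data file: the reader binary-searches the in-memory index, seeks, then scans forward. The sketch below models that lookup over in-memory lists; `SparseIndexSketch` is hypothetical and not Hadoop's implementation.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class SparseIndexSketch {

    private final List<String> keys;                              // sorted "data" keys
    private final List<String> values;
    private final List<String> indexKeys = new ArrayList<>();     // every interval-th key
    private final List<Integer> indexPos = new ArrayList<>();     // its position in the data

    SparseIndexSketch(List<String> sortedKeys, List<String> values, int interval) {
        this.keys = sortedKeys;
        this.values = values;
        for (int i = 0; i < sortedKeys.size(); i += interval) {
            indexKeys.add(sortedKeys.get(i));
            indexPos.add(i);
        }
    }

    // Binary-search the index for the last entry <= key, then scan forward in the data
    String get(String key) {
        int slot = Collections.binarySearch(indexKeys, key);
        if (slot < 0) {
            slot = -slot - 2;            // insertion point - 1 = last index key below `key`
            if (slot < 0) {
                return null;             // key sorts before the first entry
            }
        }
        for (int i = indexPos.get(slot); i < keys.size(); i++) {
            int cmp = keys.get(i).compareTo(key);
            if (cmp == 0) {
                return values.get(i);
            }
            if (cmp > 0) {
                break;                   // passed the spot where the key would be
            }
        }
        return null;
    }
}
```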

Run screenshot:

Problems Encountered and Solutions

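Problem 1: after creating the Maven project from the command line, `mvn clean install` fails with an error.

Solution: add the following to the `<properties>` section of the pom file (use your own JDK version; mine is 9):

```xml
<properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <maven.compiler.source>9</maven.compiler.source>
    <maven.compiler.target>9</maven.compiler.target>
</properties>
```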
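If you are unsure which number to put in `maven.compiler.source`/`maven.compiler.target`, the running JVM reports it in the `java.specification.version` system property (for example `9` on JDK 9, `1.8` on JDK 8). Note that Maven may use a different JDK than your IDE; `mvn -v` prints the one Maven uses. A tiny sketch (hypothetical class name):

```java
class JavaVersionSketch {

    // Specification version of the running JVM, e.g. "9", "11" or "1.8"
    static String compilerRelease() {
        return System.getProperty("java.specification.version");
    }

    public static void main(String[] args) {
        System.out.println(compilerRelease());
    }
}
```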

Problem 2: running the program fails with the error

`Exception in thread "main" java.lang.NullPointerException`

Solution: download `hadoop.dll` and `winutils.exe`, put them into `C:\Windows\System32`, and run the program again.

Appendix: the hadoop.dll file

Link: https://pan.baidu.com/s/1LxwYfFyuTLBoKe1ImmTAWg

Extraction code: sx40
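An alternative commonly used for `winutils.exe`: Hadoop's `Shell` class looks for `<Hadoop home>\bin\winutils.exe`, taking the home directory from the `hadoop.home.dir` system property or, if that is unset, the `HADOOP_HOME` environment variable. So the file can live in a `bin` folder of your choice, with `HADOOP_HOME` set (or `System.setProperty("hadoop.home.dir", ...)` called before using `FileSystem`). The plain-Java sketch below checks that layout; it models the lookup order and is not Hadoop code.

```java
import java.io.File;

class WinutilsCheckSketch {

    // Lookup order modelled on Hadoop's Shell: the hadoop.home.dir system
    // property first, then the HADOOP_HOME environment variable
    static String hadoopHome() {
        String home = System.getProperty("hadoop.home.dir");
        return home != null ? home : System.getenv("HADOOP_HOME");
    }

    // True when <home>/bin/winutils.exe exists as a regular file
    static boolean hasWinutils(String home) {
        return home != null && new File(new File(home, "bin"), "winutils.exe").isFile();
    }

    public static void main(String[] args) {
        String home = hadoopHome();
        System.out.println("Hadoop home: " + home + ", winutils found: " + hasWinutils(home));
    }
}
```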
