2. Web Usage Mining and Recommender Systems

Discovery of patterns in click-streams and associated data collected or generated as a result of user interactions with one or more web sites or Web 2.0 applications.

Typical Sources of Data

- web server access logs

- e-commerce and product-oriented user events (e.g., shopping cart changes, ad or product click-throughs, purchases)

- user events on social network sites (e.g., likes, posts, comments)

Associated Data

- page attributes, page content, site structure

- additional domain knowledge and demographic data

- user profiles or user ratings

2.1 Usage Data Collection

Sever-Side Data Collection: web server logs + application logs

Client-Side Data Collection: page tagging + via providing the application + additional collectable data (mouse movements, keyboards strokes, size of browser window)

Recording Users Entering(HTTP Referer) and Leaving the Site(URL Rewriting)

2.2 Data Preparation

Data Cleansing -> Data Integration -> Data Transformation -> Data Reduction

Robot Detection

(1) Identification via HTTP User-Agent Header

(2) Classification using Behavioral Features

Data Transformation: Mechanisms for User Identification

(1)->(5) Privacy Concerns: Low -> high

(1) IP Address + Agent

(2) Embedded Session Ids

(3) Registration

(4) Cookie

(5) Software Agents

Data Transformation: Mechanisms for Session Identification

Time & Navigation

Data Transformation: Data Aggregation

in order to generate features

Example: User Pageview Matrix -> discover user groups (cluster analysis)

Data Transformation: Semantic Enrichment

Associate each requested page with one or more topics/ concepts to better understand user behavior.

Aggregation Level: Page Level & Session Level

Concepts can be part of a concept hierarchy or ontology

Example:

User Pageview Matrix + Page Topic Matrix = User Topic Matrix

2.3 Web Usage Mining Tasks

- Content Personalization

- Personalized content & Navigation elements

- Techniques: Classification, Re-Ranking, Sequential Pattern Mining, Recommender Systems

- Marketing

- Discovery of associated products for cross-selling (Association rules, Sequential Pattern Mining)

- Discovery of associated products in different price categories for up-selling (Association rules, Sequential Pattern Mining)

- Identification of Customer Groups for Target Marketing (Clustering, Classification)

- Personalized recommendations

2.4 Recommender Systems

help to match user with items

can be seen as a function

input: User model + Items -> output: Rating/Relevance Score

When does a recommender do a good job?

From users’ perspective: serendipity(accident of finding something good while not specifically searching for it)

From merchants’ perspectives: increase the sale of high-revenue items

2.4.1 Paradigms of Recommender Systems

Content-based: Show me more of the same what I’ve liked

input: User profile & contextual parameters + Product features

Collaborative: Tell me what’s popular among my peers

input: User profile & contextual parameters + Community data

Personalized Recommendation

(1)Demographic Recommendation

(2)Contextual Recommendation (Location/Time)

Hybrid: Combination of various inputs and/or composition of different mechanisms

input: User profile & contextual parameters + Product features + Community data

2.4.2 Collaborative Filtering

Basic Assumptions:

User give ratings to catalog items. Customers who had similar tastes in the past, will have similar tastes in the future

Input: Matrix of given user-item rating

Output types: (1) (Numerical) prediction indicating to what degree the current user will like a certain item (2) Ranking: Top-k list of recommended items

Pros: well-understand, requires no explicit item descriptions or demographic user profiles

Cons: require user community to give enough ratings; no exploitation of other scources of recommendation knowledge; cold start problem => ask/force users to rate a set of itmes(unrealistic) + use another method or combination of methods (e.g., content-based, demographic or simply non-personalized) until enough ratings are collected (see hybrid recommendation)

2.4.2.1 User-Based Nearest-Neighbor Collaborative Filtering

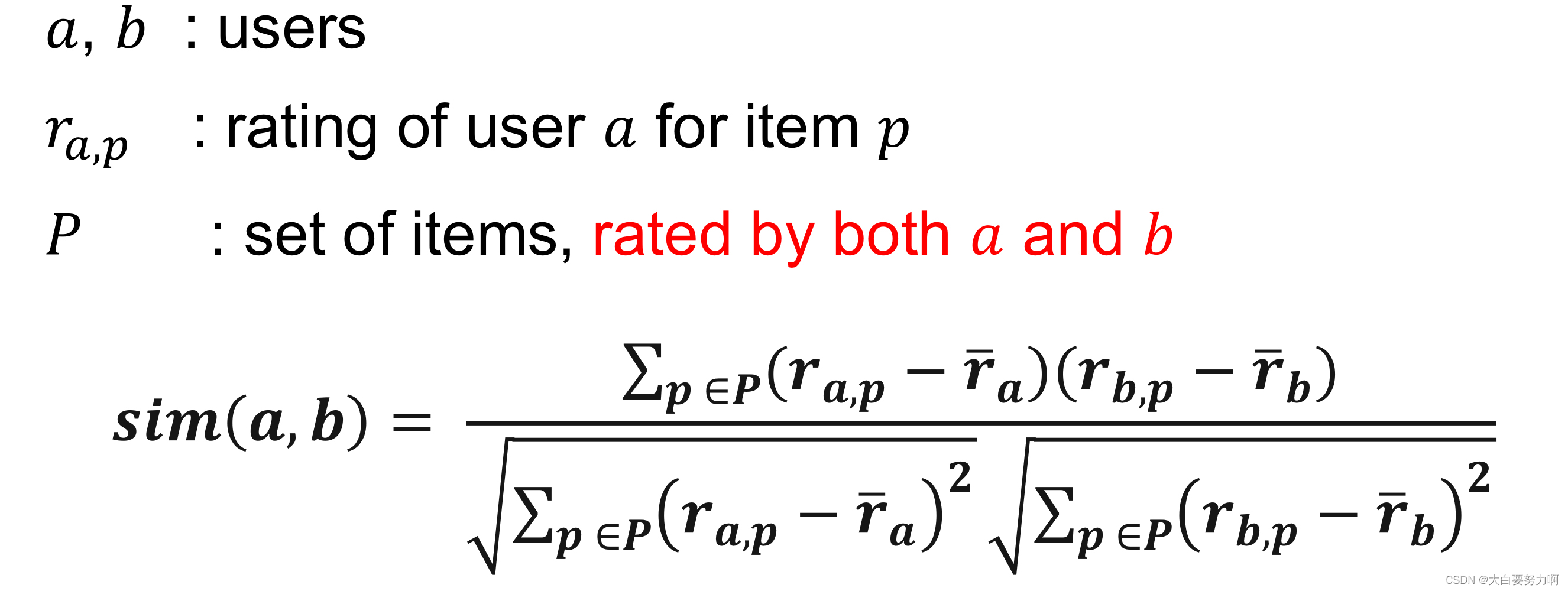

Measuring User Similarity - Pearson Correlation Coefficient

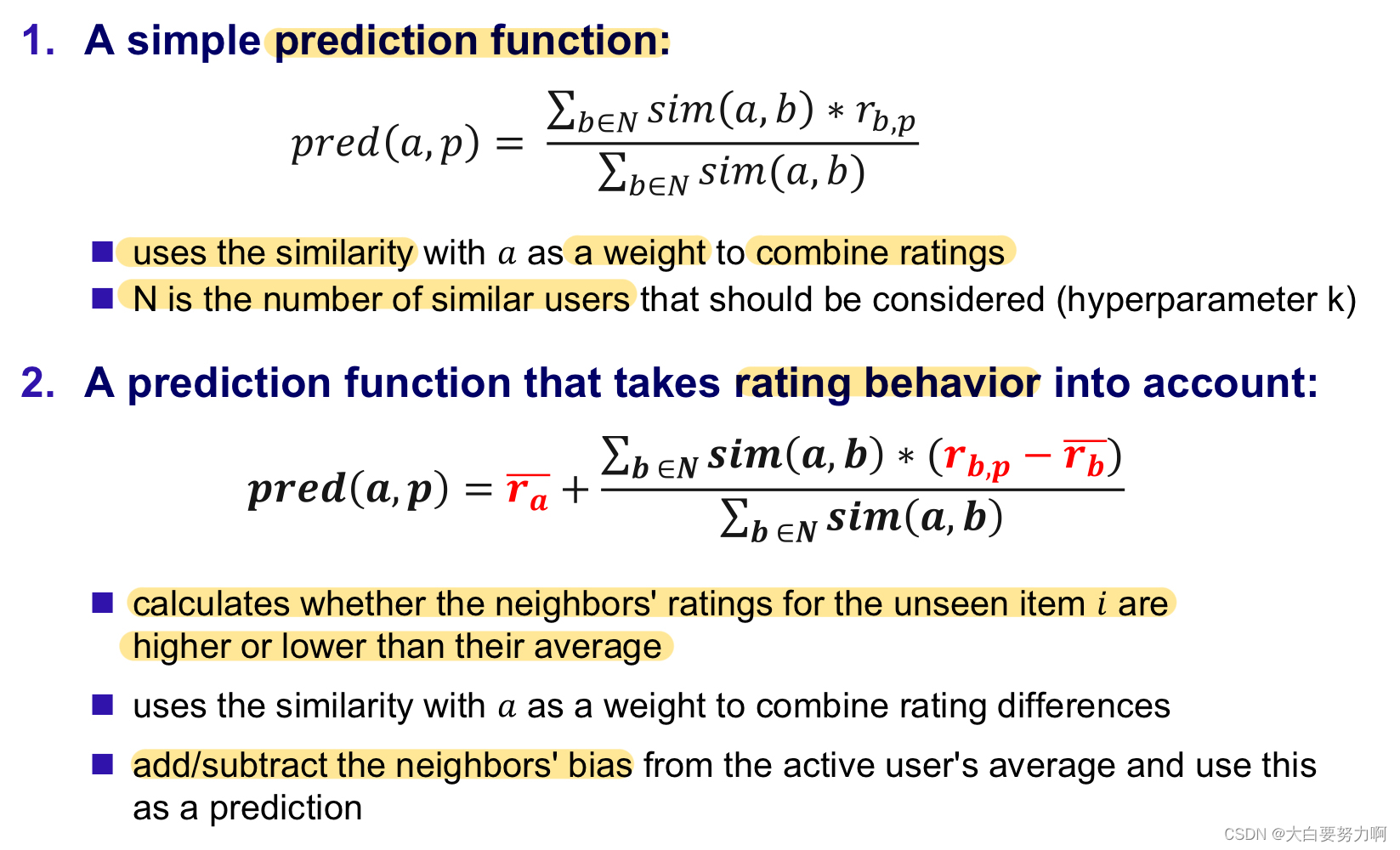

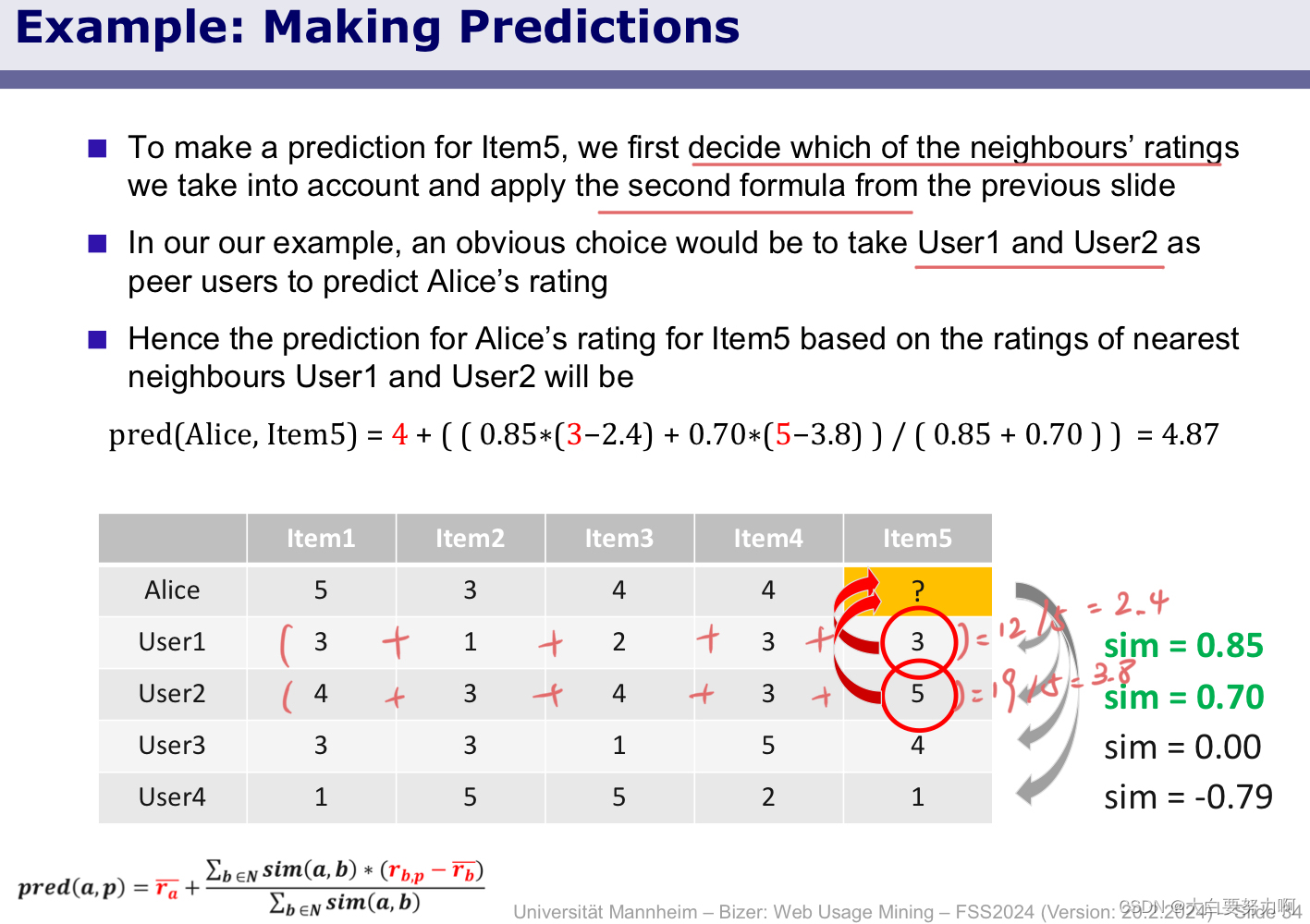

Making Predictions

Improving the Similarity / Predictions Functions

- Neighborhood selection (use similarity threshold)

- Case amplification (give more weight to “very similar neighbors” -> sim(a,b)^2)

- Rating variance (give more weight to items that have a higher variance)

- Number of co-rated items (use “significance weighting” -> linearly reducing the weight when the number of co-rated items is low)

Memory-based approaches

User-based CF is a memory-based. The rating matrix is directly used to find neighbors and make predictions. To predict we compute user similarity online and collect the ratings of the most similar ones. Such a KNN approach is called lazy learning.

This does not scale for large e-commerce sites

Model-based approaches

We build a model offline. And we use the model we computed offline to make predictions online

models are updated / re-trained periodically

Examples

(1) Item-based collaborative filtering

(2) Probabilistic methods

(3) Matrix factorization

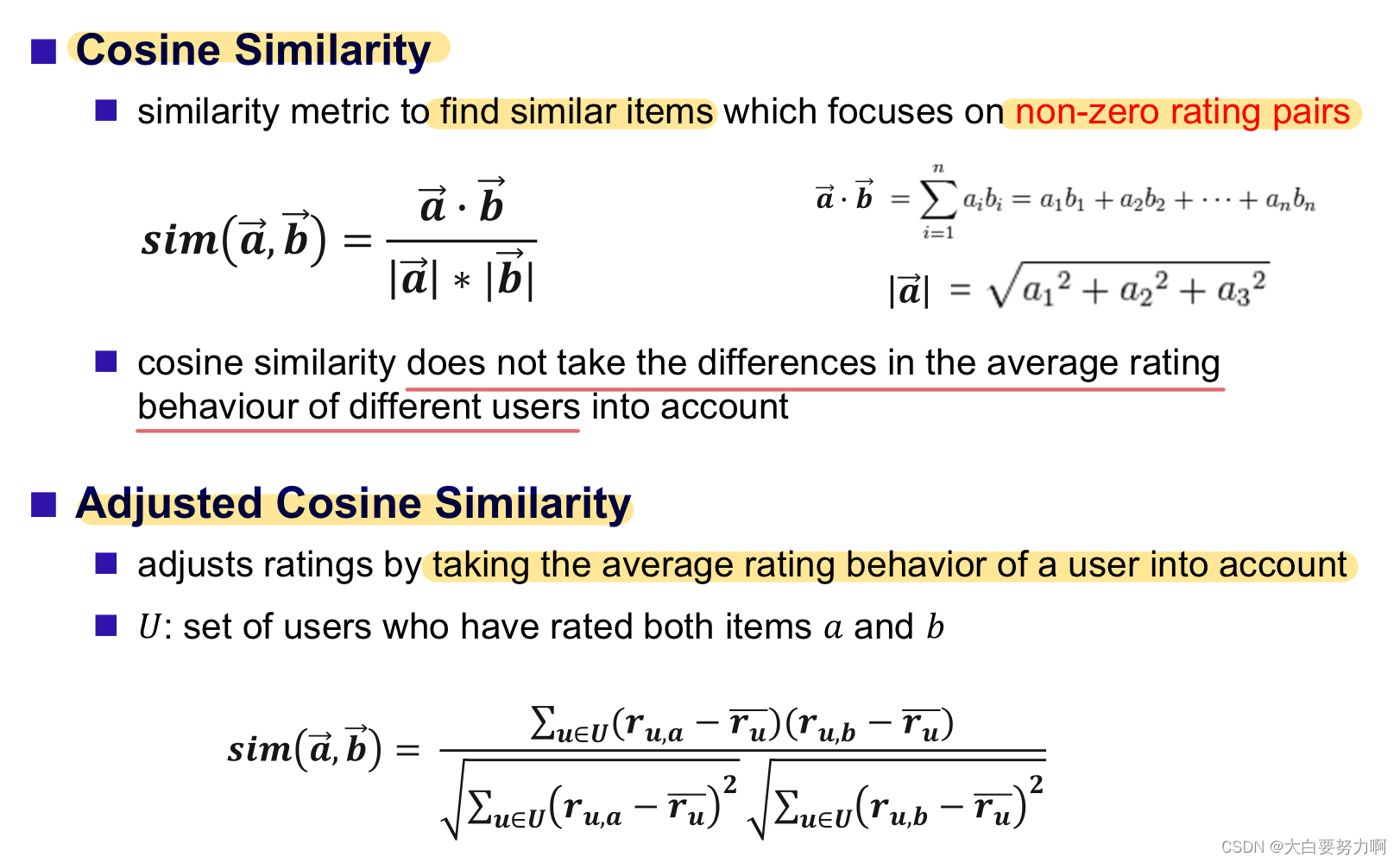

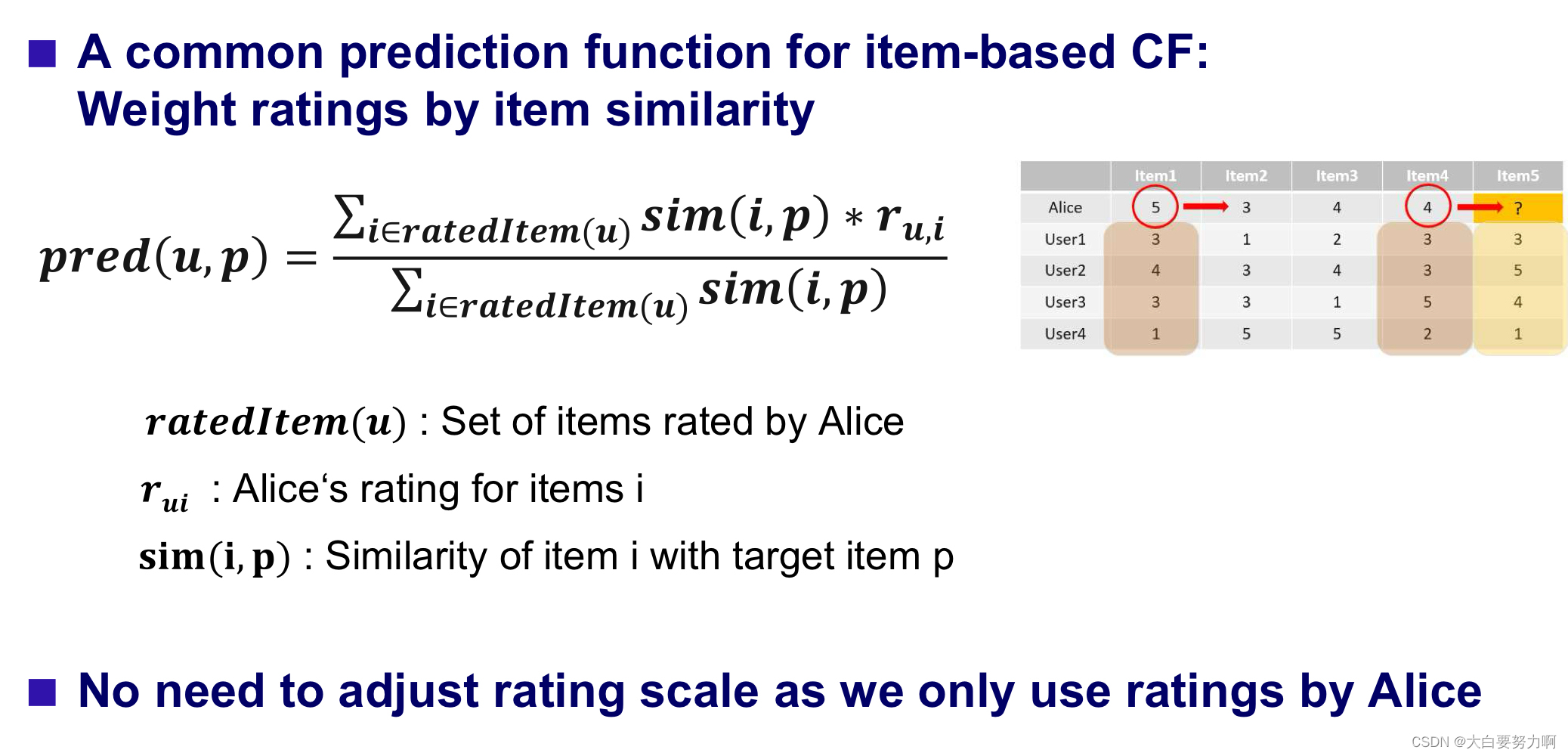

2.4.2.2 Item-Based Collaborative Filtering

Basic idea: Use the similarity between items (and not users) to make predictions

Calculating Item-to-Item Similarity

Adjusted Cosine Similarity = Pearson

Making Prediction

Offline Pre-Calculations for Item-Based Filtering

less items than users => pre-calculate the item similarities and store them in memory

Neighborhood size is typically also limited to a specific size k

Memory requirements: Up to n^2 pair-wise similarities to be memorized. But in practice, the memory requirements are significantly lower as many items have no co-ratings and neighborhood size often limited to k items above minimum similarity threshold

Explicit Ratings

the most precise ratings but users often not willing to rate items

Implicit Ratings

items bought, clicks, page views, time spent on some page,…

Easy to collect but cannot sure whether the user behavior is correctly interpreted (gift)

Most deployed collaborative filtering systems rely on implicit ratings

2.4.2.3 Summary

Collaborative filtering is a technique used in recommendation systems to predict a user’s preference for a particular item based on the preferences of other similar users.

The idea behind collaborative filtering is that if two users have similar preferences for a set of items, they are likely to have similar preferences for other items as well. Similarly, if two items have been rated highly by the same set of users, they are likely to be similar in some way and may be recommended to users who have shown an interest in one of the items.

Collaborative filtering algorithms can be based on either user-based or item-based methods. User-based collaborative filtering recommends items to a user based on the preferences of other users who are similar to them, while item-based collaborative filtering recommends items that are similar to the items a user has previously liked or rated highly.

2.4.3 Content-based Recommendation

While collaborative filtering methods do not use any information about the items, it might be reasonable to exploit such information. e.g., recommend fantasy novels to people who liked fantasy novels in the past

What do we need?

(1)information about the available items (content)

(2) some sort of user profile describing what the user likes (user preferences)

The tasks:

(1) learn user preferences from what she has bought/seen before

(2) recommend items that are “similar” to the user preferences

2.4.3.1 Structured Content and User Profile Representation

Simple recommendation approach: Compute the similarity of an unseen item with the user profile based on keyword overlap (e.g. using Dice)

More sophisticated approach: include other attributes: Genre, Author, Type

2.4.3.2 Textual Content and User Profile Representation

Documents and user profiles can be represented as term-vectors containing, for example, term frequencies.

Similarity of Text Documents

Challenge: term vectors are very sparse, not every word has same importance, long documents have higher chance to overlap with user profile, semantic similarity of words might be relevant

Methods

similarity metric: cosine similarity, as it ignores M00

preprocessing: remove stop words

vector creation:

- Term-Frequency - Inverse Document Frequency (TF-IDF )

- use word embeddings instead of one-hot-encoded term vectors

combined feature creation and similarity calculation :

- Transformer-based methods (e.g. Sentence BERT)

Recap: Term-Frequency - Inverse Document Frequency (TF-IDF)

Evaluate how important a word is to a corpus of documents, give more weight to rare words, less weight to common words(domain-specific stopwords)

Recap: Cosine Similarity and TF-IDF

Recommending Documents

Given a set of documents D already rated by the user, find the k nearest neighbors of a not-yet-seen item i in D (measure similarity: cosine). Then, use ratings from Alice for neighbors k to predict a rating for item i.

Variations: use similarity threshold instead of neighborhood size k; use upper similarity threshold to prevent system from recommending too similar texts.

Pros

- do not require a user community

- no problems with cold-start-problem

Cons

- require to learn a suitable model of user’s preferences based on explicit or implicit feedback (ramp-up phase required for new users - users need to view/rate some items)

- overspecialization (propose “more of the same”, recommendations might be boring as items are too similar)

2.4.4 Model-based Collaborative Filtering

Key idea: Learn a model from training data “offline” and apply it “online” to compute ratings and perform recommendations. It requires less online computation than memory-based KNN approaches. Including: Item-Based Collaborative Filtering(model = pre-calculated similarities to set of neighbors), Probabilistic Recommendation using Naïve Bayes, and Recommendation using Matrix Factorization

2.4.4.1 Probabilistic Recommendation using Naïve Bayes

Class Conditional Independence Assumption

Example

2.4.4.2 Recommendation using Matrix Factorization

Latent Factor Models

Item characteristics and user preferences are represented as numerical factor values in the same space.

Latent Factor models map both users and items to a joint latent factor space of dimensionality f

- Each item i and user u is associated with a factor vector qi , pu

- For a given item i, elements of qi measure the extent to which the item possesses those factors (positive or negative)

- For a given user u, elements of pu measure the extent of interest the user has in items that are high on the corresponding factors (positive or negative)

User-item interactions are modelled as dot product in that space. The dot product, captures the interaction between user u and item i – namely the user’s overall interest in the item’s characteristics

Matrix Factorization

Approach: approximately decompose rating matrix into dot product of user feature and item feature matrices. However, rating matrix is usually sparse.

Stochastic Gradient Descent

Item and User Bias

Item or user specific rating variations are called biases.

Adding Item and User Biases to the Model

Pros:

(1) scale to large use cases

(2) allow flexible modeling of use case

(3) outperform KNN

2.4.5 Hybrid Recommendation

Hybrid: Combinations of various inputs and/or composition of different mechanism in order to overcome problems of single methods.

- Collaborative: “Tell me what’s popular among my peers”

- Content-based: “Show me more of the same what I’ve liked”

- Demographic: :Offer American plugs to people from the US"

2.4.5.1 Parallelized Hybrid Recommendation Design

Output of several recommenders is combined

Least invasive design

Requires some weighting or voting scheme

(1) Static weights: Can be learned using existingratings as supervision

(2) Dynamic weighting: Adjust weights or switch between different recommenders as more information about users and items becomes available (To deal with cold start problem: If too few ratings available for a new item, then use content-based recommendation, otherwise use collaborative filtering)

More expressive aggregation: RandomForest, Neural Net

2.4.5.2 Monolithic Hybrid Recommendation Design

Features/knowledge of different recommendation paradigms are combined in a single recommendation component.

E.g.:

Ratings and user demographics: for example, filter rating by user location.

Ratings and content features: user rated many movies positive which are

comedies -> recommend more comedies (see example below)

Example: Content-boosted Collaborative Filtering

based on content features additional ratings are created

e.g.Alice likes Items1 and 3(unary ratings), and item7 is similar to 1 and 3 by a degree of 0.75. Thus, add rating of 0.75 for Alice/Item7 to rating matrix

Rating matrix becomes less sparse

2.4.6 Evaluating Recommender Systems

Different views on performance. Here we only focus on measuring the degree of performance when compared to ground truth judgements.

2.4.6.1 Popular Evaluation Measures

2.4.6.1.1 Numerical ratings

The gold standard consists of ground-truth judgements of how much a user likes an item- e.g., on a Likert scale between 1 and 5 - Mean Absolute Error (MAE), Root Mean Squared Error (RMSE). RMSE emphasis on larger deviation.

2.4.6.1.2 Categorical ratings

The gold standard consists of ground-truth judgements of whether a user likes or dislikes an item. – e.g., like/dislike or good, neutral, bad - Accuracy, Precision, Recall, F1-Score

2.4.6.1.3 ranked results

useful when items are presented as ranked lists - Average Precision (AP), Precision at rank k (P@k), Normalized Discounted Cumulative Gain (nDCG)

Rank position also matter! Rank metrics take the positions of relevant items in a ranked list into account.

If the gold standard consists of ground-truth judgements of whether an item is relevant (i.e., to be recommended) for a user, i.e., binary relevance annotations, we use Average Precision (AP), P@K, R-Precision

Average Precision considers all recall levels, even at very low ranks. This might be inappropriate since most users will look only at a few top recommendations.

2.4.6.1.4 Normalized Discounted Cumulative Gain (nDCG)

For graded relevance annotations. e.g., from 1 (marginally relevant) to 5 (highly relevant).

Assumptions

(1) Highly relevant items are more useful than marginally relevant items

(2) The higher the relevance of the item, the higher it should appear in the relevance ranking

nDCG takes into account the graded relevancies of items when evaluating the ranking

Maximal DCG score depends on the number of relevant items

In addition to selecting a measure, we need an evaluation setup that ensures a good estimate for unseen data.

2.4.6.2 Evaluation Setup

If dataset is

large - use fixed training/validation/test split

small - optimize hyperparameters using k-fold cross-validation (CV)

2.4.6.3 Model Selection

Overall process: selecting the hyperparameters, training, testing

Select – Train – Evaluate:

- Split the data set into a training set and a test set (e.g.,70% | 30%)

- Model selection: for each hyperparameter configuration -> Cross-validate the model on the training set (e.g., 5-fold CV)

- Choose the best performing hyperparameter configuration

- Train model with best hyperparameters on the whole train set

- Evaluate the trained model on the test set

This ensures that your model is not overfitted to the test set

You get a realistic estimate of its performance on unseen data

2.4.7 Attacks on Recommender Systems

Basic Attack Strategies

- automatically create numerous fake accounts / profiles

- issue high or low ratings for target item

- rate additional filller items to - make fake profile appear in neighborhood of many real-world users and camouflage fake profiles

- for implicit ratings: user crawler that automatically navigates the site

Counter Measures:

- make it difficult to generate fake profiles (e.g. using Captchas)

- use machine-learning methods to discriminate real from fake profiles

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?