正文

tensorflow提供了多种optimizer,典型梯度下降GradientDescent和Adagrad、Momentum、Nestrov、Adam等变种。

典型的学习步骤是梯度下降GradientDescent,optimizer可以自动实现这一过程,通过指定loss来串联所有相关变量形成计算图,然后通过optimizer(learning_rate).minimize(loss)实现自动梯度下降。minimize()也是两步操作的合并,后边会分解。

计算图的概念:一个变量想要被训练到,前提他在计算图中,更直白的说,要在公式或者连锁公式中,如果一个变量和loss没有任何直接以及间接关系,那就不会被训练到。

train的过程其实就是修改计算图中的tf.Variable的过程,可以认为这些所有variable都是权重。

限定train的Variable的方法:

1.根据train是修改计算图中tf.Variable的事实,可以使用tf.constant或者python变量的形式来规避常量被训练。

#demo2

#define variable and error

label = tf.constant(1,dtype = tf.float32)

x = tf.placeholder(dtype = tf.float32)

w1 = tf.Variable(4,dtype=tf.float32)

w2 = tf.Variable(4,dtype=tf.float32)

w3 = tf.constant(4,dtype=tf.float32)

y_predict = w1*x+w2*x+w3*x

#define losses and train

make_up_loss = tf.square(y_predict - label)

optimizer = tf.train.GradientDescentOptimizer(0.01)

train_step = optimizer.minimize(make_up_loss)

init = tf.global_variables_initializer()

with tf.Session() as sess:

sess.run(init)

for _ in range(100):

w1_,w2_,w3_,loss_ = sess.run([w1,w2,w3,make_up_loss],feed_dict={x:1})

print('variable is w1:',w1_,' w2:',w2_,' w3:',w3_, ' and the loss is ',loss_)

sess.run(train_step,{x:1})

默认是计算图中所有tf.Variable,可以通过var_list指定

train_step = optimizer.minimize(make_up_loss,var_list = w2)

3.var_list的取值还可以通过tf.getCollection获得

#demo2.2 another way to collect var_list

label = tf.constant(1,dtype = tf.float32)

x = tf.placeholder(dtype = tf.float32)

w1 = tf.Variable(4,dtype=tf.float32)

with tf.name_scope(name='selected_variable_to_trian'):

w2 = tf.Variable(4,dtype=tf.float32)

w3 = tf.constant(4,dtype=tf.float32)

y_predict = w1*x+w2*x+w3*x

#define losses and train

make_up_loss = (y_predict - label)**3

optimizer = tf.train.GradientDescentOptimizer(0.01)

output_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='selected_variable_to_trian')

train_step = optimizer.minimize(make_up_loss,var_list = output_vars)

init = tf.global_variables_initializer()

with tf.Session() as sess:

sess.run(init)

for _ in range(3000):

w1_,w2_,w3_,loss_ = sess.run([w1,w2,w3,make_up_loss],feed_dict={x:1})

print('variable is w1:',w1_,' w2:',w2_,' w3:',w3_, ' and the loss is ',loss_)

sess.run(train_step,{x:1})

4.TRAINABLE_VARIABLE=False

另一种限制variable被限制的方法,与上边的方法原理相似,都和tf.GraphKeys.TRAINABLE_VARIABLE有关,只不过前一个是从里边挑出指定scope,这个从变量定义时就决定了不往里插入这个变量。

#demo2.4 another way to avoid variable be train

label = tf.constant(1,dtype = tf.float32)

x = tf.placeholder(dtype = tf.float32)

w1 = tf.Variable(4,dtype=tf.float32,trainable=False)

w2 = tf.Variable(4,dtype=tf.float32)

w3 = tf.constant(4,dtype=tf.float32)

y_predict = w1*x+w2*x+w3*x

#define losses and train

make_up_loss = (y_predict - label)**3

optimizer = tf.train.GradientDescentOptimizer(0.01)

output_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES)

train_step = optimizer.minimize(make_up_loss,var_list = output_vars)

init = tf.global_variables_initializer()

with tf.Session() as sess:

sess.run(init)

for _ in range(3000):

w1_,w2_,w3_,loss_ = sess.run([w1,w2,w3,make_up_loss],feed_dict={x:1})

print('variable is w1:',w1_,' w2:',w2_,' w3:',w3_, ' and the loss is ',loss_)

sess.run(train_step,{x:1})

获取所有trainable变量来train,(不指定var_list直接train),是默认参数。其效果等价于指定var_list训练。

minimize()操作分解

其实minimize()操作也只是一个compute_gradients()和apply_gradients()的组合操作.

compute_gradients()用来计算梯度,opt.apply_gradients()用来更新参数。通过多个optimizer可以指定多个具有不同学习率的学习过程,针对不同的var_list分别进行gradient的计算和参数更新,可以用来迁移学习或者处理一些深层网络梯度更新不匹配的问题,暂不赘述。

#demo2.4 combine of ompute_gradients() and apply_gradients()

label = tf.constant(1,dtype = tf.float32)

x = tf.placeholder(dtype = tf.float32)

w1 = tf.Variable(4,dtype=tf.float32,trainable=False)

w2 = tf.Variable(4,dtype=tf.float32)

w3 = tf.Variable(4,dtype=tf.float32)

y_predict = w1*x+w2*x+w3*x

#define losses and train

make_up_loss = (y_predict - label)**3

optimizer = tf.train.GradientDescentOptimizer(0.01)

w2_gradient = optimizer.compute_gradients(loss = make_up_loss, var_list = w2)

train_step = optimizer.apply_gradients(grads_and_vars = (w2_gradient))

init = tf.global_variables_initializer()

with tf.Session() as sess:

sess.run(init)

for _ in range(300):

w1_,w2_,w3_,loss_,w2_gradient_ = sess.run([w1,w2,w3,make_up_loss,w2_gradient],feed_dict={x:1})

print('variable is w1:',w1_,' w2:',w2_,' w3:',w3_, ' and the loss is ',loss_)

print('gradient:',w2_gradient_)

sess.run(train_step,{x:1})

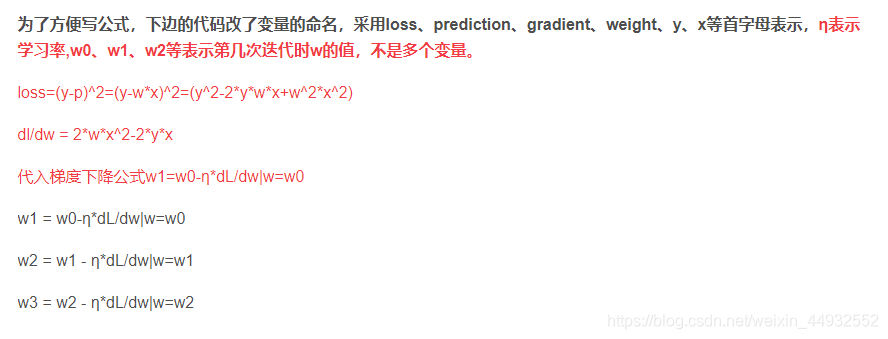

梯度下降的理解:

https://blog.youkuaiyun.com/huqinweI987/article/details/82771521

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?