1001. Use jieba.cut() to segment "Python是最有意思的编程语言", print the result, and convert the returned iterator to a list.

import jieba

text = "Python是最有意思的编程语言"

# jieba.cut() returns a generator; loop over it with for, or wrap it in list() to get every token at once
ls = list(jieba.cut(text))
print(ls)

Result

['Python', '是', '最', '有意思', '的', '编程语言']
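The reason for the list() call can be seen with any generator: it can be consumed only once. The sketch below does not call jieba at all; `fake_cut` is a hypothetical stand-in that splits on whitespace, used only to show the single-pass behaviour.

```python
def fake_cut(text):
    """Hypothetical stand-in for a segmenter: lazily yields whitespace-separated tokens."""
    for token in text.split():
        yield token

tokens = fake_cut("Python is fun")
first_pass = list(tokens)   # consumes every token from the generator
second_pass = list(tokens)  # the generator is already exhausted

print(first_pass)   # ['Python', 'is', 'fun']
print(second_pass)  # []
```

Storing the result in a list, as the exercise does, lets the tokens be reused as many times as needed.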

1002. Use jieba.cut() to segment "今天晚上我吃了意大利面", print the result, and make sure "意大利面" appears as a single word in the output.

import jieba

text = "今天晚上我吃了意大利面"

# Add the word to jieba's dictionary so that it is kept as one token
jieba.add_word('意大利面')

ls = list(jieba.cut(text))
print(ls)

Result

['今天', '晚上', '我', '吃', '了', '意大利面']

1003. Pick a report or speech of your choice and use jieba to find its five most frequent keywords.

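# Text analyzed here: Martin Luther King Jr.'s speech "I Have a Dream" (我有一个梦想)
import jieba

# No encoding is given, so Python uses the platform default;
# pass encoding=... to open() if the file was saved differently
with open('我有一个梦想.txt', 'r') as f:
    txt = f.read()

words = jieba.lcut(txt)

d = {}
for word in words:
    if len(word) == 1:  # skip single-character tokens
        continue
    d[word] = d.get(word, 0) + 1

# Sort (word, count) pairs by count, highest first
items = list(d.items())
items.sort(key=lambda x: x[1], reverse=True)

for i in range(5):
    word, count = items[i]
    print('{:<5}{:>5}'.format(word, count))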

Result

我们 46

自由 27

黑人 18

今天 13

一个 12
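The count-then-sort steps above can also be written with the standard library's collections.Counter. This is a minimal sketch rather than the approach the exercise asks for: `top_keywords` is a hypothetical helper name, and the sample token list stands in for the output of jieba.lcut().

```python
from collections import Counter

def top_keywords(words, n=5):
    """Return the n most frequent tokens, ignoring single-character tokens."""
    counts = Counter(w for w in words if len(w) > 1)
    return counts.most_common(n)

# Stand-in for jieba.lcut(txt); a real run would pass the segmented speech here
sample = ["我们", "自由", "的", "我们", "今天", "自由", "我们"]

for word, count in top_keywords(sample, 2):
    print(word, count)
```

Counter.most_common() already returns the pairs sorted by count, so the explicit list conversion and sort are not needed.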

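1004. Following the example at the end of this chapter, pick a novel you like and rank its characters by how often their names appear.

# Novel chosen here: 《雪中悍刀行》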

import jieba

excludes = {"北凉","没有","一个","这个","不是","就是", \

"自己","北莽","女子","只是","什么","那个", \

"已经","江湖","年轻","知道","还是","不过", \

"老人","这位","有些","问道","他们","说道", \

"但是","可以","殿下","世子","骑军","离阳", \

"那些","以后","如何","这些","然后","时候", \

"看到","还有","天下","一声","当年","这么", \

"轩辕","咱们","你们","将军","觉得","不会", \

"其实","那位","不知","便是","怎么","如今", \

"之后","如果","年轻人","一位","轻轻","身边", \

"能够","藩王","北凉王","如此","那么","一起", \

"一些","点头","更是","不敢","甚至","铁骑", \

"师父","京城","最后","开始","只要","一点", \

"许多","就要","高手","皇帝","因为","两人", \

"突然","一下","所以","大将军","广陵","起身", \

"公子","中原","双手","转头","这种","一直", \

"一名","就算","看着","少年","除了","yin", \

"缓缓","男子","那边","只有","朝廷","喜欢", \

"为何","而是","一次","一样","摇头","坐在", \

"哪怕","我们","那名","说话","幽州","事情", \

"当时","眼神","离开","家伙","境界","这里", \

"宗师"}

with open("雪中悍刀行.txt", "r", encoding="utf-8") as f:
    txt = f.read()

words = jieba.lcut(txt)

counts = {}
for word in words:
    if len(word) == 1:  # skip single-character tokens
        continue
    counts[word] = counts.get(word, 0) + 1

# Remove the excluded words; pop() with a default avoids a KeyError
# when an excluded word never occurs in the text
for word in excludes:
    counts.pop(word, None)

# Sort (word, count) pairs by count, highest first
items = list(counts.items())
items.sort(key=lambda x: x[1], reverse=True)

for i in range(6):
    word, count = items[i]
    print("{0:<5}{1:>5}".format(word, count))

Result

徐凤年 19255

徐骁 1996

王仙芝 1151

菩萨 1145

陈芝豹 1008

曹长卿 1001

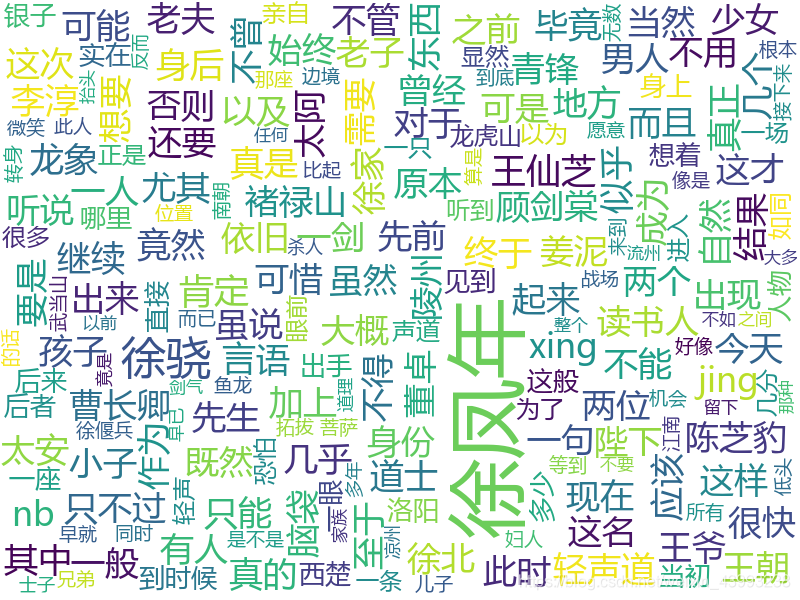
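Names of different lengths, such as 徐凤年 and 徐骁, do not line up when the left column is padded with ordinary ASCII spaces, because Chinese characters are full-width. One possible refinement, sketched below, pads with the full-width ideographic space chr(12288) instead. The helper name `format_row` is hypothetical, and the sample rows are copied from the result above.

```python
def format_row(word, count, width=5):
    """Pad the word with full-width spaces (U+3000) so CJK columns line up."""
    # {2} supplies the fill character and {3} the width of the left column
    return "{0:{2}<{3}}{1:>5}".format(word, count, chr(12288), width)

rows = [("徐凤年", 19255), ("徐骁", 1996), ("王仙芝", 1151)]
for word, count in rows:
    print(format_row(word, count))
```

How well the columns align still depends on the font used by the terminal, so treat this as a sketch rather than a guaranteed fix.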

1005. Continuing from the previous exercise, present its result as a word cloud and try to improve the look of the generated image.

import jieba

from wordcloud import WordCloud

excludes = {"北凉","没有","一个","这个","不是","就是", \

"自己","北莽","女子","只是","什么","那个", \

"已经","江湖","年轻","知道","还是","不过", \

"老人","这位","有些","问道","他们","说道", \

"但是","可以","殿下","世子","骑军","离阳", \

"那些","以后","如何","这些","然后","时候", \

"看到","还有","天下","一声","当年","这么", \

"轩辕","咱们","你们","将军","觉得","不会", \

"其实","那位","不知","便是","怎么","如今", \

"之后","如果","年轻人","一位","轻轻","身边", \

"能够","藩王","北凉王","如此","那么","一起", \

"一些","点头","更是","不敢","甚至","铁骑", \

"师父","京城","最后","开始","只要","一点", \

"许多","就要","高手","皇帝","因为","两人", \

"突然","一下","所以","大将军","广陵","起身", \

"公子","中原","双手","转头","这种","一直", \

"一名","就算","看着","少年","除了","yin", \

"缓缓","男子","那边","只有","朝廷","喜欢", \

"为何","而是","一次","一样","摇头","坐在", \

"哪怕","我们","那名","说话","幽州","事情", \

"当时","眼神","离开","家伙","境界","这里", \

"宗师"}

with open("雪中悍刀行.txt", "r", encoding="utf-8") as f:
    txt = f.read()

words = jieba.lcut(txt)

# WordCloud expects space-separated text, so join the tokens with spaces
newtxt = ' '.join(words)

wordcloud = WordCloud(
    background_color="white",
    width=800,
    height=600,
    font_path="msyh.ttc",  # a font with Chinese glyphs; the path must exist on your system
    max_words=200,
    max_font_size=80,
    stopwords=excludes,    # words left out of the cloud
).generate(newtxt)

wordcloud.to_file("雪中悍刀行基本词云.png")

Result (the generated word cloud is saved to 雪中悍刀行基本词云.png)
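The word cloud code passes every token to WordCloud and relies on the stopwords argument for filtering. An alternative, sketched here under the assumption that you want the same rules as exercise 1004, is to drop single-character tokens and excluded words before joining the text. `prepare_cloud_text` is a hypothetical helper, and the small lists stand in for jieba.lcut(txt) and the excludes set.

```python
def prepare_cloud_text(words, excludes):
    """Join tokens with spaces, skipping single characters and excluded words."""
    kept = [w for w in words if len(w) > 1 and w not in excludes]
    return " ".join(kept)

# Stand-ins for the segmented novel and the excludes set defined earlier
sample_words = ["徐凤年", "的", "北凉", "徐骁", "徐凤年"]
sample_excludes = {"北凉"}

cloud_text = prepare_cloud_text(sample_words, sample_excludes)
print(cloud_text)
```

The returned string could then be passed to .generate() in place of newtxt.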

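This post works through practical uses of the jieba library: segmenting different sentences with jieba.cut() and converting the iterator to a list; keeping a specific term as a single token; finding the top five keywords of a report or speech; and ranking the characters of a novel by name frequency, then presenting the result as a styled word cloud.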
