Understanding Multimodal Contrastive Learning and Incorporating Unpaired Data

Toward Understanding the Feature Learning Process of Self-supervised Contrastive Learning

Text and Code Embeddings by Contrastive Pre-Training

Momentum Contrastive Pre-training for Question Answering

LiT: Zero-Shot Transfer with Locked-image text Tuning

Understanding Dimensional Collapse in Contrastive Self-supervised Learning

Unsupervised Feature Learning via Non-Parametric Instance Discrimination

Exploring Simple Siamese Representation Learning

Contrastive Learning for Prompt-Based Few-Shot Language Learners

UniTRec: A Unified Text-to-Text Transformer and Joint Contrastive Learning Framework for Text-based Recommendation

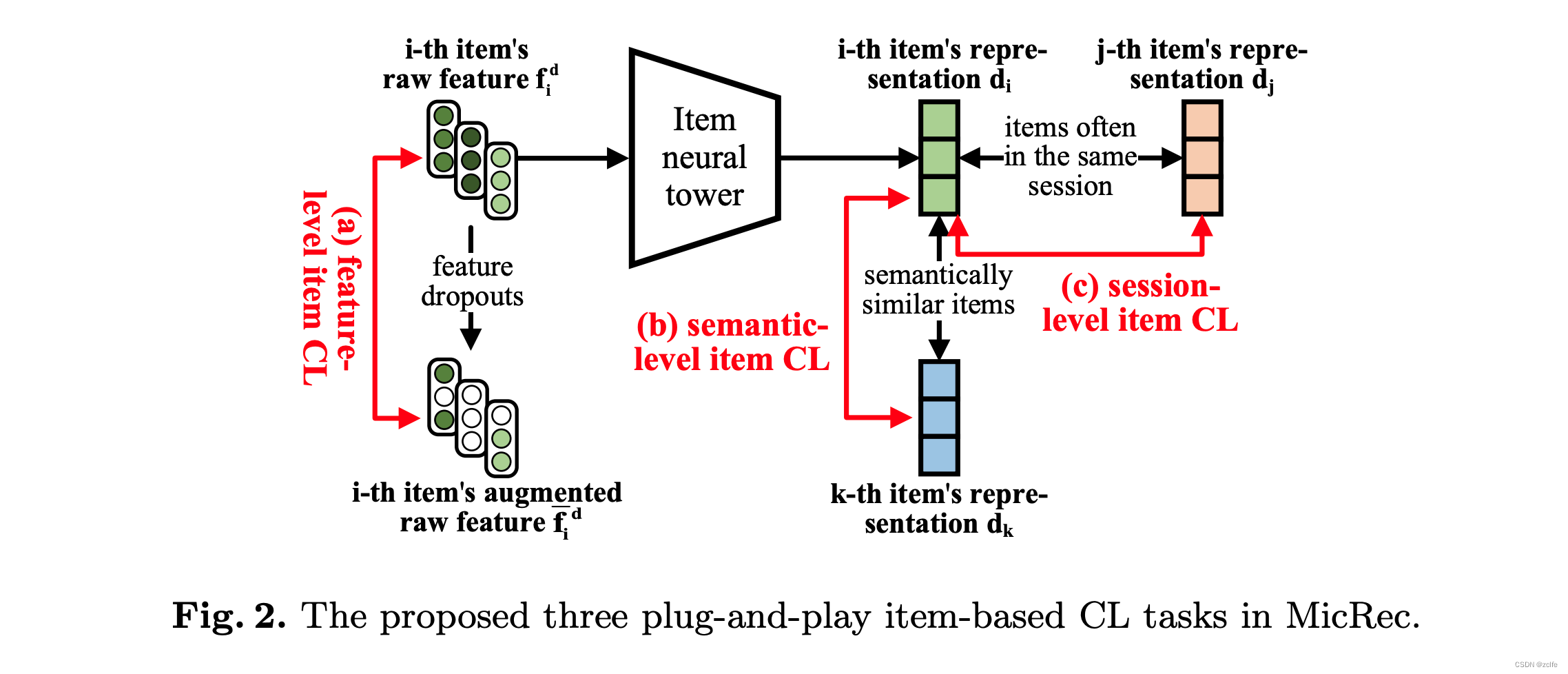

Multi-granularity Item-based Contrastive Recommendation

Contrastive Collaborative Filtering for Cold-Start Item Recommendation

The main idea is to teach the CF module to memorize the co-occurrence collaborative signals during training, and then to rectify the blurry CBCEs of cold-start items according to those memorized signals when the model is applied.

Review-based Multi-intention Contrastive Learning for Recommendation

A Contrastive Sharing Model for Multi-Task Recommendation

Re4: Learning to Re-contrast, Re-attend, Re-construct for Multi-interest Recommendation

Key factors in contrastive learning:

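- L2 normalization: converting embeddings to unit vectors makes training more stable.

- Temperature: it should generally be set to a fairly small value.

- Alignment: pulls positive pairs closer together.

- Uniformity: relies on negative samples to spread embeddings evenly over the hypersphere. If the negatives are too easy to distinguish, uniformity stops working and the model collapses easily.

- Avoiding model collapse: (1) optimize with an asymmetric architecture; (2) optimize via redundancy reduction; (3)

- Stop-gradient is important for preventing collapse.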
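The normalization, temperature, alignment, and uniformity points above can be sketched in a few lines of NumPy. This is a minimal illustration, not any particular paper's implementation: the function names are hypothetical, the default `temperature=0.1` and `t=2.0` are assumed values chosen only for the example, and the loss uses in-batch negatives (row `i` of one view is the positive for row `i` of the other, and every other row is a negative).

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row to unit L2 norm so dot products become cosine similarities."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)

def info_nce(z_a, z_b, temperature=0.1):
    """InfoNCE-style loss with in-batch negatives (illustrative sketch)."""
    z_a, z_b = l2_normalize(z_a), l2_normalize(z_b)
    logits = z_a @ z_b.T / temperature                    # (N, N) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)   # subtract row max for stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_prob))                    # positives sit on the diagonal

def alignment(z_a, z_b):
    """Mean squared distance between positive pairs (lower means better aligned)."""
    z_a, z_b = l2_normalize(z_a), l2_normalize(z_b)
    return np.mean(np.sum((z_a - z_b) ** 2, axis=1))

def uniformity(z, t=2.0):
    """Log of the mean Gaussian potential over distinct pairs (lower means more uniform)."""
    z = l2_normalize(z)
    sq_dist = np.sum((z[:, None, :] - z[None, :, :]) ** 2, axis=-1)
    upper = np.triu_indices(len(z), k=1)                  # each distinct pair once
    return np.log(np.mean(np.exp(-t * sq_dist[upper])))
```

In this sketch a fully collapsed batch (all embeddings identical) gives a uniformity of 0, its worst possible value, and an InfoNCE loss of log N for batch size N, because the positive can no longer be told apart from the negatives.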

Factors that some papers consider key to avoiding collapse:

- SimSiam -> stop-gradient

- In-batch negatives -> negative samples

- BYOL -> momentum encoder
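For reference, the stop-gradient and momentum-encoder mechanisms named above are usually written as follows. The notation here is an assumption made for this note: $f$ is the encoder, $h$ the predictor, $x_1, x_2$ two augmented views of the same input, $\theta$ the online parameters, $\xi$ the target parameters, and $\tau$ the momentum coefficient.

```latex
% SimSiam: symmetric negative cosine similarity with stop-gradient on the target branch
z_i = f(x_i), \qquad p_i = h(z_i), \qquad
D(p, z) = -\frac{p}{\lVert p \rVert_2} \cdot \frac{z}{\lVert z \rVert_2}

\mathcal{L}_{\text{SimSiam}}
  = \tfrac{1}{2}\, D\bigl(p_1, \operatorname{stopgrad}(z_2)\bigr)
  + \tfrac{1}{2}\, D\bigl(p_2, \operatorname{stopgrad}(z_1)\bigr)

% BYOL: the target (momentum) encoder is an exponential moving average of the online encoder
\xi \leftarrow \tau\, \xi + (1 - \tau)\, \theta
```

In both cases no gradient flows through the target branch, so the two sides of the loss are treated asymmetrically even though neither method uses negative samples.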
