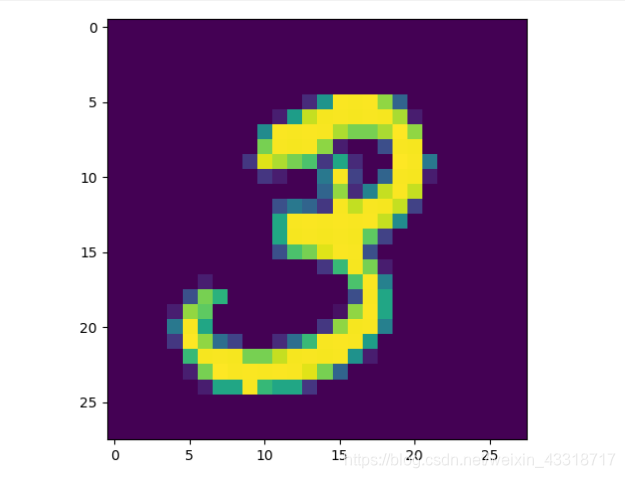

Pick an image from the MNIST dataset and have the machine mimic the human eye in recognizing which handwritten digit it shows.

5.1 Import the images and the dataset

Code:

# 1. Download MNIST with TensorFlow's tutorial helper (TensorFlow 1.x only)
# http://yann.lecun.com/exdb/mnist/
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("F:/shendu/MNIST_data/", one_hot=True)

# Print the training images and their shape
print('Input data:', mnist.train.images)
print('Training set shape:', mnist.train.images.shape)

# Display the second training image as a 28x28 picture
import pylab
im = mnist.train.images[1]
im = im.reshape(-1, 28)
pylab.imshow(im)
pylab.show()

# Check the sizes of the test and validation sets
print('Test set shape:', mnist.test.images.shape)
print('Validation set shape:', mnist.validation.images.shape)

Output:

Extracting F:/shendu/MNIST_data/train-images-idx3-ubyte.gz
Extracting F:/shendu/MNIST_data/train-labels-idx1-ubyte.gz
Extracting F:/shendu/MNIST_data/t10k-images-idx3-ubyte.gz
Extracting F:/shendu/MNIST_data/t10k-labels-idx1-ubyte.gz
Input data: [[0. 0. 0. ... 0. 0. 0.]
 [0. 0. 0. ... 0. 0. 0.]
 [0. 0. 0. ... 0. 0. 0.]
 ...
 [0. 0. 0. ... 0. 0. 0.]
 [0. 0. 0. ... 0. 0. 0.]
 [0. 0. 0. ... 0. 0. 0.]]
Training set shape: (55000, 784)
Test set shape: (10000, 784)
Validation set shape: (5000, 784)
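The shapes above mean each image is stored as a flat row of 784 = 28 × 28 pixel values, and with one_hot=True each label is a 10-element vector containing a single 1. The 55,000 training and 5,000 validation images together form MNIST's original 60,000-image training set; the 10,000 test images are the original test set. The pure-Python sketch below uses a made-up sample rather than real MNIST data to show what im.reshape(-1, 28) and the one-hot encoding do; the helper names to_rows, one_hot and from_one_hot are hypothetical.

```python
IMAGE_SIDE = 28
FLAT_SIZE = IMAGE_SIDE * IMAGE_SIDE  # 784, the second dimension of the printed shapes


def to_rows(flat, width=IMAGE_SIDE):
    """Split a flat pixel list into rows, like im.reshape(-1, 28) in NumPy."""
    if len(flat) % width != 0:
        raise ValueError("length must be a multiple of the row width")
    return [flat[i:i + width] for i in range(0, len(flat), width)]


def one_hot(digit, num_classes=10):
    """Encode a digit as a one-hot vector, the label format used with one_hot=True."""
    return [1.0 if k == digit else 0.0 for k in range(num_classes)]


def from_one_hot(vec):
    """Recover the digit from a one-hot vector (index of the largest entry)."""
    return vec.index(max(vec))


# Hypothetical sample: black background with one lit pixel at row 3, column 5.
flat = [0.0] * FLAT_SIZE
flat[3 * IMAGE_SIDE + 5] = 1.0

rows = to_rows(flat)
print(len(rows), len(rows[0]))   # 28 28
print(rows[3][5])                # 1.0
print(one_hot(7))                # [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
print(from_one_hot(one_hot(7)))  # 7
```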

5.2 Analyze the image characteristics and define the variables

5.3 Build the model

5.4 Train the model and print intermediate results
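For reference, the graph built in 5.3 and the update applied in 5.4 can be written out as below, with x the N × 784 batch of images (N = batch_size = 100), y the N × 10 one-hot labels, η the learning rate (0.01 in the code), and the inner reduce_sum running over the 10 classes. The gradient expressions are the standard closed form for softmax with cross-entropy; in the code, TensorFlow derives them automatically inside minimize(cost), so they are shown only to explain what that call does.

$$
\mathrm{pred} = \operatorname{softmax}(xW + b), \qquad
\operatorname{softmax}(z)_k = \frac{e^{z_k}}{\sum_{j=0}^{9} e^{z_j}}, \quad k = 0, \dots, 9
$$

$$
\mathrm{cost} = \frac{1}{N} \sum_{n=1}^{N} \left( -\sum_{k=0}^{9} y_{n,k} \log \mathrm{pred}_{n,k} \right)
$$

$$
\frac{\partial\,\mathrm{cost}}{\partial W} = \frac{1}{N}\, x^{\top} (\mathrm{pred} - y), \qquad
\frac{\partial\,\mathrm{cost}}{\partial b} = \frac{1}{N} \sum_{n=1}^{N} (\mathrm{pred}_n - y_n)
$$

$$
W \leftarrow W - \eta\, \frac{\partial\,\mathrm{cost}}{\partial W}, \qquad
b \leftarrow b - \eta\, \frac{\partial\,\mathrm{cost}}{\partial b}
$$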

Code:

# 5.2 Analyze the image characteristics and define the variables
import tensorflow as tf  # TensorFlow 1.x (placeholders and sessions)
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("F:/shendu/MNIST_data/", one_hot=True)
import pylab

tf.reset_default_graph()

# Graph inputs
x = tf.placeholder(tf.float32, [None, 784])  # MNIST images: 28*28 = 784 pixels each
y = tf.placeholder(tf.float32, [None, 10])   # digits 0-9 => 10 classes (one-hot)

# 5.3 Build the model
# 1. Learnable parameters (model weights)
W = tf.Variable(tf.random_normal([784, 10]))
b = tf.Variable(tf.zeros([10]))

# 2. Output node: softmax classification
pred = tf.nn.softmax(tf.matmul(x, W) + b)

# 3. Backpropagation: minimize the cross-entropy loss
cost = tf.reduce_mean(-tf.reduce_sum(y * tf.log(pred), axis=1))

# Hyperparameters
learning_rate = 0.01
# Plain gradient-descent optimizer
optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)

# 5.4 Train the model and print intermediate results
training_epochs = 25
batch_size = 100
display_step = 1
saver = tf.train.Saver()
model_path = "F:/shendu/521model.ckpt"

# Start the session
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())  # initialize all variables

    # Training loop
    for epoch in range(training_epochs):
        avg_cost = 0.
        total_batch = int(mnist.train.num_examples / batch_size)
        # Loop over all batches, i.e. one full pass over the training set
        for i in range(total_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            # Run the optimizer (backprop) and the cost op (to get the loss value)
            _, c = sess.run([optimizer, cost], feed_dict={x: batch_xs, y: batch_ys})
            # Accumulate the average loss over the epoch
            avg_cost += c / total_batch
        # Print training progress
        if (epoch + 1) % display_step == 0:
            print("Epoch:", '%04d' % (epoch + 1), "cost=", "{:.9f}".format(avg_cost))

    print("Finished!")

Output:

Extracting F:/shendu/MNIST_data/train-images-idx3-ubyte.gz
Extracting F:/shendu/MNIST_data/train-labels-idx1-ubyte.gz
Extracting F:/shendu/MNIST_data/t10k-images-idx3-ubyte.gz
Extracting F:/shendu/MNIST_data/t10k-labels-idx1-ubyte.gz
Epoch: 0001 cost= 7.533937749
Epoch: 0002 cost= 4.087300664
Epoch: 0003 cost= 2.859837624
Epoch: 0004 cost= 2.265535372
Epoch: 0005 cost= 1.923775465
Epoch: 0006 cost= 1.702600822
Epoch: 0007 cost= 1.547159600
Epoch: 0008 cost= 1.431017190
Epoch: 0009 cost= 1.340611978
Epoch: 0010 cost= 1.267918987
Epoch: 0011 cost= 1.207861313
Epoch: 0012 cost= 1.157394901
Epoch: 0013 cost= 1.114226475
Epoch: 0014 cost= 1.076534821
Epoch: 0015 cost= 1.043827639
Epoch: 0016 cost= 1.014481443
Epoch: 0017 cost= 0.988165269
Epoch: 0018 cost= 0.964408675
Epoch: 0019 cost= 0.942912809
Epoch: 0020 cost= 0.923217485
Epoch: 0021 cost= 0.904975601
Epoch: 0022 cost= 0.888248457
Epoch: 0023 cost= 0.872694091
Epoch: 0024 cost= 0.858185982
Epoch: 0025 cost= 0.844587765
Finished!
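To make the training loop in 5.4 less of a black box, here is a minimal pure-Python sketch of what a plain gradient-descent step does for this softmax model, using the standard gradient (pred - y) / N of the mean cross-entropy with respect to the logits. It is an illustration under stated assumptions, not TensorFlow's implementation: the toy batch (4 features, 3 classes instead of 784 and 10), the helper names forward, cross_entropy and gd_step, and the larger learning rate 0.5 are all hypothetical choices made so the loss drop is visible after a few steps.

```python
import math
import random


def softmax(z):
    """Softmax of one logit vector; subtracting the max avoids overflow in exp."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(X, W, b):
    """pred = softmax(X @ W + b), computed row by row (the pred node in 5.3)."""
    preds = []
    for row in X:
        logits = [sum(row[i] * W[i][k] for i in range(len(row))) + b[k]
                  for k in range(len(b))]
        preds.append(softmax(logits))
    return preds


def cross_entropy(preds, Y):
    """Batch mean of -sum_k y_k * log(pred_k) (the cost node in 5.3)."""
    losses = [-sum(y * math.log(p) for y, p in zip(y_row, p_row))
              for y_row, p_row in zip(Y, preds)]
    return sum(losses) / len(losses)


def gd_step(X, Y, W, b, lr):
    """One plain gradient-descent update of W and b, modifying them in place."""
    preds = forward(X, W, b)
    n = len(X)
    # Gradient of the mean cross-entropy w.r.t. the logits: (pred - y) / N
    delta = [[(p - y) / n for p, y in zip(p_row, y_row)]
             for p_row, y_row in zip(preds, Y)]
    for i in range(len(W)):
        for k in range(len(b)):
            W[i][k] -= lr * sum(X[s][i] * delta[s][k] for s in range(n))
    for k in range(len(b)):
        b[k] -= lr * sum(delta[s][k] for s in range(n))


# Hypothetical toy batch: 3 samples, 4 features, 3 classes.
X = [[1.0, 0.0, 0.0, 1.0],
     [0.0, 1.0, 0.0, 1.0],
     [0.0, 0.0, 1.0, 1.0]]
Y = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]

random.seed(0)
W = [[random.gauss(0.0, 1.0) for _ in range(3)] for _ in range(4)]  # like tf.random_normal
b = [0.0, 0.0, 0.0]                                                 # like tf.zeros

cost_before = cross_entropy(forward(X, W, b), Y)
for step in range(100):
    gd_step(X, Y, W, b, lr=0.5)
cost_after = cross_entropy(forward(X, W, b), Y)
print("cost before: %.4f, after: %.4f" % (cost_before, cost_after))
```

As in the epoch log above, the printed cost should fall as the updates accumulate; with a learning rate this small relative to the curvature of the loss, each step on this convex problem cannot increase it.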

5.5-5.6 Test the model and save it

Code:

# 5.2 Analyze the image characteristics and define the variables
import tensorflow as tf  # TensorFlow 1.x (placeholders and sessions)
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("F:/shendu/MNIST_data/", one_hot=True)
import pylab

tf.reset_default_graph()

# Graph inputs
x = tf.placeholder(tf.float32, [None, 784])  # MNIST images: 28*28 = 784 pixels each
y = tf.placeholder(tf.float32, [None, 10])   # digits 0-9 => 10 classes (one-hot)

# 5.3 Build the model
# 1. Learnable parameters (model weights)
W = tf.Variable(tf.random_normal([784, 10]))
b = tf.Variable(tf.zeros([10]))

# 2. Output node: softmax classification
pred = tf.nn.softmax(tf.matmul(x, W) + b)

# 3. Backpropagation: minimize the cross-entropy loss
cost = tf.reduce_mean(-tf.reduce_sum(y * tf.log(pred), axis=1))

# Hyperparameters
learning_rate = 0.01
# Plain gradient-descent optimizer
optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)

# 5.4 Train the model and print intermediate results
training_epochs = 25
batch_size = 100
display_step = 1
saver = tf.train.Saver()
model_path = "F:/shendu/521model.ckpt"

# Start the session
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())  # initialize all variables

    # Training loop
    for epoch in range(training_epochs):
        avg_cost = 0.
        total_batch = int(mnist.train.num_examples / batch_size)
        # Loop over all batches, i.e. one full pass over the training set
        for i in range(total_batch):
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            # Run the optimizer (backprop) and the cost op (to get the loss value)
            _, c = sess.run([optimizer, cost], feed_dict={x: batch_xs, y: batch_ys})
            # Accumulate the average loss over the epoch
            avg_cost += c / total_batch
        # Print training progress
        if (epoch + 1) % display_step == 0:
            print("Epoch:", '%04d' % (epoch + 1), "cost=", "{:.9f}".format(avg_cost))

    print("Finished!")

    # 5.5 Test the model
    # A prediction is correct when the most probable class matches the label
    correct_prediction = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
    # Accuracy = fraction of correct predictions
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    print("Accuracy:", accuracy.eval({x: mnist.test.images, y: mnist.test.labels}))

    # 5.6 Save the model weights to disk
    save_path = saver.save(sess, model_path)
    print("Model saved in file: %s" % save_path)

Output:

Extracting F:/shendu/MNIST_data/train-images-idx3-ubyte.gz
Extracting F:/shendu/MNIST_data/train-labels-idx1-ubyte.gz
Extracting F:/shendu/MNIST_data/t10k-images-idx3-ubyte.gz
Extracting F:/shendu/MNIST_data/t10k-labels-idx1-ubyte.gz
Epoch: 0001 cost= 8.105970959
Epoch: 0002 cost= 4.475853801
Epoch: 0003 cost= 3.171927071
Epoch: 0004 cost= 2.533820179
Epoch: 0005 cost= 2.156220097
Epoch: 0006 cost= 1.904493352
Epoch: 0007 cost= 1.724163913
Epoch: 0008 cost= 1.588195300
Epoch: 0009 cost= 1.481576429
Epoch: 0010 cost= 1.395297254
Epoch: 0011 cost= 1.323965988
Epoch: 0012 cost= 1.263911754
Epoch: 0013 cost= 1.212177466
Epoch: 0014 cost= 1.167430636
Epoch: 0015 cost= 1.127955468
Epoch: 0016 cost= 1.093035270
Epoch: 0017 cost= 1.061706158
Epoch: 0018 cost= 1.033252213
Epoch: 0019 cost= 1.007543803
Epoch: 0020 cost= 0.983997456
Epoch: 0021 cost= 0.962346797
Epoch: 0022 cost= 0.942473246
Epoch: 0023 cost= 0.923953633
Epoch: 0024 cost= 0.906754157
Epoch: 0025 cost= 0.890760613
Finished!
Accuracy: 0.8306
Model saved in file: F:/shendu/521model.ckpt
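The test in 5.5 counts a prediction as correct when the index of the largest softmax output (tf.argmax(pred, 1)) equals the index of the 1 in the one-hot label (tf.argmax(y, 1)), then averages those booleans, so Accuracy: 0.8306 means 8,306 of the 10,000 test images were classified correctly. A pure-Python sketch of the same computation, with hypothetical 3-class outputs rather than real model results and hypothetical helper names argmax and accuracy, might look like this:

```python
def argmax(values):
    """Index of the largest entry, like tf.argmax(..., 1) applied to one row."""
    return max(range(len(values)), key=lambda k: values[k])


def accuracy(preds, labels):
    """Fraction of rows whose predicted class equals the one-hot label's class."""
    correct = [argmax(p) == argmax(y) for p, y in zip(preds, labels)]  # like tf.equal
    return sum(correct) / len(correct)  # like tf.reduce_mean(tf.cast(..., tf.float32))


# Hypothetical softmax outputs for four samples and their one-hot labels.
preds = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4], [0.6, 0.3, 0.1]]
labels = [[1, 0, 0], [0, 1, 0], [0, 1, 0], [0, 0, 1]]
print(accuracy(preds, labels))  # 0.5
```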

5.7 Load the model

Code:

# 5.2 Analyze the image characteristics and define the variables
# The graph must match the one that was saved, so that saver.restore()
# can map the checkpoint values onto the variables by name.
import tensorflow as tf  # TensorFlow 1.x (placeholders and sessions)
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("F:/shendu/MNIST_data/", one_hot=True)
import pylab

tf.reset_default_graph()

# Graph inputs
x = tf.placeholder(tf.float32, [None, 784])  # MNIST images: 28*28 = 784 pixels each
y = tf.placeholder(tf.float32, [None, 10])   # digits 0-9 => 10 classes (one-hot)

# 5.3 Build the model
# 1. Learnable parameters (model weights)
W = tf.Variable(tf.random_normal([784, 10]))
b = tf.Variable(tf.zeros([10]))

# 2. Output node: softmax classification
pred = tf.nn.softmax(tf.matmul(x, W) + b)

# 3. Backpropagation: minimize the cross-entropy loss
cost = tf.reduce_mean(-tf.reduce_sum(y * tf.log(pred), axis=1))

# Hyperparameters
learning_rate = 0.01
# Plain gradient-descent optimizer
optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)

# 5.4 Training parameters (not used here, kept so the script matches the saved one)
training_epochs = 25
batch_size = 100
display_step = 1
saver = tf.train.Saver()
model_path = "F:/shendu/521model.ckpt"

# 5.7 Load the model
print("Starting 2nd session...")
with tf.Session() as sess:
    # Initialize variables (saver.restore then overwrites them with the saved values)
    sess.run(tf.global_variables_initializer())
    # Restore the model weights from the previously saved checkpoint
    saver.restore(sess, model_path)

    # Test the model
    correct_prediction = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
    # Compute the accuracy
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    print("Accuracy:", accuracy.eval({x: mnist.test.images, y: mnist.test.labels}))

    # Predict two training images: predicted class and softmax probabilities
    output = tf.argmax(pred, 1)
    batch_xs, batch_ys = mnist.train.next_batch(2)
    outputval, predv = sess.run([output, pred], feed_dict={x: batch_xs})
    print(outputval, predv, batch_ys)

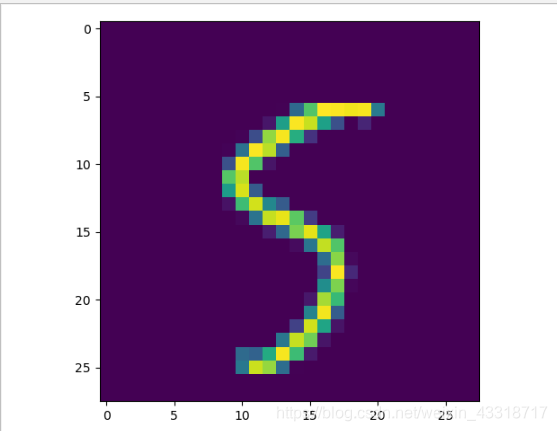

# Show the first of the two images
im = batch_xs[0]
im = im.reshape(-1, 28)
pylab.imshow(im)
pylab.show()

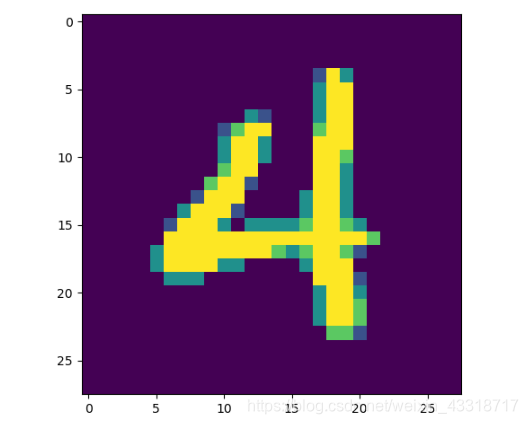

# Show the second of the two images
im = batch_xs[1]
im = im.reshape(-1, 28)
pylab.imshow(im)
pylab.show()

Output:

Extracting F:/shendu/MNIST_data/train-images-idx3-ubyte.gz
Extracting F:/shendu/MNIST_data/train-labels-idx1-ubyte.gz
Extracting F:/shendu/MNIST_data/t10k-images-idx3-ubyte.gz
Extracting F:/shendu/MNIST_data/t10k-labels-idx1-ubyte.gz
Starting 2nd session...
Accuracy: 0.8306
[5 4]
[[1.6561843e-04 5.4651889e-07 2.7361073e-04 3.7989013e-02 1.9851981e-05
  5.9364939e-01 6.0037843e-07 9.7130658e-05 3.6701840e-01 7.8581058e-04]
 [2.3846442e-07 2.8713408e-16 6.3471548e-06 5.8026183e-07 9.9998915e-01
  7.3509878e-09 2.5957190e-06 3.7521692e-09 3.1330194e-07 7.7429326e-07]]
[[0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
 [0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]]

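(The Accuracy line is the restored model's accuracy on the test set, the same 0.8306 as before saving. Of the arrays below it, the first is the predicted digits, the second is the model's raw output, i.e. the softmax probability of each of the 10 classes, and the third is the labels, the one-hot encodings of 5 and 4. Both predictions are correct, although the model is much less certain about the first image: it gives 5 a probability of about 0.59 and 8 about 0.37.)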
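As a quick check of the explanation above, the sketch below copies the printed predv and batch_ys values into plain Python lists and decodes them with a hypothetical argmax helper standing in for tf.argmax along axis 1. It also lists the two most probable classes per image, which makes the 5-versus-8 uncertainty on the first image visible.

```python
# predv and batch_ys as printed in 5.7, copied from the output above.
predv = [
    [1.6561843e-04, 5.4651889e-07, 2.7361073e-04, 3.7989013e-02, 1.9851981e-05,
     5.9364939e-01, 6.0037843e-07, 9.7130658e-05, 3.6701840e-01, 7.8581058e-04],
    [2.3846442e-07, 2.8713408e-16, 6.3471548e-06, 5.8026183e-07, 9.9998915e-01,
     7.3509878e-09, 2.5957190e-06, 3.7521692e-09, 3.1330194e-07, 7.7429326e-07],
]
batch_ys = [
    [0, 0, 0, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 0, 0, 0, 0, 0],
]


def argmax(values):
    """Index of the largest entry in one row."""
    return max(range(len(values)), key=lambda k: values[k])


predicted = [argmax(row) for row in predv]  # should match outputval
actual = [argmax(row) for row in batch_ys]  # decodes the one-hot labels
print(predicted, actual)  # [5, 4] [5, 4]

for row in predv:
    # The two most probable classes and their (rounded) probabilities
    top_two = sorted(range(10), key=lambda k: row[k], reverse=True)[:2]
    print(top_two, [round(row[k], 3) for k in top_two])
```

Each probability row sums to 1 up to the rounding of the float32 printout, as expected of a softmax output.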

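This post walks through handwritten digit recognition on the MNIST dataset: importing the images and the dataset, analyzing the image characteristics and defining the variables, building, training, testing, and saving the model, and finally loading the saved model so that the machine can mimic the human eye in telling handwritten digits apart.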
