Automatically Recognizing Any Object in Surveillance Images

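The system analyzes the frames captured by a surveillance camera. (Commercial uses: face-based attendance check-in; real-time monitoring of outsiders entering and leaving; recognizing unusual objects; real-time monitoring of every object other than a designated one, and so on.)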

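A face model is trained for every person who needs to be enrolled. The recognition rate has been optimized so that the algorithm can still identify people who cover their faces to evade surveillance; the author states that its recognition rate on occluded faces is higher than that of comparable algorithms. An ordinary off-the-shelf camera is sufficient as the capture device.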
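The post does not say which face model or matching rule it uses. As a minimal sketch only, assuming some model has already turned each face crop into a fixed-length feature vector, enrollment and recognition can be reduced to storing one reference vector per person and picking the closest one by cosine similarity, rejecting the match when it falls below a threshold. The helper names `enroll` and `identify` and the 0.6 threshold are hypothetical, and the sketch does nothing special for occluded faces.

```python
import math


def _normalize(vec):
    # Scale a feature vector to unit length so that a dot product equals cosine similarity
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("zero-length feature vector")
    return [x / norm for x in vec]


def enroll(gallery, name, feature):
    # Hypothetical enrollment step: store one normalized reference vector per person
    gallery[name] = _normalize(feature)


def identify(gallery, feature, threshold=0.6):
    # Return (name, similarity) of the best match, or (None, similarity) if below threshold
    query = _normalize(feature)
    best_name, best_sim = None, -1.0
    for name, ref in gallery.items():
        sim = sum(a * b for a, b in zip(query, ref))
        if sim > best_sim:
            best_name, best_sim = name, sim
    if best_sim < threshold:
        return None, best_sim
    return best_name, best_sim


if __name__ == "__main__":
    gallery = {}
    enroll(gallery, "alice", [1.0, 0.0, 0.0])
    enroll(gallery, "bob", [0.0, 1.0, 0.0])
    print(identify(gallery, [0.9, 0.1, 0.0]))  # best match: alice
    print(identify(gallery, [0.0, 0.0, 1.0]))  # no match above the threshold
```

A real system would replace the toy vectors with embeddings from a trained face model and tune the threshold on its own data.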

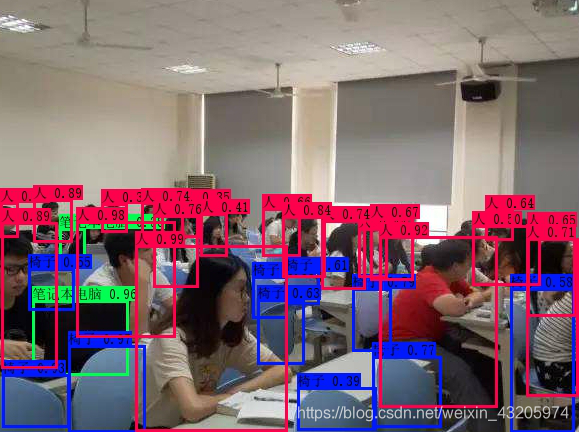

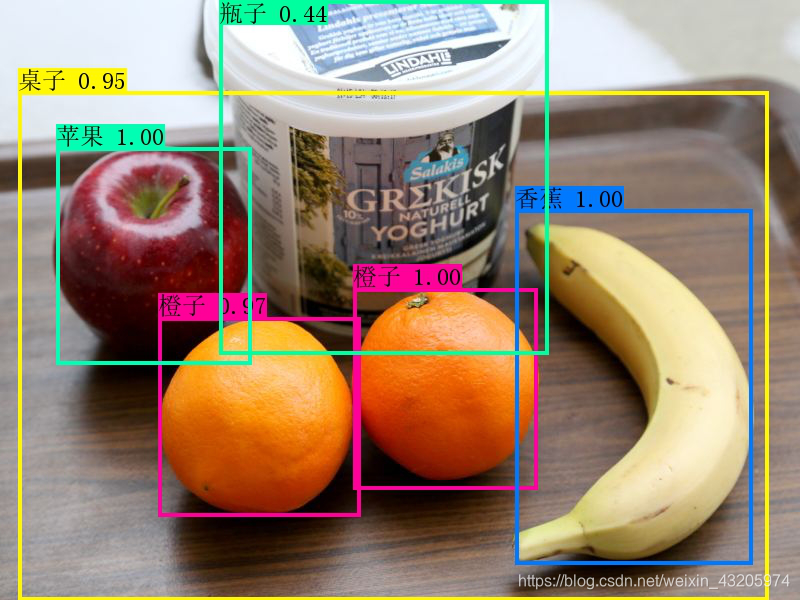

Product demonstration

Code section:


Client

```python
import argparse
import socket
import struct
import threading
import time

import cv2
import numpy as np
from PIL import Image

from yolo import YOLO  # YOLO wrapper class from the project's yolo.py

# Header shared with the server: (length or frame-rate code, width, height).
# "<lhh" is always 8 bytes (little-endian 4-byte long + two 2-byte shorts). The original
# native "lhh" is 8 bytes only on Windows (12 on 64-bit Linux), yet recv(8) assumed 8.
# Change this if the server packs its header differently.
HEADER = struct.Struct("<lhh")


class Camera_Connect_Object:

    def __init__(self, D_addr_port=("", 8880)):
        self.resolution = [640, 480]
        self.addr_port = tuple(D_addr_port)  # immutable default; the old list default was mutated
        self.src = 888 + 15  # frame rate agreed by both sides (15), offset by the check value 888
        self.interval = 0    # time interval between displayed frames
        self.img_fps = 15    # frames transmitted per second

    def Set_socket(self):
        self.client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        self.client.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)

    def Socket_Connect(self):
        self.Set_socket()
        self.client.connect(self.addr_port)
        print("IP is %s:%d" % (self.addr_port[0], self.addr_port[1]))

    def recv_exact(self, size):
        # recv() may return fewer bytes than requested, so loop until `size` bytes arrive
        buf = b""
        while len(buf) < size:
            chunk = self.client.recv(size - len(buf))
            if not chunk:
                raise ConnectionError("server closed the connection")
            buf += chunk
        return buf

    def RT_Image(self, yolo):
        self.name = self.addr_port[0] + " Camera"
        # Send the frame-rate code and the requested resolution in the agreed format
        self.client.sendall(HEADER.pack(self.src, self.resolution[0], self.resolution[1]))
        prev_time = time.time()
        try:
            while True:
                # First header field = total length of the next encoded image
                buf_size = HEADER.unpack(self.recv_exact(HEADER.size))[0]
                if buf_size:
                    try:
                        self.buf = self.recv_exact(buf_size)            # read the whole image
                        data = np.frombuffer(self.buf, dtype=np.uint8)  # bytes -> uint8 array
                        self.image = cv2.imdecode(data, cv2.IMREAD_COLOR)  # decode to BGR
                        # PIL and YOLO expect RGB; OpenCV works in BGR
                        imag = Image.fromarray(cv2.cvtColor(self.image, cv2.COLOR_BGR2RGB))
                        imag = yolo.detect_image(imag)
                        # np.array (not asarray) gives a writable copy that cv2 can draw on
                        result = cv2.cvtColor(np.array(imag), cv2.COLOR_RGB2BGR)
                        now = time.time()
                        fps = "FPS: %.1f" % (1.0 / max(now - prev_time, 1e-6))
                        prev_time = now
                        # putText needs a str; the original text=5 raised and was silently swallowed
                        cv2.putText(result, text=fps, org=(3, 15), fontFace=cv2.FONT_HERSHEY_SIMPLEX,
                                    fontScale=0.50, color=(255, 0, 0), thickness=2)
                        outVideo = cv2.resize(result, (640, 480), interpolation=cv2.INTER_CUBIC)
                        cv2.imshow(self.name, outVideo)  # show the ndarray, not the PIL image
                    except Exception as e:  # skip a bad frame but report why
                        print("frame skipped:", e)
                if cv2.waitKey(10) == 27:  # refresh every 10 ms; press ESC (27) to quit
                    break
        finally:
            self.client.close()
            cv2.destroyAllWindows()
            yolo.close_session()  # close once after the loop, not after every frame

    def Get_Data(self, interval):
        # Pass the method itself plus its argument; the original called RT_Image() right away
        # and handed its return value (None) to Thread as the target.
        # Note: some OpenCV GUI backends (e.g. macOS) need imshow on the main thread.
        yolo = YOLO(**vars(FLAGS))
        showThread = threading.Thread(target=self.RT_Image, args=(yolo,))
        showThread.start()
        showThread.join()


if __name__ == '__main__':
    # class YOLO defines the default value, so suppress any default here
    parser = argparse.ArgumentParser(argument_default=argparse.SUPPRESS)
    parser.add_argument('--model', type=str,
                        help='path to model weight file, default ' + YOLO.get_defaults("model_path"))
    parser.add_argument('--anchors', type=str,
                        help='path to anchor definitions, default ' + YOLO.get_defaults("anchors_path"))
    parser.add_argument('--classes', type=str,
                        help='path to class definitions, default ' + YOLO.get_defaults("classes_path"))
    parser.add_argument('--gpu_num', type=int,
                        help='Number of GPU to use, default ' + str(YOLO.get_defaults("gpu_num")))
    parser.add_argument('--image', default=False, action="store_true",
                        help='Image detection mode, will ignore all positional arguments')
    parser.add_argument('--webcam', type=int,
                        help='Webcam index, default ' + str(YOLO.get_defaults("webcam")))
    # Command line arguments for video detection mode
    parser.add_argument("--input", nargs='?', type=str, required=False, default='./path2your_video',
                        help="Video input path")
    parser.add_argument("--output", nargs='?', type=str, default="",
                        help="[Optional] Video output path")
    FLAGS = parser.parse_args()
    print(FLAGS)

    if FLAGS.image:
        # Image detection mode, disregard any remaining command line arguments
        print("Image detection mode")
        if "input" in FLAGS:
            print(" Ignoring remaining command line arguments: " + FLAGS.input + "," + FLAGS.output)

    camera = Camera_Connect_Object(("192.168.1.100", 8880))  # address of the camera server
    camera.Socket_Connect()
    camera.Get_Data(camera.interval)
```
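The server side is not included in the post. Read from the client alone, the protocol appears to be: the client sends one header `(888 + fps, width, height)`, then the server repeatedly sends a header whose first field is the length of one encoded image, followed by exactly that many bytes. The sketch below assumes that reading and the 8-byte `<lhh` layout; `send_frame`, `recv_frame` and `decode_request` are hypothetical helpers, and `socket.socketpair()` stands in for the real TCP connection. It mainly shows why the receiver has to loop until the full length has arrived instead of trusting a single `recv()`.

```python
import socket
import struct

HEADER = struct.Struct("<lhh")  # assumed layout: 4-byte length/code + two 2-byte ints = 8 bytes
CHECK_VALUE = 888               # offset the client adds to the frame rate


def recv_exact(sock, size):
    # Loop because recv() may return fewer bytes than requested
    buf = bytearray()
    while len(buf) < size:
        chunk = sock.recv(size - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return bytes(buf)


def decode_request(header_bytes):
    # Hypothetical server-side check: recover fps and resolution from the client's first header
    code, width, height = HEADER.unpack(header_bytes)
    fps = code - CHECK_VALUE
    if fps <= 0:
        raise ValueError("bad check value")
    return fps, width, height


def send_frame(sock, payload, width, height):
    # One frame = header carrying the payload length, then the encoded image bytes
    sock.sendall(HEADER.pack(len(payload), width, height) + payload)


def recv_frame(sock):
    # Read the fixed-size header first, then exactly `length` payload bytes
    length, width, height = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length), width, height


if __name__ == "__main__":
    client, server = socket.socketpair()
    client.sendall(HEADER.pack(CHECK_VALUE + 15, 640, 480))
    print(decode_request(recv_exact(server, HEADER.size)))  # (15, 640, 480)
    send_frame(server, b"\xff\xd8fake-jpeg\xff\xd9", 640, 480)
    print(recv_frame(client))
    client.close()
    server.close()
```

Using an explicit byte-order prefix keeps the header size identical on every platform, which the native `"lhh"` format does not guarantee.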

PyQt5 display interface

```python
# -*- coding: utf-8 -*-

# Form implementation generated from reading ui file 'jisuanji.ui'
#
# Created by: PyQt5 UI code generator 5.5.1
#
# WARNING! All changes made in this file will be lost!

import sys

import cv2
from PyQt5 import QtCore, QtGui, QtWidgets
from PyQt5.QtCore import Qt, QTimer
from PyQt5.QtGui import QImage, QKeySequence, QPalette
from PyQt5.QtWidgets import QApplication, QMainWindow, QShortcut

import global_image  # project module holding the "take a snapshot" flag (not shown in the post)
import labelgb_rc    # compiled Qt resources; at the end of the file a script never reached it

global_image._init()


class Ui_MainWindow(QMainWindow):

    def __init__(self):
        super().__init__()
        self.desktop = QApplication.desktop()
        self.screenRect = self.desktop.screenGeometry()
        # Renamed from height/width so the QWidget.height()/width() methods are not shadowed
        self.screen_height = self.screenRect.height()
        self.screen_width = self.screenRect.width()
        # 4:3 video area, about 50/92 of the screen width, rounded down to a multiple of 4
        self.video_width = int(self.screen_width * 50 / 92 / 4) * 4
        self.video_height = int(self.screen_width * 50 / 92 / 4) * 3
        self.vidofram = 'Video size: ' + str(self.video_width) + ' x ' + str(self.video_height)
        self.timercount = 0
        self.setupUi(self)
        QShortcut(QKeySequence("Escape"), self, self.close)  # ESC closes the window
        self.showFullScreen()
        # Hand the thread the label's exact size (the original recomputed it with 91 instead of 92,
        # producing frames larger than the fixed-size label)
        self.videothread = VideoThread(self.video_width, self.video_height)
        self.videothread.update_image_singal.connect(self.slot_init)
        self.videothread.start()

    def setupUi(self, MainWindow):
        MainWindow.setObjectName("MainWindow")
        MainWindow.resize(self.screen_width, self.screen_height)
        self.Mainwidget = QtWidgets.QWidget(MainWindow)
        self.Mainwidget.setStyleSheet("#Mainwidget{border-image: url(./background8.png);}")
        self.Mainwidget.setObjectName("Mainwidget")
        self.verticalLayout = QtWidgets.QVBoxLayout(self.Mainwidget)
        self.verticalLayout.setContentsMargins(0, -1, 0, 0)
        self.verticalLayout.setObjectName("verticalLayout")

        # Top row: spacer | live video | spacer | snapshot | spacer
        self.horizontalLayout_up = QtWidgets.QHBoxLayout()
        self.horizontalLayout_up.setContentsMargins(-1, -1, 0, 0)
        self.horizontalLayout_up.setSpacing(0)
        self.horizontalLayout_up.setObjectName("horizontalLayout_up")
        spacerItem = QtWidgets.QSpacerItem(40, 20, QtWidgets.QSizePolicy.Expanding, QtWidgets.QSizePolicy.Minimum)
        self.horizontalLayout_up.addItem(spacerItem)

        self.video_widget = QtWidgets.QWidget(self.Mainwidget)
        self.video_widget.setObjectName("video_widget")
        self.verticalLayout_video = QtWidgets.QVBoxLayout(self.video_widget)
        self.verticalLayout_video.setObjectName("verticalLayout_video")
        self.video = QtWidgets.QLabel(self.video_widget)  # live camera frames
        self.video.setObjectName("video")
        self.verticalLayout_video.addWidget(self.video)
        self.video.setMinimumSize(QtCore.QSize(self.video_width, self.video_height))
        self.video.setMaximumSize(QtCore.QSize(self.video_width, self.video_height))
        self.verticalLayout_video.setStretch(0, 1)
        self.verticalLayout_video.setContentsMargins(400, 94, 4, 0)
        self.horizontalLayout_up.addWidget(self.video_widget)
        spacerItem1 = QtWidgets.QSpacerItem(40, 20, QtWidgets.QSizePolicy.Expanding, QtWidgets.QSizePolicy.Minimum)
        self.horizontalLayout_up.addItem(spacerItem1)

        self.message_widget = QtWidgets.QWidget(self.Mainwidget)
        self.message_widget.setObjectName("message_widget")
        self.horizontalLayout_4 = QtWidgets.QHBoxLayout(self.message_widget)
        self.horizontalLayout_4.setObjectName("horizontalLayout_4")
        self.picture = QtWidgets.QLabel(self.message_widget)  # snapshot taken on left click
        self.picture.setMinimumSize(QtCore.QSize(320, 240))
        self.picture.setMaximumSize(QtCore.QSize(320, 240))
        self.picture.setObjectName("picture")
        self.picture.setStyleSheet("border-style:solid ;border-width: 1px 1px 1px 1px;border-color :white ;")
        self.picture.setScaledContents(True)  # scale the full frame down into 320 x 240
        self.horizontalLayout_4.addWidget(self.picture)
        self.horizontalLayout_4.setContentsMargins(0, 180, 50, 100)
        self.horizontalLayout_4.setSpacing(0)
        self.horizontalLayout_up.addWidget(self.message_widget)
        spacerItem2 = QtWidgets.QSpacerItem(40, 20, QtWidgets.QSizePolicy.Expanding, QtWidgets.QSizePolicy.Minimum)
        self.horizontalLayout_up.addItem(spacerItem2)
        self.horizontalLayout_up.setStretch(0, 1)
        self.horizontalLayout_up.setStretch(1, 61)
        self.horizontalLayout_up.setStretch(2, 1)
        self.horizontalLayout_up.setStretch(3, 30)
        self.horizontalLayout_up.setStretch(4, 2)
        self.verticalLayout.addLayout(self.horizontalLayout_up)

        # Bottom row (currently empty)
        self.horizontalLayout_down = QtWidgets.QHBoxLayout()
        self.horizontalLayout_down.setContentsMargins(-1, -1, -1, 0)
        self.horizontalLayout_down.setObjectName("horizontalLayout_down")
        self.verticalLayout.addLayout(self.horizontalLayout_down)
        self.verticalLayout.setStretch(0, 40)
        self.verticalLayout.setStretch(1, 1)
        MainWindow.setCentralWidget(self.Mainwidget)

        pe = QPalette()
        pe.setColor(QPalette.WindowText, Qt.white)  # white text
        pegray = QPalette()
        pegray.setColor(QPalette.WindowText, Qt.gray)  # gray text
        font_viedo = QtGui.QFont()
        font_viedo.setFamily("Microsoft YaHei")
        font_viedo.setBold(True)
        font_viedo.setPointSize(15)
        self.video.setWordWrap(True)
        self.video.setPalette(pegray)
        self.video.setFont(font_viedo)
        self.video.setStyleSheet("border-style:solid ;border-width: 1px 1px 1px 1px;border-color :white ;")
        self.picture.setPalette(pegray)
        self.picture.setFont(font_viedo)

        self.retranslateUi(MainWindow)
        QtCore.QMetaObject.connectSlotsByName(MainWindow)
        self.timer = QTimer(self)                 # drives the "loading" animation
        self.timer.timeout.connect(self.operate)  # call operate() on every timeout
        self.timer.start(1000)                    # fire every 1000 ms

    def retranslateUi(self, MainWindow):
        _translate = QtCore.QCoreApplication.translate
        MainWindow.setWindowTitle(_translate("MainWindow", "MainWindow"))
        self.video.setText(_translate("MainWindow", "\n\n\n\n\n\n\n\n\n\t\t\t\t Network loading"))
        self.picture.setText(_translate("MainWindow", "Picture loading"))

    def operate(self):
        # Cycle through four "loading" captions, one per timer tick
        dot = ['Network loading .', 'Network loading . .', 'Network loading . . .', 'Network loading']
        self.video.setText("\n\n\n\n\n\n\n\n\n\t\t\t\t " + dot[self.timercount])
        self.timercount = (self.timercount + 1) % 4

    def mousePressEvent(self, event):
        if event.button() == Qt.LeftButton:
            print("Left mouse button clicked")
            global_image.Set_image_flag()

    def slot_init(self, showImage, flag0):
        if not flag0:
            QtWidgets.QMessageBox.warning(self, "Warning",
                                          "Please check that the camera is correctly connected to the computer")
            QApplication.quit()
        else:
            self.timer.stop()  # first frame arrived: stop the loading animation
            self.video.setPixmap(QtGui.QPixmap.fromImage(showImage))
            if global_image.Get_image_flag():  # left button was clicked: take a snapshot
                self.picture.setPixmap(QtGui.QPixmap.fromImage(showImage))

    def closeEvent(self, event):
        self.videothread.stop()  # stop the capture loop before the window goes away
        super().closeEvent(event)


####### Video thread ########

class VideoThread(QtCore.QThread):
    update_image_singal = QtCore.pyqtSignal(QImage, bool)

    def __init__(self, video_width, video_height, parent=None):
        super(VideoThread, self).__init__(parent)
        self.video_width = video_width
        self.video_height = video_height
        self.running = True
        self.cap = cv2.VideoCapture()  # create a VideoCapture object

    def run(self):
        self.flag0 = self.cap.open(0)  # open the default camera (index 0)
        if not self.flag0:
            self.update_image_singal.emit(QImage(), False)  # tell the GUI the camera is unavailable
            return
        while self.running:
            flag1, self.image = self.cap.read()
            if not flag1:
                self.msleep(10)  # no frame yet; avoid a busy loop
                continue
            show = cv2.resize(self.image, (self.video_width, self.video_height))
            show = cv2.cvtColor(show, cv2.COLOR_BGR2RGB)
            # copy(): the QImage must not keep pointing at a numpy buffer that will be freed
            showImage = QImage(show.data, self.video_width, self.video_height,
                               3 * self.video_width, QImage.Format_RGB888).copy()
            self.update_image_singal.emit(showImage, True)
        self.cap.release()

    def stop(self):
        self.running = False
        self.wait()


####### main ########

if __name__ == '__main__':
    app = QApplication(sys.argv)
    ui = Ui_MainWindow()
    ui.show()
    sys.exit(app.exec_())
```

Inquiries welcome.

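This post presents a product that automatically recognizes any object in surveillance images. It analyzes image frames and has a range of commercial uses. A face model is trained for each enrolled person and the recognition rate is optimized so that people who cover their faces can still be identified. The client code is also shown, including the image transmission and processing code.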

