1. Create a Maven Project

1.1 Configure the Maven file (pom.xml)

<dependencies>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.12</artifactId>
        <version>3.0.0</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <!-- This plugin compiles Scala code into class files -->
        <plugin>
            <groupId>net.alchim31.maven</groupId>
            <artifactId>scala-maven-plugin</artifactId>
            <version>3.2.2</version>
            <executions>
                <execution>
                    <!-- Bind the plugin to Maven's compile phases -->
                    <goals>
                        <goal>compile</goal>
                        <goal>testCompile</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
        <!-- This plugin builds a single jar that also contains the dependencies -->
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-assembly-plugin</artifactId>
            <version>3.1.0</version>
            <configuration>
                <descriptorRefs>
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
            </configuration>
            <executions>
                <execution>
                    <id>make-assembly</id>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>

Configure Scala

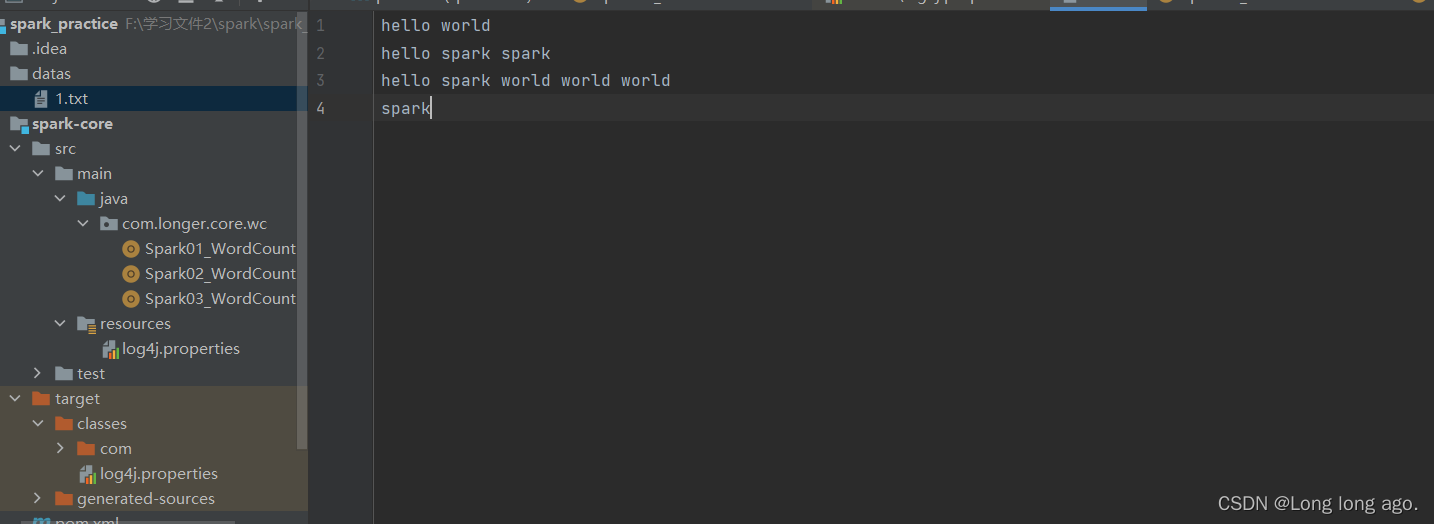

Create WordCount1 using groupBy and pattern matching in map

package com.longer.core.wc

import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

/**
 * Traditional Scala-style word count
 */
object Spark01_WordCount {
  def main(args: Array[String]): Unit = {
    // Application
    // Spark framework
    // TODO Establish the connection to the Spark framework
    // JDBC: Connection
    val sparkConf = new SparkConf().setMaster("local").setAppName("WordCount")
    val sc = new SparkContext(sparkConf)

    // TODO Run the business logic
    // 1. Read the files and get the data line by line
    // hello world
    val lines: RDD[String] = sc.textFile("datas")

    // 2. Flatten the lines that were read: split each line into words
    val newLines: RDD[String] = lines.flatMap(_.split(" "))

    // 3. Group identical words together
    val wordsGroup: RDD[(String, Iterable[String])] = newLines.groupBy(word => word)

    // 4. Count the words in each group
    val wordCount: RDD[(String, Int)] = wordsGroup.map {
      case (word, list) => (word, list.size)
    }

    // 5. Collect the result, which triggers the actual execution
    val array: Array[(String, Int)] = wordCount.collect()
    array.foreach(println)

    // TODO Close the connection
    sc.stop()
  }
}
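To see what the groupBy and pattern-matching steps do without starting Spark, the same logic can be sketched on an ordinary Scala collection. This is only an illustration: the object name `LocalGroupByWordCount` and the sample lines are assumptions standing in for the files under `datas`.

```scala
// Hypothetical stand-alone sketch: plain Scala collections, no Spark required.
object LocalGroupByWordCount {
  // Same steps as the RDD version: flatMap, groupBy, then map with a pattern match.
  def count(lines: Seq[String]): Map[String, Int] = {
    // Split every line into words and flatten the result
    val words: Seq[String] = lines.flatMap(_.split(" "))
    // Group identical words: word -> all occurrences of that word
    val groups: Map[String, Seq[String]] = words.groupBy(word => word)
    // The pattern match destructures each (key, group) tuple
    groups.map {
      case (word, list) => (word, list.size)
    }
  }

  def main(args: Array[String]): Unit = {
    // Assumed sample input standing in for the contents of "datas"
    val lines = Seq("hello world", "hello spark")
    count(lines).foreach(println)
  }
}
```

Note that every occurrence of a word is kept in its group until the final `list.size`, which is the cost the groupBy approach pays.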

WordCount2: implementation using reduceByKey

package com.atguigu.bigdata.spark.core.wc

import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object Spark02_WordCount1 {
  def main(args: Array[String]): Unit = {
    // Application
    // Spark framework
    // TODO Establish the connection to the Spark framework
    // JDBC: Connection
    val sparkConf = new SparkConf().setMaster("local").setAppName("WordCount")
    val sc = new SparkContext(sparkConf)

    // TODO Run the business logic
    // 1. Read the files and get the data line by line
    // hello world
    val lines: RDD[String] = sc.textFile("datas")

    // 2. Split each line into individual words (tokenization)
    // Flattening: breaking a whole into its individual parts
    // "hello world" => hello, world
    val words: RDD[String] = lines.flatMap(_.split(" "))

    // 3. Convert each word into a structure that is easy to count
    // word => (word, 1)
    val wordToOne = words.map(word => (word, 1))

    // 4. Group and aggregate the converted data
    // Values with the same key are aggregated
    // (word, 1) => (word, sum)
    val wordToSum: RDD[(String, Int)] = wordToOne.reduceByKey(_ + _)

    // 5. Collect the result and print it to the console
    val array: Array[(String, Int)] = wordToSum.collect()
    array.foreach(println)

    // TODO Close the connection
    sc.stop()
  }
}
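The key difference from the groupBy version is that `reduceByKey` never builds the list of occurrences for a word; it folds the values of equal keys into a running result. A minimal sketch of that idea on a local collection follows. The helper `reduceByKeyLocal` and the object name are hypothetical and are not part of the Spark API; the sample input is assumed.

```scala
// Hypothetical stand-alone sketch: plain Scala collections, no Spark required.
object LocalReduceByKeyWordCount {
  // Local stand-in for reduceByKey: merges the values of equal keys with `f`.
  def reduceByKeyLocal[K, V](pairs: Seq[(K, V)])(f: (V, V) => V): Map[K, V] =
    pairs.foldLeft(Map.empty[K, V]) { case (acc, (key, value)) =>
      val merged = acc.get(key) match {
        case Some(existing) => f(existing, value) // key seen before: combine
        case None           => value              // first occurrence of the key
      }
      acc.updated(key, merged)
    }

  def count(lines: Seq[String]): Map[String, Int] = {
    // word => (word, 1)
    val wordToOne = lines.flatMap(_.split(" ")).map(word => (word, 1))
    // (word, 1) => (word, sum)
    reduceByKeyLocal(wordToOne)(_ + _)
  }

  def main(args: Array[String]): Unit = {
    // Assumed sample input standing in for the contents of "datas"
    count(Seq("hello world", "hello spark")).foreach(println)
  }
}
```

Only one running value per key is held at any time, which is why this style is usually preferred over grouping when the goal is a sum or a count.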

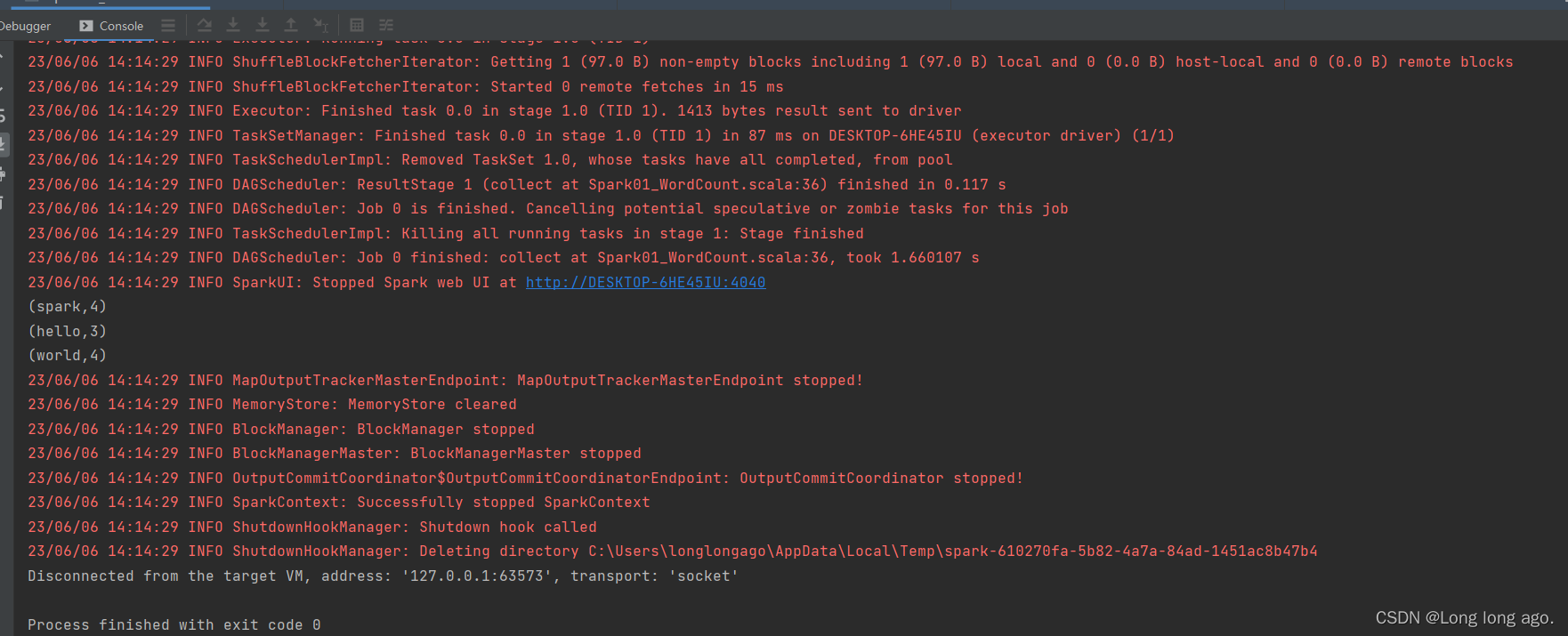

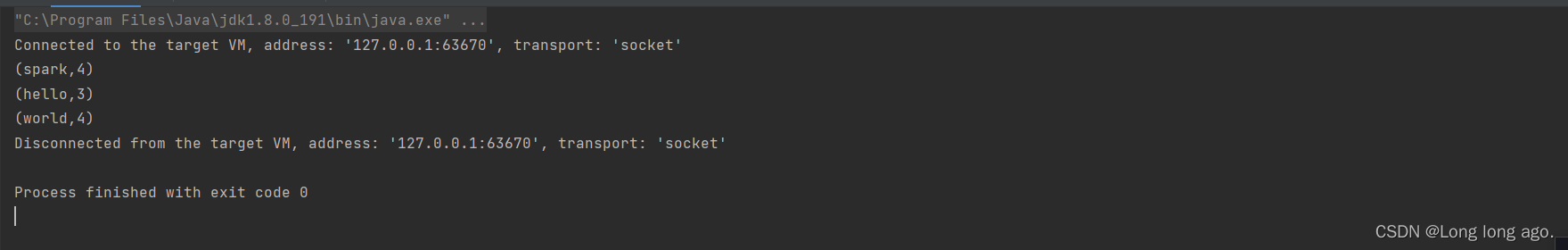

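Running the program prints a large amount of log output, so configure log4j to suppress it (for example in a log4j.properties file on the classpath):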

log4j.rootCategory=ERROR, console
log4j.appender.console=org.apache.log4j.ConsoleAppender
log4j.appender.console.target=System.err
log4j.appender.console.layout=org.apache.log4j.PatternLayout
log4j.appender.console.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n

# Set the default spark-shell log level to ERROR. When running the spark-shell, the
# log level for this class is used to overwrite the root logger's log level, so that
# the user can have different defaults for the shell and regular Spark apps.
log4j.logger.org.apache.spark.repl.Main=ERROR

# Settings to quiet third party logs that are too verbose
log4j.logger.org.spark_project.jetty=ERROR
log4j.logger.org.spark_project.jetty.util.component.AbstractLifeCycle=ERROR
log4j.logger.org.apache.spark.repl.SparkIMain$exprTyper=ERROR
log4j.logger.org.apache.spark.repl.SparkILoop$SparkILoopInterpreter=ERROR
log4j.logger.org.apache.parquet=ERROR
log4j.logger.parquet=ERROR

# SPARK-9183: Settings to avoid annoying messages when looking up nonexistent UDFs in SparkSQL with Hive support
log4j.logger.org.apache.hadoop.hive.metastore.RetryingHMSHandler=FATAL
log4j.logger.org.apache.hadoop.hive.ql.exec.FunctionRegistry=ERROR

If the configuration does not take effect, clean the project.

Before configuration

After configuration
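As an alternative to a log4j configuration file, the log level can also be raised in code with `SparkContext.setLogLevel`. The sketch below assumes the spark-core dependency from the pom; the object name `QuietLogging` and the helper `quietContext` are hypothetical. Messages logged while the SparkContext is being constructed are still printed, because the level is only changed afterwards.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical sketch: needs spark-core on the classpath.
object QuietLogging {
  // Creates a local SparkContext and raises the log threshold to ERROR.
  def quietContext(appName: String): SparkContext = {
    val sparkConf = new SparkConf().setMaster("local").setAppName(appName)
    val sc = new SparkContext(sparkConf)
    // Affects only messages logged after this call
    sc.setLogLevel("ERROR")
    sc
  }

  def main(args: Array[String]): Unit = {
    val sc = quietContext("WordCount")
    // Same word count as above, reading the "datas" directory
    sc.textFile("datas")
      .flatMap(_.split(" "))
      .map(word => (word, 1))
      .reduceByKey(_ + _)
      .collect()
      .foreach(println)
    sc.stop()
  }
}
```

The properties file remains the more complete option, since it also silences the startup messages.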
