Heap3

The Heap: Once upon a free() - bin 0x17

The Heap: dlmalloc unlink() exploit - bin 0x18

Smashing The Heap For Fun And Profit

This level introduces the Doug Lea Malloc (dlmalloc) and how heap metadata can be modified to change program execution.

source code:

    #include <stdlib.h>
    #include <unistd.h>
    #include <string.h>
    #include <sys/types.h>
    #include <stdio.h>

    void winner()
    {
      printf("that wasn't too bad now, was it? @ %d\n", time(NULL));
    }

    int main(int argc, char **argv)
    {
      char *a, *b, *c;

      a = malloc(32);
      b = malloc(32);
      c = malloc(32);

      strcpy(a, argv[1]);
      strcpy(b, argv[2]);
      strcpy(c, argv[3]);

      free(c);
      free(b);
      free(a);

      printf("dynamite failed?\n");
    }

Open in gdb:

Set a breakpoint at every call, run the program, and note the address of the heap. Then define a hook-stop.

Now we can see how the heap develops at each breakpoint.

after first malloc:

after second malloc:

after third malloc:

after 3 strcpy:

Next, we free them.

The last chunk gets freed first. The Cs got overwritten with 0, because the first two words of the data area have a special meaning in a free block.

Not much else changed, because these chunks are very small and malloc treats them as fastbin chunks.

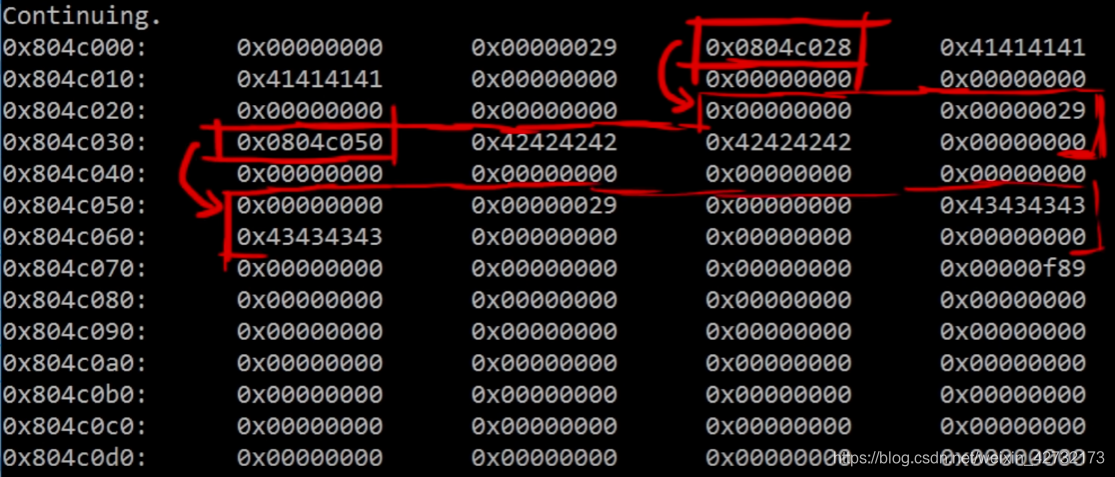

Let's continue and free the next one, so that we have two free blocks.

This time the Bs got overwritten with an address, which points to the other free block.

That is the special meaning of the overwritten word fd: it is a pointer to the next free block.

A LINKED LIST!

You may ask why the lowest bit of the size fields, which indicates that the previous block is in use, was not set to 0. These chunks are small fastbin chunks, and malloc wants to handle them as fast as possible. Given that we freed the blocks in reverse order, it just doesn't matter here, and setting that bit would be an unnecessary waste of time. Just imagine a pointer somewhere that always points to the first free chunk. When malloc looks for free space, it can simply follow the linked list to find all free chunks, so the information that the previous chunk is free is irrelevant. It would look a little different if the chunk sizes were bigger: then malloc and free would clean up a little more and use more heap metadata for housekeeping.

Let's have a look at the code (dlmalloc source, 2001 version).

Before we start reading the code, think for a moment about what we are looking for.

We are looking for writes that we can control via heap metadata (e.g. prev_size, size, fd, bk).

We are looking for a primitive that lets us perform an arbitrary write, which would allow us to overwrite an entry in the GOT. One way such abusable code can look is code that follows pointers we control. If there is code that performs a write based on pointers on the heap that we can overflow, we win.

    /* conversion from malloc headers to user pointers, and back */
    #define chunk2mem(p)   ((Void_t*)((char*)(p) + 2*SIZE_SZ))
    #define mem2chunk(mem) ((mchunkptr)((char*)(mem) - 2*SIZE_SZ))

    /* Get size, ignoring use bits */
    #define chunksize(p) ((p)->size & ~(SIZE_BITS))

    /* size field is or'ed with IS_MMAPPED if the chunk was obtained with mmap() */
    #define IS_MMAPPED 0x2

    /* check for mmap()'ed chunk */
    #define chunk_is_mmapped(p) ((p)->size & IS_MMAPPED)

    /* size field is or'ed with PREV_INUSE when previous adjacent chunk in use */
    #define PREV_INUSE 0x1

    /* extract inuse bit of previous chunk */
    #define prev_inuse(p) ((p)->size & PREV_INUSE)

    /* Treat space at ptr + offset as a chunk */
    #define chunk_at_offset(p, s) ((mchunkptr)(((char*)(p)) + (s)))

    /* Take a chunk off a bin list */
    #define unlink(P, BK, FD) { \
      FD = P->fd;               \
      BK = P->bk;               \
      FD->bk = BK;              \
      BK->fd = FD;              \
    }

    /*
      ------------------------------ free ------------------------------
    */

    #if __STD_C
    void fREe(Void_t* mem) /* mem: the address we want to free */
    #else
    void fREe(mem) Void_t* mem;
    #endif
    {
      mstate av = get_malloc_state();

      mchunkptr       p;           /* chunk corresponding to mem */
      INTERNAL_SIZE_T size;        /* its size */
      mfastbinptr*    fb;          /* associated fastbin */
      mchunkptr       nextchunk;   /* next contiguous chunk */
      INTERNAL_SIZE_T nextsize;    /* its size */
      int             nextinuse;   /* true if nextchunk is used */
      INTERNAL_SIZE_T prevsize;    /* size of previous contiguous chunk */
      mchunkptr       bck;         /* misc temp for linking */
      mchunkptr       fwd;         /* misc temp for linking */

      /* free(0) has no effect */
      if (mem != 0) {
        p = mem2chunk(mem); /* get the true starting address of the chunk */
        size = chunksize(p);

        check_inuse_chunk(p);

        /*
          If eligible, place chunk on a fastbin so it can be found
          and used quickly in malloc.
        */

        if ((unsigned long)(size) <= (unsigned long)(av->max_fast)

    #if TRIM_FASTBINS
            /*
              If TRIM_FASTBINS set, don't place chunks
              bordering top into fastbins
            */
            && (chunk_at_offset(p, size) != av->top)
    #endif
            ) {

          set_fastchunks(av);
          fb = &(av->fastbins[fastbin_index(size)]);
          p->fd = *fb;
          *fb = p;

          /*
            We need to make sure that the chunk size is greater than the maximum fastbin
            chunk size which is defined as 80 bytes so that our chunk won't be placed on
            a fastbin.
          */
        }

        /*
          Consolidate other non-mmapped chunks as they arrive.
        */

        else if (!chunk_is_mmapped(p)) {
          nextchunk = chunk_at_offset(p, size);
          nextsize = chunksize(nextchunk);

          /* consolidate backward */
          if (!prev_inuse(p)) {
            prevsize = p->prev_size;
            size += prevsize;
            p = chunk_at_offset(p, -((long) prevsize));
            unlink(p, bck, fwd);
          }

          if (nextchunk != av->top) {
            /* get and clear inuse bit */
            nextinuse = inuse_bit_at_offset(nextchunk, nextsize);
            set_head(nextchunk, nextsize);

            /* consolidate forward */
            if (!nextinuse) {
              unlink(nextchunk, bck, fwd);
              size += nextsize;
            }

            /*
              Place the chunk in unsorted chunk list. Chunks are
              not placed into regular bins until after they have
              been given one chance to be used in malloc.
            */

            bck = unsorted_chunks(av);
            fwd = bck->fd;
            p->bk = bck;
            p->fd = fwd;
            bck->fd = p;
            fwd->bk = p;

            set_head(p, size | PREV_INUSE);
            set_foot(p, size);

            check_free_chunk(p);
          }

          /*
            If the chunk borders the current high end of memory,
            consolidate into top
          */

          else {
            size += nextsize;
            set_head(p, size | PREV_INUSE);
            av->top = p;
            check_chunk(p);
          }

          /*
            If freeing a large space, consolidate possibly-surrounding
            chunks. Then, if the total unused topmost memory exceeds trim
            threshold, ask malloc_trim to reduce top.

            Unless max_fast is 0, we don't know if there are fastbins
            bordering top, so we cannot tell for sure whether threshold
            has been reached unless fastbins are consolidated. But we
            don't want to consolidate on each free. As a compromise,
            consolidation is performed if FASTBIN_CONSOLIDATION_THRESHOLD
            is reached.
          */

          if ((unsigned long)(size) >= FASTBIN_CONSOLIDATION_THRESHOLD) {
            if (have_fastchunks(av))
              malloc_consolidate(av);

    #ifndef MORECORE_CANNOT_TRIM
            if ((unsigned long)(chunksize(av->top)) >=
                (unsigned long)(av->trim_threshold))
              sYSTRIm(av->top_pad, av);
    #endif
          }

        }

        /*
          If the chunk was allocated via mmap, release via munmap()
          Note that if HAVE_MMAP is false but chunk_is_mmapped is
          true, then user must have overwritten memory. There's nothing
          we can do to catch this error unless DEBUG is set, in which case
          check_inuse_chunk (above) will have triggered error.
        */

        else {
    #if HAVE_MMAP
          int ret;
          INTERNAL_SIZE_T offset = p->prev_size;
          av->n_mmaps--;
          av->mmapped_mem -= (size + offset);
          ret = munmap((char*)p - offset, size + offset);
          /* munmap returns non-zero on failure */
          assert(ret == 0);
    #endif
        }
      }
    }

To be continued...
