What is FastAI

It is a high-level framework built on top of PyTorch, and its whole philosophy is to make neural networks dead simple!!

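FastAI is similar to Keras, but friendlier to beginners. FastAI's backend is PyTorch, while Keras's backend is TensorFlow. In my personal experience, the ranking by difficulty is:

TensorFlow > PyTorch > Keras > FastAI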

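More and more people in Kaggle competitions are now switching to FastAI. (Life is hard: I just finished learning PyTorch, and now I have to start on FastAI. Sob.)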

This is the official fastai website.

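I won't go into installation in detail; it basically comes down to:

```shell
pip install torch
pip install fastai
```

Note that the code in this post uses the fastai v1 API (ImageDataBunch, cnn_learner from fastai.vision). fastai v2 reorganized this API, so with a current install you may need a 1.x release (for example `pip install "fastai<2"`).

Dependencies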

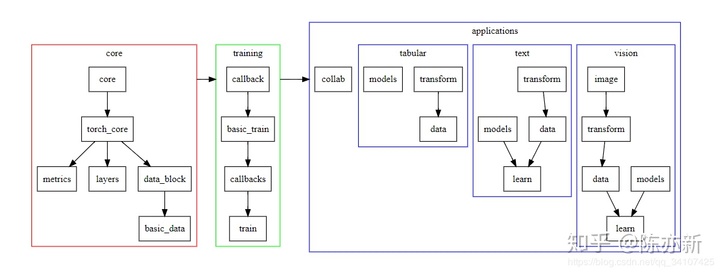

As background knowledge: this is the dependency graph of the core modules, as given in the official documentation.

Hands-on

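The quoted English passages in this section come from the official documentation, and my own explanation follows each one. If anything in my explanation is unclear or wrong, the official text takes precedence.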

from fastai.vision import models, URLs, ImageDataBunch, cnn_learner, untar_data, accuracy, get_transforms

# 干脆from fastai.vision import *

path = untar_data(URLs.MNIST_SAMPLE) # 下载数据集,这里只是MNIST的子集,只包含3和7的图像,会下载并解压(untar的命名原因)到/root/.fastai/data/mnist_sample(如果你是root用户)下,包含训练数据,测试数据,包含label的csv文件

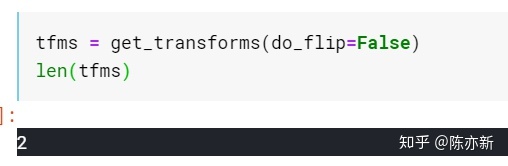

tfms = get_transforms(do_flip=False)

data = ImageDataBunch.from_folder(path,ds_tfms = tfms) # 利用ImageDataBunch读取文件夹,返回一个ImageDataBunch对象

learn = cnn_learner(data, models.resnet18, metrics=accuracy) # 构建cnn模型,使用resnet18预训练模型

learn.fit(1) # 训练一轮untar_data

Download url to fname if dest doesn’t exist, and un-tgz to folder dest.

In general, untar_data uses a url to download a tgz file under fname, and then un-tgz fname into a folder under dest.

In short: it downloads the archive and then extracts it.
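The "download only if needed, then extract" behaviour quoted above can be sketched with the standard library. This is not fastai's code: `untar_data_sketch` and its `(url, fname, dest)` arguments are hypothetical names chosen to mirror the quoted docstring, and the real untar_data has more options than this.

```python
import tarfile
import urllib.request
from pathlib import Path


def untar_data_sketch(url: str, fname: Path, dest: Path) -> Path:
    """Hypothetical, simplified version of the documented untar_data behaviour:
    download `url` to `fname` and un-tgz it into `dest`, unless `dest` already exists."""
    fname, dest = Path(fname), Path(dest)
    if dest.exists():
        return dest  # already extracted: nothing to download or unpack
    if not fname.exists():
        fname.parent.mkdir(parents=True, exist_ok=True)
        urllib.request.urlretrieve(url, str(fname))  # download the .tgz archive
    dest.mkdir(parents=True)
    with tarfile.open(fname, "r:gz") as archive:
        if hasattr(tarfile, "data_filter"):  # newer Pythons: reject unsafe member paths
            archive.extractall(dest, filter="data")  # "un-tgz" into dest
        else:
            archive.extractall(dest)
    return dest
```

Calling the sketch a second time returns immediately because `dest` already exists, so nothing is downloaded or extracted again.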

URLs

The following datasets are currently available:

S3_NLP, S3_COCO, MNIST_SAMPLE, MNIST_TINY, IMDB_SAMPLE, ADULT_SAMPLE, ML_SAMPLE, PLANET_SAMPLE, CIFAR, PETS, MNIST.

Strangely, though, downloading the full dataset via URLs.MNIST does not seem to work for me at the moment.

get_transforms()

No more building transforms the complicated PyTorch way, stacking RandomRotation and the like inside a Compose; now a single get_transforms() call does the job. Lovely.

get_transforms returns a tuple of two lists of transforms: one for the training set and one for the validation set (we don’t want to modify the pictures in the validation set, so the second list of transforms is limited to resizing the pictures).

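So get_transforms returns a tuple of two lists: the first is for the training set and the second for the validation set. Since we generally don't apply augmentation to the validation set, the validation transforms only resize the images.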
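To make the (training list, validation list) structure concrete, here is a toy sketch in plain Python. It is not fastai code: images are small 2-D lists, and `get_transforms_sketch`, `random_hflip`, `center_crop` and `apply_tfms` are hypothetical names. Only the shape of the return value follows the documentation quoted above; the center crop stands in for the size-only step on the validation side.

```python
import random
from typing import Callable, List, Tuple

Image = List[List[int]]                       # toy "image": a 2-D grid of pixel values
Transform = Callable[[Image, random.Random], Image]


def random_hflip(img: Image, rng: random.Random) -> Image:
    """Training-time augmentation: mirror left-right with probability 0.5."""
    return [row[::-1] for row in img] if rng.random() < 0.5 else img


def center_crop(size: int) -> Transform:
    """Deterministic size-only step: keep the central size x size block."""
    def _crop(img: Image, rng: random.Random) -> Image:
        top = (len(img) - size) // 2
        left = (len(img[0]) - size) // 2
        return [row[left:left + size] for row in img[top:top + size]]
    return _crop


def get_transforms_sketch(size: int) -> Tuple[List[Transform], List[Transform]]:
    """Hypothetical stand-in mirroring the (train, valid) shape of get_transforms' result."""
    train_tfms = [random_hflip, center_crop(size)]   # random augmentation + resizing
    valid_tfms = [center_crop(size)]                 # resizing only, no augmentation
    return train_tfms, valid_tfms


def apply_tfms(img: Image, tfms: List[Transform], rng: random.Random) -> Image:
    for tfm in tfms:                                 # apply the transforms in order
        img = tfm(img, rng)
    return img


if __name__ == "__main__":
    train_tfms, valid_tfms = get_transforms_sketch(size=2)
    img = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    print(apply_tfms(img, valid_tfms, random.Random(0)))  # -> [[6, 7], [10, 11]]
```

The validation output is the same for every random seed, while the training output is sometimes mirrored.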

Let's look at this function's parameters:

get_transforms(do_flip:bool=True, flip_vert:bool=False, max_rotate:float=10.0, max_zoom:float=1.1, max_lighting:float=0.2, max_warp:float=0.2, p_affine:float=0.75, p_lighting:float=0.75, xtra_tfms:Optional[Collection[Transform]]=None) → Collection[Transform]

do_flip: if True, a random flip is applied with probability 0.5

flip_vert: requires do_flip=True. If True, the image can be flipped vertically or rotated by 90 degrees; otherwise only a horizontal flip is applied

max_rotate: if not None, a random rotation between -max_rotate and max_rotate degrees is applied with probability p_affine

max_zoom: if not 1. or less, a random zoom between 1. and max_zoom is applied with probability p_affine

max_lighting: if not None, a random lighting and contrast change controlled by max_lighting is applied with probability p_lighting

max_warp: if not None, a random symmetric warp of magnitude between -max_warp and max_warp is applied with probability p_affine

p_affine: the probability that each affine transform and symmetric warp is applied

p_lighting: the probability that each lighting transform is applied

xtra_tfms: a list of additional transforms you would like to be applied
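How the probabilities above combine can be illustrated with a small sampler that, for one image, decides which augmentations fire and draws their magnitudes. This is a hypothetical sketch (`sample_augmentations` is not a fastai function), and treating lighting as one magnitude in [-max_lighting, max_lighting] is my own simplification.

```python
import random
from typing import Dict, Optional


def sample_augmentations(rng: random.Random, do_flip: bool = True,
                         max_rotate: Optional[float] = 10.0, max_zoom: Optional[float] = 1.1,
                         max_lighting: Optional[float] = 0.2, max_warp: Optional[float] = 0.2,
                         p_affine: float = 0.75, p_lighting: float = 0.75) -> Dict[str, float]:
    """Hypothetical sampler following the parameter descriptions quoted above."""
    fired: Dict[str, float] = {}
    if do_flip and rng.random() < 0.5:                         # flip with probability 0.5
        fired["flip"] = 1.0
    if max_rotate is not None and rng.random() < p_affine:     # each affine transform uses p_affine
        fired["rotate_deg"] = rng.uniform(-max_rotate, max_rotate)
    if max_zoom is not None and max_zoom > 1.0 and rng.random() < p_affine:
        fired["zoom"] = rng.uniform(1.0, max_zoom)             # zoom between 1. and max_zoom
    if max_warp is not None and rng.random() < p_affine:       # the symmetric warp also uses p_affine
        fired["warp"] = rng.uniform(-max_warp, max_warp)
    if max_lighting is not None and rng.random() < p_lighting:
        # assumption: a single lighting magnitude in [-max_lighting, max_lighting]
        fired["lighting"] = rng.uniform(-max_lighting, max_lighting)
    return fired
```

With the defaults, each affine transform fires independently in about 75% of the images, so an image can get any combination of rotation, zoom and warp.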

do_flip: if True, the image is randomly flipped.

flip_vert: if False, the image is only flipped horizontally; if True, it can also be flipped vertically or rotated by 90 degrees.
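The difference between flip_vert=False and flip_vert=True can be illustrated on a tiny 2 x 2 grid. This is a hypothetical sketch (`flip_variant` and its helpers are not fastai functions): with flip_vert=False only the identity and the horizontal mirror are reachable, while for flip_vert=True I assume the reachable set is all 8 symmetries of a square, i.e. horizontal and vertical flips combined with 90-degree rotations.

```python
from typing import List

Grid = List[List[int]]


def hflip(g: Grid) -> Grid:
    return [row[::-1] for row in g]        # mirror left-right


def vflip(g: Grid) -> Grid:
    return [row[:] for row in g[::-1]]     # mirror top-bottom


def transpose(g: Grid) -> Grid:
    return [list(col) for col in zip(*g)]  # mirror across the main diagonal


def flip_variant(g: Grid, k: int, flip_vert: bool) -> Grid:
    """Return symmetry number k of grid g.
    flip_vert=False: k % 2 selects identity or horizontal flip (2 variants).
    flip_vert=True:  the three bits of k % 8 select hflip, vflip and transpose (8 variants)."""
    if not flip_vert:
        return hflip(g) if k % 2 else g
    k %= 8
    if k & 1:
        g = hflip(g)
    if k & 2:
        g = vflip(g)
    if k & 4:
        g = transpose(g)
    return g
```

For instance, k=6 (a vertical flip followed by a transpose) turns [[1, 2], [3, 4]] into [[3, 1], [4, 2]], which is a 90-degree clockwise rotation.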

zoom_crop

zoom_crop(scale:float, do_rand:bool=False, p:float=1.0)

scale: Decimal or range of decimals to zoom the image

do_rand: If True, the transform is randomized; otherwise it's a zoom of scale and a center crop

p: Probability to apply the zoom

If do_rand is False, scale should be a single float; otherwise scale can be a range of floats, and the zoom factor is picked at random within that range.

Here is an example:
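The geometry behind zoom_crop can be sketched by computing which part of the original image is still visible after zooming in and cropping back to the original size. This is a hypothetical helper, not fastai's implementation: `zoom_crop_box` only handles zooming in (scale >= 1), and randomizing the crop position when do_rand=True is my assumption.

```python
import random
from typing import Optional, Tuple, Union

Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels


def zoom_crop_box(width: int, height: int, scale: Union[float, Tuple[float, float]],
                  do_rand: bool = False, p: float = 1.0,
                  rng: Optional[random.Random] = None) -> Box:
    """Hypothetical helper: the visible region of a width x height image after zooming in by
    `scale` and cropping back to the original size."""
    rng = rng or random.Random()
    if rng.random() >= p:
        return (0.0, 0.0, float(width), float(height))  # transform skipped: whole image
    if do_rand:
        lo, hi = scale if isinstance(scale, tuple) else (scale, scale)
        s = rng.uniform(lo, hi)                          # random zoom factor in the range
        col_pct, row_pct = rng.random(), rng.random()    # assumption: random crop position
    else:
        s = float(scale)                                 # fixed zoom factor
        col_pct = row_pct = 0.5                          # center crop
    if s < 1.0:
        raise ValueError("this sketch only handles zooming in (scale >= 1)")
    w, h = width / s, height / s                         # visible window shrinks by 1/s
    left, top = col_pct * (width - w), row_pct * (height - h)
    return (left, top, left + w, top + h)
```

With do_rand=False, zoom_crop_box(100, 100, 2.0) keeps the central 50 x 50 window, (25.0, 25.0, 75.0, 75.0).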

rand_resize_crop

rand_resize_crop(size:int, max_scale:float=2.0, ratios:Point=(0.75,1.33))

size: Final size of the image

max_scale: Zooms the image to a random scale up to this

ratios: Range of ratios in which a new one will be randomly picked

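I like this one. It is one of the main transforms, based on the approach used by recent ImageNet winners.

As I understand it, max_scale controls a random zoom: the image is first zoomed in or out at random, then a new width and height are picked at random, the image is stretched to that shape, and finally a random crop is taken?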

It determines a new width and height of the image after the random scale and squish to the new ratio are applied. Those are switched with probability 0.5. Then we return the part of the image with the width and height computed, centered in row_pct, col_pct if width and height are both less than the corresponding size of the image. Otherwise we try again with new random parameters.
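Here is how I read the procedure quoted above, as a plain-Python sketch that only computes the crop window. It is not fastai's implementation: the name `rand_resize_crop_box`, the `max_tries` limit, interpreting "zoom by scale" as keeping 1/scale of the image area, and the center-square fallback are all my own assumptions.

```python
import math
import random
from typing import Optional, Tuple


def rand_resize_crop_box(img_w: int, img_h: int, max_scale: float = 2.0,
                         ratios: Tuple[float, float] = (0.75, 1.33),
                         rng: Optional[random.Random] = None,
                         max_tries: int = 10) -> Tuple[int, int, int, int]:
    """Hypothetical sketch of the quoted procedure. Returns (left, top, w, h) of the crop;
    the crop would afterwards be resized to the final `size`."""
    rng = rng or random.Random()
    for _ in range(max_tries):
        scale = rng.uniform(1.0, max_scale)      # random zoom up to max_scale
        ratio = rng.uniform(*ratios)             # random new aspect ratio (width / height)
        area = img_w * img_h / scale             # assumption: zoom keeps 1/scale of the area
        w = round(math.sqrt(area * ratio))       # "squish" to the new ratio
        h = round(math.sqrt(area / ratio))
        if rng.random() < 0.5:                   # width and height switched with probability 0.5
            w, h = h, w
        if w <= img_w and h <= img_h:            # fits inside the image: accept
            row_pct, col_pct = rng.random(), rng.random()
            left = round(col_pct * (img_w - w))  # position given by col_pct / row_pct
            top = round(row_pct * (img_h - h))
            return left, top, w, h
        # otherwise: try again with new random parameters
    side = min(img_w, img_h)                     # assumption: fall back to a center square crop
    return (img_w - side) // 2, (img_h - side) // 2, side, side
```

Every accepted window lies inside the image; only its size and position vary from call to call.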

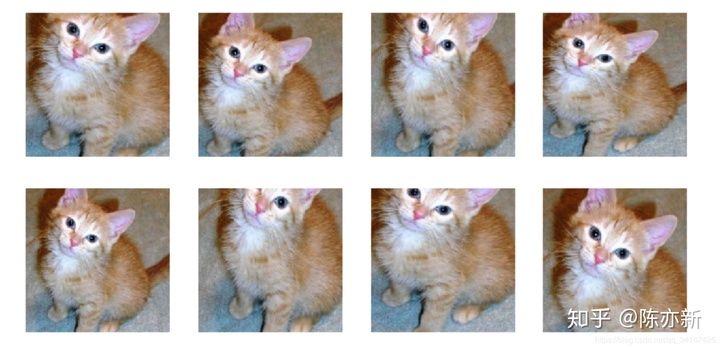

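```python
tfms = [rand_resize_crop(224)]
# plots_f is a small plotting helper defined in the fastai documentation notebook for
# vision.transform (it is not part of the library); here it draws a 2 x 4 grid of
# randomly transformed samples in a 12 x 6 figure
plots_f(2, 4, 12, 6, size=224)
```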

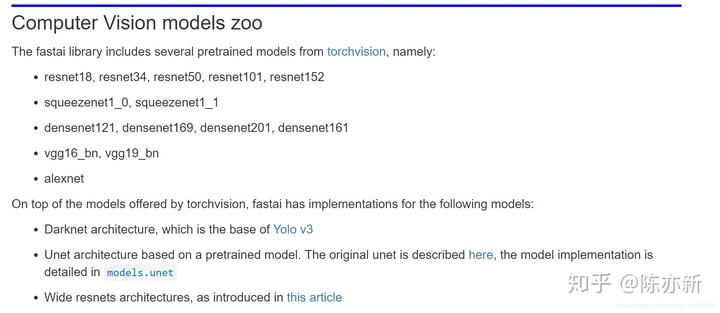

fastai.vision.models

For vision, the following pretrained models are currently supported:

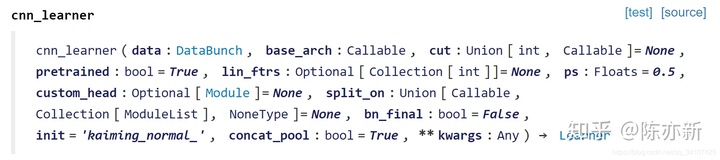

cnn_learner()

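This post introduces FastAI, an easy-to-use deep learning library built on PyTorch. It is similar to Keras but better suited to beginners; FastAI's backend is PyTorch, while Keras relies on TensorFlow. Through examples, the post shows how to use FastAI to download a dataset (such as MNIST), apply image transforms, and build and train a CNN from a pretrained model (such as ResNet-18). It focuses on the get_transforms() function, which simplifies image augmentation, and also discusses the learning curves of different deep learning frameworks and the trend of using FastAI in Kaggle competitions.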
