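Architecture planning

A usable Kubernetes (k8s) cluster needs at least one Master and one Node; this article builds a cluster with one Master node and one Node. Both are CentOS 7 virtual machines newly created in VMware. Before deploying, configure a static IP address on each virtual machine so that the address does not change after a reboot, as it can under DHCP.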

1. Environment preparation

| Node | Hostname | IP | OS |
| --- | --- | --- | --- |
| Master | k8s-master | 192.168.203.201 | CentOS 7 |
| Node1 | k8s-node-1 | 192.168.203.202 | CentOS 7 |

2. Download the offline packages

Download link: https://pan.baidu.com/s/19CRg4v3oACVSfZal_zi9oQ (extraction code: 132g). Many thanks to the person who originally shared these packages.

3. Set the hostnames

//Run on the Master:

hostnamectl --static set-hostname k8s-master

//Reboot

reboot

//Run on Node1:

hostnamectl --static set-hostname k8s-node-1

//Reboot

reboot

4. Edit the hosts file on each node and keep the contents identical

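//On both the Master and Node1, edit the hosts file

vi /etc/hosts

//Add the lines below, then save with :wq.

192.168.203.201 k8s-master

192.168.203.202 k8s-node-1

5. Disable the firewall

//Run on both the Master and Node1

systemctl stop firewalld

systemctl disable firewalld

6. Set up SSH trust between the Master and Node1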

//Run on the Master

ssh-keygen //press Enter at each prompt

ssh-copy-id k8s-node-1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'k8s-node-1 (192.168.203.202)' can't be established.

ECDSA key fingerprint is SHA256:dr2qCYlJD55sSUmkqYGlgSumYLfxCVFGL0TUdOoQr4k.

ECDSA key fingerprint is MD5:ff:d4:93:fe:13:45:7b:3c:cc:da:02:bd:4c:45:b6:7d.

Are you sure you want to continue connecting (yes/no)?

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

The authenticity of host 'k8s-node-1 (192.168.203.202)' can't be established.

ECDSA key fingerprint is SHA256:dr2qCYlJD55sSUmkqYGlgSumYLfxCVFGL0TUdOoQr4k.

ECDSA key fingerprint is MD5:ff:d4:93:fe:13:45:7b:3c:cc:da:02:bd:4c:45:b6:7d.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@k8s-node-1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'k8s-node-1'"

and check to make sure that only the key(s) you wanted were added.

Steps 7-13 below must be run on both the Master and Node1.

7. Disable SELinux

//Edit the config file and change SELINUX=enforcing to SELINUX=disabled, then save with :wq.

vi /etc/selinux/config
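The same edit can also be scripted instead of made by hand in vi. The following is a minimal sketch, assuming GNU sed (as shipped with CentOS 7); the function name `disable_selinux_in_config` is a hypothetical helper and not part of any tool.

```shell
# Hypothetical helper: change SELINUX=enforcing to SELINUX=disabled in an
# SELinux config file. Assumes GNU sed (for -i). The path is a parameter so
# the edit can be tried on a copy of the file first.
disable_selinux_in_config() {
  sed -i 's/^SELINUX=enforcing[[:space:]]*$/SELINUX=disabled/' "$1"
}

# Example (as root):
#   disable_selinux_in_config /etc/selinux/config
```

Only the SELINUX= line is matched, so the SELINUXTYPE= line in the same file is left alone.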

//Alternatively, switch SELinux off for the current session only (temporary, no reboot needed)

setenforce 0

8. Disable swap

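//Turn off swap for the current session

swapoff -a

//Edit /etc/fstab

vi /etc/fstab

//Comment out the swap line below with # so that swap is no longer mounted at boot, then save with :wq

#/dev/mapper/centos-swap swap swap defaults 0 0

If swap is left enabled, initializing the Master in a later step fails with an error.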
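The fstab edit can be sketched as a small script as well. This assumes a standard fstab layout in which the third field is the filesystem type; `comment_out_swap` is a hypothetical helper name, and it is worth backing the file up before running anything like it.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style
# file. A line counts as a swap entry when it is not already a comment and
# its third field (the filesystem type) is "swap".
comment_out_swap() {
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$1" > "$1.tmp" &&
    cat "$1.tmp" > "$1" &&   # write back in place, keeping the original permissions
    rm -f "$1.tmp"
}

# Example (as root, after making a backup copy):
#   comment_out_swap /etc/fstab
```

Lines that are already commented out are left unchanged, so running it twice does not add a second #.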

9. Configure the bridge kernel parameters so that kubeadm does not report routing warnings

//Write the settings to k8s.conf

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

//Apply immediately

sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /usr/lib/sysctl.d/60-libvirtd.conf ...

fs.aio-max-nr = 1048576

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

* Applying /etc/sysctl.conf ...
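To confirm what the file actually sets, a small parser can read a key back from it. This is only a sketch; `sysctl_conf_value` is a hypothetical helper. As a separate assumption about the host, the net.bridge.* keys are normally provided by the br_netfilter kernel module, so if `sysctl --system` reports them as missing, loading that module first is the usual remedy.

```shell
# Hypothetical helper: print the value assigned to a key in a sysctl-style
# config file ("key = value" lines).
sysctl_conf_value() {
  # $1 = config file, $2 = key name
  awk -F= -v key="$2" '
    {
      name = $1; value = $2
      gsub(/[[:space:]]/, "", name)    # strip spaces around the key
      gsub(/[[:space:]]/, "", value)   # strip spaces around the value
      if (name == key) print value
    }' "$1"
}

# Example on a real host (assumption: br_netfilter provides the net.bridge.* keys):
#   modprobe br_netfilter
#   sysctl_conf_value /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-iptables
#   sysctl net.bridge.bridge-nf-call-iptables
```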

10. Install Docker

cd k8s_images

rpm -ihv docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

//If this fails with a missing-dependency error, install the dependency packages first:

cd mypackages

yum -y install *.rpm

Loaded plugins: fastestmirror, langpacks

Examining audit-libs-python-2.7.6-3.el7.x86_64.rpm: audit-libs-python-2.7.6-3.el7.x86_64

audit-libs-python-2.7.6-3.el7.x86_64.rpm: does not update installed package.

Examining checkpolicy-2.5-4.el7.x86_64.rpm: checkpolicy-2.5-4.el7.x86_64

checkpolicy-2.5-4.el7.x86_64.rpm: does not update installed package.

Examining libcgroup-0.41-13.el7.x86_64.rpm: libcgroup-0.41-13.el7.x86_64

libcgroup-0.41-13.el7.x86_64.rpm: does not update installed package.

Examining libseccomp-2.3.1-3.el7.x86_64.rpm: libseccomp-2.3.1-3.el7.x86_64

libseccomp-2.3.1-3.el7.x86_64.rpm: does not update installed package.

Examining libsemanage-python-2.5-8.el7.x86_64.rpm: libsemanage-python-2.5-8.el7.x86_64

libsemanage-python-2.5-8.el7.x86_64.rpm: does not update installed package.

Examining policycoreutils-python-2.5-17.1.el7.x86_64.rpm: policycoreutils-python-2.5-17.1.el7.x86_64

policycoreutils-python-2.5-17.1.el7.x86_64.rpm: does not update installed package.

Examining python-IPy-0.75-6.el7.noarch.rpm: python-IPy-0.75-6.el7.noarch

python-IPy-0.75-6.el7.noarch.rpm: does not update installed package.

Examining setools-libs-3.3.8-1.1.el7.x86_64.rpm: setools-libs-3.3.8-1.1.el7.x86_64

setools-libs-3.3.8-1.1.el7.x86_64.rpm: does not update installed package.

Error: Nothing to do

cd k8s_images

rpm -ihv docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

Preparing... ################################# [100%]

package docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch is already installed

rpm -ihv docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:docker-ce-17.03.2.ce-1.el7.centos ( 2%################################# [100%]

//If the install above fails with a dependency error, run this first:

rpm -ihv libtool-ltdl-2.4.2-22.el7_3.x86_64.rpm

systemctl start docker && systemctl enable docker

docker version

Client:

Version: 17.03.2-ce

API version: 1.27

Go version: go1.7.5

Git commit: f5ec1e2

Built: Tue Jun 27 02:21:36 2017

OS/Arch: linux/amd64

Server:

Version: 17.03.2-ce

API version: 1.27 (minimum version 1.12)

Go version: go1.7.5

Git commit: f5ec1e2

Built: Tue Jun 27 02:21:36 2017

OS/Arch: linux/amd64

Experimental: false

11. Load the images

cd docker_images/

for i in *.tar; do docker load < "$i"; done

6a749002dd6a: Loading layer 1.338 MB/1.338 MB

bbd07ea14872: Loading layer 159.2 MB/159.2 MB

611a3394df5d: Loading layer 32.44 MB/32.44 MB

Loaded image: gcr.io/google_containers/etcd-amd64:3.1.10

5bef08742407: Loading layer 4.221 MB/4.221 MB

b87261cc1ccb: Loading layer 2.56 kB/2.56 kB

ac66a5c581a8: Loading layer 362 kB/362 kB

22f71f461ac8: Loading layer 3.072 kB/3.072 kB

686a085da152: Loading layer 36.63 MB/36.63 MB

Loaded image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

cd69fdcd7591: Loading layer 46.31 MB/46.31 MB

Loaded image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

bd94706d2c63: Loading layer 38.07 MB/38.07 MB

Loaded image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

0271b8eebde3: Loading layer 1.338 MB/1.338 MB

9ccc9fba4253: Loading layer 209.2 MB/209.2 MB

Loaded image: gcr.io/google_containers/kube-apiserver-amd64:v1.9.0

50a426d115f8: Loading layer 136.6 MB/136.6 MB

Loaded image: gcr.io/google_containers/kube-controller-manager-amd64:v1.9.0

684c19bf2c27: Loading layer 44.2 MB/44.2 MB

deb4ca39ea31: Loading layer 3.358 MB/3.358 MB

9c44b0d51ed1: Loading layer 63.38 MB/63.38 MB

Loaded image: gcr.io/google_containers/kube-proxy-amd64:v1.9.0

64c55db70c4a: Loading layer 121.2 MB/121.2 MB

Loaded image: gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.1

f733b8f8af29: Loading layer 61.57 MB/61.57 MB

Loaded image: gcr.io/google_containers/kube-scheduler-amd64:v1.9.0

5f70bf18a086: Loading layer 1.024 kB/1.024 kB

41ff149e94f2: Loading layer 748.5 kB/748.5 kB

Loaded image: gcr.io/google_containers/pause-amd64:3.0
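After loading, it can be useful to confirm that nothing was skipped before moving on. The following is a sketch under stated assumptions: `missing_images` is a hypothetical helper, and the list of required images is whatever you pass to it (the two shown in the example are taken from the load output above).

```shell
# Hypothetical helper: read "repository:tag" names on stdin (one per line)
# and print each required image, given as an argument, that is not listed.
missing_images() {
  have=$(cat)
  for image in "$@"; do
    printf '%s\n' "$have" | grep -qxF -- "$image" || printf '%s\n' "$image"
  done
}

# Example on a real host:
#   docker images --format '{{.Repository}}:{{.Tag}}' |
#     missing_images gcr.io/google_containers/pause-amd64:3.0 \
#                    gcr.io/google_containers/etcd-amd64:3.1.10
```

Empty output means every image passed as an argument is present locally.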

12. Install the kubelet, kubectl, and kubeadm packages

cd k8s_images

rpm -ivh socat-1.7.3.2-2.el7.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:socat-1.7.3.2-2.el7 ( 6%################################# [100%]

rpm -ivh kubernetes-cni-0.6.0-0.x86_64.rpm kubelet-1.9.0-0.x86_64.rpm kubectl-1.9.0-0.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:kubelet-1.9.0-0 ( 1%################################# [ 33%]

2:kubernetes-cni-0.6.0-0 ( 2%################################# [ 67%]

3:kubectl-1.9.0-0 ( 1%################################# [100%]

rpm -ivh kubeadm-1.9.0-0.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:kubeadm-1.9.0-0 ( 2%################################# [100%]

13. Start kubelet

systemctl start kubelet && systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.

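14. Initialize the Master (run on the Master)

kubeadm init --kubernetes-version=v1.9.0 --pod-network-cidr=10.244.0.0/16

//This fails with an error; check the system log

cat /var/log/messages

The cause is that kubelet and Docker are using different cgroup drivers: Docker defaults to cgroupfs, while kubelet here defaults to systemd. Edit 10-kubeadm.conf and change the driver to cgroupfs (this is required on both the Master and Node1).

//Make this change on the master and on every node

vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf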
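The driver change can also be scripted. This sketch assumes the drop-in file already contains a `--cgroup-driver=` argument (check the file before relying on that) and that GNU sed is available; `set_kubelet_cgroup_driver` is a hypothetical helper name.

```shell
# Hypothetical helper: rewrite the --cgroup-driver= value in a kubelet
# systemd drop-in file. Assumes GNU sed (for -i) and that the file already
# contains a --cgroup-driver=... argument; if it does not, nothing changes.
set_kubelet_cgroup_driver() {
  # $1 = drop-in file, $2 = driver name (for example cgroupfs)
  sed -i "s/--cgroup-driver=[A-Za-z]*/--cgroup-driver=$2/" "$1"
}

# Example (as root), first checking which driver Docker reports:
#   docker info 2>/dev/null | grep -i 'cgroup driver'
#   set_kubelet_cgroup_driver /etc/systemd/system/kubelet.service.d/10-kubeadm.conf cgroupfs
```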

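If a virtual machine goes down unexpectedly, the Master can resume from this step; a Node only needs to run the restart command below and then complete the kubeadm join described later.

//Restart

systemctl daemon-reload && systemctl restart kubelet

//Reset the environment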

kubeadm reset

[preflight] Running pre-flight checks.

[reset] Stopping the kubelet service.

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Removing kubernetes-managed containers.

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes /var/lib/etcd]

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

//Initialize again

kubeadm init --kubernetes-version=v1.9.0 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.9.0

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Starting the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.203.201]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 27.502503 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node k8s-master as master by adding a label and a taint

[markmaster] Master k8s-master tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: e446e3.ffa351926a6229d8

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: kube-dns

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join --token e446e3.ffa351926a6229d8 192.168.203.201:6443 --discovery-token-ca-cert-hash sha256:5e2f8ae1f7ffbc4548afa3b226cb384e8d73ee28122caa0286a1c5cdddf80956

Record the final kubeadm join command above; it is needed when a node joins the master.

//List the tokens

kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

e446e3.ffa351926a6229d8 23h 2021-01-13T15:15:15+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

//The default token expires after 24 hours; machines that join the cluster later need a newly generated token

kubeadm token create
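A new token on its own is not enough to rebuild the join command, because the `--discovery-token-ca-cert-hash` value is needed too. One common way to recompute it is an openssl pipeline over the cluster CA certificate. The sketch below assumes openssl is installed, that the CA uses an RSA key, and that the certificate is the ca.crt file under /etc/kubernetes/pki; `ca_cert_hash` is a hypothetical helper name.

```shell
# Hypothetical helper: print the SHA-256 hash of a CA certificate's public
# key, in the hex form that follows "sha256:" in a kubeadm join command.
# Assumes openssl is installed and the certificate holds an RSA public key.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'   # keep only the hex digest
}

# Example on the master:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```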

//Check the kubelet status

systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since 二 2021-01-12 15:14:55 CST; 5min ago

Docs: http://kubernetes.io/docs/

Main PID: 67781 (kubelet)

Tasks: 22

Memory: 38.6M

CGroup: /system.slice/kubelet.service

└─67781 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --pod-manifest...

1月 12 15:20:35 k8s-master kubelet[67781]: W0112 15:20:35.869674 67781 kubelet.go:1592] Deleting mirror pod "kube-controller-manager-k8s-maste... outdated

1月 12 15:20:35 k8s-master kubelet[67781]: W0112 15:20:35.875330 67781 kubelet.go:1592] Deleting mirror pod "kube-scheduler-k8s-master_kube-sy... outdated

1月 12 15:20:38 k8s-master kubelet[67781]: W0112 15:20:38.077009 67781 cni.go:171] Unable to update cni config: No networks found in /etc/cni/net.d

1月 12 15:20:38 k8s-master kubelet[67781]: E0112 15:20:38.077213 67781 kubelet.go:2105] Container runtime network not ready: NetworkReady=fals...itialized

1月 12 15:20:40 k8s-master kubelet[67781]: W0112 15:20:40.817325 67781 kubelet.go:1592] Deleting mirror pod "etcd-k8s-master_kube-system(aa820... outdated

1月 12 15:20:40 k8s-master kubelet[67781]: W0112 15:20:40.821764 67781 kubelet.go:1592] Deleting mirror pod "kube-apiserver-k8s-master_kube-sy... outdated

1月 12 15:20:40 k8s-master kubelet[67781]: W0112 15:20:40.825327 67781 kubelet.go:1592] Deleting mirror pod "kube-controller-manager-k8s-maste... outdated

1月 12 15:20:40 k8s-master kubelet[67781]: W0112 15:20:40.832452 67781 kubelet.go:1592] Deleting mirror pod "kube-scheduler-k8s-master_kube-sy... outdated

1月 12 15:20:43 k8s-master kubelet[67781]: W0112 15:20:43.079619 67781 cni.go:171] Unable to update cni config: No networks found in /etc/cni/net.d

1月 12 15:20:43 k8s-master kubelet[67781]: E0112 15:20:43.079804 67781 kubelet.go:2105] Container runtime network not ready: NetworkReady=fals...itialized

Hint: Some lines were ellipsized, use -l to show in full.

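15. At this point the root user still cannot use kubectl to control the cluster; set up the environment first

Copy the contents of /etc/kubernetes on the master to the same path on each node.

//For a non-root user

su <non-root user>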

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

//For the root user

export KUBECONFIG=/etc/kubernetes/admin.conf

//Alternatively, put it in ~/.bash_profile

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

//Reload the environment variables

source ~/.bash_profile
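Running the echo line above more than once appends the export repeatedly. A guarded append avoids that; the sketch below uses a hypothetical helper named `ensure_line`.

```shell
# Hypothetical helper: append a line to a file only if that exact line is
# not already present (the file is created if it does not exist).
ensure_line() {
  # $1 = file, $2 = line to add
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example:
#   ensure_line ~/.bash_profile 'export KUBECONFIG=/etc/kubernetes/admin.conf'
```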

//Check the version

kubectl version

Client Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.0", GitCommit:"925c127ec6b946659ad0fd596fa959be43f0cc05", GitTreeState:"clean", BuildDate:"2017-12-15T21:07:38Z", GoVersion:"go1.9.2", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.0", GitCommit:"925c127ec6b946659ad0fd596fa959be43f0cc05", GitTreeState:"clean", BuildDate:"2017-12-15T20:55:30Z", GoVersion:"go1.9.2", Compiler:"gc", Platform:"linux/amd64"}

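If you see the message that the non-root user is not in the sudoers file and that the incident will be reported, see: adding a non-root user to the root group.

16. Install a pod network. flannel, calico, weave, and macvlan are all options; flannel is used here (run on the Master)

The network range in kube-flannel.yml must match the value passed to kubeadm --pod-network-cidr; the default is 10.244.0.0/16.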
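A quick way to check the match is to extract the CIDR from the manifest before applying it. This sketch assumes the manifest embeds a net-conf.json section with a line of the form `"Network": "10.244.0.0/16",`; `flannel_network` is a hypothetical helper name.

```shell
# Hypothetical helper: print the "Network" CIDR found in a flannel manifest.
# Assumes the manifest contains a line such as:  "Network": "10.244.0.0/16",
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Example:
#   [ "$(flannel_network kube-flannel.yml)" = "10.244.0.0/16" ] && echo "CIDR matches"
```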

cd k8s_images

kubectl create -f kube-flannel.yml

clusterrole "flannel" created

clusterrolebinding "flannel" created

serviceaccount "flannel" created

configmap "kube-flannel-cfg" created

daemonset "kube-flannel-ds" created

17. Join Node1 to the Master (run on Node1)

//Run the kubeadm join command saved earlier

kubeadm join --token e446e3.ffa351926a6229d8 192.168.203.201:6443 --discovery-token-ca-cert-hash sha256:5e2f8ae1f7ffbc4548afa3b226cb384e8d73ee28122caa0286a1c5cdddf80956

//A command of the following form may be needed instead (substitute your own token, hash, and address): kubeadm join --token aa78f6.8b4cafc8ed26c34f --discovery-token-ca-cert-hash sha256:0fd95a9bc67a7bf0ef42da968a0d55d92e52898ec37c971bd77ee501d845b538 172.16.6.79:6443 --skip-preflight-checks

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[preflight] Starting the kubelet service

[discovery] Trying to connect to API Server "192.168.203.201:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.203.201:6443"

[discovery] Requesting info from "https://192.168.203.201:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.203.201:6443"

[discovery] Successfully established connection with API Server "192.168.203.201:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

18. Verify the nodes and pods; output like the following means Kubernetes 1.9.0 was installed successfully (run on the Master)

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 39m v1.9.0

k8s-node-1 Ready <none> 1m v1.9.0
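A node can stay NotReady for a short time after joining, for example while the network pod is still starting. A small filter over the node listing makes that easy to spot; `not_ready_nodes` below is a hypothetical helper, and it assumes the column layout shown above (NAME first, STATUS second).

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the names of nodes whose STATUS column is not exactly "Ready".
# The first line is treated as the header and skipped.
not_ready_nodes() {
  awk 'NR > 1 && NF >= 2 && $2 != "Ready" { print $1 }'
}

# Example on the master:
#   kubectl get nodes | not_ready_nodes
```

Empty output means every listed node reports Ready.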

kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-k8s-master 1/1 Running 0 4m

kube-system kube-apiserver-k8s-master 1/1 Running 0 4m

kube-system kube-controller-manager-k8s-master 1/1 Running 0 4m

kube-system kube-dns-6f4fd4bdf-4fzjq 3/3 Running 0 40m

kube-system kube-flannel-ds-b78fb 1/1 Running 0 5m

kube-system kube-flannel-ds-zbtsr 1/1 Running 0 2m

kube-system kube-proxy-5hxsg 1/1 Running 0 2m

kube-system kube-proxy-gsnbs 1/1 Running 0 40m

kube-system kube-scheduler-k8s-master 1/1 Running 0 4m

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

026ffcdb2e18 db76ee297b85 "/sidecar --v=2 --..." 4 minutes ago Up 4 minutes k8s_sidecar_kube-dns-6f4fd4bdf-4fzjq_kube-system_f427256c-54a5-11eb-b8b3-000c298a2984_0

cd22c0719aff 5feec37454f4 "/dnsmasq-nanny -v..." 4 minutes ago Up 4 minutes k8s_dnsmasq_kube-dns-6f4fd4bdf-4fzjq_kube-system_f427256c-54a5-11eb-b8b3-000c298a2984_0

9f85b0322c7c 5d049a8c4eec "/kube-dns --domai..." 4 minutes ago Up 4 minutes k8s_kubedns_kube-dns-6f4fd4bdf-4fzjq_kube-system_f427256c-54a5-11eb-b8b3-000c298a2984_0

4ff2ce834a87 gcr.io/google_containers/pause-amd64:3.0 "/pause" 4 minutes ago Up 4 minutes k8s_POD_kube-dns-6f4fd4bdf-4fzjq_kube-system_f427256c-54a5-11eb-b8b3-000c298a2984_0

8c5b37f84e40 9611457a1219 "/opt/bin/flanneld..." 5 minutes ago Up 5 minutes k8s_kube-flannel_kube-flannel-ds-b78fb_kube-system_d3454b82-54aa-11eb-b8b3-000c298a2984_0

1364a40bfba9 gcr.io/google_containers/pause-amd64:3.0 "/pause" 6 minutes ago Up 6 minutes k8s_POD_kube-flannel-ds-b78fb_kube-system_d3454b82-54aa-11eb-b8b3-000c298a2984_0

f1bc8093b6d9 f6f363e6e98e "/usr/local/bin/ku..." 41 minutes ago Up 41 minutes k8s_kube-proxy_kube-proxy-gsnbs_kube-system_f4270b66-54a5-11eb-b8b3-000c298a2984_0

9ed93da6d683 gcr.io/google_containers/pause-amd64:3.0 "/pause" 41 minutes ago Up 41 minutes k8s_POD_kube-proxy-gsnbs_kube-system_f4270b66-54a5-11eb-b8b3-000c298a2984_0

f7d17a94ccd7 7bff5aa286d7 "kube-apiserver --..." 41 minutes ago Up 41 minutes k8s_kube-apiserver_kube-apiserver-k8s-master_kube-system_d032a8e812b9242291777a03e9cb8f9f_0

9451ebfe2fd0 1406502a6459 "etcd --listen-cli..." 41 minutes ago Up 41 minutes k8s_etcd_etcd-k8s-master_kube-system_408851a572c13f8177557fdb9151111c_0

a2def272e59b 3bb172f9452c "kube-controller-m..." 41 minutes ago Up 41 minutes k8s_kube-controller-manager_kube-controller-manager-k8s-master_kube-system_ba06de5c8fd46dce7feebb55d380f362_0

5365b5ba9d36 5ceb21996307 "kube-scheduler --..." 41 minutes ago Up 41 minutes k8s_kube-scheduler_kube-scheduler-k8s-master_kube-system_daf4ae2370716908643234b98eb0dab8_0

98677461d5f8 gcr.io/google_containers/pause-amd64:3.0 "/pause" 41 minutes ago Up 41 minutes k8s_POD_kube-apiserver-k8s-master_kube-system_d032a8e812b9242291777a03e9cb8f9f_0

ac7c736a3d9d gcr.io/google_containers/pause-amd64:3.0 "/pause" 41 minutes ago Up 41 minutes k8s_POD_kube-controller-manager-k8s-master_kube-system_ba06de5c8fd46dce7feebb55d380f362_0

f54dbf16a1f7 gcr.io/google_containers/pause-amd64:3.0 "/pause" 41 minutes ago Up 41 minutes k8s_POD_etcd-k8s-master_kube-system_408851a572c13f8177557fdb9151111c_0

a47e95b6d6e9 gcr.io/google_containers/pause-amd64:3.0 "/pause" 41 minutes ago Up 41 minutes k8s_POD_kube-scheduler-k8s-master_kube-system_daf4ae2370716908643234b98eb0dab8_0

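19. Deploy kubernetes-dashboard (run on the Master)

cd k8s_images

vi kubernetes-dashboard.yaml

Set type to NodePort and choose any port in the range 30000-32767; 32666 is used here (the value already in the file). The port range can be changed through kube-apiserver.yaml.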

# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32666
  selector:
    k8s-app: kubernetes-dashboard
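Before applying the manifest, the chosen nodePort can be checked against the 30000-32767 range mentioned above. This is a minimal sketch; `valid_nodeport` is a hypothetical helper, and the range is hard-coded on the assumption that the apiserver's port range has not been changed.

```shell
# Hypothetical helper: succeed only if the argument is a whole number in
# the range 30000-32767 (assumes the NodePort range has not been changed).
valid_nodeport() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Example:
#   valid_nodeport 32666 && echo "port is inside the NodePort range"
```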

kubectl create -f kubernetes-dashboard.yaml

secret "kubernetes-dashboard-certs" created

serviceaccount "kubernetes-dashboard" created

role "kubernetes-dashboard-minimal" created

rolebinding "kubernetes-dashboard-minimal" created

deployment "kubernetes-dashboard" created

service "kubernetes-dashboard" created

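Set the authentication method. The dashboard offers kubeconfig and token login by default; neither is used here. Instead, basic auth is enabled on the apiserver.

//This file holds the password, user name, and user ID, in that order

echo 'admin,admin,2' > /etc/kubernetes/pki/basic_auth_file

//Edit kube-apiserver.yaml to add basic auth to kube-apiserver

vi /etc/kubernetes/manifests/kube-apiserver.yaml

//Add the line below. Note: the list dash and the leading -- of the flag must be separated by one ordinary space

- --basic_auth_file=/etc/kubernetes/pki/basic_auth_file

//After saving, if the API server pod has stopped, restart the kubelet service; kubelet then restarts the kube-apiserver pod

systemctl restart kubelet

//Update the apiserver

kubectl apply -f /etc/kubernetes/manifests/kube-apiserver.yaml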

pod "kube-apiserver" created

//Authorization: Kubernetes versions after 1.6 use the RBAC authorization model. The built-in cluster-admin role has full permissions, so binding the admin user to cluster-admin gives admin those permissions.

kubectl create clusterrolebinding login-on-dashboard-with-cluster-admin --clusterrole=cluster-admin --user=admin

clusterrolebinding "login-on-dashboard-with-cluster-admin" created

//Verify the apiserver

curl --insecure https://192.168.203.201:6443 --basic -u admin:admin

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/",

"/apis/admissionregistration.k8s.io",

"/apis/admissionregistration.k8s.io/v1beta1",

"/apis/apiextensions.k8s.io",

"/apis/apiextensions.k8s.io/v1beta1",

"/apis/apiregistration.k8s.io",

"/apis/apiregistration.k8s.io/v1beta1",

"/apis/apps",

"/apis/apps/v1",

"/apis/apps/v1beta1",

"/apis/apps/v1beta2",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/autoscaling/v2beta1",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v1beta1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1beta1",

"/apis/events.k8s.io",

"/apis/events.k8s.io/v1beta1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/networking.k8s.io",

"/apis/networking.k8s.io/v1",

"/apis/policy",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1",

"/apis/rbac.authorization.k8s.io/v1beta1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/autoregister-completion",

"/healthz/etcd",

"/healthz/ping",

"/healthz/poststarthook/apiservice-openapi-controller",

"/healthz/poststarthook/apiservice-registration-controller",

"/healthz/poststarthook/apiservice-status-available-controller",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/ca-registration",

"/healthz/poststarthook/generic-apiserver-start-informers",

"/healthz/poststarthook/kube-apiserver-autoregistration",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/healthz/poststarthook/start-apiextensions-controllers",

"/healthz/poststarthook/start-apiextensions-informers",

"/healthz/poststarthook/start-kube-aggregator-informers",

"/healthz/poststarthook/start-kube-apiserver-informers",

"/logs",

"/metrics",

"/swagger-2.0.0.json",

"/swagger-2.0.0.pb-v1",

"/swagger-2.0.0.pb-v1.gz",

"/swagger.json",

"/swaggerapi",

"/ui",

"/ui/",

"/version"

]

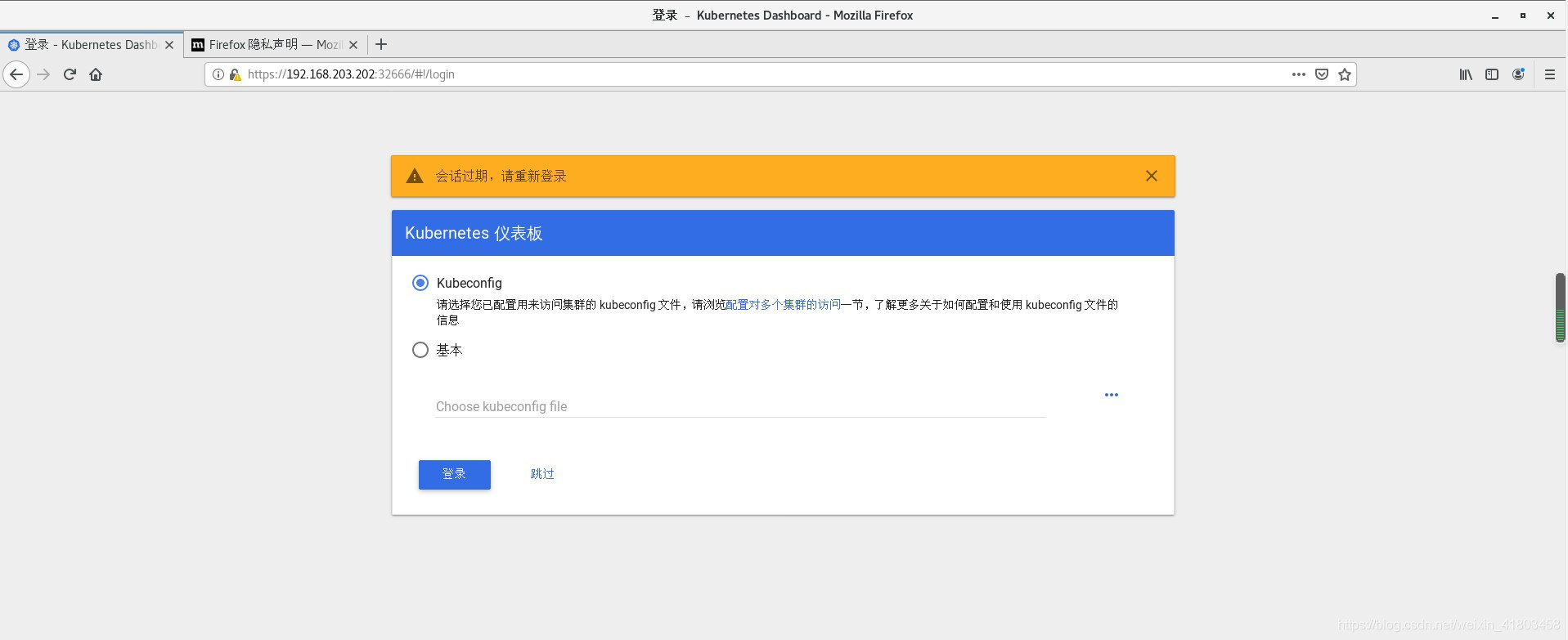

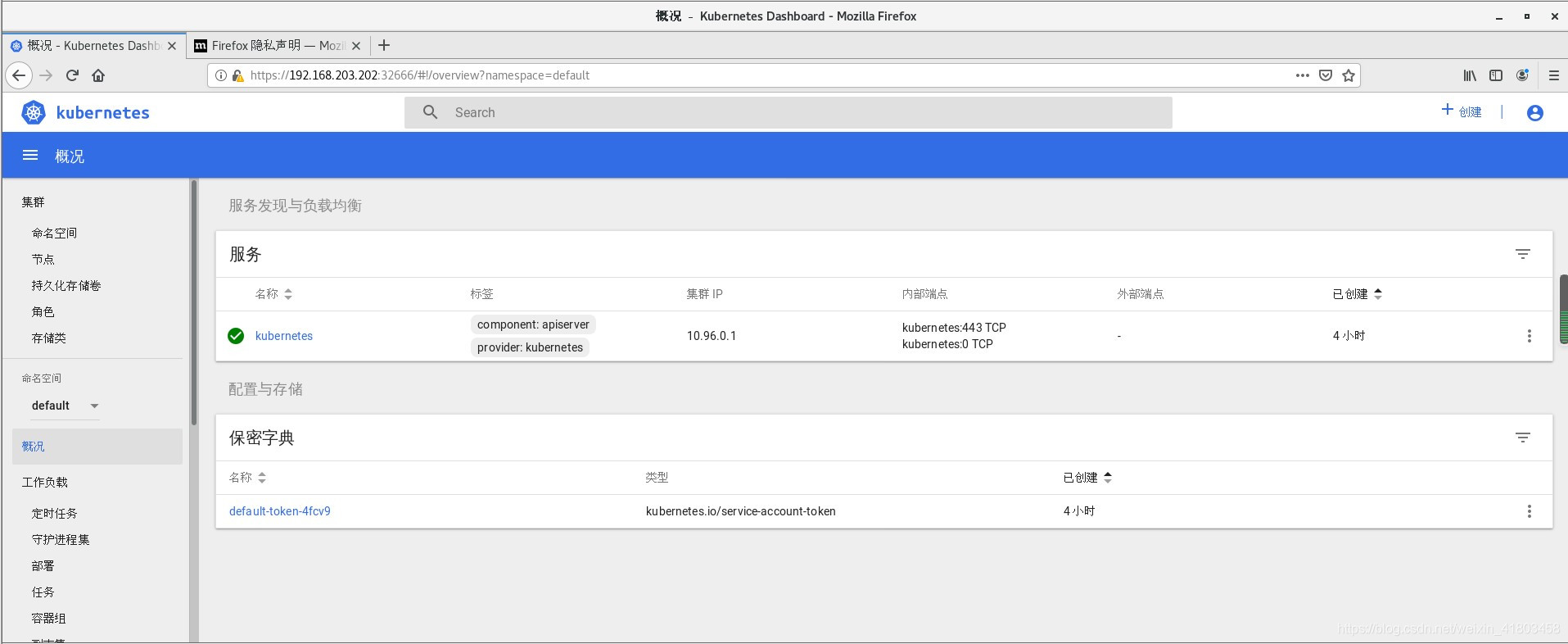

}通过火狐浏览器访问https://192.168.203.202:32666,192.168.203.202是Node1节点IP,选择“基本”,输入账号密码:admin即可。

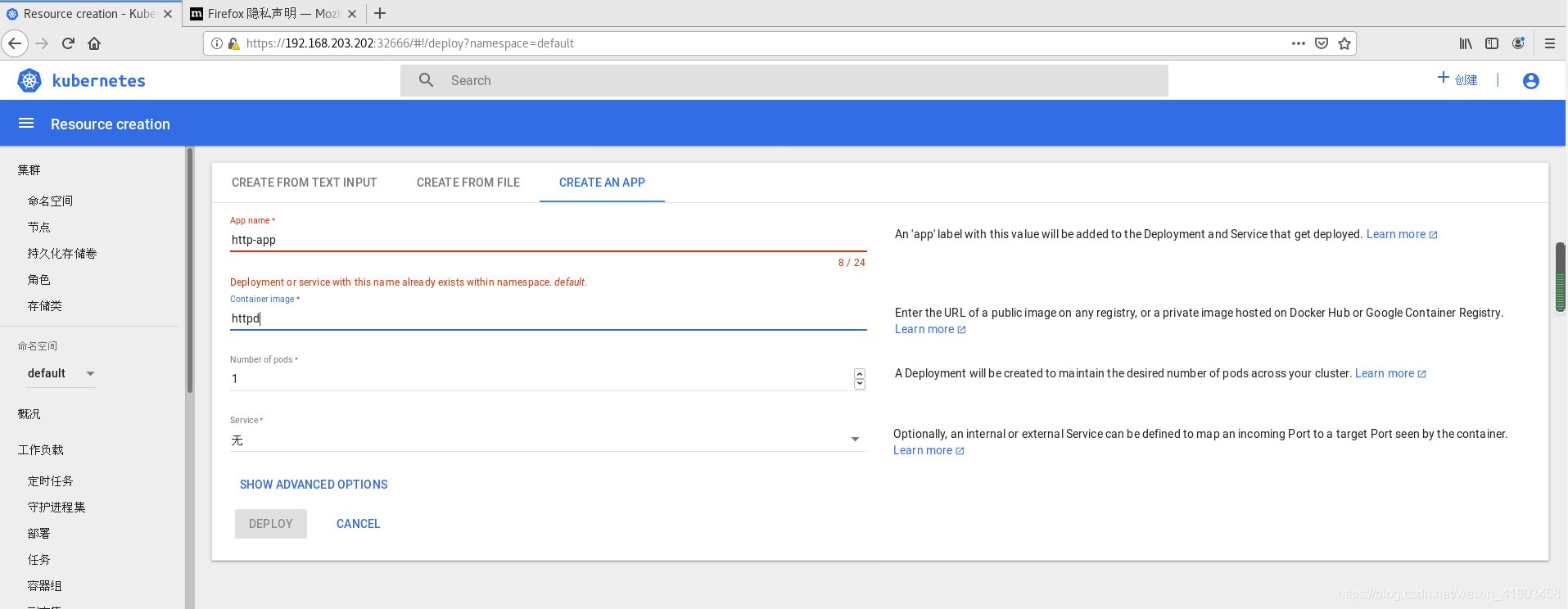

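Click "Create" in the upper-right corner to create a test application (ignore the red text; the screenshot was taken after the application had already been created). On the third line, the number of pods should not exceed the number of Nodes that were set up: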

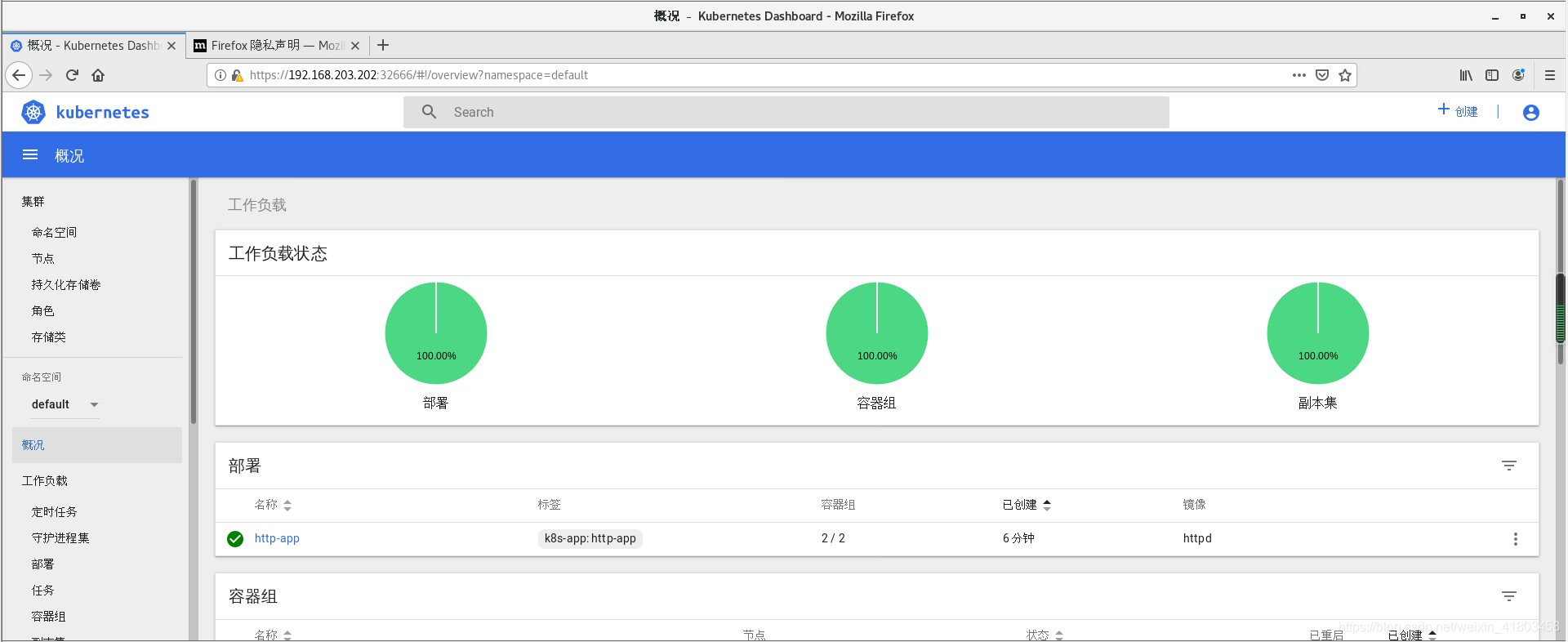

Then click "Overview" on the left to view the result.
