I. Background

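When a Parquet file has a very large number of columns, for example 10,000, merging small Parquet files with the Spark SQL DataFrame API becomes very slow. The API converts Parquet's columns into rows regardless of whether a column holds a value, which throws away the advantage of Parquet's columnar storage. A simple DataFrame-based Parquet merge is therefore no longer practical. Nearly every blog post or forum thread that a web search turns up describes the same Spark SQL DataFrame implementation, which is of no help in this scenario.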

II. Investigation, Implementation, and Evaluation

Investigation turned up three Parquet merge approaches:

a. Spark SQL DataFrame API

b. MapReduce

c. A custom implementation built on the Parquet Java read/write API

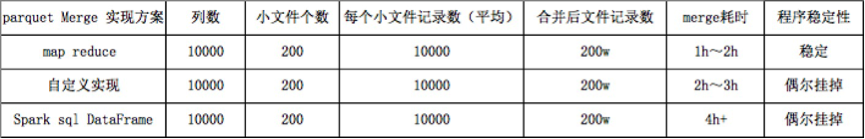

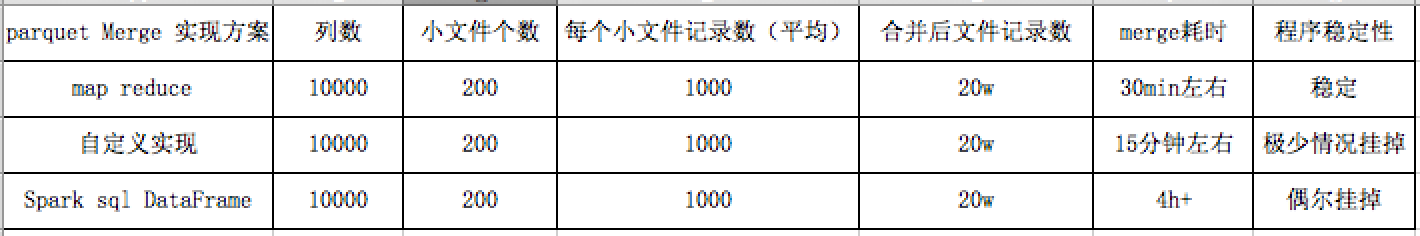

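I implemented all three approaches and evaluated their performance and stability:

1. With 10,000 columns, small files averaging 10,000 records each, and 2,000,000 records in total, the Hadoop MR job had the best performance and stability of the three.

2. With 10,000 columns, small files averaging 1,000 records each, and 200,000 records in total, the custom implementation had the best performance, with stability slightly below that of MR.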

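Summary: the comparisons above all merge many small files into a single large file. When the merge instead produces several Parquet files, comparison and analysis show that MR scales better: the more output files there are, the more reducers run, and the job's runtime drops by a corresponding multiple. So at 200,000 records or fewer, when merging wide Parquet files into one large file, the custom implementation is the better choice, since both its performance and its stability hold up. If there is no strict requirement on the number of output files and it can be raised, say merging 200 small files into 2 or more, such as 10 or 20, the Hadoop MapReduce implementation is preferable, and its performance and stability are the best of the three. For wide Parquet merges, whatever the data volume, number of output files, or records per file, the Spark SQL DataFrame approach is impractical, because most of its time goes to the column-to-row conversion.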
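The selection rule in the summary can be sketched as a small helper. This is only an illustration: the class name `MergePlanner`, the `Strategy` enum, and the exact 200,000-record cut-off are assumptions drawn from the two test scenarios above, not measured thresholds. It covers only wide-schema merges, where the DataFrame approach is already ruled out.

```java
public class MergePlanner {

    /** The two approaches that remain viable for wide schemas. */
    enum Strategy { MAPREDUCE, CUSTOM_PARQUET_API }

    // Hypothetical cut-off, taken from the 200,000-record test scenario.
    static final long SMALL_MERGE_RECORDS = 200_000L;

    /**
     * Picks a merge approach for a wide-schema Parquet merge.
     *
     * @param totalRecords total number of records across all small files
     * @param outputFiles  number of Parquet files the merge should produce
     */
    static Strategy choose(long totalRecords, int outputFiles) {
        if (outputFiles < 1) {
            throw new IllegalArgumentException("outputFiles must be at least 1");
        }
        // Several output files: MR scales with the number of reducers.
        if (outputFiles > 1) {
            return Strategy.MAPREDUCE;
        }
        // Single output file: the custom implementation wins at small volumes.
        return totalRecords <= SMALL_MERGE_RECORDS
                ? Strategy.CUSTOM_PARQUET_API
                : Strategy.MAPREDUCE;
    }

    public static void main(String[] args) {
        System.out.println(choose(200_000L, 1));
        System.out.println(choose(2_000_000L, 1));
        System.out.println(choose(200_000L, 10));
    }
}
```

In practice the cut-off would need to be re-measured on the target cluster, since it depends on record size and available memory.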

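III. Our cluster currently runs Spark 1.6.3, and the existing data was written with Parquet 1.6 (fairly old), so I built the Parquet merge against parquet-hadoop-bundle:1.6.0 as well. Because older Parquet versions have the PARQUET-251 bug (fixed in later versions such as 1.9), dictionary encoding must be turned off: set enableDictionary to false when creating the ParquetWriter, or, for the Hadoop MR implementation, call ParquetOutputFormat.setEnableDictionary(job, false). Below is my own sample code for the Hadoop MR and custom Java Parquet API implementations of Parquet merge.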

1. Hadoop MR implementation (main class and supporting classes)

1.1 Main class ParquetMergeMR

    package com.axdoc.mr;

    import com.axdoc.bean.BehaviorBean;
    import com.axdoc.log.common.hdfs.FileSystemUtils;
    import com.axdoc.log.common.parquet.ParquetUtils;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import parquet.example.data.Group;
    import parquet.example.data.simple.SimpleGroupFactory;
    import parquet.hadoop.ParquetInputFormat;
    import parquet.hadoop.ParquetOutputFormat;
    import parquet.hadoop.api.DelegatingReadSupport;
    import parquet.hadoop.api.InitContext;
    import parquet.hadoop.api.ReadSupport;
    import parquet.hadoop.example.GroupReadSupport;
    import parquet.hadoop.example.GroupWriteSupport;

    import java.io.IOException;
    import java.lang.reflect.InvocationTargetException;

    import static parquet.hadoop.metadata.CompressionCodecName.SNAPPY;

    /**
     * Created by moyunqing on 2017/11/15.
     */
    public class ParquetMergeMR {

        private static final Logger logger = LoggerFactory.getLogger(ParquetMergeMR.class);

        /**
         * Partitioner for the Parquet merge.
         */
        public static class ParquetMergePartitioner extends HashPartitioner<BehaviorBean, NullWritable> {
            @Override
            public int getPartition(BehaviorBean key, NullWritable value, int numReduceTasks) {
                // Send every record with the same distinct_id to the same reducer.
                // A null id hashes to 0, the same value as "".hashCode().
                int hash = key.getDistinct_id() == null ? 0 : key.getDistinct_id().hashCode();
                // Clear the sign bit so the modulo result is never negative.
                return (hash & Integer.MAX_VALUE) % numReduceTasks;
            }
        }

        /**
         * Parquet mapper: converts each Group into a BehaviorBean key.
         */
        public static class ParquetMergeMapper extends Mapper<Void, Group, BehaviorBean, NullWritable> {
            @Override
            protected void map(Void key, Group value, Context context) throws IOException, InterruptedException {
                BehaviorBean bean = BehaviorBean.fromGroup(value);
                context.write(bean, NullWritable.get());
            }
        }

        /**
         * Parquet reducer: converts each BehaviorBean key back into a Group.
         */
        public static class ParquetMergeReducer extends Reducer<BehaviorBean, NullWritable, Void, Group> {
            private SimpleGroupFactory factory;

            @Override
            protected void setup(Context context) throws IOException, InterruptedException {
                super.setup(context);
                factory = new SimpleGroupFactory(GroupWriteSupport.getSchema(context.getConfiguration()));
            }

            @Override
            protected void reduce(BehaviorBean key, Iterable<NullWritable> values, Context context)
                    throws IOException, InterruptedException {
                try {
                    // Keys that compare equal are grouped, so each distinct key is written once.
                    context.write(null, ParquetUtils.fromBean(factory, key));
                } catch (IllegalAccessException | NoSuchMethodException | InvocationTargetException e) {
                    // Fail the task instead of silently dropping the record.
                    throw new IOException("failed to convert bean to parquet group", e);
                }
            }
        }

        /**
         * Parquet read support.
         */
        public static final class MergeReadSupport extends DelegatingReadSupport<Group> {
            public MergeReadSupport() {
                super(new GroupReadSupport());
            }

            @Override
            public ReadContext init(InitContext context) {
                return super.init(context);
            }
        }

        /**
         * Entry point for standalone runs.
         *
         * @param args
         * @throws Exception
         */
        public static void main(String[] args) throws Exception {
            parquetMerge(args);
        }

        /**
         * Entry point for the Parquet merge.
         *
         * @param args input paths, output path, schema, and optionally the number of reducers
         * @return true if the job succeeded
         * @throws Exception
         */
        public static boolean parquetMerge(String[] args) throws Exception {
            if (args == null || args.length == 0)
                throw new IllegalArgumentException("Missing input arguments: input path, output path!");
            if (args.length == 1)
                throw new IllegalArgumentException("Missing input argument: output path!");
            // The schema is required: Configuration.set fails on a null value and
            // GroupWriteSupport cannot build output records without it.
            if (args.length == 2)
                throw new IllegalArgumentException("Missing input argument: schema!");
            String inputs = args[0];
            String output = args[1];
            String schema = args[2];
            // Validate all arguments before removing any existing output.
            if (FileSystemUtils.exists(output)) {
                FileSystemUtils.delete(output);
            }
            int reduceNum = 1;
            if (args.length >= 4 && args[3].matches("^\\d+$"))
                reduceNum = Integer.parseInt(args[3]);
            logger.info("inputs => " + inputs + ",output => " + output);

            Configuration conf = new Configuration();
            conf.set("parquet.example.schema", schema);
            conf.set("mapred.child.java.opts", "-Xmx2048m");
            conf.set("mapred.job.reduce.input.buffer.percent", "0.5");
            conf.set("io.sort.mb", "200");
            conf.set(ReadSupport.PARQUET_READ_SCHEMA, schema);

            Job job = Job.getInstance(conf, "parquet-merge");
            job.setJarByClass(ParquetMergeMR.class);
            job.setMapperClass(ParquetMergeMapper.class);
            job.setReducerClass(ParquetMergeReducer.class);
            // Defaults to 1 reducer, so the merge produces a single output file.
            job.setNumReduceTasks(reduceNum);
            // Set the partitioner.
            job.setPartitionerClass(ParquetMergePartitioner.class);
            job.setMapOutputKeyClass(BehaviorBean.class);
            job.setMapOutputValueClass(NullWritable.class);
            job.setInputFormatClass(ParquetInputFormat.class);
            ParquetInputFormat.setReadSupportClass(job, MergeReadSupport.class);
            ParquetInputFormat.addInputPaths(job, inputs);
            //job.setCombinerClass(ParquetMergeReducer.class);
            //job.setOutputKeyClass(NullWritable.class);
            job.setOutputValueClass(Group.class);
            job.setOutputFormatClass(ParquetOutputFormat.class);
            ParquetOutputFormat.setOutputPath(job, new Path(output));
            ParquetOutputFormat.setCompression(job, SNAPPY);
            // Dictionary encoding must stay off with Parquet 1.6 (PARQUET-251).
            ParquetOutputFormat.setEnableDictionary(job, false);
            ParquetOutputFormat.setWriteSupportClass(job, GroupWriteSupport.class);

            boolean result = job.waitForCompletion(true);
            if (result)
                logger.info("merge parquet files from [" + inputs + "] to [" + output + "] success.");
            else
                logger.error("merge parquet files from [" + inputs + "] to [" + output + "] failed.");
            return result;
        }
    }
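The partitioning rule used by ParquetMergePartitioner can be tried in isolation. The sketch below uses only the JDK; the class name `PartitionSketch` and the sample ids are hypothetical. It mirrors the same arithmetic: a null id hashes to 0, and the sign bit is cleared before the modulo so that a negative `hashCode()` cannot yield a negative reducer index.

```java
public class PartitionSketch {

    /** Maps a possibly-null id to a reducer index in [0, numReduceTasks). */
    static int partitionFor(String distinctId, int numReduceTasks) {
        int hash = (distinctId == null) ? 0 : distinctId.hashCode();
        // Without the mask, a negative hash would give a negative remainder.
        return (hash & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        // Hypothetical sample ids; equal ids always map to the same reducer.
        String[] ids = {"user-1", "user-2", null, "user-1"};
        for (String id : ids) {
            System.out.println(id + " -> reducer " + partitionFor(id, 4));
        }
    }
}
```

Because the index depends only on the id, raising the reducer count (the fourth argument of parquetMerge) spreads ids across more output files while keeping each id's records together.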

1.2 HDFS utility class FileSystemUtils

    package com.axdoc.log.common.hdfs;

    import com.axdoc.log.common.api.hdfs.FileStatusFilter;
    import org.apache.commons.lang.StringUtils;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;

    /**
     * Created by moyunqing on 2016/12/9.
     */
    public class FileSystemUtils {

        private final static Logger logger = LoggerFactory.getLogger(FileSystemUtils.class);

        public static final Integer FILE_LEVEL_DIR = 0;
        public static final Integer FILE_LEVEL_FILE = 1;
        public static final Integer FILE_LEVEL_DOC = 2;

        public static FileSystem getFileSystem() {
            FileSystem fs = null;
            try {
                fs = FileSystem.get(new Configuration());
            } catch (IOException e) {
                logger.error("[fs utils]: get file system failed!", e);
            }
            return fs;
        }

        public static FileSystem getFileSystem(Configuration conf) {
            FileSystem fs = null;
            try {
                fs = FileSystem.get(conf);
            } catch (IOException e) {
                logger.error("[fs utils]:get file system failed!", e);
            }
            return fs;
        }

        public static FSDataOutputStream append(String path) {
            if (StringUtils.isBlank(path))
                return null;
            FSDataOutputStream write = null;
            try {
                FileSystem fs = getFileSystem();
                write = fs.append(new Path(path));
            } catch (IOException e) {
                logger.error("[fs utils]:append path " + path + " failed!", e);
            }
            return write;
        }

        public static FSDataOutputStream create(String path) {
            if (StringUtils.isBlank(path))
                return null;
            FSDataOutputStream write = null;
            try {
                FileSystem fs = getFileSystem();
                write = fs.create(new Path(path));
            } catch (IOException e) {
                logger.error("[fs utils]:create path " + path + " failed!", e);
            }
            return write;
        }

        public static FSDataOutputStream create(String path, boolean overwrite) {
            if (StringUtils.isBlank(path))
                return null;
            FSDataOutputStream write = null;
            try {
                FileSystem fs = getFileSystem();
                write = fs.create(new Path(path), overwrite);
            } catch (IOException e) {
                logger.error("[fs utils]:create path " + path + " failed!", e);
            }
            return write;
        }

        public static boolean rename(String src, String dest) {
            boolean isSuccess = false;
            if (StringUtils.isBlank(src) || StringUtils.isBlank(dest))
                return isSuccess;
            try {
                FileSystem fs = getFileSystem();
                if (exists(src))
                    isSuccess = fs.rename(new Path(src), new Path(dest));
            } catch (IOException e) {
                logger.error("[fs utils]:rename src:" + src + " to dest:" + dest + " failed!", e);
            }
            return isSuccess;
        }

        public static boolean mkdirs(String path) {
            if (StringUtils.isBlank(path))
                return false;
            boolean isSuccess = false;
            try {
                FileSystem fs = getFileSystem();
                isSuccess = fs.mkdirs(new Path(path));
            } catch (IOException e) {
                logger.error("[fs utils]:mkdirs path " + path + " failed!", e);
            }
            return isSuccess;
        }

        public static boolean exists(String path) {
            if (StringUtils.isBlank(path))
                return false;
            boolean isExists = false;
            try {
                FileSystem fs = getFileSystem();
                if (fs != null)
                    isExists = fs.exists(new Path(path));
            } catch (IOException e) {
                logger.error("[fs utils]:cannot confirm the " + path + " exists!", e);
            }
            return isExists;
        }

        /** Deletes the path recursively. */
        public static boolean delete(String path) {
            if (StringUtils.isBlank(path))
                return false;
            boolean isDelete = false;
            try {
                FileSystem fs = getFileSystem();
                isDelete = fs.delete(new Path(path), true);
            } catch (IOException e) {
                logger.error("[fs utils]:delete path " + path + " failed!", e);
            }
            return isDelete;
        }

        public static boolean delete(String path, boolean recursive) {
            if (StringUtils.isBlank(path))
                return false;
            boolean isDelete = false;
            try {
                FileSystem fs = getFileSystem();
                isDelete = fs.delete(new Path(path), recursive);
            } catch (IOException e) {
                logger.error("[fs utils]:delete path " + path + " failed!", e);
            }
            return isDelete;
        }

        /**
         * Lists the entries under a path.
         *
         * @param path   the path to list
         * @param filter the path filter
         * @param fLevel kind of entry to return: 0 for directories, 1 for files, 2 for either
         * @return
         */
        public static String[] listFiles(String path, PathFilter filter, Integer fLevel) {
            if (StringUtils.isBlank(path))
                return null;
            return listFiles(path, filter, fLevel, false).toArray(new String[]{});
        }

        public static String []

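When a Parquet file has as many as 10,000 columns, merging with the conventional Spark SQL DataFrame API is inefficient. This article examines and compares three merge approaches: Spark DataFrame, MapReduce, and a custom Java API implementation. Across the data volumes tested, MapReduce stands out for performance and stability, especially when the merge produces several output files, while the custom implementation works better for merging a small volume of data into a single large file. When implementing either, watch out for the dictionary issue in older Parquet versions and make sure dictionary encoding is disabled when creating the ParquetWriter.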
