salt-cp '*' yarn-env.sh /software/servers/hadoop-2.8.0/etc/hadoop/

Service node layout

| IP | Hostname | Services |
|---|---|---|
| 172.168.28.11 | node-01 | zkfc, namenode, datanode, nodemanager, mysql |
| 172.168.28.12 | node-02 | zookeeper, zkfc, namenode, datanode, nodemanager, mysql, journalnode |
| 172.168.28.13 | node-03 | zookeeper, datanode, resourcemanager, journalnode |
| 172.168.28.14 | node-04 | zookeeper, journalnode, datanode, resourcemanager |
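The commands in this article target Salt nodegroups (`-N all`, `nn`, `rm`, `zk`, `jn`, `dn`) whose definitions are not shown. Below is a minimal sketch of how they could be declared from the table above. It assumes the minion IDs are the hostnames node-01 to node-04 (adjust if your minion IDs are IP addresses) and that the groups live in a master.d drop-in file; the file path and the `write_nodegroups` function are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes Salt nodegroup definitions matching the layout table.
# ASSUMPTION: minion IDs are node-01..node-04; change the L@ lists if they are IPs.
write_nodegroups() {
  local conf="$1"   # e.g. /etc/salt/master.d/nodegroups.conf (assumed location)
  cat > "$conf" <<'EOF'
nodegroups:
  all: 'L@node-01,node-02,node-03,node-04'
  nn: 'L@node-01,node-02'
  rm: 'L@node-03,node-04'
  zk: 'L@node-02,node-03,node-04'
  jn: 'L@node-02,node-03,node-04'
  dn: 'L@node-01,node-02,node-03,node-04'
EOF
}
```

Usage: `write_nodegroups /etc/salt/master.d/nodegroups.conf`, then restart the salt-master service so it picks up the new groups.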

Basic server configuration

Create cluster users

Hadoop admin user

salt -N all os_util.useradd hadp 600

YARN admin user

salt -N all os_util.useradd yarn 600

MapReduce admin user

salt -N all os_util.useradd mapred 600

Create the hadoop group (GID 800) and add the hadp, yarn, and mapred users to it

salt -N all cmd.run 'groupadd hadoop -g 800;usermod -G hadoop hadp;usermod -G hadoop mapred;usermod -G hadoop yarn'
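`usermod -G` replaces a user's supplementary groups instead of appending to them. That is harmless for freshly created users, but the result is worth verifying on every node. A minimal sketch of such a check, assuming the standard `getent group` output format (`name:x:gid:member1,member2`); the `group_has_members` function is hypothetical:

```shell
# Hypothetical check: succeeds if the getent-style group line lists every given user.
group_has_members() {
  local line="$1"; shift
  local members=",${line##*:},"   # last field holds the comma-separated member list
  local u
  for u in "$@"; do
    case "$members" in
      *",$u,"*) ;;                 # user is a member
      *) echo "missing: $u" >&2; return 1 ;;
    esac
  done
}
```

For example, feed it each node's line from `salt -N all cmd.run 'getent group hadoop'` and call `group_has_members "$line" hadp yarn mapred`.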

Passwordless SSH login

Remove existing key material

salt -N all cmd.run 'rm -rf /home/hadp/.ssh'

salt -N all cmd.run 'rm -rf /home/yarn/.ssh'

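Generate key pairs on the NN nodes (for hadp) and the RM nodes (for yarn), and collect the public keys under a designated path on the Salt master

Run on the Salt master: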

mkdir -p /root/bdpos/base/home/hadp/.ssh

mkdir -p /root/bdpos/base/home/yarn/.ssh

salt -N nn os_util.createsshkey hadp | grep ssh | awk -F ' ' '{print $1" "$2}' > /root/bdpos/base/home/hadp/.ssh/authorized_keys

salt -N rm os_util.createsshkey yarn | grep ssh | awk -F ' ' '{print $1" "$2}' > /root/bdpos/base/home/yarn/.ssh/authorized_keys
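The `grep ssh | awk` pipeline keeps only the key type and key body of each line. Note that `-F ' '` gives awk's default whitespace splitting, so the indentation Salt adds to its output is ignored. A slightly stricter sketch that accepts only lines whose first field starts with `ssh-` and drops duplicates, assuming the custom `os_util.createsshkey` module prints each public key on its own line; the `extract_pubkeys` function is hypothetical, and ECDSA keys (`ecdsa-sha2-...`) would need the pattern widened:

```shell
# Hypothetical filter: reads Salt output on stdin, prints unique "type key" pairs
# and drops the trailing user@host comment. Only ssh-* key types are matched.
extract_pubkeys() {
  awk '$1 ~ /^ssh-/ { print $1 " " $2 }' | sort -u
}
```

Usage: `salt -N nn os_util.createsshkey hadp | extract_pubkeys > /root/bdpos/base/home/hadp/.ssh/authorized_keys`.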

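Create the key directory on each minion and set its permissions

Only the key-generating nodes (NN for hadp, RM for yarn) have a .ssh directory at this point. Every other node needs it created manually to hold the management nodes' public keys.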

salt -N all os_util.mkdir /home/yarn/.ssh yarn yarn 700

salt -N all os_util.mkdir /home/hadp/.ssh hadp hadp 700

Distribute the public keys collected on the master to every minion

salt-cp -N all /root/bdpos/base/home/yarn/.ssh/authorized_keys /home/yarn/.ssh/authorized_keys

salt-cp leaves the copied file owned by root, the same as on the master, so fix its mode and ownership:

salt -N all os_util.chmod 600 '/home/yarn/.ssh/authorized_keys'

salt -N all os_util.chown yarn yarn '/home/yarn/.ssh/authorized_keys'

salt-cp -N all /root/bdpos/base/home/hadp/.ssh/authorized_keys /home/hadp/.ssh/authorized_keys

salt -N all os_util.chmod 600 '/home/hadp/.ssh/authorized_keys'

salt -N all os_util.chown hadp hadp '/home/hadp/.ssh/authorized_keys'
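With sshd's default `StrictModes yes`, key authentication is refused when `~/.ssh` or `authorized_keys` is writable by group or others. That is why the mode and owner are fixed after `salt-cp`. A small sketch that verifies the exact modes set above on one node, assuming GNU `stat` (standard on most Linux distributions); the `check_ssh_perms` function is hypothetical:

```shell
# Hypothetical check: expects mode 700 on the .ssh directory and 600 on
# authorized_keys, matching the chmod commands above. Requires GNU stat.
check_ssh_perms() {
  local ssh_dir="$1"
  local dir_mode key_mode
  dir_mode="$(stat -c '%a' "$ssh_dir")" || return 1
  key_mode="$(stat -c '%a' "$ssh_dir/authorized_keys")" || return 1
  if [ "$dir_mode" != "700" ] || [ "$key_mode" != "600" ]; then
    echo "bad modes: $ssh_dir=$dir_mode authorized_keys=$key_mode" >&2
    return 1
  fi
}
```

Usage on a node: `check_ssh_perms /home/hadp/.ssh && check_ssh_perms /home/yarn/.ssh`.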

Preparing files and Salt state files

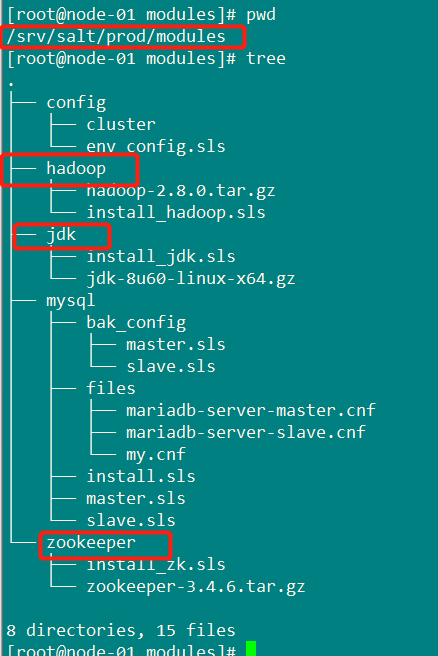

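Salt directory layout

To install all of the cluster's software in a uniform way, create a modules directory under the file_roots of Salt's prod environment (/srv/salt/prod) to manage functional modules. Each package's state files and installation archive are stored under its own module directory, laid out as shown in the figure.

State files for installing each package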

zookeeper_install.sls

Apply the state with:

salt '*' state.sls modules.zookeeper.install_zk saltenv=prod

    zk-file:
      file.managed:
        # salt:// is relative to the file_roots configured in /etc/salt/master;
        # the rest of the path is the archive inside that tree
        - source: salt://modules/zookeeper/zookeeper-3.4.6.tar.gz
        # Destination on the minion; here the archive goes to /software/servers/
        - name: /software/servers/zookeeper-3.4.6.tar.gz
        # Owner and group of the copied file
        - user: hadp
        - group: hadp
        - mode: 755

    zk-install:
      cmd.run:
        # Unpack the archive and hand the tree over to hadp
        - name: 'cd /software/servers && tar zxf zookeeper-3.4.6.tar.gz && chown -R hadp:hadp /software/servers/zookeeper-3.4.6'
        # Run "name" only if this check fails (non-zero exit), i.e. ZooKeeper is not installed yet
        - unless: test -d /software/servers/zookeeper-3.4.6
        # Run only after zk-file has succeeded
        - require:
          - file: zk-file

    # Delete the archive that zk-file copied to the minion once installation is done
    zk-rmtgz:
      file.absent:
        # Path and name of the file to delete
        - name: /software/servers/zookeeper-3.4.6.tar.gz
        # Dependency: install first, then delete
        - require:
          - cmd: zk-install

hadoop_install.sls

    hadoop-file:
      file.managed:
        - source: salt://modules/hadoop/hadoop-2.8.0.tar.gz
        - name: /software/servers/hadoop-2.8.0.tar.gz
        - user: hadp
        - group: hadp
        - mode: 755

    hadoop-install:
      cmd.run:
        - name: 'cd /software/servers && tar zxf hadoop-2.8.0.tar.gz && chown -R hadp:hadp /software/servers/hadoop-2.8.0'
        - unless: test -d /software/servers/hadoop-2.8.0
        - require:
          - file: hadoop-file

    hadoop-rmtgz:
      file.absent:
        - name: /software/servers/hadoop-2.8.0.tar.gz
        - require:
          - cmd: hadoop-install

jdk_install.sls

    jdk-file:
      file.managed:
        - source: salt://modules/jdk/jdk-8u60-linux-x64.gz
        - name: /software/servers/jdk-8u60-linux-x64.gz
        - user: hadp
        - group: hadp
        - mode: 755

    jdk-install:
      cmd.run:
        - name: 'cd /software/servers && tar zxf jdk-8u60-linux-x64.gz && chown -R hadp:hadp /software/servers/jdk1.8.0_60'
        - unless: test -d /software/servers/jdk1.8.0_60
        - require:
          - file: jdk-file

    jdk-rmtgz:
      file.absent:
        - name: /software/servers/jdk-8u60-linux-x64.gz
        - require:
          - cmd: jdk-install
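All three state files follow the same pattern: copy the archive, extract it only if the target directory is missing (`unless`), then delete the archive once that step has succeeded (`require`). For readers less familiar with Salt requisites, here is a plain-shell sketch of the same logic on a single node. It only illustrates the control flow and is not what Salt runs internally; the `install_tarball` function and its arguments are hypothetical.

```shell
# Hypothetical shell equivalent of file.managed -> cmd.run (unless) -> file.absent.
# $1 archive path, $2 directory the archive unpacks to, $3 owner as user:group
install_tarball() {
  local archive="$1" target_dir="$2" owner="$3"
  local parent
  parent="$(dirname "$target_dir")"
  if [ ! -d "$target_dir" ]; then              # same check as "unless: test -d"
    tar zxf "$archive" -C "$parent" || return 1
    chown -R "$owner" "$target_dir" || return 1
  fi
  rm -f "$archive"                             # file.absent, only after the install step
}
```

One side effect of this pattern in Salt: because the archive is deleted on every run, `file.managed` copies it to the minion again on each subsequent run, even though the `unless` check then skips extraction.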

Directory locations

hadoop-dfs

- Process IDs

1. NameNode and DataNode process IDs

salt -N zk cmd.run 'mkdir -p /data0/journal/data'

salt -N dn cmd.run 'mkdir -p /data1/yarn-logs /data1/yarn-pids && chown yarn:hadoop /data1/yarn-logs /data1/yarn-pids'
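The /data1/yarn-logs and /data1/yarn-pids directories only take effect once YARN is told to use them. In Hadoop 2.x this is usually done with the `YARN_LOG_DIR` and `YARN_PID_DIR` variables in `etc/hadoop/yarn-env.sh`, the file distributed by the first `salt-cp` command in this article. A sketch of the lines to add, assuming these two directories are meant for exactly that purpose:

```shell
# Fragment for /software/servers/hadoop-2.8.0/etc/hadoop/yarn-env.sh.
# ASSUMPTION: the directories created above are intended for YARN logs and PID files.
# yarn-daemon.sh sources yarn-env.sh and reads these variables.
export YARN_LOG_DIR=/data1/yarn-logs
export YARN_PID_DIR=/data1/yarn-pids
```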

HDFS installation and configuration

Basic environment configuration

Starting the cluster

Start the ZooKeeper ensemble (on node-02, node-03, and node-04)

salt -N zk zk_util.start_zk

Alternatively, log in to each node as hadp and start it by hand:

cd /software/servers/zookeeper-3.4.6/bin/

./zkServer.sh start

# Check the status: expect one leader and two followers

./zkServer.sh status
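On a healthy three-node ensemble, `zkServer.sh status` reports `Mode: leader` on exactly one node and `Mode: follower` on the others. A sketch that checks this from the combined output of all nodes, assuming the status is collected with something like `salt -N zk cmd.run 'su - hadp -c "/software/servers/zookeeper-3.4.6/bin/zkServer.sh status"'` (that command line is an assumption based on the install path above); the `check_zk_quorum` function is hypothetical:

```shell
# Hypothetical check: reads combined zkServer.sh status output on stdin and succeeds
# when exactly one node is leader and $1 nodes (default 2) are followers.
check_zk_quorum() {
  local want_followers="${1:-2}"
  local text leaders followers
  text="$(cat)"
  leaders="$(printf '%s\n' "$text" | grep -c 'Mode: leader' || true)"
  followers="$(printf '%s\n' "$text" | grep -c 'Mode: follower' || true)"
  [ "$leaders" -eq 1 ] && [ "$followers" -eq "$want_followers" ]
}
```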

Start the JournalNode cluster (on node-02, node-03, and node-04)

salt -N zk hadoop_util.start_jn

Alternatively, log in to each node as hadp and start it by hand:

cd /software/servers/hadoop-2.8.0

sbin/hadoop-daemon.sh start journalnode
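To confirm that daemons actually came up, the usual check is `jps` (shipped with the JDK), which lists running JVMs as `<pid> <MainClass>`. A sketch of a helper that counts a given daemon in `jps` output gathered from several nodes, assuming it is fed something like the output of `salt -N zk cmd.run 'su - hadp -c jps'`; the `count_daemon` function is hypothetical:

```shell
# Hypothetical check: counts jps lines whose class name equals $1 (stdin) and
# succeeds when the count equals $2, the expected number of nodes.
count_daemon() {
  local name="$1" expected="$2" n
  n="$(awk -v name="$name" '$2 == name { c++ } END { print c + 0 }')"
  [ "$n" -eq "$expected" ]
}
```

The same helper works for the other daemons started below, e.g. `NameNode`, `DataNode`, `DFSZKFailoverController`, `ResourceManager`, and `NodeManager`.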

Starting the HDFS cluster

- Format NN1

salt '192.168.28.11' cmd.run 'su - hadp -c "hdfs namenode -format"'

or, as hadp on node-01:

hdfs namenode -format

- Format ZKFC on the NameNode side

Formatting ZKFC creates the ZKFC parent znode in ZooKeeper.

salt '192.168.28.11' hadoop_util.formatzk

Alternatively, run hdfs zkfc -formatZK as hadp on node-01.

- Start NN1 (on node-01)

salt '192.168.28.11' hadoop_util.startnn

Alternatively, start all of HDFS at once from node-01:

sbin/start-dfs.sh

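- Start NN2 in standby mode

This performs the initial metadata sync from NN1:

salt '192.168.28.12' hadoop_util.syncnn

This is equivalent to "hadoop-daemon.sh start namenode -bootstrapStandby -force" (this approach seems to have problems; still to be investigated). Alternatively, copy the metadata directory from node-01 to node-02 manually:

scp -r /data0/nn node-02:/data0/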
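For the standby NameNode, the command Hadoop documents for copying the formatted metadata is `hdfs namenode -bootstrapStandby`, run on the standby host before its NameNode is started, rather than as an argument to `hadoop-daemon.sh`. A sketch of that sequence for node-02, run as hadp, assuming NN1 and the JournalNodes are already running and Hadoop lives in /software/servers/hadoop-2.8.0. The `DRY_RUN` switch, `run`, and `bootstrap_standby` are hypothetical additions so the steps can be previewed:

```shell
# Hypothetical wrapper: previews (DRY_RUN=1) or runs the standby bootstrap on node-02.
# ASSUMPTION: NN1 and the JournalNodes are up; HADOOP_HOME defaults to the install path.
HADOOP_HOME="${HADOOP_HOME:-/software/servers/hadoop-2.8.0}"

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

bootstrap_standby() {
  # Copy the namespace from the running NameNode without interactive prompts
  run "$HADOOP_HOME/bin/hdfs" namenode -bootstrapStandby -nonInteractive || return 1
  # Then start the NameNode normally; an HA NameNode comes up in standby state
  run "$HADOOP_HOME/sbin/hadoop-daemon.sh" start namenode
}
```

Usage: `DRY_RUN=1 bootstrap_standby` prints the two commands; without `DRY_RUN` it executes them.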

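- Start ZKFC on NN1 and NN2

ZKFC (ZKFailoverController) is the daemon that monitors the NameNode and performs automatic HA failover. Once the NameNodes are up, make sure both ZKFC processes are running.

salt 192.168.28.11 hadoop_util.startzkfc

salt 192.168.28.12 hadoop_util.startzkfc

or both at once:

salt -N nn hadoop_util.startzkfc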

- Check the NameNode state

hdfs haadmin -getServiceState nn1

Alternatively, check with jps or on the NameNode web UI.
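`hdfs haadmin -getServiceState <id>` prints `active` or `standby`, and after startup exactly one NameNode should be active. A sketch of that check, assuming the second NameNode's service ID is `nn2` (only `nn1` appears in this article; use the IDs listed in `dfs.ha.namenodes.<nameservice>` in hdfs-site.xml). The `check_ha_pair` function is hypothetical:

```shell
# Hypothetical check: succeeds when the two HA states are one "active" and one "standby".
check_ha_pair() {
  local a="$1" b="$2"
  { [ "$a" = active ] && [ "$b" = standby ]; } ||
    { [ "$a" = standby ] && [ "$b" = active ]; }
}
```

Usage: `check_ha_pair "$(hdfs haadmin -getServiceState nn1)" "$(hdfs haadmin -getServiceState nn2)"`. The same helper can check the ResourceManager pair with `yarn rmadmin -getServiceState`, assuming RM IDs `rm1` and `rm2`.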

- Start the DataNodes

salt -N dn hadoop_util.startdn

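Starting the YARN cluster

YARN and HDFS run as different users (yarn and hadp), so start-all.sh cannot start every component; start them separately.

- Start the ResourceManagers, using one of the following:

1. salt -N rm hadoop_util.startrm

2. On node-03 or node-04, switch to the yarn user, cd to /software/servers/hadoop-2.8.0/sbin and run start-yarn.sh (the NodeManagers need not be started this way), then start the RM on the other machine with yarn-daemon.sh start resourcemanager.

3. On each of the two machines, start the ResourceManager with yarn-daemon.sh start resourcemanager.

- Start the NodeManagers

salt -N dn hadoop_util.startnm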

Stopping the whole cluster

salt -N dn hadoop_util.stopdn

salt -N nn hadoop_util.stopnn

salt -N nn hadoop_util.stopzkfc

salt -N jn hadoop_util.stop_jn

salt -N dn hadoop_util.stopyarn

salt -N zk hadoop_util.stopzk
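The stop commands above can be collected into one script, run on the Salt master, so they always execute in the same order. A sketch, assuming the custom `hadoop_util` module functions behave as their names suggest; `DRY_RUN` and `stop_cluster` are hypothetical. It deliberately keeps going after a failed step so that later services are still stopped, and reports failure at the end:

```shell
# Hypothetical wrapper: runs (or with DRY_RUN=1 prints) the stop commands in the
# documented order. ASSUMPTION: hadoop_util is the article's custom Salt module.
stop_cluster() {
  local step rc=0
  for step in \
      "dn hadoop_util.stopdn" \
      "nn hadoop_util.stopnn" \
      "nn hadoop_util.stopzkfc" \
      "jn hadoop_util.stop_jn" \
      "dn hadoop_util.stopyarn" \
      "zk hadoop_util.stopzk"; do
    set -- $step                       # $1 = nodegroup, $2 = Salt function
    if [ -n "${DRY_RUN:-}" ]; then
      echo "salt -N $1 $2"
    else
      salt -N "$1" "$2" || rc=1        # keep going so later services still stop
    fi
  done
  return "$rc"
}
```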

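This article walked through deploying a Hadoop cluster automatically with Salt: the service node layout, basic server configuration (creating cluster users and setting up passwordless login), preparing files and Salt state files, and HDFS installation and configuration (basic environment configuration, starting and stopping the cluster), as a guide for automating Hadoop deployment.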
