When saving a file to HDFS from IDEA with the following test method,

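```java
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

public class HdfsPutFileTest { // class name is illustrative

    @Test
    public void putFile() throws Exception {
        // Loads core-site.xml / hdfs-site.xml from the classpath
        // (assumed to define the "mycluster" nameservice)
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(conf);
        // try-with-resources closes the stream even if write() throws
        try (FSDataOutputStream fout = fs.create(new Path("hdfs://mycluster/user/hadoop/2019-10-9/a.txt"))) {
            // Encode explicitly as UTF-8 so the Chinese text does not depend on the platform charset
            fout.write("how are you 你好吗?".getBytes(StandardCharsets.UTF_8));
        }
    }
}
```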

the following error is thrown:

```
org.apache.hadoop.security.AccessControlException: Permission denied: user=liyang, access=WRITE, inode="/user/hadoop/2019-10-9/a.txt":hadoop:supergroup:drwxr-xr-x
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:292)
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:213)
    at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)
    at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1728)
    at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1712)
    at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:1695)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2515)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2450)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2334)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:624)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:397)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2043)
```
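The first line of the exception carries every fact needed for the diagnosis: the requesting user, the access type, the path, and the owner, group, and mode of the inode that failed the check. As a small illustration, the hypothetical helper below (`PermissionDeniedParser`, not part of Hadoop) extracts those fields with a regular expression. It assumes the message format shown in this error; other Hadoop versions may word it differently.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class PermissionDeniedParser {

    // Assumed message format (as in the error above); not a stable Hadoop contract.
    private static final Pattern DENIED = Pattern.compile(
            "Permission denied: user=([^,]+), access=([^,]+), inode=\"([^\"]+)\":([^:]+):([^:]+):(\\S+)");

    /** Returns {user, access, path, owner, group, mode}, or null if the message does not match. */
    static String[] parse(String message) {
        Matcher m = DENIED.matcher(message);
        if (!m.find()) {
            return null;
        }
        return new String[] {m.group(1), m.group(2), m.group(3), m.group(4), m.group(5), m.group(6)};
    }

    public static void main(String[] args) {
        String msg = "org.apache.hadoop.security.AccessControlException: Permission denied: "
                + "user=liyang, access=WRITE, "
                + "inode=\"/user/hadoop/2019-10-9/a.txt\":hadoop:supergroup:drwxr-xr-x";
        String[] f = parse(msg);
        // prints: user=liyang access=WRITE owner=hadoop group=supergroup mode=drwxr-xr-x
        System.out.printf("user=%s access=%s owner=%s group=%s mode=%s%n", f[0], f[1], f[3], f[4], f[5]);
    }
}
```

Reading the fields side by side makes the mismatch obvious: the caller is liyang, but the inode belongs to hadoop and only its owner has the `w` bit.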

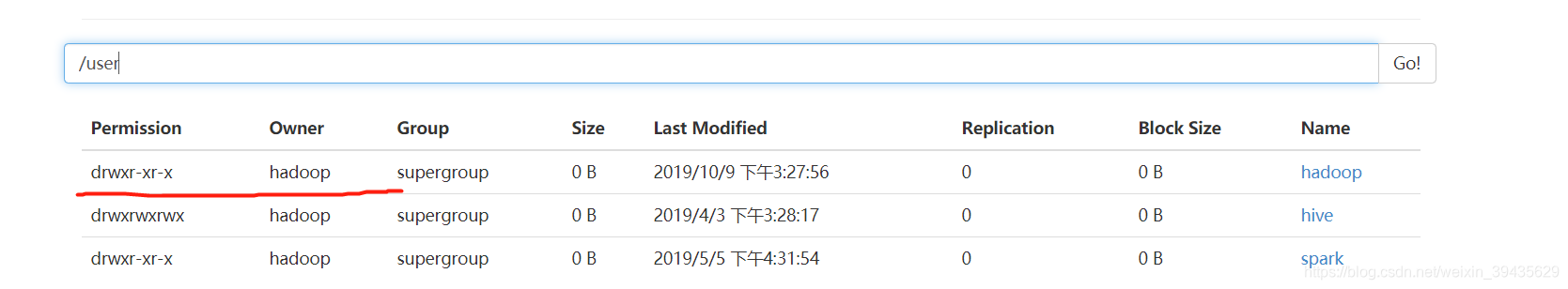

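On inspection, the cause is the permission setting on /user/hadoop: the directory is owned by user hadoop and group supergroup with mode drwxr-xr-x, so only its owner, hadoop, may write to it.

The program, however, runs under the local username liyang. With Hadoop's default simple authentication, the HDFS client identifies itself by the local OS user, so the NameNode evaluates the request as liyang, who has no write permission on that directory.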
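To see why liyang is rejected, here is a simplified, hypothetical model (`HdfsPermissionModel`, not a Hadoop class) of the POSIX-style owner/group/other check HDFS applies. It assumes liyang is not a member of supergroup, and it deliberately ignores the HDFS superuser, ACLs, and the EXECUTE checks on parent directories, so treat it as an illustration rather than the NameNode's actual code.

```java
import java.util.Set;

public class HdfsPermissionModel {

    /**
     * Simplified owner/group/other check (no superuser, no ACLs).
     * mode is a symbolic string such as "drwxr-xr-x":
     * index 0 = type, 1-3 = owner, 4-6 = group, 7-9 = other.
     */
    static boolean canWrite(String user, Set<String> userGroups,
                            String owner, String group, String mode) {
        if (user.equals(owner)) {
            return mode.charAt(2) == 'w';   // only the owner bits apply to the owner
        }
        if (userGroups.contains(group)) {
            return mode.charAt(5) == 'w';   // group write bit
        }
        return mode.charAt(8) == 'w';       // everyone else falls into "other"
    }

    public static void main(String[] args) {
        String mode = "drwxr-xr-x";
        // liyang: not the owner, assumed not in supergroup -> "other" bits (r-x)
        System.out.println(canWrite("liyang", Set.of("liyang"), "hadoop", "supergroup", mode)); // false
        // hadoop: the owner -> owner bits (rwx)
        System.out.println(canWrite("hadoop", Set.of("hadoop"), "hadoop", "supergroup", mode)); // true
    }
}
```

With the mode relaxed to drwxrwxrwx, the "other" bits include `w` and the same call returns true for liyang as well.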

Solution:

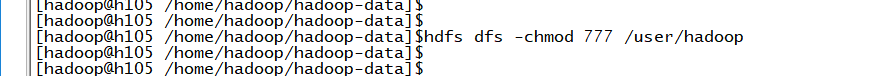

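If strict permissions are not required, simply relax the permissions of the target directory in HDFS so that liyang can write to it.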
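The error message shows the mode in symbolic form (drwxr-xr-x), while a permission change such as `hdfs dfs -chmod 777 /user/hadoop` (which must be run as the owner hadoop or an HDFS superuser) is usually written in octal. The hypothetical helper below (`HdfsModeConverter`, not a Hadoop class) converts between the two forms; it assumes only plain `r`, `w`, `x`, and `-` characters and does not model the sticky bit.

```java
public class HdfsModeConverter {

    /** "drwxr-xr-x" or "rwxr-xr-x" -> 0755; a leading type character is ignored. */
    static int toOctal(String mode) {
        String bits = mode.length() == 10 ? mode.substring(1) : mode;
        int value = 0;
        for (int i = 0; i < 9; i++) {
            value <<= 1;                    // make room for the next permission bit
            if (bits.charAt(i) != '-') {
                value |= 1;                 // r, w or x is set
            }
        }
        return value;
    }

    /** 0777 -> "rwxrwxrwx". */
    static String toSymbolic(int octal) {
        String letters = "rwxrwxrwx";
        StringBuilder sb = new StringBuilder(9);
        for (int i = 0; i < 9; i++) {
            boolean set = (octal & (1 << (8 - i))) != 0;   // bit 8 = owner r ... bit 0 = other x
            sb.append(set ? letters.charAt(i) : '-');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(Integer.toOctalString(toOctal("drwxr-xr-x"))); // 755
        System.out.println(toSymbolic(0777));                             // rwxrwxrwx
    }
}
```

The current mode is 755; changing it to 777 adds write permission for "other", the class liyang falls into. Because that opens the directory to every user, this fix only suits cases where permissions do not need to be strict.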

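This article explains a permission problem encountered when saving a file to HDFS through Hadoop from IDEA: an AccessControlException is thrown because user liyang tries to write under the /user/hadoop directory without sufficient permissions. It shows how to check and adjust HDFS permissions to resolve the issue.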
