I. Time Series

Time series are an important form of data: many statistics, and many of the patterns hidden in data, are closely tied to time.

- Example: given about 250,000 records of 911 emergency calls from 2015 to 2017, count how many calls fall into each type of emergency.

# coding=utf-8 import pandas as pd import numpy as np from matplotlib import pyplot as plt df = pd.read_csv("./911.csv") print(df.head(5)) #获取分类 # print()df["title"].str.split(": ") temp_list = df["title"].str.split(": ").tolist() cate_list = list(set([i[0] for i in temp_list])) print(cate_list) #构造全为0的数组 zeros_df = pd.DataFrame(np.zeros((df.shape[0],len(cate_list))),columns=cate_list) #赋值 for cate in cate_list: zeros_df[cate][df["title"].str.contains(cate)] = 1 # break # print(zeros_df) sum_ret = zeros_df.sum(axis=0) print(sum_ret)1、生成一段时间范围

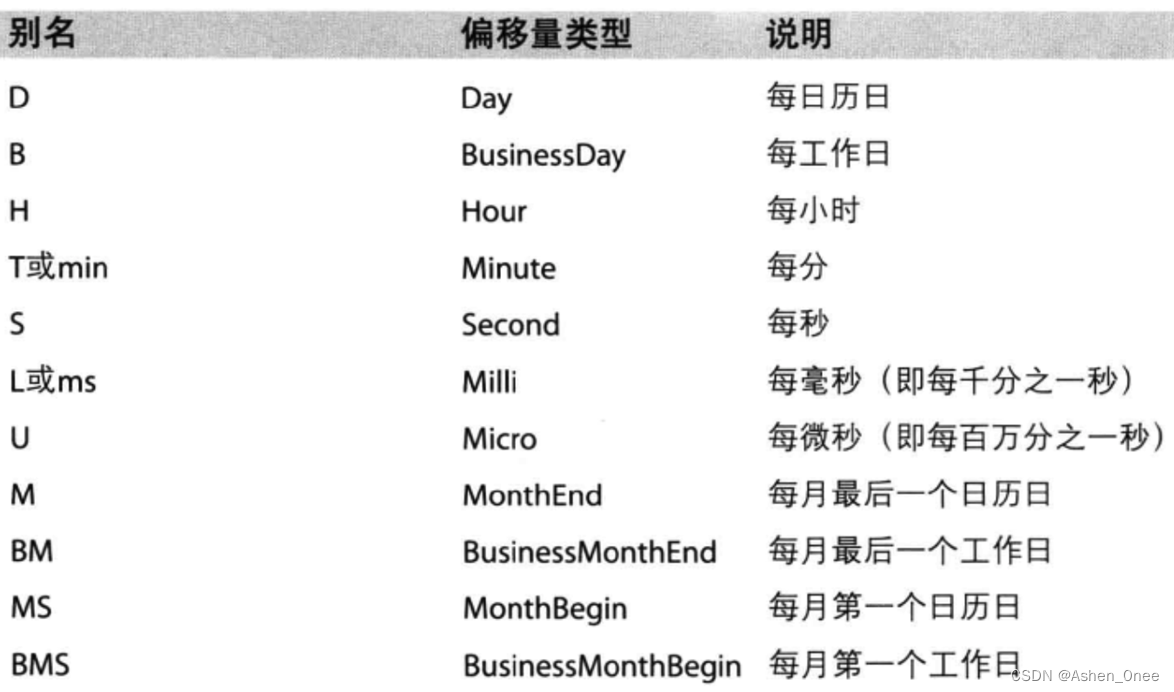

pd.date_range(start=, end=, periods=, freq='D')start 和 end 以及 freq 配合能够生成 start 和 end 范围内以频率 freq 的一组时间索引;

start 和 periods 以及 freq 配合能够生成从 start 开始的频率为 freq 的 periods 个时间索引。

[5 rows x 9 columns] cate EMS 124840 Fire 37432 Traffic 87465 Name: title, dtype: int64
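A small sketch of the two combinations just described. The dates and the 7-day step are arbitrary choices for illustration:

```python
import pandas as pd

# start + end + freq: every day from 2017-01-01 to 2017-01-05 (both ends included)
daily = pd.date_range(start="2017-01-01", end="2017-01-05", freq="D")

# start + periods + freq: 3 timestamps beginning at 2017-01-01, one week apart
weekly = pd.date_range(start="2017-01-01", periods=3, freq="7D")

print(daily)
print(weekly)
```

`end` is inclusive here because 2017-01-05 falls exactly on the daily grid. With `periods`, the end point is derived from the count instead.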

2. Using time series in a DataFrame

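```python
import numpy as np
import pandas as pd

# Ten consecutive days starting 2017-01-01 used as the row index
index = pd.date_range("20170101", periods=10)
df = pd.DataFrame(np.random.rand(10), index=index)
```

Use the `pd.to_datetime` function to convert time strings into datetime values:

```python
# In the 911 data: parse the "timeStamp" strings into datetimes
df["timeStamp"] = pd.to_datetime(df["timeStamp"])
```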
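A minimal sketch of why the conversion matters. Once the parsed column becomes the index, rows can be selected by partial date strings such as a year-month. The three rows below are hypothetical sample data shaped like the 911 file:

```python
import pandas as pd

# Hypothetical sample rows: timestamps stored as strings, as in the 911 CSV
df = pd.DataFrame({
    "timeStamp": ["2015-12-10 17:10:52", "2015-12-10 17:29:21", "2016-01-02 08:00:00"],
    "title": ["EMS: BACK PAINS/INJURY", "EMS: DIABETIC EMERGENCY", "Fire: GAS-ODOR/LEAK"],
})

# Parse the strings into datetime64 values
df["timeStamp"] = pd.to_datetime(df["timeStamp"])

# With a DatetimeIndex, "2015-12" selects every row in December 2015
df = df.set_index("timeStamp")
dec_2015 = df.loc["2015-12"]
print(dec_2015)
```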

II. Resampling with .resample

Resampling is the process of converting a time series from one frequency to another.

- Converting high-frequency data to a lower frequency is called downsampling;

- converting low-frequency data to a higher frequency is called upsampling.

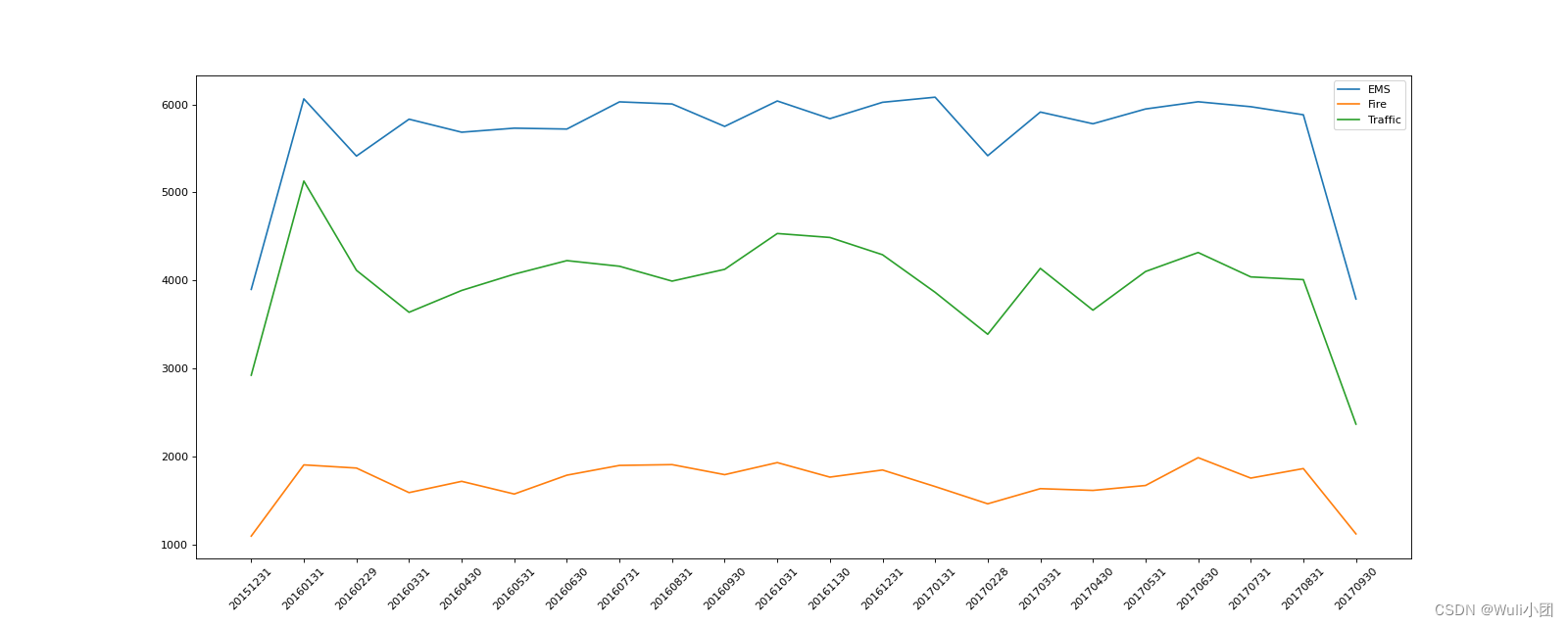
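A minimal sketch of both directions on hypothetical daily data. `resample("7D").sum()` downsamples 14 daily values into two 7-day totals. `resample("D").ffill()` then upsamples those totals back to daily rows, filling each new row with the most recent known value. The values and bin sizes are made up for illustration:

```python
import pandas as pd

# Hypothetical daily series: 14 days with values 0..13
idx = pd.date_range("2017-01-01", periods=14, freq="D")
s = pd.Series(range(14), index=idx)

# Downsampling: aggregate each 7-day bin (bins start 2017-01-01 and 2017-01-08)
weekly = s.resample("7D").sum()

# Upsampling: create a row for every day between the weekly labels and
# forward-fill, so each day carries the last weekly total seen so far
up = weekly.resample("D").ffill()

print(weekly)
print(up)
```

Downsampling always needs an aggregation (`sum`, `mean`, `count`, ...). Upsampling instead needs a fill rule (`ffill`, `bfill`, interpolation), because the new rows have no data of their own.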

- Example: show how the number of 911 calls of each type changes from month to month:

```python
# coding=utf-8
# Monthly number of calls of each type in the 911 data
import pandas as pd
from matplotlib import pyplot as plt

# Convert the time strings to datetimes and use them as the index
df = pd.read_csv("./911.csv")
df["timeStamp"] = pd.to_datetime(df["timeStamp"])

# Add a column holding the category (the part of "title" before ": ")
df["cate"] = df["title"].str.split(": ").str[0]

df.set_index("timeStamp", inplace=True)
print(df.head(1))

plt.figure(figsize=(20, 8), dpi=80)

# Group by category and draw one line per category
for group_name, group_data in df.groupby(by="cate"):
    # Downsample to calendar months and count the calls in each month
    # ("M" = month end; pandas 2.2+ prefers the alias "ME")
    count_by_month = group_data.resample("M").count()["title"]

    _x = count_by_month.index
    print(_x)
    _y = count_by_month.values
    _x = [i.strftime("%Y%m%d") for i in _x]

    plt.plot(range(len(_x)), _y, label=group_name)

plt.xticks(range(len(_x)), _x, rotation=45)
plt.legend(loc="best")
plt.show()
```

Output (the `DatetimeIndex` is printed once per category, and all three printouts are identical):

```
[1 rows x 9 columns]
DatetimeIndex(['2015-12-31', '2016-01-31', '2016-02-29', '2016-03-31',
               '2016-04-30', '2016-05-31', '2016-06-30', '2016-07-31',
               '2016-08-31', '2016-09-30', '2016-10-31', '2016-11-30',
               '2016-12-31', '2017-01-31', '2017-02-28', '2017-03-31',
               '2017-04-30', '2017-05-31', '2017-06-30', '2017-07-31',
               '2017-08-31', '2017-09-30'],
              dtype='datetime64[ns]', name='timeStamp', freq='M')
```
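As an alternative to looping over groups, the category and the month can be grouped in one step with `pd.Grouper`. This sketch swaps in a technique not used in the post. It runs on a hypothetical five-row miniature of the 911 data and uses the month-start alias `"MS"` so that no deprecated alias is involved:

```python
import pandas as pd

# Hypothetical miniature of the 911 data, already indexed by timestamp
df = pd.DataFrame({
    "timeStamp": pd.to_datetime(
        ["2015-12-10", "2015-12-11", "2016-01-05", "2016-01-20", "2016-02-01"]),
    "title": ["EMS: BACK PAINS/INJURY", "Fire: GAS-ODOR/LEAK", "EMS: FALL VICTIM",
              "EMS: RESPIRATORY EMERGENCY", "Traffic: VEHICLE ACCIDENT"],
}).set_index("timeStamp")

df["cate"] = df["title"].str.split(": ").str[0]

# Group by category and by calendar month (Grouper with no key uses the DatetimeIndex)
counts = df.groupby(["cate", pd.Grouper(freq="MS")]).size()

# One row per month, one column per category; missing combinations become 0
monthly = counts.unstack("cate", fill_value=0)
print(monthly)
```

The resulting table can be passed straight to `monthly.plot()` to draw one line per category.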

III. PeriodIndex: time periods

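Build a `PeriodIndex` from separate year, month, day and hour columns:

```python
# pandas 2.2+ deprecates these field keywords in the constructor;
# pd.PeriodIndex.from_fields(...) accepts the same keywords there
periods = pd.PeriodIndex(year=df["year"], month=df["month"], day=df["day"], hour=df["hour"], freq="H")
```

Downsample using this period index (here into 10-day bins):

```python
# numeric_only=True skips non-numeric columns, which mean() cannot average
data = df.set_index(periods).resample("10D").mean(numeric_only=True)
```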

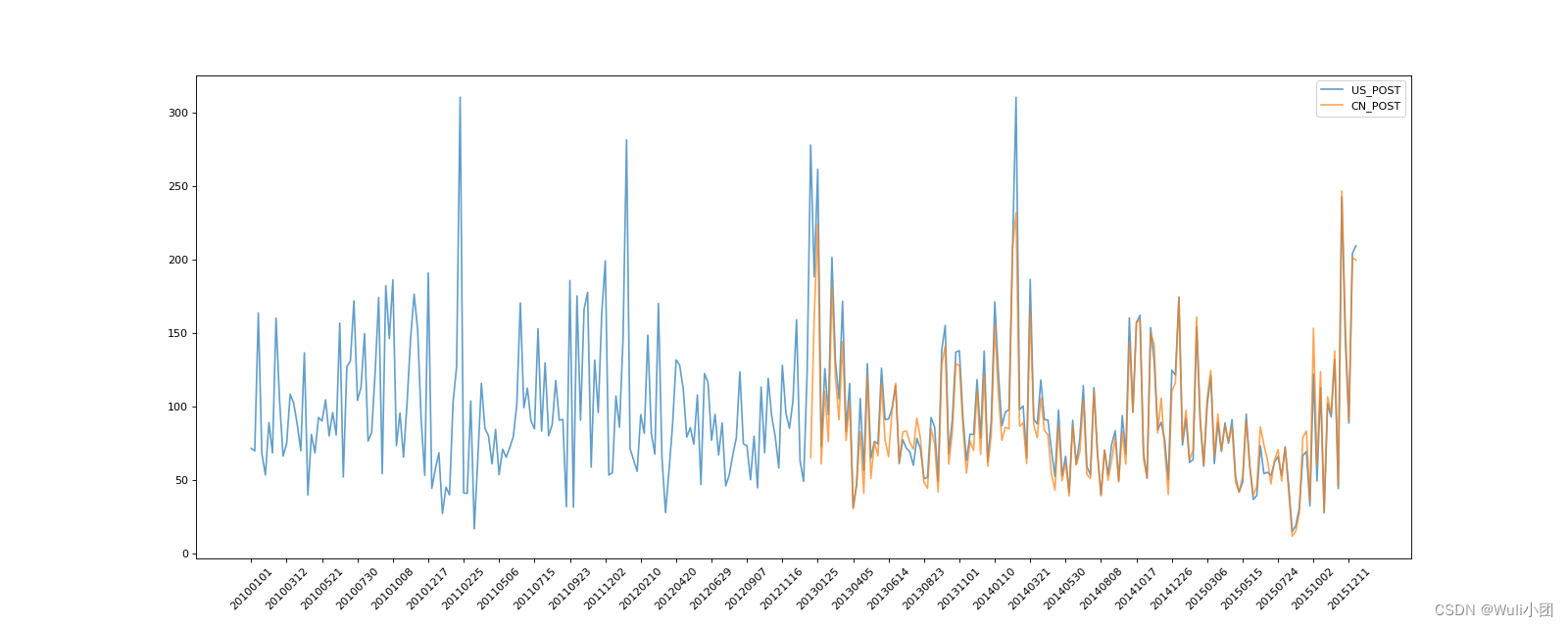
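The same year/month/day/hour columns can also be assembled with `pd.to_datetime`, which accepts a DataFrame whose columns are named `year`, `month`, `day`, `hour`. The resulting `DatetimeIndex` can be resampled directly and converted to periods with `to_period` when a `PeriodIndex` is wanted. This is a sketch of that alternative on four hypothetical hourly readings. The column name `pm25` is an assumption, not the real file's header:

```python
import pandas as pd

# Hypothetical hourly readings with the date split across columns, like the PM2.5 file
df = pd.DataFrame({
    "year": [2015] * 4,
    "month": [1] * 4,
    "day": [1, 1, 2, 2],
    "hour": [0, 12, 0, 12],
    "pm25": [100.0, 80.0, None, 40.0],
})

# Assemble timestamps from the year/month/day/hour columns
df.index = pd.to_datetime(df[["year", "month", "day", "hour"]])

# Daily means; missing readings (NaN) are skipped within each day
daily = df["pm25"].resample("D").mean()

# The same index expressed as daily periods
periods = df.index.to_period("D")
print(daily)
print(periods)
```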

Plot how PM2.5 changes over time:

```python
# coding=utf-8
import pandas as pd
from matplotlib import pyplot as plt

file_path = "./PM2.5/BeijingPM20100101_20151231.csv"
df = pd.read_csv(file_path)

# Combine the separate time columns into pandas time values with PeriodIndex
# (hourly frequency; pandas 2.2+ spells the alias "h")
period = pd.PeriodIndex(year=df["year"], month=df["month"], day=df["day"], hour=df["hour"], freq="H")
df["datetime"] = period

# Use datetime as the index
df.set_index("datetime", inplace=True)

# Downsample to 7-day means; numeric_only=True skips non-numeric columns
df = df.resample("7D").mean(numeric_only=True)
print(df.head())

# Select the two PM2.5 series. The 7-day means already ignore missing hours;
# weeks with no readings at all stay NaN and are drawn as gaps
data = df["PM_US Post"]
data_china = df["PM_Nongzhanguan"]
print(data_china.head(100))

# Plot
_x = [i.strftime("%Y%m%d") for i in data.index]
_x_china = [i.strftime("%Y%m%d") for i in data_china.index]
print(len(_x), len(_x_china))

_y = data.values
_y_china = data_china.values

plt.figure(figsize=(20, 8), dpi=80)
plt.plot(range(len(_x)), _y, label="US_POST", alpha=0.7)
plt.plot(range(len(_x_china)), _y_china, label="CN_POST", alpha=0.7)

# Label only every 10th point so the x axis stays readable
plt.xticks(range(0, len(_x_china), 10), _x_china[::10], rotation=45)
plt.legend(loc="best")
plt.show()
```

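This article shows how to work with time-series data using Python's pandas library. It reads the 911 emergency-call data, categorizes and counts the calls by timestamp, and uses the .resample method to resample the data and show how emergency calls are distributed across months. It also covers PeriodIndex and downsampling.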

