https://handong1587.github.io/deep_learning/2015/10/09/unsupervised-learning.html


1. Papers

1. Clustering

2. Auto-encoder

3. RBM (Restricted Boltzmann Machine)

1. Papers

2. Blogs

3. Projects

4. Videos

Restricted Boltzmann Machine (RBM)

Sparse Coding

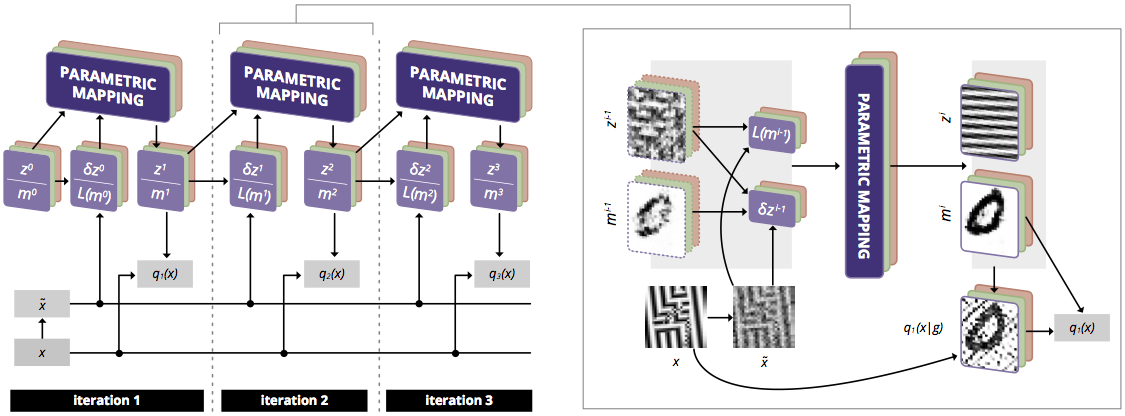

Fast Convolutional Sparse Coding in the Dual Domain

- arxiv: https://arxiv.org/abs/1709.09479

Auto-encoder

Papers

On Random Weights and Unsupervised Feature Learning

- intro: ICML 2011

- paper: http://www.robotics.stanford.edu/~ang/papers/icml11-RandomWeights.pdf

Unsupervised Learning of Spatiotemporally Coherent Metrics

Unsupervised Learning of Visual Representations using Videos

- intro: ICCV 2015

- project page: http://www.cs.cmu.edu/~xiaolonw/unsupervise.html

- arxiv: http://arxiv.org/abs/1505.00687

- paper: http://www.cs.toronto.edu/~nitish/depth_oral.pdf

- github: https://github.com/xiaolonw/caffe-video_triplet

Unsupervised Visual Representation Learning by Context Prediction

- intro: ICCV 2015

- homepage: http://graphics.cs.cmu.edu/projects/deepContext/

- arxiv: http://arxiv.org/abs/1505.05192

- github: https://github.com/cdoersch/deepcontext

Unsupervised Learning on Neural Network Outputs

- intro: “use CNN trained on the ImageNet of 1000 classes to the ImageNet of over 20000 classes”

- arxiv: http://arxiv.org/abs/1506.00990

- github: https://github.com/yaolubrain/ULNNO

Unsupervised Domain Adaptation by Backpropagation

- intro: ICML 2015

- project page: http://sites.skoltech.ru/compvision/projects/grl/

- paper: http://sites.skoltech.ru/compvision/projects/grl/files/paper.pdf

- github: https://github.com/ddtm/caffe/tree/grl

Unsupervised Learning of Visual Representations by Solving Jigsaw Puzzles

- arxiv: http://arxiv.org/abs/1603.09246

- notes: http://www.inference.vc/notes-on-unsupervised-learning-of-visual-representations-by-solving-jigsaw-puzzles/
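The Jigsaw pretext task cuts an image into a 3x3 grid of tiles, shuffles the tiles with one permutation drawn from a fixed set, and trains a network to predict which permutation was used. The sketch below covers only the data side, assuming a square image stored as a list of rows and a small hypothetical permutation set supplied by the caller; it does not reproduce the paper's own permutation selection or network.

```python
import random


def split_into_tiles(image, grid=3):
    """Cut a square 2-D image (list of rows) into grid x grid tiles, row-major."""
    size = len(image) // grid
    return [
        [row[c * size:(c + 1) * size] for row in image[r * size:(r + 1) * size]]
        for r in range(grid)
        for c in range(grid)
    ]


def make_jigsaw_example(tiles, permutations, rng):
    """Shuffle the tiles with one permutation from a fixed set.

    The index of the chosen permutation is the classification target.
    """
    label = rng.randrange(len(permutations))
    shuffled = [tiles[i] for i in permutations[label]]
    return shuffled, label


def restore_order(shuffled, perm):
    """Invert the shuffle: position k of `shuffled` holds tile perm[k]."""
    restored = [None] * len(perm)
    for pos, src in enumerate(perm):
        restored[src] = shuffled[pos]
    return restored
```

Predicting a permutation index rather than raw tile positions turns the task into ordinary classification over the size of the permutation set.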

Tagger: Deep Unsupervised Perceptual Grouping

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1606.06724

- github: https://github.com/CuriousAI/tagger

Regularization for Unsupervised Deep Neural Nets

Sparse coding: A simple exploration

- blog: https://blog.metaflow.fr/sparse-coding-a-simple-exploration-152a3c900a7c#.o7g2jk9zi

- github: https://github.com/metaflow-ai/blog/tree/master/sparse-coding
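Sparse coding represents a signal x as D z with few non-zero coefficients, typically by minimising 0.5 * ||x - D z||^2 + lam * ||z||_1 for a dictionary D. A minimal sketch of one standard solver, ISTA (iterative soft-thresholding), follows; it is not taken from the linked blog or papers, it assumes the dictionary D is fixed and stored as a list of rows, and all function names are illustrative.

```python
import math


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1: shrink every entry toward zero by t."""
    return [math.copysign(max(abs(x) - t, 0.0), x) for x in v]


def mat_vec(D, z):
    """D z, with D stored as a list of rows (n_features x n_atoms)."""
    return [sum(d * zj for d, zj in zip(row, z)) for row in D]


def mat_t_vec(D, r):
    """D^T r."""
    return [sum(D[i][j] * r[i] for i in range(len(D))) for j in range(len(D[0]))]


def ista(x, D, lam, step, n_iter=200):
    """Approximately minimise 0.5 * ||x - D z||^2 + lam * ||z||_1.

    For convergence, `step` should be at most 1 / L, where L is the largest
    eigenvalue of D^T D.
    """
    z = [0.0] * len(D[0])
    for _ in range(n_iter):
        residual = [dz - xi for dz, xi in zip(mat_vec(D, z), x)]  # D z - x
        grad = mat_t_vec(D, residual)  # gradient of the quadratic term
        z = soft_threshold([zj - step * gj for zj, gj in zip(z, grad)], step * lam)
    return z
```

For example, `ista([3.0, 1.0], [[1.0, 0.0], [0.0, 2.0]], lam=1.0, step=0.25)` converges to approximately `[2.0, 0.25]`. Learning D itself would alternate this coding step with a dictionary update.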

Navigating the unsupervised learning landscape

Unsupervised Learning using Adversarial Networks

- intro: Facebook AI Research

- youtube: https://www.youtube.com/watch?v=lalg1CuNB30

Split-Brain Autoencoders: Unsupervised Learning by Cross-Channel Prediction

- intro: UC Berkeley

- project page: https://richzhang.github.io/splitbrainauto/

- arxiv: https://arxiv.org/abs/1611.09842

- github: https://github.com/richzhang/splitbrainauto

Learning Features by Watching Objects Move

- intro: CVPR 2017. Facebook AI Research & UC Berkeley

- arxiv: https://arxiv.org/abs/1612.06370

- github(Caffe+Torch): https://github.com/pathak22/unsupervised-video

CNN features are also great at unsupervised classification

- intro: Arts et Métiers ParisTech

- arxiv: https://arxiv.org/abs/1707.01700

Supervised Convolutional Sparse Coding

- arxiv: https://arxiv.org/abs/1804.02678

Clustering

Deep clustering: Discriminative embeddings for segmentation and separation

- arxiv: https://arxiv.org/abs/1508.04306

- github(Keras): https://github.com/jcsilva/deep-clustering

Neural network-based clustering using pairwise constraints

- intro: ICLR 2016

- arxiv: https://arxiv.org/abs/1511.06321

Unsupervised Deep Embedding for Clustering Analysis

- intro: ICML 2016. Deep Embedded Clustering (DEC)

- arxiv: https://arxiv.org/abs/1511.06335

- github: https://github.com/piiswrong/dec
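DEC fine-tunes an encoder by matching soft cluster assignments q_ij (a Student's t kernel between embedding z_i and centroid mu_j) to a sharpened target p_ij, minimising KL(P || Q). A minimal sketch of these three quantities follows, with alpha = 1 as the default degrees of freedom; the encoder, the centroid initialisation and the gradient updates are left out, and the function names are illustrative rather than those of the linked repository.

```python
import math


def soft_assign(Z, centroids, alpha=1.0):
    """q_ij: Student's t similarity between embedding z_i and centroid mu_j."""
    Q = []
    for z in Z:
        sims = []
        for mu in centroids:
            d2 = sum((a - b) ** 2 for a, b in zip(z, mu))
            sims.append((1.0 + d2 / alpha) ** (-(alpha + 1.0) / 2.0))
        total = sum(sims)
        Q.append([s / total for s in sims])
    return Q


def target_distribution(Q):
    """p_ij: square q_ij, divide by soft cluster frequency f_j, renormalise per row."""
    n_clusters = len(Q[0])
    f = [sum(q[j] for q in Q) for j in range(n_clusters)]
    P = []
    for q in Q:
        w = [q[j] ** 2 / f[j] for j in range(n_clusters)]
        total = sum(w)
        P.append([x / total for x in w])
    return P


def kl_divergence(P, Q):
    """KL(P || Q), the clustering loss minimised w.r.t. embeddings and centroids."""
    return sum(
        p * math.log(p / q)
        for p_row, q_row in zip(P, Q)
        for p, q in zip(p_row, q_row)
        if p > 0
    )
```

Squaring q_ij emphasises confident assignments, while dividing by f_j keeps large clusters from absorbing every point.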

Joint Unsupervised Learning of Deep Representations and Image Clusters

- intro: CVPR 2016

- arxiv: https://arxiv.org/abs/1604.03628

- github(Torch): https://github.com/jwyang/joint-unsupervised-learning

Single-Channel Multi-Speaker Separation using Deep Clustering

Towards K-means-friendly Spaces: Simultaneous Deep Learning and Clustering

Deep Unsupervised Clustering with Gaussian Mixture Variational

Variational Deep Embedding: A Generative Approach to Clustering

A new look at clustering through the lens of deep convolutional neural networks

- intro: University of Central Florida & Purdue University

- arxiv: https://arxiv.org/abs/1706.05048

Deep Subspace Clustering Networks

- intro: NIPS 2017

- arxiv: https://arxiv.org/abs/1709.02508

SpectralNet: Spectral Clustering using Deep Neural Networks

Clustering with Deep Learning: Taxonomy and New Methods

- intro: Technical University of Munich

- arxiv: https://arxiv.org/abs/1801.07648

- github: https://github.com/elieJalbout/Clustering-with-Deep-learning

Deep Continuous Clustering

Learning to Cluster

- openreview: https://openreview.net/forum?id=HkWTqLsIz

- github: https://github.com/kutoga/learning2cluster

Learning Neural Models for End-to-End Clustering

- intro: ANNPR

- arxiv: https://arxiv.org/abs/1807.04001

Deep Clustering for Unsupervised Learning of Visual Features

- intro: ECCV 2018

- arxiv: https://arxiv.org/abs/1807.05520
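DeepCluster alternates between clustering the network's features with k-means and training the network to predict the resulting cluster indices as pseudo-labels. A minimal sketch of the clustering half follows, assuming features are already extracted as plain lists and initial centroids are supplied by the caller; the CNN training step is omitted. `uniform_cluster_weights` is a hypothetical helper illustrating sampling uniformly over pseudo-classes so that large clusters do not dominate training.

```python
def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, init_centroids, n_iter=20):
    """Plain Lloyd's k-means; returns (labels, centroids)."""
    centroids = [list(c) for c in init_centroids]
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: nearest centroid for every feature vector.
        labels = [
            min(range(len(centroids)), key=lambda k: sq_dist(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:  # an empty cluster simply keeps its old centroid here
                centroids[k] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


def uniform_cluster_weights(labels):
    """Per-sample weights so that every non-empty cluster gets equal total weight."""
    counts = {}
    for lab in labels:
        counts[lab] = counts.get(lab, 0) + 1
    return [1.0 / (len(counts) * counts[lab]) for lab in labels]
```

The labels returned by `kmeans` would serve as classification targets for the next training epoch, after which features are re-extracted and clustered again.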

Improving Image Clustering With Multiple Pretrained CNN Feature Extractors

- intro: Poster presentation at BMVC 2018

- arxiv: https://arxiv.org/abs/1807.07760

Deep clustering: On the link between discriminative models and K-means

- arxiv: https://arxiv.org/abs/1810.04246

Deep Density-based Image Clustering

- arxiv: https://arxiv.org/abs/1812.04287

Deep Representation Learning Characterized by Inter-class Separation for Image Clustering

- intro: WACV 2019

- arxiv: https://arxiv.org/abs/1901.06474

Auto-encoder

Auto-Encoding Variational Bayes

The Potential Energy of an Autoencoder

- intro: PAMI 2014

- paper: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.698.4921&rep=rep1&type=pdf

Importance Weighted Autoencoders

Review of Auto-Encoders

- intro: Piotr Mirowski, Microsoft Bing London, 2014

- slides: https://piotrmirowski.files.wordpress.com/2014/03/piotrmirowski_2014_reviewautoencoders.pdf

- github: https://github.com/piotrmirowski/Tutorial_AutoEncoders/

Stacked What-Where Auto-encoders

Ladder Variational Autoencoders

How to Train Deep Variational Autoencoders and Probabilistic Ladder Networks

Rank Ordered Autoencoders

- arxiv: http://arxiv.org/abs/1605.01749

- github: https://github.com/paulbertens/rank-ordered-autoencoder

Decoding Stacked Denoising Autoencoders

Keras autoencoders (convolutional/fcc)

Building Autoencoders in Keras

Review of auto-encoders

- intro: Tutorial code for Auto-Encoders, implementing Marc’Aurelio Ranzato’s Sparse Encoding Symmetric Machine and testing it on the MNIST handwritten digits data.

- paper: https://github.com/piotrmirowski/Tutorial_AutoEncoders/blob/master/PiotrMirowski_2014_ReviewAutoEncoders.pdf

- github: https://github.com/piotrmirowski/Tutorial_AutoEncoders

Autoencoders: Torch implementations of various types of autoencoders

- intro: AE / SparseAE / DeepAE / ConvAE / UpconvAE / DenoisingAE / VAE / AdvAE

- github: https://github.com/Kaixhin/Autoencoders

Tutorial on Variational Autoencoders

Variational Autoencoders Explained

- blog: http://kvfrans.com/variational-autoencoders-explained/

- github: https://github.com/kvfrans/variational-autoencoder
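Most VAE entries in this list build on two pieces from Auto-Encoding Variational Bayes: the reparameterisation z = mu + sigma * eps with eps ~ N(0, I), and the closed-form KL divergence between a diagonal Gaussian posterior and a standard normal prior. A minimal sketch follows, assuming the encoder outputs a mean and a log-variance per latent dimension and that the reconstruction negative log-likelihood is computed elsewhere; the optional `beta` weight corresponds to the beta-VAE objective listed further down.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """z = mu + sigma * eps with eps ~ N(0, 1); sigma = exp(0.5 * log_var)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(sigma^2)) || N(0, I)) = -0.5 * sum(1 + log_var - mu^2 - sigma^2)."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def negative_elbo(recon_nll, mu, log_var, beta=1.0):
    """Loss to minimise; beta = 1 is the standard VAE, beta > 1 the beta-VAE weighting."""
    return recon_nll + beta * kl_to_standard_normal(mu, log_var)
```

Sampling through `reparameterize` keeps z a differentiable function of mu and log_var, which is what lets the encoder be trained by backpropagation in an autodiff framework.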

Introducing Variational Autoencoders (in Prose and Code)

- blog: http://blog.fastforwardlabs.com/post/148842796218/introducing-variational-autoencoders-in-prose-and

Under the Hood of the Variational Autoencoder (in Prose and Code)

- blog: http://blog.fastforwardlabs.com/post/149329060653/under-the-hood-of-the-variational-autoencoder-in

The Unreasonable Confusion of Variational Autoencoders

Variational Autoencoder for Deep Learning of Images, Labels and Captions

- intro: NIPS 2016. Duke University & Nokia Bell Labs

- paper: http://people.ee.duke.edu/~lcarin/Yunchen_nips_2016.pdf

Convolutional variational autoencoder with PyMC3 and Keras

PixelVAE: A Latent Variable Model for Natural Images

beta-VAE: Learning Basic Visual Concepts with a Constrained Variational Framework

Variational Lossy Autoencoder

Convolutional Autoencoders

Convolutional Autoencoders in Tensorflow

A Deep Convolutional Auto-Encoder with Pooling - Unpooling Layers in Caffe

Deep Matching Autoencoders

- intro: University of Edinburgh & RIKEN AIP

- keywords: Deep Matching Autoencoders (DMAE)

- arxiv: https://arxiv.org/abs/1711.06047

Understanding Autoencoders with Information Theoretic Concepts

- intro: University of Florida

- arxiv: https://arxiv.org/abs/1804.00057

Hyperspherical Variational Auto-Encoders

- intro: University of Amsterdam

- project page: https://nicola-decao.github.io/s-vae/

- arxiv: https://arxiv.org/abs/1804.00891

- github: https://github.com/nicola-decao/s-vae

Spatial Frequency Loss for Learning Convolutional Autoencoders

- arxiv: https://arxiv.org/abs/1806.02336

DAQN: Deep Auto-encoder and Q-Network

- arxiv: https://arxiv.org/abs/1806.00630

Understanding and Improving Interpolation in Autoencoders via an Adversarial Regularizer

- intro: Google Brain

- arxiv: https://arxiv.org/abs/1807.07543

- github: https://github.com/brain-research/acai

RBM (Restricted Boltzmann Machine)

Papers

Deep Boltzmann Machines

- author: Ruslan Salakhutdinov, Geoffrey Hinton

- paper: http://www.cs.toronto.edu/~hinton/absps/dbm.pdf

On the Equivalence of Restricted Boltzmann Machines and Tensor Network States

Matrix Product Operator Restricted Boltzmann Machines

- arxiv: https://arxiv.org/abs/1811.04608

Blogs

A Tutorial on Restricted Boltzmann Machines

- blog: http://xiangjiang.live/2016/02/12/a-tutorial-on-restricted-boltzmann-machines/

Dreaming of names with RBMs

- blog: http://colinmorris.github.io/blog/dreaming-rbms

- github: https://github.com/colinmorris/char-rbm

On Cheap Learning: Partition Functions and RBMs

- blog: https://charlesmartin14.wordpress.com/2016/09/10/on-cheap-learning-partition-functions-and-rbms/
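For a binary RBM with energy E(v, h) = -a.v - b.h - v^T W h, the partition function Z sums exp(-E) over every joint configuration, which is why it becomes intractable beyond toy sizes. The sketch below computes Z by brute force and checks it against the free energy F(v), in which the hidden units are summed out analytically; it is only practical for a handful of units and does not reproduce the approximations discussed in the blog posts.

```python
import itertools
import math


def energy(v, h, W, a, b):
    """E(v, h) = -a.v - b.h - v^T W h for binary vectors v, h."""
    e = -sum(ai * vi for ai, vi in zip(a, v)) - sum(bj * hj for bj, hj in zip(b, h))
    e -= sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return e


def partition_function(W, a, b):
    """Z = sum over all (v, h) of exp(-E(v, h)); cost grows as 2^(n_v + n_h)."""
    return sum(
        math.exp(-energy(v, h, W, a, b))
        for v in itertools.product((0, 1), repeat=len(a))
        for h in itertools.product((0, 1), repeat=len(b))
    )


def free_energy(v, W, a, b):
    """F(v) = -a.v - sum_j log(1 + exp(b_j + sum_i v_i W_ij))."""
    f = -sum(ai * vi for ai, vi in zip(a, v))
    for j in range(len(b)):
        x = b[j] + sum(v[i] * W[i][j] for i in range(len(v)))
        f -= math.log1p(math.exp(x))
    return f
```

Because sum over v of exp(-F(v)) equals Z, the free energy reduces the enumeration to 2^n_v terms, but that is still exponential in the number of visible units.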

Improving RBMs with physical chemistry

- blog: https://charlesmartin14.wordpress.com/2016/10/21/improving-rbms-with-physical-chemistry/

- github: https://github.com/charlesmartin14/emf-rbm/blob/master/EMF_RBM_Test.ipynb

Projects

Restricted Boltzmann Machine (Haskell)

- intro: “This is an implementation of two machine learning algorithms, Contrastive Divergence and Back-propagation.”

- github: https://github.com/aeyakovenko/rbm
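Contrastive Divergence approximates the RBM log-likelihood gradient with a single Gibbs step: it compares the statistic v * p(h|v) on the data with the same statistic on a reconstruction. A minimal CD-1 sketch in Python (not the Haskell repository's code) follows, assuming sigmoid units, a single binary training vector, probabilities rather than samples for the reconstruction (a common choice), and a plain learning rate with no momentum or weight decay.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample_bernoulli(probs, rng):
    return [1.0 if rng.random() < p else 0.0 for p in probs]


def hidden_probs(v, W, b):
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v)))) for j in range(len(b))]


def visible_probs(h, W, a):
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h)))) for i in range(len(a))]


def cd1_update(v0, W, a, b, lr, rng):
    """One CD-1 step on a binary visible vector v0; updates W, a, b in place."""
    ph0 = hidden_probs(v0, W, b)        # positive phase
    h0 = sample_bernoulli(ph0, rng)     # sampled hidden states
    pv1 = visible_probs(h0, W, a)       # reconstruction (probabilities)
    ph1 = hidden_probs(pv1, W, b)       # negative phase
    for i in range(len(a)):
        for j in range(len(b)):
            W[i][j] += lr * (v0[i] * ph0[j] - pv1[i] * ph1[j])
        a[i] += lr * (v0[i] - pv1[i])
    for j in range(len(b)):
        b[j] += lr * (ph0[j] - ph1[j])


def reconstruction_error(v, W, a, b):
    """Squared error of a deterministic (mean-field) reconstruction of v."""
    v_rec = visible_probs(hidden_probs(v, W, b), W, a)
    return sum((vi - ri) ** 2 for vi, ri in zip(v, v_rec))
```

Calling `cd1_update` repeatedly on one pattern should drive `reconstruction_error` toward zero; a practical trainer would loop over mini-batches and monitor a held-out set.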

tensorflow-rbm: TensorFlow implementation of Restricted Boltzmann Machine

- intro: TensorFlow implementation of a Restricted Boltzmann Machine for layer-wise pretraining of deep autoencoders.

- github: https://github.com/meownoid/tensorfow-rbm

Videos

Modelling a text corpus using Deep Boltzmann Machines

Foundations of Unsupervised Deep Learning

- intro: Ruslan Salakhutdinov [CMU]

- youtube: https://www.youtube.com/watch?v=rK6bchqeaN8

- mirror: https://pan.baidu.com/s/1mi4nCow

- slides: http://www.cs.cmu.edu/~rsalakhu/talk_unsup.pdf
