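1. BN (Batch Normalization) layer

Advantages: it allows a much larger learning rate, speeds up convergence during training, improves the network's generalization ability, and reduces overfitting.

It is placed before the activation layer. The computation is as follows: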

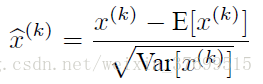

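a. Normalization

Because training uses mini-batch stochastic gradient descent, the mean in the formula above is the average of each neuron's input values over one batch of training data, and the standard deviation is likewise the standard deviation of each neuron's input values over that same batch.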

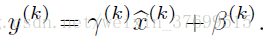

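b. Transformation and reconstruction:

Here the scale factor and the shift factor (commonly written γ and β) are learnable parameters.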
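
The two steps above (normalize, then scale and shift) can be sketched in plain Python. This is only a minimal illustration of the forward pass at training time: the function name `batch_norm_forward`, the `eps` value, and the toy batch are assumptions made for the example, and a real framework would also track running statistics for inference.

```python
import math

def batch_norm_forward(batch, gamma, beta, eps=1e-5):
    """Normalize each feature over the batch, then scale and shift.

    batch: list of samples, each a list of per-neuron input values.
    gamma, beta: per-neuron scale and shift (learnable in a real network).
    eps: small constant for numerical stability (assumed value).
    """
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for k in range(num_features):
        column = [sample[k] for sample in batch]
        mean = sum(column) / n
        # Biased variance over the batch, as used in the training-time forward pass.
        var = sum((x - mean) ** 2 for x in column) / n
        std = math.sqrt(var + eps)
        for i in range(n):
            x_hat = (column[i] - mean) / std          # step a: normalization
            out[i][k] = gamma[k] * x_hat + beta[k]    # step b: scale and shift
    return out

# Toy batch: 3 samples, 2 neurons with very different input scales.
batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
normalized = batch_norm_forward(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

With `gamma` set to 1 and `beta` set to 0, each column of `normalized` has mean close to 0 and variance close to 1, regardless of the original scale of that neuron's inputs.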

2. The idea behind boosting:

1) Train the first weak classifier (h1) on the initial training dataset;

2) Combine the samples that h1 classified poorly with new data to form the training data for a new round, and train the second weak classifier (h2);

3) Combine the samples that h1 and h2 classified poorly with new data to form the training data for a new round, and train the third weak classifier (h3);

4) Repeat Steps 2-3 until enough weak classifiers have been obtained;

5) Form a strong classifier through the weighted voting of all weak classifiers.
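
As a rough illustration of these steps, here is a small AdaBoost sketch. Note that AdaBoost is one specific boosting algorithm, swapped in here for concreteness: instead of literally mixing poorly classified samples with new data, it raises the weights of misclassified samples so the next weak classifier focuses on them. The helper names (`train_stump`, `adaboost`, `predict`) and the toy 1-D dataset are assumptions for the example.

```python
import math

def stump_predict(stump, x):
    """A stump predicts `polarity` if x > threshold, otherwise -polarity."""
    threshold, polarity, _ = stump
    return polarity if x > threshold else -polarity

def train_stump(xs, ys, weights):
    """Return the (threshold, polarity, weighted_error) stump with the lowest error."""
    best = None
    candidates = sorted(set(xs))
    thresholds = [candidates[0] - 1.0] + candidates
    for threshold in thresholds:
        for polarity in (1, -1):
            error = sum(
                w for x, y, w in zip(xs, ys, weights)
                if (polarity if x > threshold else -polarity) != y
            )
            if best is None or error < best[2]:
                best = (threshold, polarity, error)
    return best

def adaboost(xs, ys, rounds=3):
    n = len(xs)
    weights = [1.0 / n] * n              # step 1: start from uniform weights
    ensemble = []
    for _ in range(rounds):              # steps 2-4: train weak classifiers in turn
        stump = train_stump(xs, ys, weights)
        error = max(stump[2], 1e-10)     # avoid division by zero for a perfect stump
        if error >= 0.5:                 # no better than chance: stop early
            break
        alpha = 0.5 * math.log((1.0 - error) / error)
        ensemble.append((alpha, stump))
        # Misclassified samples (y * prediction == -1) get larger weights.
        weights = [
            w * math.exp(-alpha * y * stump_predict(stump, x))
            for x, y, w in zip(xs, ys, weights)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Step 5: weighted vote of all weak classifiers."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy data that no single threshold can separate.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost(xs, ys, rounds=3)
predictions = [predict(ensemble, x) for x in xs]
```

On this toy data the best single stump still misclassifies three of the ten points, while the weighted vote of three stumps reproduces every label.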

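3. The idea behind bagging: draw a number of samples from the existing data with replacement and use them to build a classifier; repeat this several times to build several classifiers, then let them vote on the final prediction.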
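
The procedure can be sketched as follows. This is a minimal illustration, not a reference implementation: the 1-nearest-neighbor base classifier, the function names, the number of models, and the toy dataset are all assumptions chosen to keep the example short.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]

def train_nearest_neighbor(sample):
    """Hypothetical base classifier: 1-nearest-neighbor over (x, label) pairs."""
    def classify(x):
        nearest = min(sample, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return classify

def bagging_fit(data, n_models=25, seed=0):
    """Train one classifier per bootstrap sample."""
    rng = random.Random(seed)
    return [
        train_nearest_neighbor(bootstrap_sample(data, rng))
        for _ in range(n_models)
    ]

def bagging_predict(models, x):
    """Majority vote over all classifiers."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Toy data: two well-separated 1-D clusters.
data = [(float(x), "low") for x in range(10)]
data += [(float(x), "high") for x in range(100, 110)]
models = bagging_fit(data)
print(bagging_predict(models, 3.5), bagging_predict(models, 104.2))
```

Unlike boosting, each classifier here is trained independently of the others and every vote counts equally.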
