1. Linear Regression with One Variable

Linear regression is a supervised learning algorithm, because each example in the data set comes with the right answer.

And because we are predicting a real-valued output, it is a regression problem.

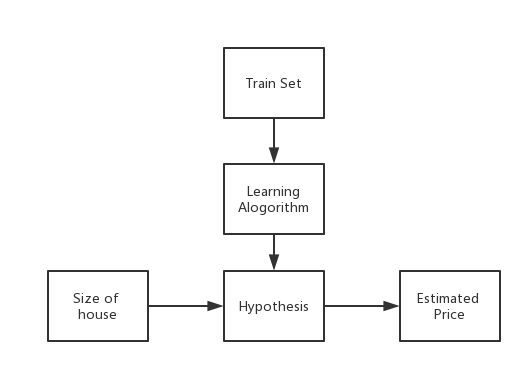

Block Diagram:
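The usual version of this diagram is: training set → learning algorithm → hypothesis h, and h then takes an input x and outputs an estimated y. A minimal Python sketch of the hypothesis side, assuming the one-variable form h(x) = Θ0 + Θ1·x; the function name `make_hypothesis` and the parameter values are hypothetical, for illustration only:

```python
from typing import Callable


def make_hypothesis(theta0: float, theta1: float) -> Callable[[float], float]:
    """Return h(x) = theta0 + theta1 * x, the one-variable linear hypothesis.

    In the block diagram, the learning algorithm's job is to choose theta0 and
    theta1; the returned function h then maps an input x to a predicted y.
    """
    def h(x: float) -> float:
        return theta0 + theta1 * x

    return h


# Hypothetical parameter values, standing in for a learning algorithm's output.
h = make_hypothesis(theta0=50.0, theta1=0.2)
print(h(1000.0))  # predicted y for the input x = 1000.0
```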

2. Cost Function

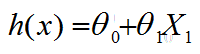

Idea: choose Θ0 and Θ1 so that h(x) is close to y for the training examples (x, y).

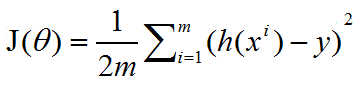

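cost function: the squared error function J(Θ0, Θ1), which measures how far the predictions h(x) are from the targets y over the training set.

(it is a bow-shaped function)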
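Written out for m training examples (x^(i), y^(i)), the squared error cost is commonly given as below. The 1/(2m) scaling is the convention assumed here; the extra 1/2 only simplifies the derivative and does not move the minimum.

```latex
\begin{aligned}
h_\theta(x) &= \theta_0 + \theta_1 x \\
J(\theta_0, \theta_1) &= \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right)^2 \\
\text{goal:} \quad & \min_{\theta_0,\, \theta_1} J(\theta_0, \theta_1)
\end{aligned}
```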

So fitting the model becomes a mathematical problem: minimize the cost function (the squared error function) with respect to Θ0 and Θ1.
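A direct translation of the squared error cost into Python, as a minimal sketch assuming the 1/(2m) convention; the function name `compute_cost` and the toy data are hypothetical:

```python
from typing import Sequence


def compute_cost(xs: Sequence[float], ys: Sequence[float],
                 theta0: float, theta1: float) -> float:
    """Squared error cost J(theta0, theta1) = 1/(2m) * sum((h(x_i) - y_i)^2)."""
    m = len(xs)
    if m == 0 or m != len(ys):
        raise ValueError("xs and ys must be non-empty and the same length")
    total = 0.0
    for x, y in zip(xs, ys):
        error = (theta0 + theta1 * x) - y  # h(x) - y for one example
        total += error ** 2
    return total / (2 * m)


# Hypothetical toy data lying exactly on y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
print(compute_cost(xs, ys, 1.0, 2.0))  # perfect fit, so the cost is 0.0
print(compute_cost(xs, ys, 0.0, 0.0))  # (1 + 9 + 25 + 49) / 8 = 10.5
```

A worse choice of Θ0 and Θ1 gives a larger J, which is exactly what the minimization in the next section exploits.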

3. Gradient Descent

We use gradient descent to minimize the cost function.

Process:

1. Start with some random choice of the theta vector

2. Keep changing the theta vector to reduce J(theta) until we end up at a minimum.
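The two steps above amount to a simple loop. Here is a minimal sketch for a single parameter, assuming we can evaluate the derivative of J and treating "converged" as "the step became smaller than a tolerance". The names `gradient_descent_1d`, `tol`, `max_iters`, and the toy cost (Θ − 3)² are hypothetical, and the start is fixed at 0.0 instead of random so the result is reproducible:

```python
from typing import Callable


def gradient_descent_1d(grad: Callable[[float], float], theta: float,
                        alpha: float, tol: float = 1e-10,
                        max_iters: int = 10_000) -> float:
    """Minimize a one-parameter function, given its derivative `grad`."""
    for _ in range(max_iters):
        step = alpha * grad(theta)  # learning rate times the slope
        theta -= step               # move downhill
        if abs(step) < tol:         # treat a tiny step as convergence
            break
    return theta


# J(theta) = (theta - 3)^2 has derivative 2 * (theta - 3) and its minimum at 3.
theta_min = gradient_descent_1d(lambda t: 2 * (t - 3), theta=0.0, alpha=0.1)
print(theta_min)  # close to 3.0
```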

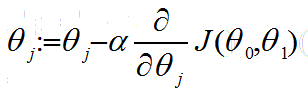

Repeat until convergence: update each parameter Θj by subtracting alpha times the partial derivative of J with respect to Θj.

(the derivative term is the slope of the cost function)
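In symbols, the rule is usually written Θj := Θj − α ∂J/∂Θj for j = 0, 1, with := meaning assignment. Spelled out with temporary variables, so that both derivatives are evaluated at the old (Θ0, Θ1), one iteration looks like this (standard notation, shown as a sketch):

```latex
\begin{aligned}
&\text{repeat until convergence:} \\
&\quad \text{temp}_0 := \theta_0 - \alpha \frac{\partial}{\partial \theta_0} J(\theta_0, \theta_1) \\
&\quad \text{temp}_1 := \theta_1 - \alpha \frac{\partial}{\partial \theta_1} J(\theta_0, \theta_1) \\
&\quad \theta_0 := \text{temp}_0, \qquad \theta_1 := \text{temp}_1
\end{aligned}
```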

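alpha is the learning rate (it controls how big each step is), and the theta vector must be updated simultaneously: compute the new values of all components from the old theta, then assign them together.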
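To see why simultaneity matters, the sketch below compares a correct simultaneous step with an incorrect sequential one on a small linear regression problem. The toy data set, `linreg_grad`, `step_simultaneous`, and `step_sequential` are hypothetical names for illustration, and the gradient assumes the 1/(2m) squared error cost:

```python
from typing import Callable, Tuple

Grad = Callable[[float, float], Tuple[float, float]]

# Hypothetical toy training set, used only to get a concrete gradient.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]


def linreg_grad(theta0: float, theta1: float) -> Tuple[float, float]:
    """Partial derivatives of J = 1/(2m) * sum((theta0 + theta1*x - y)^2)."""
    m = len(xs)
    errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
    d0 = sum(errors) / m
    d1 = sum(e * x for e, x in zip(errors, xs)) / m
    return d0, d1


def step_simultaneous(theta0: float, theta1: float, alpha: float,
                      grad: Grad) -> Tuple[float, float]:
    """Correct: both derivatives are computed from the OLD (theta0, theta1)."""
    d0, d1 = grad(theta0, theta1)
    temp0 = theta0 - alpha * d0
    temp1 = theta1 - alpha * d1
    return temp0, temp1


def step_sequential(theta0: float, theta1: float, alpha: float,
                    grad: Grad) -> Tuple[float, float]:
    """Incorrect: theta1's derivative sees the already-updated theta0."""
    d0, _ = grad(theta0, theta1)
    theta0 = theta0 - alpha * d0
    _, d1 = grad(theta0, theta1)  # evaluated at the NEW theta0
    theta1 = theta1 - alpha * d1
    return theta0, theta1


print(step_simultaneous(0.0, 0.0, 0.1, linreg_grad))
print(step_sequential(0.0, 0.0, 0.1, linreg_grad))  # theta1 differs
```

Both versions agree on Θ0, but Θ1 differs because the sequential version mixes old and new parameter values within a single step.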

1. If alpha is too small, gradient descent takes tiny steps and can be slow to converge.

2. If alpha is too large, gradient descent can overshoot the minimum; it may fail to converge, or even diverge.
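Both cases can be seen on a toy cost. The sketch below assumes J(Θ) = Θ², which is not the linear regression cost but has the same bow shape; its derivative is 2Θ, so each step multiplies Θ by (1 − 2α). With α = 0.01 that factor is 0.98 (slow progress), with α = 0.3 it is 0.4 (fast), and with α = 1.1 it is −1.2, so Θ jumps past the minimum at 0 and grows each step. The function name `run` and the three α values are arbitrary choices:

```python
from typing import List


def run(alpha: float, theta: float = 1.0, steps: int = 10) -> List[float]:
    """Gradient descent on J(theta) = theta**2, whose derivative is 2*theta.

    Returns every theta visited, starting from the initial value.
    """
    path = [theta]
    for _ in range(steps):
        theta = theta - alpha * 2 * theta  # theta := theta - alpha * dJ/dtheta
        path.append(theta)
    return path


for alpha in (0.01, 0.3, 1.1):
    path = run(alpha)
    print(f"alpha={alpha}: first steps {[round(t, 3) for t in path[:4]]}, "
          f"after 10 steps theta={path[-1]:.4f}")
```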

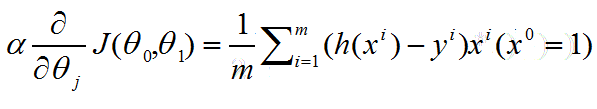

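Taking the partial derivatives of J with respect to Θ0 and Θ1 and substituting them into the update rule gives the gradient descent algorithm for linear regression.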
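Assuming the cost J(Θ0, Θ1) = 1/(2m) Σ (hθ(x^(i)) − y^(i))² with hθ(x) = Θ0 + Θ1x, the chain rule gives the derivatives below (the 2 from the square cancels the 1/2), and substituting them yields the update rules:

```latex
\begin{aligned}
\frac{\partial}{\partial \theta_0} J(\theta_0, \theta_1) &= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right) \\
\frac{\partial}{\partial \theta_1} J(\theta_0, \theta_1) &= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right) x^{(i)} \\[4pt]
\text{repeat until convergence (simultaneous update):} & \\
\theta_0 &:= \theta_0 - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right) \\
\theta_1 &:= \theta_1 - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right) x^{(i)}
\end{aligned}
```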

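Convex function: a bow-shaped function, just like the cost function J(theta). Every local minimum of a convex function is a global minimum, so gradient descent on it (with a suitable alpha) ends up at the global minimum.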

Batch gradient descent: each step of gradient descent uses all the training examples (the sums run over all m training samples).
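Putting the pieces together, a minimal batch gradient descent for one-variable linear regression might look like this. It is a sketch under stated assumptions: the 1/(2m) squared error cost, a zero starting point instead of a random one, and a fixed iteration count instead of a convergence test. The function name, the defaults `alpha=0.05` and `iters=5000`, and the toy data are hypothetical:

```python
from typing import Sequence, Tuple


def batch_gradient_descent(xs: Sequence[float], ys: Sequence[float],
                           alpha: float = 0.05, iters: int = 5000
                           ) -> Tuple[float, float]:
    """Fit h(x) = theta0 + theta1 * x by batch gradient descent on the
    squared error cost J = 1/(2m) * sum((h(x_i) - y_i)^2)."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0  # fixed start, for reproducibility
    for _ in range(iters):
        # "Batch": every step sums over ALL m training examples.
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        d0 = sum(errors) / m
        d1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update: both gradients came from the old thetas.
        theta0, theta1 = theta0 - alpha * d0, theta1 - alpha * d1
    return theta0, theta1


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # hypothetical data on y = 1 + 2x
theta0, theta1 = batch_gradient_descent(xs, ys)
print(f"theta0 = {theta0:.4f}, theta1 = {theta1:.4f}")  # close to 1 and 2
```

Because every step touches all m examples, each iteration costs O(m); that is the trade-off the word "batch" refers to.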

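This article introduces linear regression with one variable and its use as a supervised learning algorithm, and explains in detail how gradient descent minimizes the cost function to find the optimal parameters.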
