1 package big.data.analyse.sparksql 2 3 import org.apache.spark.sql.{Row, SparkSession} 4 import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType} 5 6 /** 7 * Created by zhen on 2018/11/8. 8 */ 9 object SparkInFuncation { 10 def main(args: Array[String]) { 11 val spark = SparkSession.builder().appName("spark内置函数") 12 .master("local[2]").getOrCreate() 13 val userData = Array( 14 "2015,1,www.baidu.com", 15 "2016,4,www.google.com", 16 "2017,3,www.apache.com", 17 "2015,6,www.spark.com", 18 "2016,2,www.hadoop.com", 19 "2017,8,www.solr.com", 20 "2017,4,www.hive.com" 21 ) 22 val sc = spark.sparkContext 23 val sqlContext = spark.sqlContext 24 val userDataRDD = sc.parallelize(userData) // 转化为RDD 25 val userDataType = userDataRDD.map(line => { 26 val Array(age, id, url) = line.split(",") 27 Row( 28 age, id.toInt, url 29 ) 30 }) 31 val structTypes = StructType(Array( 32 StructField("age", StringType, true), 33 StructField("id", IntegerType, true), 34 StructField("url", StringType, true) 35 )) 36 // RDD转化为DataFrame 37 val userDataFrame = sqlContext.createDataFrame(userDataType,structTypes) 38 39 import org.apache.spark.sql.functions._ 40 userDataFrame 41 .groupBy("age") // 分组 42 .agg(("id","sum")) // 求和 43 .orderBy(desc("age")) // 排序 44 .show() 45 } 46 }

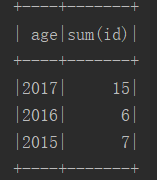

答案:

本文通过实例演示了如何使用Spark SQL进行数据加载、转换为DataFrame、应用内置函数、分组统计及排序展示结果,深入浅出地介绍了Spark SQL在大数据分析中的应用。

本文通过实例演示了如何使用Spark SQL进行数据加载、转换为DataFrame、应用内置函数、分组统计及排序展示结果,深入浅出地介绍了Spark SQL在大数据分析中的应用。

583

583

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?