As a naive attempt to practise my academic English, the rest of my notes will be written in my terrible English. XD

If this makes you uncomfortable in any way, please close this window and refer to the original lecture by Mr. Lin.

I would really appreciate your understanding.

OK, so much for this.

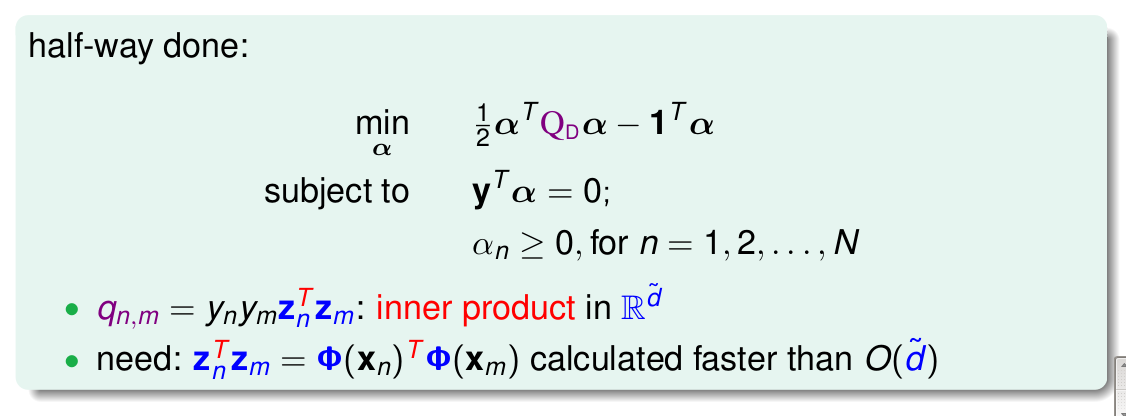

In the last class we discussed a powerful tool, the hard-margin dual SVM, which helps us better understand the meaning of SVM.

But we did not solve the problem caused by a large $\tilde{d}$.

A large $\tilde{d}$ may cause disaster when calculating $Q_D$, which is the bottleneck of our model.

Here we introduce a tool called the kernel function to improve our situation.

If some function of x and x' gives the value of the inner product $\Phi(x)^T \Phi(x')$ directly, we can skip the transform and the inner product in Z space, and compute the output straight from the inputs.

That is what we call a kernel function.

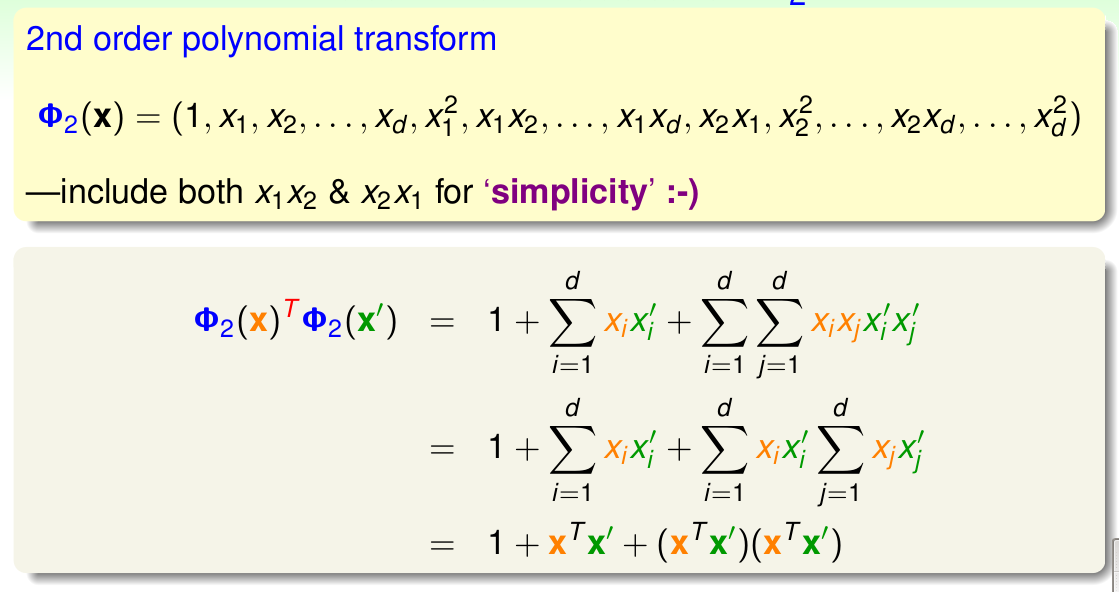

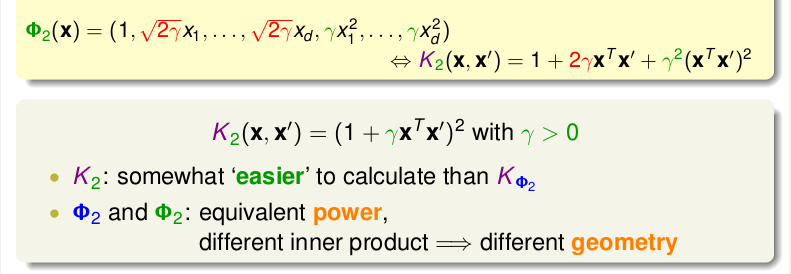

We use a 2nd-order polynomial transform to illustrate.

The idea for making the computation simpler is to handle the polynomial transform and the inner product $x^T x'$ at the same time.

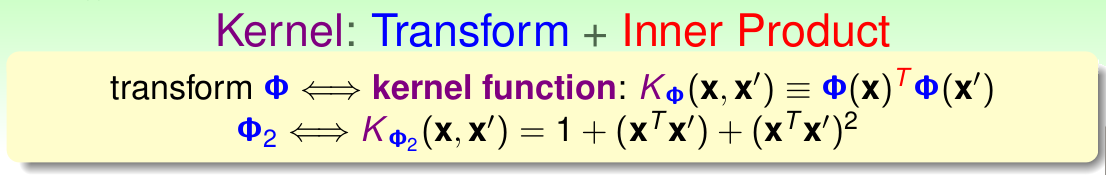
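To make the saving concrete, here is a minimal sketch in NumPy. It assumes the 2nd-order transform lists the constant 1, every $x_i$, and every product $x_i x_j$; the names `phi2` and `k_phi2` are mine, not from the lecture. It compares the inner product computed in Z space with the shortcut computed in the original space.

```python
import numpy as np

def phi2(x):
    """Explicit 2nd-order transform: (1, x_1..x_d, all products x_i * x_j)."""
    x = np.asarray(x, dtype=float)
    return np.concatenate(([1.0], x, np.outer(x, x).ravel()))

def k_phi2(x, x_prime):
    """Same inner product from the original space: 1 + x^T x' + (x^T x')^2."""
    s = float(np.dot(x, x_prime))
    return 1.0 + s + s * s

x = np.array([1.0, 2.0, 3.0])
x_prime = np.array([0.5, -1.0, 2.0])

explicit = float(phi2(x) @ phi2(x_prime))  # works on 1 + d + d^2 numbers
shortcut = k_phi2(x, x_prime)              # works on d numbers only
print(explicit, shortcut)
```

Both values agree, but the shortcut never builds the long vector, so its cost grows with d instead of d squared.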

So here we get the kernel function whose inputs are x and x': $K_{\Phi_2}(x, x') = 1 + x^T x' + (x^T x')^2$.

For the problem above, we can apply the kernel function:

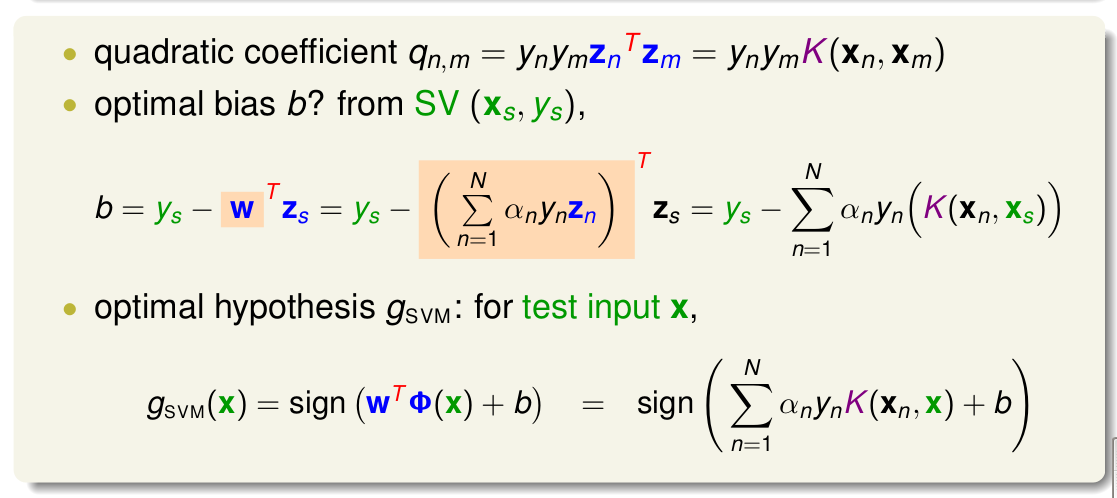

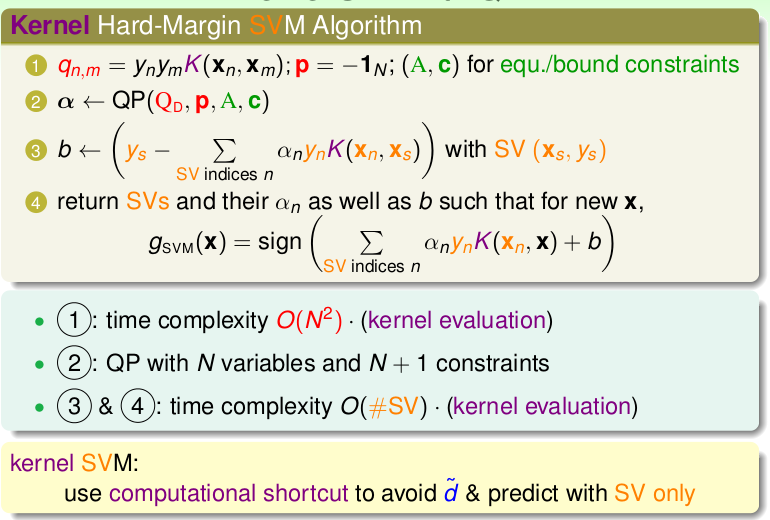

The quadratic coefficient $q_{n,m} = y_n y_m z_n^T z_m = y_n y_m K(x_n, x_m)$ gives us the matrix $Q_D$.

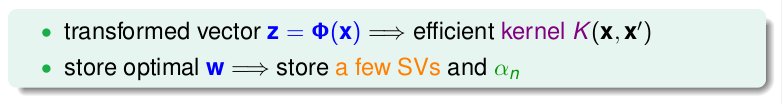

So we need not do the calculation in the Z space; we can use the kernel function to get $z_n^T z_m$ directly from $x_n$ and $x_m$.

Kernel trick: plug in an efficient kernel function to avoid dependence on $\tilde{d}$.

Let us give this method a name: Kernel SVM.
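As a rough sketch of how $Q_D$ can be filled in without ever forming a z vector, the snippet below builds it from a kernel function. The helper names (`kernel_matrix`, `dual_q_matrix`) and the three toy points are my own assumptions for illustration.

```python
import numpy as np

def k_phi2(x, x_prime):
    """2nd-order polynomial kernel: 1 + x^T x' + (x^T x')^2."""
    s = float(np.dot(x, x_prime))
    return 1.0 + s + s * s

def kernel_matrix(X, kernel):
    """K[n, m] = kernel(x_n, x_m) for every pair of rows of X."""
    N = X.shape[0]
    K = np.empty((N, N))
    for n in range(N):
        for m in range(N):
            K[n, m] = kernel(X[n], X[m])
    return K

def dual_q_matrix(X, y, kernel):
    """Q_D[n, m] = y_n * y_m * K(x_n, x_m); no z vectors are formed."""
    return np.outer(y, y) * kernel_matrix(X, kernel)

# Hypothetical toy data, only to show the shape of the computation.
X_demo = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
y_demo = np.array([1.0, 1.0, -1.0])
Q_D = dual_q_matrix(X_demo, y_demo, k_phi2)
print(Q_D)
```

The double loop is kept for readability; each entry costs one kernel evaluation in the original space.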
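Once the QP has returned the dual variables, b and the prediction can both be written with kernel evaluations only. The sketch below assumes the alphas are already known: for this tiny two-point example with a linear kernel I worked out alpha = (0.5, 0.5) by hand, and the function names are hypothetical, not from the lecture. It does not solve the QP itself.

```python
import numpy as np

def linear_kernel(x, x_prime):
    return float(np.dot(x, x_prime))

def kernel_svm_bias(X, y, alpha, kernel, tol=1e-8):
    """b = y_s - sum_n alpha_n y_n K(x_n, x_s) for a support vector s (alpha_s > 0)."""
    s = int(np.argmax(alpha > tol))  # index of the first support vector
    return y[s] - sum(alpha[n] * y[n] * kernel(X[n], X[s]) for n in range(len(y)))

def kernel_svm_predict(x, X, y, alpha, b, kernel):
    """g(x) = sign(sum_n alpha_n y_n K(x_n, x) + b); w is never built explicitly."""
    score = sum(alpha[n] * y[n] * kernel(X[n], x) for n in range(len(y))) + b
    return 1 if score >= 0 else -1

# Toy data: two 1-D points; alpha_toy is the hand-derived dual optimum (an assumption here).
X_toy = np.array([[1.0], [-1.0]])
y_toy = np.array([1.0, -1.0])
alpha_toy = np.array([0.5, 0.5])

b_toy = kernel_svm_bias(X_toy, y_toy, alpha_toy, linear_kernel)
label_pos = kernel_svm_predict(np.array([2.0]), X_toy, y_toy, alpha_toy, b_toy, linear_kernel)
label_neg = kernel_svm_predict(np.array([-3.0]), X_toy, y_toy, alpha_toy, b_toy, linear_kernel)
print(b_toy, label_pos, label_neg)
```

Swapping `linear_kernel` for another kernel changes the classifier without touching the rest of the code, which is the whole point of the kernel trick.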

Let us come back to the 2nd-order polynomial transform. If we add scaling factors to the terms of the transform, we get new kernel functions:

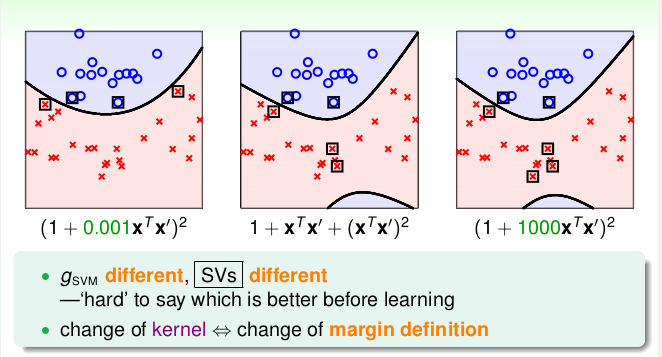

From the viewpoint of geometry, a different kernel means a different inner product and hence a different notion of distance, which changes the appearance of the mapping and the definition of the margin.

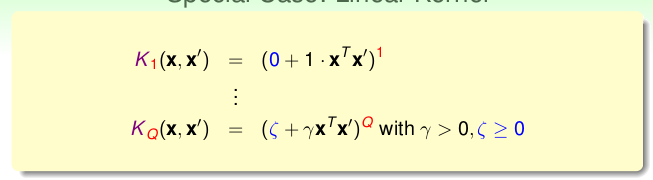

Now we have the general form of the polynomial kernel: $K_Q(x, x') = (\zeta + \gamma x^T x')^Q$ with $\gamma > 0$ and $\zeta \ge 0$.

In particular, Q = 1 gives the linear case.
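A small sketch of the general polynomial kernel follows, assuming the common parameterization $(\zeta + \gamma x^T x')^Q$; the function name and the example vectors are mine. With Q = 1, zeta = 0 and gamma = 1 it falls back to the plain inner product, which is the linear kernel.

```python
import numpy as np

def poly_kernel(x, x_prime, Q=2, zeta=1.0, gamma=1.0):
    """K_Q(x, x') = (zeta + gamma * x^T x')^Q."""
    return (zeta + gamma * float(np.dot(x, x_prime))) ** Q

u = np.array([1.0, 2.0])
v = np.array([3.0, -1.0])

k2 = poly_kernel(u, v, Q=2, zeta=1.0, gamma=1.0)     # 2nd-order kernel
k_lin = poly_kernel(u, v, Q=1, zeta=0.0, gamma=1.0)  # reduces to u^T v
print(k2, k_lin)
```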

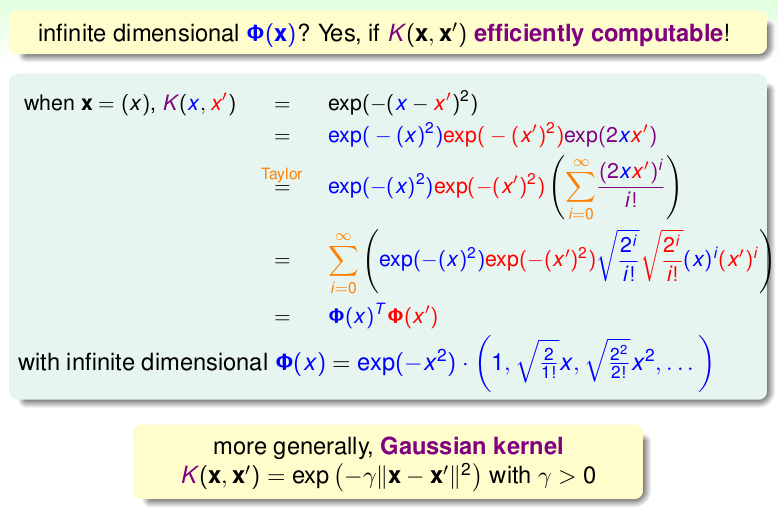

Then, how about an infinite-dimensional Φ(x)? Yes, it is also available.

This is the Gaussian kernel $K(x, x') = \exp(-\gamma \|x - x'\|^2)$, also called the Radial Basis Function (RBF) kernel. The resulting SVM is a linear combination of Gaussians centered at the support vectors $x_n$.

The value of $\gamma$ determines how sharp the peak of each Gaussian is. A large $\gamma$ gives very sharp peaks, which means the shadow of overfitting is still alive.

In the end, Mr. Lin compares these three kernel functions (linear, polynomial and Gaussian) and shows their pros and cons, which are in line with intuition.

An important point is that a hand-made function is a valid kernel only if it is symmetric and its kernel matrix $K = ZZ^T$ is always positive semi-definite (Mercer's condition).
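To see the effect of this parameter numerically, here is a minimal sketch that assumes the usual Gaussian kernel form exp(-gamma * ||x - x'||^2). The function name and the three sample points are hypothetical. With a small gamma the points still "see" each other; with a very large gamma the kernel matrix is almost the identity, so every point only resembles itself.

```python
import numpy as np

def rbf_kernel_matrix(X, gamma):
    """K[n, m] = exp(-gamma * ||x_n - x_m||^2)."""
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    sq_dists = np.maximum(sq_dists, 0.0)  # clip tiny negatives from round-off
    return np.exp(-gamma * sq_dists)

X_pts = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
K_small = rbf_kernel_matrix(X_pts, gamma=0.1)    # wide Gaussians, smooth similarity
K_large = rbf_kernel_matrix(X_pts, gamma=100.0)  # sharp Gaussians, near-identity matrix
print(np.round(K_small, 3))
print(np.round(K_large, 3))
```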
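A quick numerical sanity check for a candidate kernel can be sketched as below. It only tests one kernel matrix on one data set, so passing it is necessary but not sufficient evidence that the function is a valid kernel. The function name, the tolerance and the "negative squared distance" counterexample are my own choices for illustration.

```python
import numpy as np

def looks_like_valid_kernel_matrix(K, tol=1e-9):
    """Check one kernel matrix for the two required properties: symmetric and PSD."""
    K = np.asarray(K, dtype=float)
    if not np.allclose(K, K.T, atol=tol):
        return False
    eigenvalues = np.linalg.eigvalsh(K)  # eigvalsh assumes a symmetric matrix
    return bool(eigenvalues.min() >= -tol)

X_chk = np.array([[0.0, 1.0], [1.0, 1.0], [2.0, -1.0]])
K_poly = (1.0 + X_chk @ X_chk.T) ** 2  # 2nd-order polynomial kernel matrix
K_bad = -np.sum((X_chk[:, None] - X_chk[None, :]) ** 2, axis=2)  # negative squared distance

ok_poly = looks_like_valid_kernel_matrix(K_poly)
ok_bad = looks_like_valid_kernel_matrix(K_bad)
print(ok_poly, ok_bad)
```

The second matrix has a zero diagonal and non-zero entries elsewhere, so it must have a negative eigenvalue and fails the check.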
