# -*- coding: utf-8 -*-

import requests

import re

from bs4 import BeautifulSoup

from datetime import datetime

# 7. 将456步骤定义成一个函数 def getClickCount(newsUrl):

def getClickCount(newsUrl):

# 4. 使用正则表达式取得新闻编号

# url = "http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html"

num = ".*/(.*).html"

num2 = re.match(num, newsUrl)

if num2:

print(num2.group(1))

# 5. 生成点击次数的Request URL

newsId = re.search('\_(.*).html', newsUrl).group(1).split('/')[-1]

clickUrl = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(newsId)

print(clickUrl)

# 6. 获取点击次数

resc = requests.get(clickUrl)

a = resc.text.split('.html')[-1].lstrip("('").rstrip("');")

print(a)

# 8. 将获取新闻详情的代码定义成一个函数 def getNewDetail(newsUrl):

def getNewDetail(newsUrl):

res1 = requests.get(newsUrl)

res1.encoding = "utf-8"

soup = BeautifulSoup(res1.text, "html.parser")

con = soup.select('#content')[0].tedtxt

info = soup.select('.show-info')[0].text

dt = info.lstrip('发布时间:')[:19]

# str = '2018-03-30 17:10:12'

dt2 = datetime.strptime(dt, '%Y-%m-%d %H:%M:%S')

print(dt2)

i = info.find('来源:')

if i > 0:

s = info[info.find('来源:'):].split()[0].lstrip('来源:')

print(s)

a = info.find('作者:')

if a > 0:

l = info[info.find('作者:'):].split()[0].lstrip('作者:')

print(l)

y = info.find('摄影:')

if y > 0:

u = info[info.find('作者:'):].split()[0].lstrip('作者:')

# 1. 用正则表达式判定邮箱是否输入正确。

r = '^(\w)+([.\_\-]\w+)*@(\w)+((\.\w{2,3}){1,3})$'

e = 'ad5214@qq.com'

if re.match(r,e):

print("success")

else:

print("fail")

# 2. 用正则表达式识别出全部电话号码。

str = "'版权所有:广州商学院 地址:广州市黄埔区九龙大道206号 学校办公室:020-82876130 招生电话:020-82872773'"

phone = re.findall('(\d{3,4})-(\d{6,8})',str)

print(phone)

# 3. 用正则表达式进行英文分词。re.split('',news)

cons = "'Everyone has their own dreams, I am the same. But my dream is not a lawyer, not a doctor, not actors, not even an industry. Perhaps my dream big people will find it ridiculous, but this has been my pursuit! My dream is to want to have a folk life! I want it to become a beautiful painting, it is not only sharp colors, but also the colors are bleak, I do not rule out the painting is part of the black, but I will treasure these bleak colors! Not yet, how about, a colorful painting, if not bleak, add color, how can it more prominent American? Life is like painting, painting the bright red color represents life beautiful happy moments.'"

con = re.split("[\s,.?\' ]+",cons)

print(con)

res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')

res.encoding = 'utf-8'

soup = BeautifulSoup(res.text,'html.parser')

for news in soup.select('li'):

if len(news.select('.news-list-title'))>0:

href = news.select('a')[0].attrs['href']

getClickCount(href)

getNewDetail(href)

break

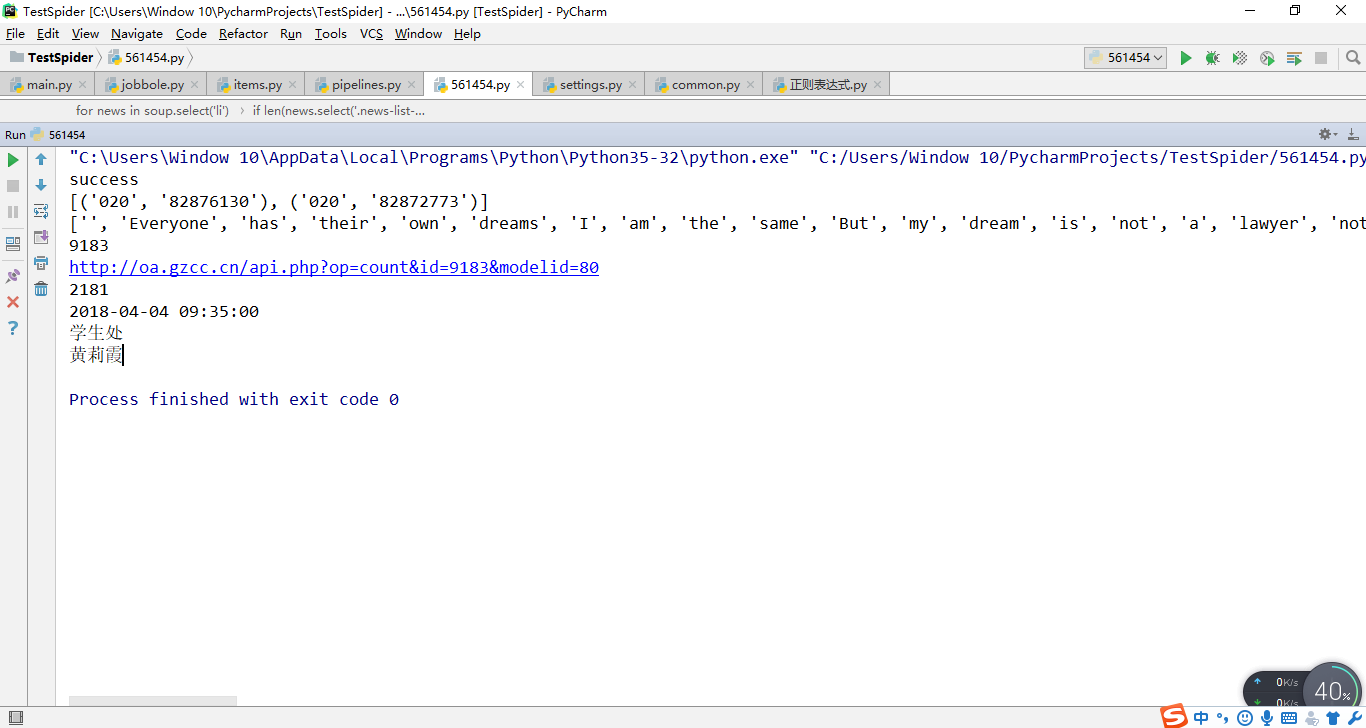

本文介绍了一个具体的爬虫应用案例,包括如何从特定网站抓取新闻详情及点击数等信息,并展示了正则表达式和BeautifulSoup在数据提取中的应用。

本文介绍了一个具体的爬虫应用案例,包括如何从特定网站抓取新闻详情及点击数等信息,并展示了正则表达式和BeautifulSoup在数据提取中的应用。

121

121

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?