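# Note: this import style works with pyecharts 0.x; from 1.0 on the class lives in pyecharts.charts.
from pyecharts import WordCloud
import re
from nltk.tokenize import WordPunctTokenizer

# Read the lyrics file line by line.
with open(r'F:\算法\others\merry.txt', 'r', encoding='utf-8') as f:
    text = f.readlines()

word_list = []
word_dic = {}
tokenizer = WordPunctTokenizer()

for line in text:
    # Only process lines that contain at least one English letter.
    if re.search('[a-zA-Z]', line.strip()):
        # Drop characters outside Latin-1 (for example, Chinese text).
        result = "".join(i for i in line.strip() if ord(i) < 256)
        words = tokenizer.tokenize(result)
        for w in words:
            # Keep tokens that start with a letter a-z (case-insensitive).
            if 97 <= ord(w.lower()[0]) <= 122:
                word_list.append(w)
# print(word_list)

# Count how often each distinct word occurs.
set_word_list = list(set(word_list))
for set_word in set_word_list:
    word_dic[set_word] = word_list.count(set_word)
# print(word_dic)

# Split the dictionary into parallel lists; counts are scaled by 100.
name = []
value = []
for k, v in word_dic.items():
    name.append(k)
    value.append(int(v) * 100)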

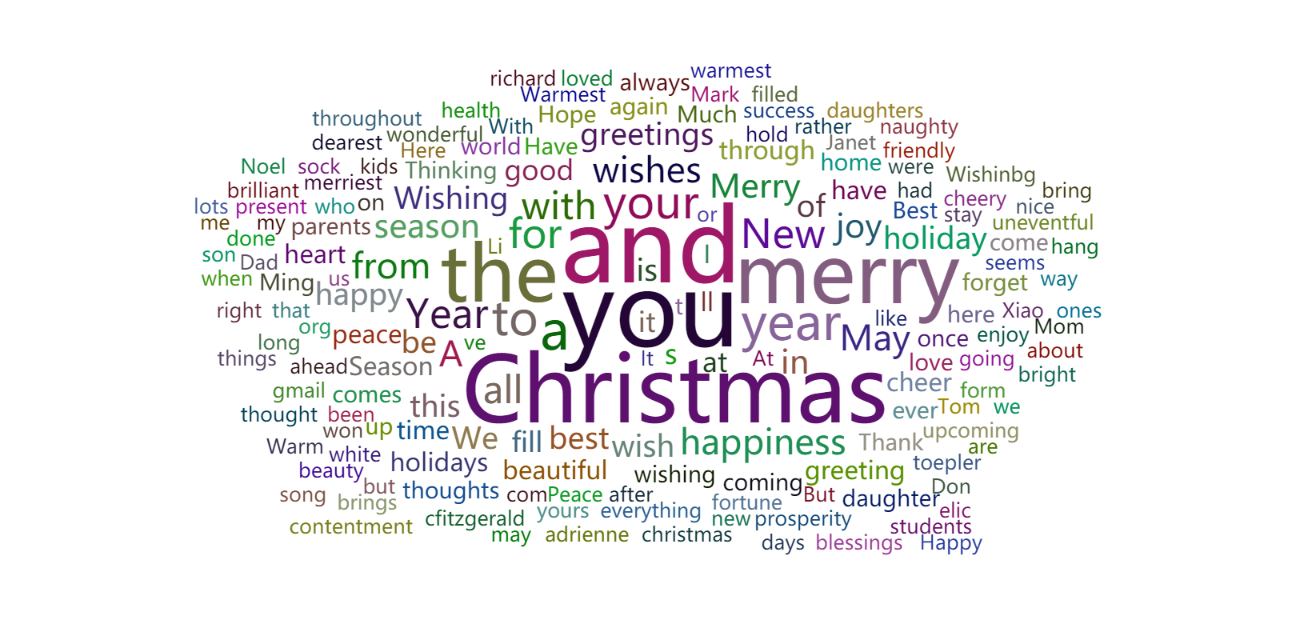

wordcloud = WordCloud(width=1300, height=620)
wordcloud.add("", name, value, word_size_range=[20, 100], shape='pentagon')
wordcloud.render('test.html')
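
The word-frequency step in the script can also be written with `collections.Counter`, which avoids calling `list.count` once per distinct word. The following is only a minimal sketch using the standard library: the `count_words` helper and the sample lines are hypothetical, and the regular expression is a simplified stand-in for NLTK's `WordPunctTokenizer`, not an exact equivalent of it.

```python
import re
from collections import Counter


def count_words(lines):
    """Count English words across lines (hypothetical helper, not part of the original script)."""
    counts = Counter()
    for line in lines:
        # Runs of ASCII letters only; a simplified stand-in for WordPunctTokenizer.
        counts.update(re.findall(r"[A-Za-z]+", line))
    return counts


# Made-up sample input, for illustration only.
sample = ["We wish you a merry Christmas", "圣诞快乐 merry merry"]
word_dic = count_words(sample)

# Parallel lists in the same shape the script passes to wordcloud.add().
name = list(word_dic)
value = [count * 100 for count in word_dic.values()]
```

With this variant, `name` and `value` can be passed to `wordcloud.add(...)` in the same way as the lists built by the original loop.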
