Installation and Deployment (Docker) | Apache StreamPark

docker --version

Docker version 24.0.4, build 3713ee1

docker compose version

Docker Compose version v2.19.1
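Before building, you can confirm that the tools used in this guide are on the PATH. The sketch below uses two hypothetical helpers, `require_cmds` and `has_compose_v2`, which are not part of StreamPark. They only check that each command exists and that the Compose v2 plugin responds. They do not enforce any minimum version.

```shell
# Hypothetical preflight helper: report every missing command and
# return non-zero if at least one of them is missing.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Hypothetical helper: succeed only if the Compose v2 plugin works,
# i.e. "docker compose version" (not the legacy "docker-compose").
has_compose_v2() {
  docker compose version >/dev/null 2>&1
}
```

Usage: `require_cmds docker git wget curl && has_compose_v2 && echo ready`.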

2. Build the image

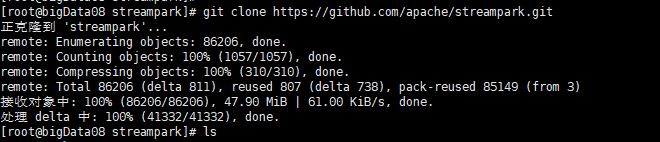

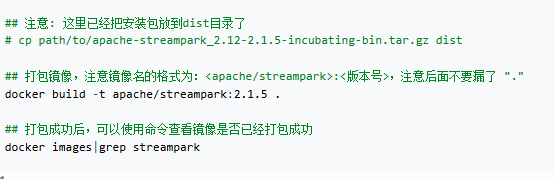

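This article builds the image manually. First download the StreamPark source code with the commands below and switch to the target branch. Then put the packaged installation package into the dist directory: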

git clone https://github.com/apache/streampark.git
# Enter the docker directory
cd streampark/docker
# Switch to the branch you want to build; here it is release-2.1.7
git checkout release-2.1.7
# Create the dist directory
mkdir dist


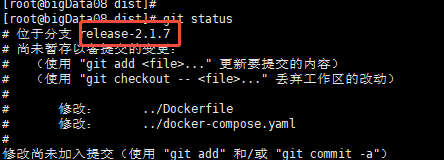

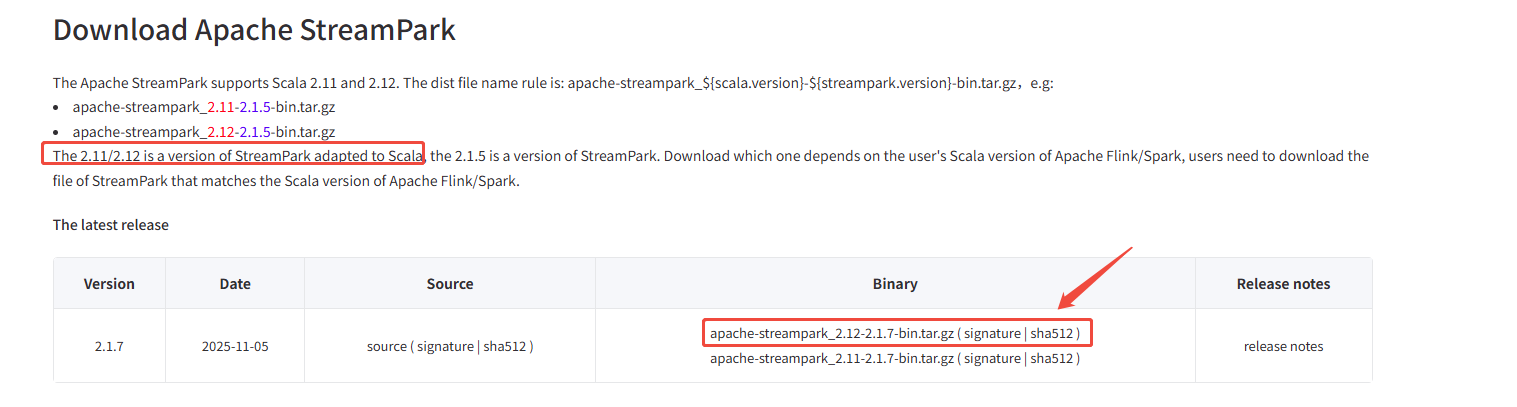

Download:

The download takes a long time, so be patient.

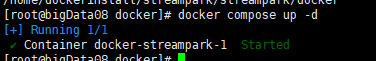

Once the dist directory exists, put the installation package (built yourself or downloaded) into it.
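Before running `docker build`, a quick check can confirm that a package is actually present in `dist`. This is a minimal sketch built around a hypothetical `check_dist` helper. It assumes the package is a `*.tar.gz` file and does not check the exact file name that the Dockerfile expects.

```shell
# Hypothetical helper: succeed only if DIR/dist contains at least one
# .tar.gz file (assumption: the installation package is a .tar.gz).
check_dist() {
  local dir f
  dir=${1:-.}
  for f in "$dir"/dist/*.tar.gz; do
    # If nothing matches, the glob stays literal and -f is false.
    if [ -f "$f" ]; then
      echo "found package: $f"
      return 0
    fi
  done
  echo "no .tar.gz package in $dir/dist" >&2
  return 1
}
```

Usage, from `streampark/docker`: `check_dist . && docker build -t apache/streampark:2.1.7 .`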


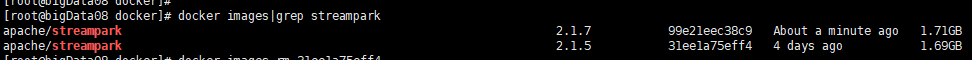

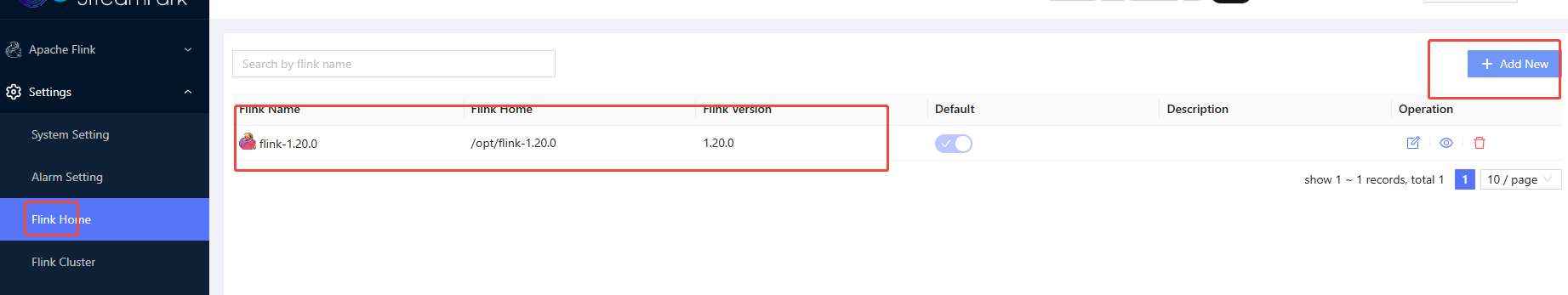

Build the image for the latest release, 2.1.7:

docker build -t apache/streampark:2.1.7 .


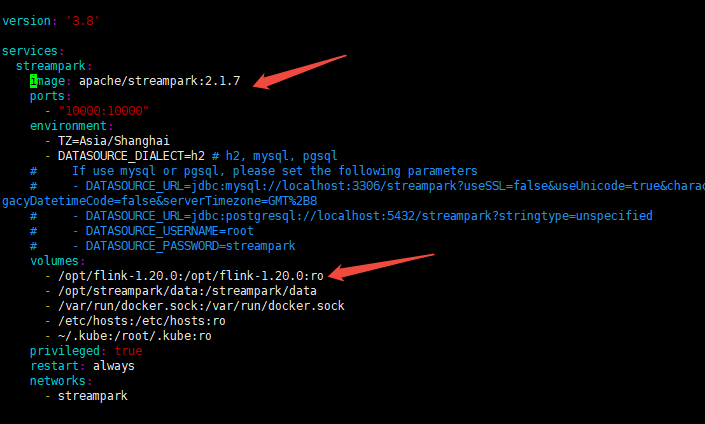

version: '3.8'

services:

streampark:

image: apache/streampark:2.1.7

ports:

- "10000:10000"

environment:

- TZ=Asia/Shanghai

- DATASOURCE_DIALECT=h2 # h2, mysql, pgsql

# If use mysql or pgsql, please set the following parameters

# - DATASOURCE_URL=jdbc:mysql://localhost:3306/streampark?useSSL=false&useUnicode=true&characterEncoding=UTF-8&allowPublicKeyRetrieval=false&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=GMT%2B8

# - DATASOURCE_URL=jdbc:postgresql://localhost:5432/streampark?stringtype=unspecified

# - DATASOURCE_USERNAME=root

# - DATASOURCE_PASSWORD=streampark

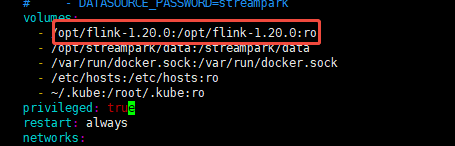

volumes:

- /opt/flink-1.20.0:/opt/flink-1.20.0:ro

- /opt/streampark/data:/streampark/data

- /var/run/docker.sock:/var/run/docker.sock

- /etc/hosts:/etc/hosts:ro

- ~/.kube:/root/.kube:ro

privileged: true

restart: always

networks:

- streampark

healthcheck:

test: [ "CMD", "curl", "http://streampark:10000" ]

interval: 5s

timeout: 5s

retries: 120

networks:

streampark:

driver: bridge
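The bind mounts in this compose file only behave as intended if the host paths already exist. With the short `host:container` syntax, Docker generally creates a missing host path as an empty directory. A typo in the Flink path would then silently give StreamPark an empty Flink home. The hypothetical helper below checks the host side of each mount before you run `docker compose up -d`.

```shell
# Hypothetical helper: print each host path that does not exist and
# return non-zero if any of them is missing.
check_host_paths() {
  local rc=0 p
  for p in "$@"; do
    if [ ! -e "$p" ]; then
      echo "missing host path: $p" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage, with the host paths from the volumes section: `check_host_paths /opt/flink-1.20.0 /opt/streampark/data /var/run/docker.sock /etc/hosts "$HOME/.kube"`.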

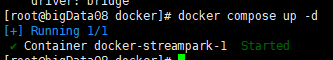

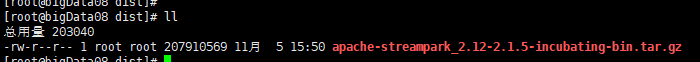

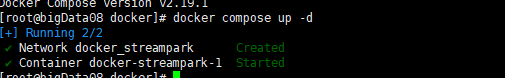

docker compose up -d


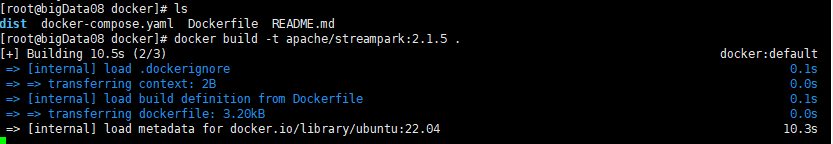

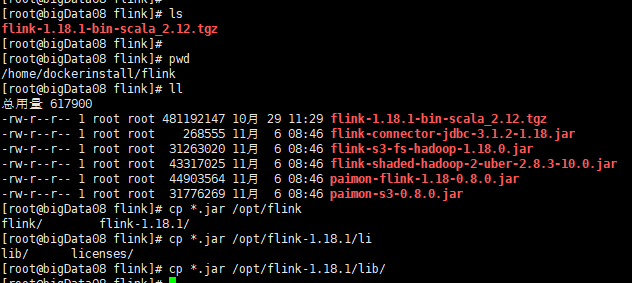

Configure Flink 1.20

wget https://archive.apache.org/dist/flink/flink-1.20.0/flink-1.20.0-bin-scala_2.12.tgz
wget https://repo1.maven.org/maven2/org/apache/paimon/paimon-flink-1.20/1.2.0/paimon-flink-1.20-1.2.0.jar
wget https://repo1.maven.org/maven2/com/ververica/flink-sql-connector-mysql-cdc/3.0.1/flink-sql-connector-mysql-cdc-3.0.1.jar
wget https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-client-api/3.3.4/hadoop-client-api-3.3.4.jar
wget https://repo1.maven.org/maven2/com/amazonaws/aws-java-sdk-bundle/1.12.262/aws-java-sdk-bundle-1.12.262.jar
tar zxvf flink-1.20.0-bin-scala_2.12.tgz -C /opt/
cp *.jar /opt/flink-1.20.0/lib/
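After copying, you can confirm that each jar actually landed in Flink's `lib` directory. This sketch uses a hypothetical `check_flink_lib` helper, which takes the Flink home followed by the jar file names.

```shell
# Hypothetical helper: confirm that every named jar exists in
# FLINK_HOME/lib; report each missing one and return non-zero if any are missing.
check_flink_lib() {
  local flink_home=$1 rc=0 jar
  shift
  for jar in "$@"; do
    if [ ! -f "$flink_home/lib/$jar" ]; then
      echo "not in $flink_home/lib: $jar" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_flink_lib /opt/flink-1.20.0 paimon-flink-1.20-1.2.0.jar flink-sql-connector-mysql-cdc-3.0.1.jar hadoop-client-api-3.3.4.jar aws-java-sdk-bundle-1.12.262.jar`.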


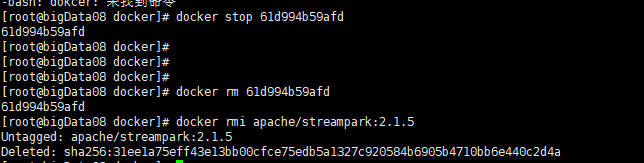

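Next, modify docker-compose.yaml so that /opt/flink-1.20.0 is mounted into the container (see the volumes section of the compose file above):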

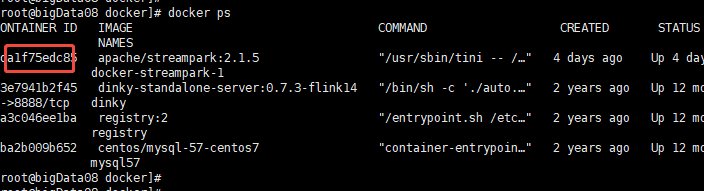

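Stop and remove the running container. Here 7da1f75edc85 is the container ID from the author's environment; use your own ID from docker ps.

docker stop 7da1f75edc85
docker rm 7da1f75edc85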

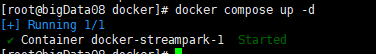

Start it again:

docker compose up -d

Configure Flink 1.20

Configure the Flink cluster

-----------------------------------------------------------------------------------------------------

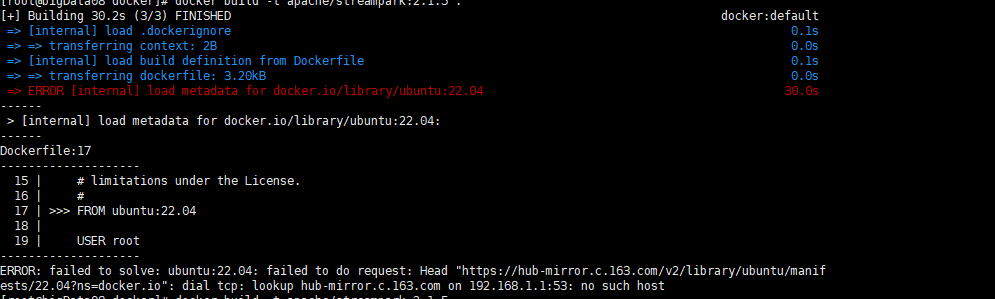

Installing the earlier 2.1.5 release

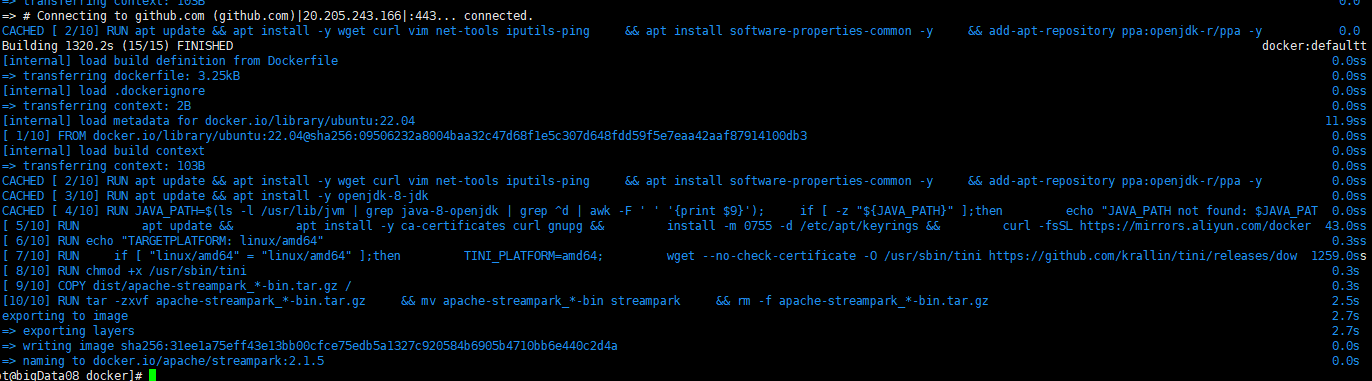

docker build -t apache/streampark:2.1.5 .

The build failed. Configure registry mirrors for the Docker daemon:

sudo vim /etc/docker/daemon.json

{
  "registry-mirrors": [
    "https://docker.xuanyuan.me",
    "https://tcr.tuna.tsinghua.edu.cn",
    "https://mirror.ccs.tencentyun.com",
    "https://docker.1ms.run"
  ]
}

sudo systemctl daemon-reexec
sudo systemctl restart docker

Then run the build again.
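If you prefer not to hand-edit the JSON in vim, a small generator avoids bracket and comma mistakes, such as a missing opening brace. This is a minimal sketch using a hypothetical `write_daemon_json` helper. It writes to a path you choose, so you can review the file before copying it to /etc/docker/daemon.json. It overwrites the target file, so merge by hand if your daemon.json already has other settings.

```shell
# Hypothetical helper: write a daemon.json containing only the given
# registry mirrors (overwrites OUT).
write_daemon_json() {
  local out=$1 first=1 m
  shift
  {
    printf '{\n  "registry-mirrors": [\n'
    for m in "$@"; do
      # Put a comma between entries, but not after the last one.
      if [ "$first" -eq 1 ]; then first=0; else printf ',\n'; fi
      printf '    "%s"' "$m"
    done
    printf '\n  ]\n}\n'
  } > "$out"
}
```

Usage: `write_daemon_json /tmp/daemon.json https://docker.xuanyuan.me https://tcr.tuna.tsinghua.edu.cn https://mirror.ccs.tencentyun.com https://docker.1ms.run`, then `sudo cp /tmp/daemon.json /etc/docker/daemon.json && sudo systemctl restart docker`.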

Another problem came up:

curl: (35) OpenSSL SSL_connect: Connection reset by peer in connection to download.docker.com:443

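The Dockerfile needs to be modified so that Docker is installed from the Aliyun mirror instead of download.docker.com: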

vim Dockerfile

# Install docker
RUN \
    # Add Docker's GPG key from Aliyun mirror:
    apt update && \
    apt install -y ca-certificates curl gnupg && \
    install -m 0755 -d /etc/apt/keyrings && \
    curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | gpg --dearmor -o /etc/apt/keyrings/docker.gpg && \
    chmod a+r /etc/apt/keyrings/docker.gpg && \
    # Add the Aliyun Docker CE repository:
    echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
    tee /etc/apt/sources.list.d/docker.list > /dev/null && \
    apt update && \
    # Install the Docker packages.
    apt install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

In short: replace https://download.docker.com with https://mirrors.aliyun.com/docker-ce.
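The same URL swap can also be done with `sed` instead of editing by hand. This is a minimal sketch using a hypothetical `use_aliyun_docker_mirror` helper. It rewrites every `https://download.docker.com` prefix in the given Dockerfile and keeps a `.bak` copy of the original. `sed -i.bak` is accepted by both GNU and BSD sed.

```shell
# Hypothetical helper: point download.docker.com URLs in a Dockerfile at
# the Aliyun docker-ce mirror; the original is kept as <file>.bak.
use_aliyun_docker_mirror() {
  local dockerfile=${1:-Dockerfile}
  sed -i.bak 's#https://download\.docker\.com#https://mirrors.aliyun.com/docker-ce#g' "$dockerfile"
}
```

Usage, in `streampark/docker`: `use_aliyun_docker_mirror Dockerfile && grep -n aliyun Dockerfile`.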

Rebuild the image.

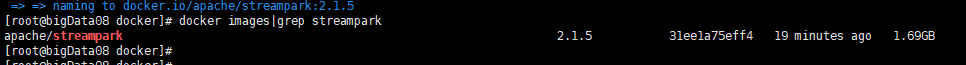

docker images|grep streampark

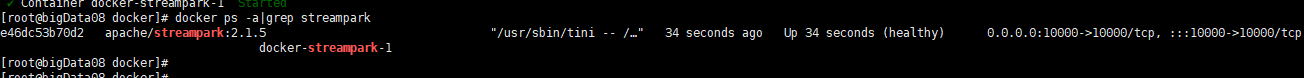

3. Start the service

docker compose up -d

docker ps -a|grep streampark
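The container can take a while to become ready, so it can help to wait for the web port before opening the login page. The sketch below uses a hypothetical `retry` helper. The usage line mirrors the compose healthcheck (a 5 s interval and up to 120 attempts) and assumes the port 10000 mapping from the compose file.

```shell
# Hypothetical helper: run a command up to N times, sleeping S seconds
# between attempts; succeed as soon as one attempt succeeds.
retry() {
  local attempts=$1 delay=$2 i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Do not sleep after the final failed attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

Usage: `retry 120 5 curl -fsS -o /dev/null http://localhost:10000 && echo "StreamPark is up"`.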

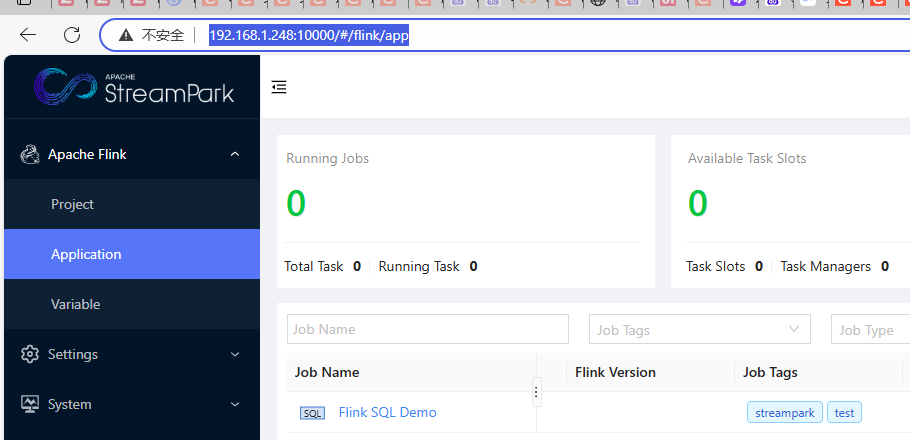

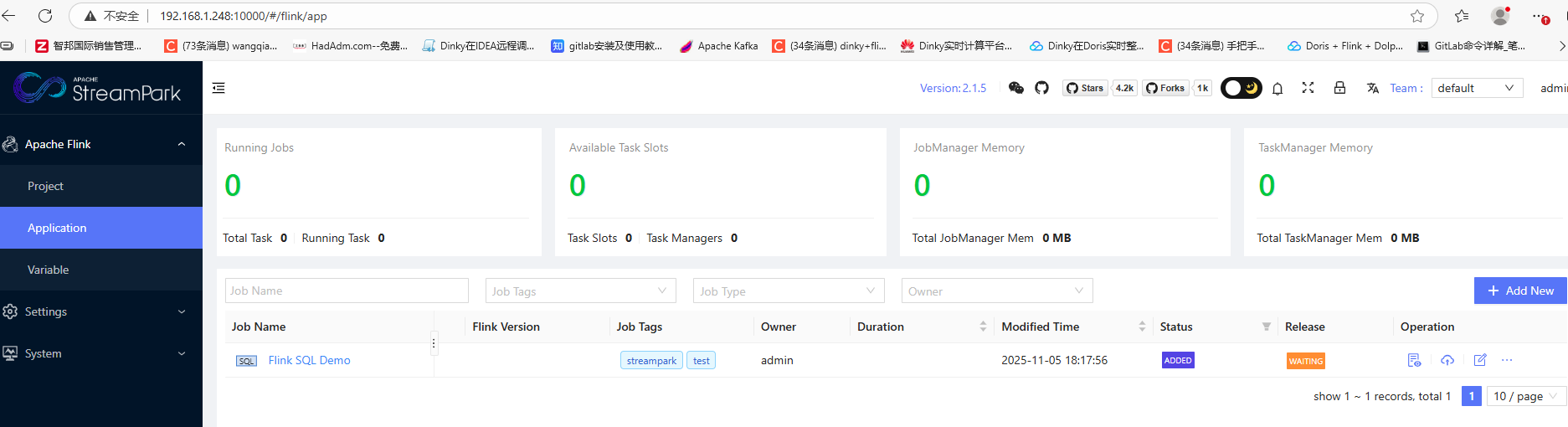

Access

Open the login page (Login - Apache StreamPark) at http://<host>:10000 and sign in with the default account:

admin / streampark

With the setup above, Flink could not be configured.

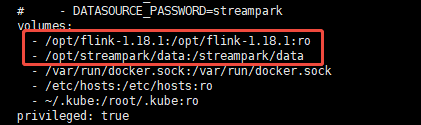

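Modify docker-compose.yaml. First create the data directory on the host:

sudo mkdir -p /opt/streampark/data

Then add this mapping under volumes (left side: host directory; right side: directory inside the container):

- /opt/streampark/data:/streampark/data

Start the service again:

docker compose up -d

This time the configuration succeeded.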

--------------------------------------------------------------------------

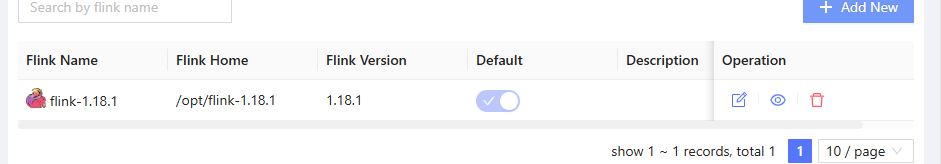

Configure Flink

Configure Flink 1.18.1 in StreamPark

Step 1: Install Flink

wget https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-1.18.1/flink-1.18.1-bin-scala_2.12.tgz
tar zxvf flink-1.18.1-bin-scala_2.12.tgz -C /opt/

Example path: /opt/flink-1.18.1

wget https://repo1.maven.org/maven2/org/apache/paimon/paimon-s3/0.8.0/paimon-s3-0.8.0.jar
wget https://repo1.maven.org/maven2/org/apache/paimon/paimon-flink-1.18/0.8.0/paimon-flink-1.18-0.8.0.jar
wget https://repo1.maven.org/maven2/org/apache/flink/flink-connector-jdbc/3.1.2-1.18/flink-connector-jdbc-3.1.2-1.18.jar
wget https://repo1.maven.org/maven2/org/apache/flink/flink-shaded-hadoop-2-uber/2.8.3-10.0/flink-shaded-hadoop-2-uber-2.8.3-10.0.jar
wget https://repo1.maven.org/maven2/org/apache/flink/flink-s3-fs-hadoop/1.18.0/flink-s3-fs-hadoop-1.18.0.jar
cp *.jar /opt/flink-1.18.1/lib/
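When the jar list grows, a loop that stops at the first failed download is easier to maintain than separate wget lines. This is a minimal sketch using a hypothetical `fetch_jars` helper. It substitutes curl for wget: `-f` fails on HTTP errors, `-L` follows redirects, and `-O` keeps the remote file name. It downloads straight into the target directory, so the separate `cp` step is not needed.

```shell
# Hypothetical helper: download each URL into DEST, stopping at the
# first failure (curl used instead of wget).
fetch_jars() {
  local dest=$1 url
  shift
  mkdir -p "$dest" || return 1
  for url in "$@"; do
    (cd "$dest" && curl -fsSLO "$url") || {
      echo "download failed: $url" >&2
      return 1
    }
  done
}
```

Usage: `fetch_jars /opt/flink-1.18.1/lib https://repo1.maven.org/maven2/org/apache/paimon/paimon-s3/0.8.0/paimon-s3-0.8.0.jar https://repo1.maven.org/maven2/org/apache/paimon/paimon-flink-1.18/0.8.0/paimon-flink-1.18-0.8.0.jar`, followed by the remaining URLs.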

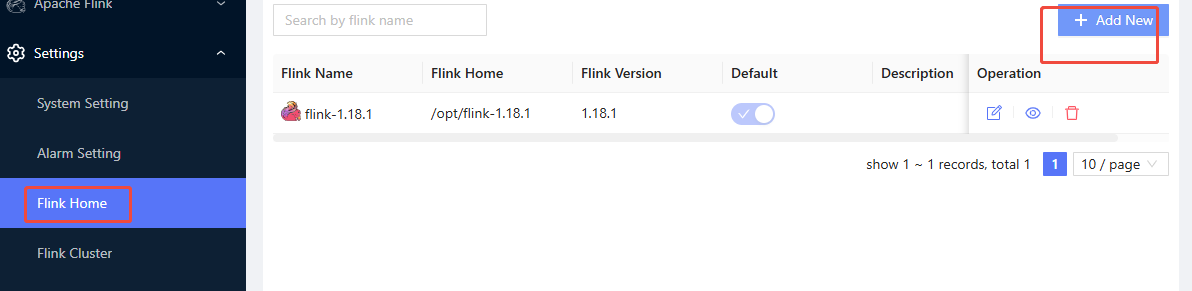

Step 2: Add the Flink environment in the StreamPark Web UI

- Log in to the StreamPark console
- Go to Settings → Flink Env
- Click Add Flink Env
- Fill in:
  - Flink Name: flink-1.18.1
  - Flink Home: /opt/flink-1.18.1
  - Version: 1.18.1
  - (Optional) Scala Version: 2.12
- Click Save

✅ Verify: after you save, StreamPark tries to run bin/flink --version. If that succeeds, the configuration is valid.
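If saving fails, check the Flink home layout first. StreamPark runs in a container, so the Flink Home path is resolved inside the container, and the host directory must be mounted at that same path. This is a minimal sketch using a hypothetical `check_flink_home` helper, which you run on the host against the mounted directory. It only checks for the files a standard Flink binary distribution contains (`bin/flink` and a `lib/flink-dist*.jar`). It does not reproduce StreamPark's own validation.

```shell
# Hypothetical helper: sanity-check the layout of a Flink home directory.
check_flink_home() {
  local home=$1 f
  if [ ! -x "$home/bin/flink" ]; then
    echo "no executable $home/bin/flink" >&2
    return 1
  fi
  for f in "$home"/lib/flink-dist*.jar; do
    # If nothing matches, the glob stays literal and -f is false.
    if [ -f "$f" ]; then
      return 0
    fi
  done
  echo "no flink-dist jar in $home/lib" >&2
  return 1
}
```

Usage: `check_flink_home /opt/flink-1.18.1 && /opt/flink-1.18.1/bin/flink --version`.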

---------------------------------------------------------

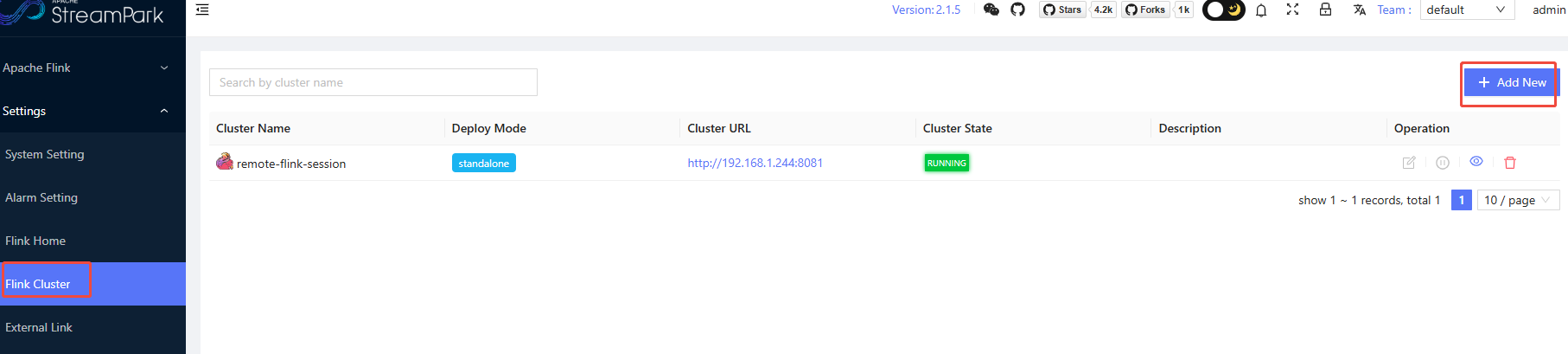

Configure a remote Flink cluster:

Make sure port 8081 of the remote Flink cluster is reachable from StreamPark.
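One way to test reachability is to query the JobManager's REST API, which Flink serves on port 8081 by default. `/overview` is one of its endpoints. This is a minimal sketch using a hypothetical `check_flink_rest` helper. The 5-second timeout is an arbitrary choice.

```shell
# Hypothetical helper: succeed if http://HOST:PORT/overview answers
# with a successful HTTP status within 5 seconds.
check_flink_rest() {
  local host=$1 port=${2:-8081}
  curl -fsS --max-time 5 "http://$host:$port/overview" >/dev/null
}
```

Usage: `check_flink_rest <flink-host> && echo reachable`. Since StreamPark itself runs in the container, you can also run the same check from inside it: `docker exec <container> curl -fsS http://<flink-host>:8081/overview`. Here `<flink-host>` and `<container>` are placeholders for your own values.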

--------------------------------------------------------------------
