#include "threadpool.h"

#include <assert.h>

#include <errno.h>

#include <pthread.h>

#include <stdbool.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/prctl.h>

#include <unistd.h>

/* 初始化任务队列 */

void init_taskqueue(taskqueue* queue) {

//Todo

queue->front=NULL;

queue->rear=NULL;

queue->len=0;

//初始化队列互斥锁

pthread_mutex_init(&queue->mutex,NULL);

//分配并初始化任务状态控制

queue->has_jobs=(staconv*)malloc(sizeof(staconv));

pthread_mutex_init(&queue->has_jobs->mutex,NULL);

pthread_cond_init(&queue->has_jobs->cond,NULL);

queue->has_jobs->status=0;

}

/* 向任务队列中添加任务 */

void push_taskqueue(taskqueue* queue, task* new_task) {

//Todo

pthread_mutex_lock(&queue->mutex);

if(queue->rear==NULL){

queue->front=queue->rear=new_task;

}else{

queue->rear->next=new_task;

queue->rear=new_task;

}

new_task->next=NULL;

queue->len++;

printf("任务队列:%d",queue->len);

staconv*sc=queue->has_jobs;

pthread_mutex_lock(&sc->mutex);

sc->status++;

pthread_cond_signal(&sc->cond);

pthread_mutex_unlock(&sc->mutex);

pthread_mutex_unlock(&queue->mutex);

}

/* 从任务队列中获取任务 */

task* take_taskqueue(taskqueue* queue) {

pthread_mutex_lock(&queue->mutex);

staconv*sc=queue->has_jobs;

//Todo

while(queue->len == 0){

pthread_cond_wait(&sc->cond, &sc->mutex);

}

task* curtask = NULL;

if (queue->len > 0) {

curtask = queue->front;

queue->front = curtask->next;

if (!queue->front) {

queue->rear = NULL;

}

queue->len--;

}

pthread_mutex_unlock(&queue->mutex);

return curtask;

}

/* 销毁任务队列 */

void destroy_taskqueue(taskqueue* queue) {

//Todo

task*current=queue->front;

while(current!=NULL){

task*next=current->next;

free(current->arg);

free(current);

current=next;

}

pthread_mutex_destroy(&queue->has_jobs->mutex);

pthread_cond_destroy(&queue->has_jobs->cond);

free(queue->has_jobs);

pthread_mutex_destroy(&queue->mutex);

}

/* 线程运行的逻辑函数 */

void* thread_do(void* arg) {

thread*pthread=(thread*)arg;

threadpool* pool=pthread->pool;

/* 设置线程的名称 */

char thread_name[128] = {0};

sprintf(thread_name, "thread-pool-%d", pthread->id);

prctl(PR_SET_NAME, thread_name);

// 在初始化线程池时,对已经创建线程的数量进行统计,执行pool->num_threads++ */

pthread_mutex_lock(&pool->thcount_lock);

pool->num_threads++;

pthread_mutex_unlock(&pool->thcount_lock);

// 线程启动完成,标记为 ready

pthread_mutex_lock(&pthread->ready_mutex);

pthread->ready = true;

pthread_cond_signal(&pthread->ready_cond); // 通知主线程

pthread_mutex_unlock(&pthread->ready_mutex);

while (pool->is_alive) {

// 如果任务队列中还有任务,则继续运行;否则阻塞*

staconv*sc=pool->queue.has_jobs;

pthread_mutex_lock(&sc->mutex);

while(pool->queue.len==0&&pool->is_alive){

if (pool->monitor) {

thread_enter_block(pool->monitor, pthread_self());

}

pthread_cond_wait(&sc->cond,&sc->mutex);

}

pthread_mutex_unlock(&sc->mutex);

if (pool->is_alive) {

if (pool->monitor) {

thread_enter_active(pool->monitor, pthread_self());

}

pthread_mutex_lock(&pool->thcount_lock);

pool->num_working++;

pthread_mutex_unlock(&pool->thcount_lock);

task* curtask = take_taskqueue(&pool->queue);

if (curtask) {

curtask->function(curtask->arg); // 执行任务

free(curtask); // 释放任务

}

pthread_mutex_lock(&pool->thcount_lock);

pool->num_working--;

// 如果所有任务完成,通知等待线程

// TODO

if(pool->queue.len==0&&pool->num_working==0){

pthread_cond_broadcast(&pool->threads_all_idle);

}

pthread_mutex_unlock(&pool->thcount_lock);

if (pool->monitor) {

thread_enter_block(pool->monitor, pthread_self());

}

}

}

//运行到此位置表明线程将要退出,需改变当前线程池中的线程数量

// TODO

pthread_mutex_lock(&pool->thcount_lock);

pool->num_threads--;

pthread_mutex_unlock(&pool->thcount_lock);

return NULL;

}

/* 创建线程 */

int create_thread(threadpool* pool, thread** pthread, int id) {

*pthread = (thread*)malloc(sizeof(thread));

if (*pthread == NULL) return -1;

(*pthread)->id = id;

(*pthread)->pool = pool;

(*pthread)->ready = false;

pthread_mutex_init(&(*pthread)->ready_mutex, NULL);

pthread_cond_init(&(*pthread)->ready_cond, NULL);

// 启动线程

pthread_create(&(*pthread)->pthread, NULL, thread_do, *pthread);

pthread_detach((*pthread)->pthread);

return 0;

}

/* 初始化线程池 */

// 修改 initThreadPool 函数,添加监控器参数并关联

threadpool* initThreadPool(int num_threads, ThreadPoolMonitor* monitor) {

threadpool* pool = (threadpool*)malloc(sizeof(threadpool));

if (!pool) {

perror("malloc threadpool failed");

return NULL;

}

pool->num_threads = 0;

pool->num_working = 0;

pool->is_alive = true;

pool->monitor = monitor; // 关联监控器

pthread_mutex_init(&pool->thcount_lock, NULL);

pthread_cond_init(&pool->threads_all_idle, NULL);

init_taskqueue(&pool->queue);

pool->threads = (thread**)malloc(num_threads * sizeof(thread*));

if (!pool->threads) {

free(pool);

return NULL;

}

for (int i = 0; i < num_threads; ++i) {

create_thread(pool, &pool->threads[i], i);

// 等待线程就绪

thread* t = pool->threads[i];

pthread_mutex_lock(&t->ready_mutex);

while (!t->ready) {

pthread_cond_wait(&t->ready_cond, &t->ready_mutex); // 阻塞直到线程就绪

}

pthread_mutex_unlock(&t->ready_mutex);

// 安全更新监控器

if (pool->monitor) {

update_thread_id(pool->monitor, t->pthread, i); // 传递实际线程ID

}

printf("THPOOL_DEBUG: Thread %d ready with ID %lu\n", i, t->pthread);

}

while (pool->num_threads != num_threads) {}

return pool;

}

/* 向线程池中添加任务 */

void addTaskToThreadPool(threadpool* pool, task* curtask) {

// 将任务加入队列

push_taskqueue(&pool->queue, curtask);

printf("added task to pool %d\n", *(int*)curtask->arg);

}

/* 等待当前任务全部运行完毕 */

void waitThreadPool(threadpool* pool) {

pthread_mutex_lock(&pool->thcount_lock);

while (pool->queue.len > 0 || pool->num_working > 0) {

pthread_cond_wait(&pool->threads_all_idle, &pool->thcount_lock);

}

pthread_mutex_unlock(&pool->thcount_lock);

}

/* 销毁线程池 */

void destroyThreadPool(threadpool* pool) {

/* No need to destroy if it's NULL */

if (pool == NULL) return;

pool->is_alive = false;

// 等待线程池中的所有线程完成工作

// TODO: 添加代码

staconv*sc=pool->queue.has_jobs;

pthread_mutex_lock(&sc->mutex);

pthread_cond_broadcast(&sc->cond);

pthread_mutex_unlock(&sc->mutex);

//等待所有线程完成当前任务并退出

pthread_mutex_lock(&pool->thcount_lock);

while(pool->num_threads>0)

{

pthread_cond_wait(&pool->threads_all_idle,&pool->thcount_lock);

}

pthread_mutex_unlock(&pool->thcount_lock);

// 销毁任务队列

destroy_taskqueue(&pool->queue);

// 销毁线程指针数组,并释放所有为线程池分配的内存

// TODO: 添加代码

for(int i=0;i<pool->num_threads;i++)

{

free(pool->threads[i]);

}

free(pool->threads);

// 销毁线程池的互斥量和条件变量

pthread_mutex_destroy(&pool->thcount_lock);

pthread_cond_destroy(&pool->threads_all_idle);

free(pool);

}

/* 获取当前线程池中正在运行线程的数量 */

int getNumofThreadWorking(threadpool* pool) { return pool->num_working; }

#ifndef THREADPOOL_H

#define THREADPOOL_H

#define BUFSIZE 8096 // 缓冲区大小

#include <pthread.h>

#include <stdbool.h>

/* 同步状态结构体(条件变量+互斥锁) */

typedef struct staconv {

pthread_mutex_t mutex;

pthread_cond_t cond;

int status;

} staconv;

/* 任务结构 */

typedef struct task {

struct task* next;

void (*function)(void* arg); // 函数指针

void* arg; // 函数参数

} task;

/* 任务队列 */

typedef struct taskqueue {

pthread_mutex_t mutex;

task* front; // 队首指针

task* rear; // 队尾指针

staconv* has_jobs; // 任务状态控制

int len; // 任务数量

} taskqueue;

/* 线程结构 */

typedef struct thread {

int id;

pthread_t pthread;

struct threadpool* pool;

bool ready; // 新增:线程是否准备好

pthread_mutex_t ready_mutex; // 新增:互斥锁

pthread_cond_t ready_cond; // 新增:条件变量

} thread;

//线程状态监控结构

typedef struct {

pthread_t tid;

int active;

long long active_time;

long long block_time;

struct timespec last_time;

} ThreadStatus;

//线程池监控结构

typedef struct {

pthread_mutex_t mutex;

int thread_count;

ThreadStatus *threads;

int max_active;

int min_active;

long long total_active_time;

long long total_block_time;

} ThreadPoolMonitor;

/* 线程池结构 */

typedef struct threadpool {

thread** threads; // 线程数组

volatile int num_threads; // 线程总数

volatile int num_working; // 工作线程数

pthread_mutex_t thcount_lock; // 线程计数器锁

pthread_cond_t threads_all_idle; // 空闲条件变量

taskqueue queue; // 任务队列

volatile bool is_alive; // 线程池存活状态

ThreadPoolMonitor* monitor; //添加监控器引用

} threadpool;

typedef struct{

int fd;

pthread_t tid;

int thread_idx;

}thread_data;

//消息队列节点(用于传递文件名)

typedef struct{

int fd;

char filename[BUFSIZE];

char filetype[BUFSIZE];

}FilenameMsg;

//消息队列节点(用于传递文件内容)

typedef struct{

int fd;

char content[BUFSIZE];

long len;

char filetype[BUFSIZE];

}Msg;

typedef struct{

long long total_read_socket_time; // socket 读取时间(μs)

long long total_write_socket_time; // socket 写入时间(μs)

long long total_read_file_time; // 网页文件读取时间(μs)

long long total_write_log_time; // 日志写入时间(μs)

long long total_processing_time; // 线程处理时间(μs)

int child_count; // 已处理线程数

pthread_mutex_t mutex; // 互斥锁

}Stats;

/******************** 线程池对外接口 ********************/

// 初始化线程池

// threadpool.h 中修改函数声明

threadpool* initThreadPool(int num_threads, ThreadPoolMonitor* monitor);

// 添加任务到线程池

void addTaskToThreadPool(threadpool* pool, task* curtask);

// 等待所有任务完成

void waitThreadPool(threadpool* pool);

// 销毁线程池

void destroyThreadPool(threadpool* pool);

// 获取当前工作线程数

int getNumofThreadWorking(threadpool* pool);

void readMsgThread(void *arg);

void readFileThread(void *arg);

void sendMsgThread(void *arg);

void init_taskqueue(taskqueue* queue);

void push_taskqueue(taskqueue* queue, task* new_task);

void destroy_taskqueue(taskqueue* queue);

void addTaskToThreadPool(threadpool* pool, task* new_task);

void update_thread_id(ThreadPoolMonitor *monitor, pthread_t tid, int index);

void thread_enter_active(ThreadPoolMonitor *monitor, pthread_t tid);

void thread_enter_block(ThreadPoolMonitor *monitor, pthread_t tid);

#endif // THREADPOOL_H#include "threadpool.h"

#include <arpa/inet.h> // 网络地址转换

#include <errno.h> // 错误号定义

#include <fcntl.h> // 文件控制操作

#include <netinet/in.h> // 互联网地址族

#include <signal.h> // 信号处理

#include <stdio.h> // 标准输入输出

#include <stdlib.h> // 标准库函数

#include <string.h> // 字符串操作

#include <sys/socket.h> // 套接字接口

#include <sys/types.h> // 系统数据类型

#include <unistd.h> // POSIX系统调用

#include<time.h>

#include<sys/time.h>

#include<sys/wait.h>

#include<sys/sem.h>

#include<sys/ipc.h>

#include<sys/shm.h>

#include<pthread.h>

// 常量定义

#define VERSION 23 // 服务器版本

#define ERROR 42 // 错误日志类型

#define LOG 44 // 普通日志类型

#define FORBIDDEN 403 // 禁止访问状态码

#define NOTFOUND 404 // 文件未找到状态码

#define MAX_THREADS 100 //线程池线程数量

#define MONITOR_INTERVAL 2//性能监控间隔

// 支持的文件扩展名与MIME类型映射

struct {

char *ext; // 文件扩展名

char *filetype; // 对应MIME类型

} extensions[] = {

{"gif", "image/gif"}, // GIF图片

{"jpg", "image/jpg"}, // JPEG图片

{"jpeg", "image/jpeg"}, // JPEG图片

{"png", "image/png"}, // PNG图片

{"ico", "image/ico"}, // ICO图标

{"zip", "image/zip"}, // ZIP压缩包(原代码MIME类型可能有误)

{"gz", "image/gz"}, // GZ压缩包

{"tar", "image/tar"}, // TAR归档

{"htm", "text/html"}, // HTML文件

{"html", "text/html"}, // HTML文件

{0, 0} // 结束标记

};

// pthread_t threads[MAX_THREADS];//子线程数组

pthread_mutex_t lock;

pthread_mutex_t io_stats_lock; //保护I/O统计数据

volatile long total_read_socket_time=0;

volatile long total_write_socket_time=0;

volatile long total_read_file_time=0;

volatile long total_write_log_time=0;

volatile int total_requests=0;

volatile sig_atomic_t server_running=1;

Stats stats;

struct timespec start,end;

long duration;

int count;

int thread_count=0;

int max_thread=MAX_THREADS;

pthread_t *threads = NULL; // 声明为指针

pthread_t monitor_thread;//监控线程ID

// 线程池监控结构

ThreadPoolMonitor read_msg_monitor;

ThreadPoolMonitor read_file_monitor;

ThreadPoolMonitor send_msg_monitor;

// 线程池定义

static threadpool* read_msg_pool = NULL; // 读取请求线程池

static threadpool* read_file_pool = NULL; // 读取文件线程池

static threadpool* send_msg_pool = NULL; // 发送响应线程池

/* 日志记录函数

* 参数:

* type - 日志类型(ERROR/LOG/FORBIDDEN/NOTFOUND)

* s1 - 主要描述信息

* s2 - 补充信息

* socket_fd - 关联的套接字描述符

*/

// 计算两个时间点之间的时间差(以毫秒为单位)

long long calculate_time_diff(struct timespec start, struct timespec end) {

long long diff_sec = end.tv_sec - start.tv_sec;

long long diff_nsec = end.tv_nsec - start.tv_nsec;

return diff_sec * 1000000 + diff_nsec / 1000;

}

void logger(int type, char *s1, char *s2, int socket_fd) {

int fd;

char logbuffer[BUFSIZE * 2]; // 日志缓冲区

char timebuffer[64];// 用于存储格式化时间字符串

// 获取当前时间(秒和微秒)

struct timeval tv;

gettimeofday(&tv, NULL);

// 将秒部分格式化为字符串(年月日 时分秒)

struct tm *tm_info = localtime(&tv.tv_sec);

strftime(timebuffer, sizeof(timebuffer), "%Y-%m-%d %H:%M:%S", tm_info);

char full_timebuffer[256];

switch (type) {

case ERROR: // 致命错误日志

sprintf(logbuffer, "ERROR: %s:%s Errno=%d exiting pid=%d", s1, s2, errno, getpid());

break;

case FORBIDDEN: // 403响应

// 发送HTTP 403响应头

write(socket_fd,

"HTTP/1.1 403 Forbidden\nContent-Length: 185\nConnection: "

"close\nContent-Type: text/html\n\n<html><head>\n<title>403 "

"Forbidden</title>\n</head><body>\n<h1>Forbidden</h1>\nThe requested URL, "

"file type or operation is not allowed on this simple static file "

"webserver.\n</body></html>\n",

271);

sprintf(logbuffer, "FORBIDDEN: %s:%s", s1, s2);

snprintf(full_timebuffer, sizeof(full_timebuffer), "[%s.%03ld]", timebuffer, tv.tv_usec / 1000);

break;

case NOTFOUND: // 404响应

write(socket_fd,

"HTTP/1.1 404 Not Found\nContent-Length: 136\nConnection: close\nContent-Type: "

"text/html\n\n<html><head>\n<title>404 Not Found</title>\n</head><body>\n<h1>Not "

"Found</h1>\nThe requested URL was not found on this server.\n</body></html>\n",

224);

sprintf(logbuffer, "NOT FOUND: %s:%s", s1, s2);

snprintf(full_timebuffer, sizeof(full_timebuffer), "[%s.%03ld]", timebuffer, tv.tv_usec / 1000);

break;

case LOG: // 普通日志

sprintf(logbuffer, " INFO: %s:%s:%d", s1, s2, socket_fd);

// printf("INFO:[PID:%d] %s:%s:%d\n", getpid(), s1, s2, socket_fd);

snprintf(full_timebuffer, sizeof(full_timebuffer), "[%s.%03ld]", timebuffer, tv.tv_usec / 1000);

break;

case 45:

sprintf(logbuffer,"[%s.%03ld] %s",

timebuffer,tv.tv_usec/1000,s2);

break;

}

// 写入日志文件(追加模式)

if ((fd = open("webserver.log", O_CREAT | O_WRONLY | O_APPEND, 0644)) >= 0) {

write(fd, logbuffer, strlen(logbuffer));

write(fd, "\n", 1);

// write(fd,full_timebuffer,strlen(full_timebuffer));

close(fd);

}

// 遇到致命错误时退出进程

if (type == ERROR || type == NOTFOUND || type == FORBIDDEN) exit(3);

}

void thread_enter_active(ThreadPoolMonitor *monitor,pthread_t tid){

struct timespec now;

clock_gettime(CLOCK_MONOTONIC,&now);

pthread_mutex_lock(&monitor->mutex);

for(int i=0;i<monitor->thread_count;i++)

{

if(monitor->threads[i].tid==tid)

{

printf(" Checking thread #%d: stored tid=%lu, current tid=%lu\n",

i, (unsigned long)monitor->threads[i].tid, (unsigned long)tid);

if(!monitor->threads[i].active){

monitor->threads[i].block_time+=calculate_time_diff(monitor->threads[i].last_time,now);

monitor->total_block_time+=calculate_time_diff(monitor->threads[i].last_time,now);

}

monitor->threads[i].active=1;

monitor->threads[i].last_time=now;

break;

}

}

pthread_mutex_unlock(&monitor->mutex);

}

void thread_enter_block(ThreadPoolMonitor *monitor, pthread_t tid) {

struct timespec now;

clock_gettime(CLOCK_MONOTONIC, &now);

pthread_mutex_lock(&monitor->mutex);

for (int i = 0; i < monitor->thread_count; i++) {

if (monitor->threads[i].tid == tid) {

if (monitor->threads[i].active) {

// 计算活跃时间

monitor->threads[i].active_time += calculate_time_diff(monitor->threads[i].last_time, now);

monitor->total_active_time += calculate_time_diff(monitor->threads[i].last_time, now);

// 更新最高/最低活跃线程数

int current_active = 0;

for (int j = 0; j < monitor->thread_count; j++) {

if (monitor->threads[j].active) current_active++;

}

if (current_active > monitor->max_active)

monitor->max_active = current_active;

if (monitor->min_active == 0 || current_active < monitor->min_active)

monitor->min_active = current_active;

}

monitor->threads[i].active = 0;

monitor->threads[i].last_time = now;

break;

}

}

pthread_mutex_unlock(&monitor->mutex);

}

// 初始化线程池监控

void init_threadpool_monitor(ThreadPoolMonitor *monitor, int thread_count) {

monitor->threads = (ThreadStatus *)malloc(thread_count * sizeof(ThreadStatus));

monitor->thread_count = thread_count;

monitor->max_active = 0;

monitor->min_active = 0;

monitor->total_active_time = 0;

monitor->total_block_time = 0;

pthread_mutex_init(&monitor->mutex, NULL);

struct timespec now;

clock_gettime(CLOCK_MONOTONIC, &now);

for (int i = 0; i < thread_count; i++) {

monitor->threads[i].tid = 0;

monitor->threads[i].active = 0;

monitor->threads[i].active_time = 0;

monitor->threads[i].block_time = 0;

monitor->threads[i].last_time = now;

}

}

// 更新线程ID

void update_thread_id(ThreadPoolMonitor *monitor, pthread_t tid, int index) {

pthread_mutex_lock(&monitor->mutex);

if (index < monitor->thread_count) {

printf("UPDATE: Thread #%d set to tid=%lu\n", index, (unsigned long)tid);

monitor->threads[index].tid = tid; // 存储实际线程ID

}

pthread_mutex_unlock(&monitor->mutex);

}

// 性能数据收集线程

void* performance_monitor(void* arg) {

while (server_running) {

sleep(MONITOR_INTERVAL);

// 收集线程池状态

pthread_mutex_lock(&read_msg_monitor.mutex);

pthread_mutex_lock(&read_file_monitor.mutex);

pthread_mutex_lock(&send_msg_monitor.mutex);

// 计算当前活跃线程数

int read_msg_active = 0;

int read_file_active = 0;

int send_msg_active = 0;

for (int i = 0; i < read_msg_monitor.thread_count; i++) {

if (read_msg_monitor.threads[i].active) read_msg_active++;

}

for (int i = 0; i < read_file_monitor.thread_count; i++) {

if (read_file_monitor.threads[i].active) read_file_active++;

}

for (int i = 0; i < send_msg_monitor.thread_count; i++) {

if (send_msg_monitor.threads[i].active) send_msg_active++;

}

// 计算平均活跃时间和阻塞时间

long long read_msg_avg_active = 0;

long long read_msg_avg_block = 0;

long long read_file_avg_active = 0;

long long read_file_avg_block = 0;

long long send_msg_avg_active = 0;

long long send_msg_avg_block = 0;

if (read_msg_monitor.thread_count > 0) {

read_msg_avg_active = read_msg_monitor.total_active_time / read_msg_monitor.thread_count;

read_msg_avg_block = read_msg_monitor.total_block_time / read_msg_monitor.thread_count;

}

if (read_file_monitor.thread_count > 0) {

read_file_avg_active = read_file_monitor.total_active_time / read_file_monitor.thread_count;

read_file_avg_block = read_file_monitor.total_block_time / read_file_monitor.thread_count;

}

if (send_msg_monitor.thread_count > 0) {

send_msg_avg_active = send_msg_monitor.total_active_time / send_msg_monitor.thread_count;

send_msg_avg_block = send_msg_monitor.total_block_time / send_msg_monitor.thread_count;

}

// 打印性能数据

printf("\n===== 服务器性能监控 [%d秒间隔] =====\n", MONITOR_INTERVAL);

printf("\n读取请求线程池:\n");

printf(" 当前活跃线程: %d/%d\n", read_msg_active, read_msg_monitor.thread_count);

printf(" 最高活跃线程: %d\n", read_msg_monitor.max_active);

printf(" 最低活跃线程: %d\n", read_msg_monitor.min_active);

printf(" 平均活跃时间: %lld μs\n", read_msg_avg_active);

printf(" 平均阻塞时间: %lld μs\n", read_msg_avg_block);

printf(" 消息队列长度: %d\n", read_msg_pool->queue.len);

printf("\n读取文件线程池:\n");

printf(" 当前活跃线程: %d/%d\n", read_file_active, read_file_monitor.thread_count);

printf(" 最高活跃线程: %d\n", read_file_monitor.max_active);

printf(" 最低活跃线程: %d\n", read_file_monitor.min_active);

printf(" 平均活跃时间: %lld μs\n", read_file_avg_active);

printf(" 平均阻塞时间: %lld μs\n", read_file_avg_block);

printf(" 消息队列长度: %d\n", read_file_pool->queue.len);

printf("\n发送响应线程池:\n");

printf(" 当前活跃线程: %d/%d\n", send_msg_active, send_msg_monitor.thread_count);

printf(" 最高活跃线程: %d\n", send_msg_monitor.max_active);

printf(" 最低活跃线程: %d\n", send_msg_monitor.min_active);

printf(" 平均活跃时间: %lld μs\n", send_msg_avg_active);

printf(" 平均阻塞时间: %lld μs\n", send_msg_avg_block);

printf(" 消息队列长度: %d\n", send_msg_pool->queue.len);

printf("\n====================================\n");

pthread_mutex_unlock(&send_msg_monitor.mutex);

pthread_mutex_unlock(&read_file_monitor.mutex);

pthread_mutex_unlock(&read_msg_monitor.mutex);

}

return NULL;

}

void readMsgThread(void *arg) {

thread_data *args = (thread_data *)arg;

int fd = args->fd;

char buffer[BUFSIZE + 1];

long ret;

struct timespec start_time, end_time;

long long read_socket_time = 0;

long long write_log_time = 0;

long i;

clock_gettime(CLOCK_MONOTONIC, &start_time);

ret = read(fd, buffer, BUFSIZE);

clock_gettime(CLOCK_MONOTONIC, &end_time);

read_socket_time += calculate_time_diff(start_time, end_time);

if (ret == 0 || ret == -1) { // 读取失败处理

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(FORBIDDEN, "failed to read browser request", "", fd);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

pthread_mutex_lock(&stats.mutex);

stats.total_read_socket_time += read_socket_time;

stats.total_write_log_time += write_log_time;

pthread_mutex_unlock(&stats.mutex);

close(fd);

free(args);

return;

}

buffer[ret] = 0; // 确保字符串终止

// 替换换行符用于安全日志记录

for (i = 0; i < ret; i++)

if (buffer[i] == '\r' || buffer[i] == '\n') buffer[i] = '*';

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(LOG, "request", "", args->thread_idx); // 记录请求日志

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

// 验证请求方法是否为GET

if (strncmp(buffer, "GET ", 4) && strncmp(buffer, "get ", 4)) {

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(FORBIDDEN, "Only simple GET operation supported", buffer, fd);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

pthread_mutex_lock(&stats.mutex);

stats.total_read_socket_time += read_socket_time;

stats.total_write_log_time += write_log_time;

pthread_mutex_unlock(&stats.mutex);

close(fd);

free(args);

return;

}

// 提取请求URI(定位第一个空格后的第二个空格)

for (i = 4; i < BUFSIZE; i++) {

if (buffer[i] == ' ') {

buffer[i] = 0;

break;

}

}

// 路径遍历攻击防护(检查..)

for (int j = 0; j < i - 1; j++)

if (buffer[j] == '.' && buffer[j + 1] == '.') {

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(FORBIDDEN, "Parent directory (..) path names not supported", buffer, fd);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

pthread_mutex_lock(&stats.mutex);

stats.total_read_socket_time += read_socket_time;

stats.total_write_log_time += write_log_time;

pthread_mutex_unlock(&stats.mutex);

close(fd);

free(args);

return;

}

// 处理根路径请求(重定向到index.html)

if (!strncmp(&buffer[0], "GET /\0", 6) || !strncmp(&buffer[0], "get /\0", 6))

strcpy(buffer, "GET /index.html");

// 确定文件扩展名对应的MIME类型

long buflen = strlen(buffer);

char *fstr = 0;

for (long i = 0; extensions[i].ext != 0; i++) {

long len = strlen(extensions[i].ext);

if (!strncmp(&buffer[buflen - len], extensions[i].ext, len)) {

fstr = extensions[i].filetype;

break;

}

}

if (!fstr) {

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(FORBIDDEN, "file extension type not supported", buffer, fd);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

pthread_mutex_lock(&stats.mutex);

stats.total_read_socket_time += read_socket_time;

stats.total_write_log_time += write_log_time;

pthread_mutex_unlock(&stats.mutex);

close(fd);

free(args);

return;

}

// 构造 FilenameMsg 并发送到 filename queue

FilenameMsg *filenameMsg = (FilenameMsg *)malloc(sizeof(FilenameMsg));

filenameMsg->fd = fd;

strcpy(filenameMsg->filename, &buffer[5]); // 跳过 "GET "

strcpy(filenameMsg->filetype, fstr);

task *new_task = (task *)malloc(sizeof(task));

new_task->function = (void (*)(void*))readFileThread;

new_task->arg = filenameMsg;

addTaskToThreadPool(read_file_pool, new_task);

thread_enter_block(&read_msg_monitor, pthread_self());

pthread_mutex_lock(&stats.mutex);

stats.total_read_socket_time += read_socket_time;

stats.total_write_log_time += write_log_time;

pthread_mutex_unlock(&stats.mutex);

free(args);

}

void readFileThread(void *arg) {

FilenameMsg *filenameMsg = (FilenameMsg*)arg;

int fd = filenameMsg->fd;

char *filename = filenameMsg->filename;

char *filetype = filenameMsg->filetype;

int file_fd;

long len;

char buffer[BUFSIZE];

struct timespec start_time, end_time;

long long read_file_time = 0;

long long write_log_time = 0;

clock_gettime(CLOCK_MONOTONIC, &start_time);

file_fd = open(filename, O_RDONLY); // 跳过"GET "后的/

clock_gettime(CLOCK_MONOTONIC, &end_time);

read_file_time += calculate_time_diff(start_time, end_time);

if (file_fd == -1) {

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(NOTFOUND, "failed to open file", filename, fd);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

pthread_mutex_lock(&stats.mutex);

stats.total_read_file_time += read_file_time;

stats.total_write_log_time += write_log_time;

pthread_mutex_unlock(&stats.mutex);

close(fd);

free(filenameMsg);

return;

}

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(LOG, "SEND", filename, fd);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

// 获取文件大小

len = (long)lseek(file_fd, (off_t)0, SEEK_END); // 获取文件大小

lseek(file_fd, (off_t)0, SEEK_SET); // 重置文件指针

// 分块读取文件内容

memset(buffer, 0, sizeof(buffer));

clock_gettime(CLOCK_MONOTONIC, &start_time);

long ret = read(file_fd, buffer, BUFSIZE);

clock_gettime(CLOCK_MONOTONIC, &end_time);

read_file_time += calculate_time_diff(start_time, end_time);

if (ret <= 0) {

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(ERROR, "failed to read file", filename, fd);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

pthread_mutex_lock(&stats.mutex);

stats.total_read_file_time += read_file_time;

stats.total_write_log_time += write_log_time;

pthread_mutex_unlock(&stats.mutex);

close(file_fd);

close(fd);

free(filenameMsg);

return;

}

// 构造 Msg 并发送到 msg queue

Msg *msg = (Msg*)malloc(sizeof(Msg));

msg->fd = fd;

msg->len = len;

memcpy(msg->content, buffer, ret);

strcpy(msg->filetype, filetype);

task *new_task = (task *)malloc(sizeof(task));

new_task->function = (void (*)(void*))sendMsgThread;

new_task->arg = msg;

addTaskToThreadPool(send_msg_pool, new_task);

thread_enter_block(&read_file_monitor, pthread_self());

pthread_mutex_lock(&stats.mutex);

stats.total_read_file_time += read_file_time;

stats.total_write_log_time += write_log_time;

pthread_mutex_unlock(&stats.mutex);

close(file_fd);

free(filenameMsg);

}

void sendMsgThread(void *arg) {

Msg *msg = (Msg*)arg;

int fd = msg->fd;

long len = msg->len;

char *content = msg->content;

char *filetype = msg->filetype;

char buffer[BUFSIZE];

struct timespec start_time, end_time;

long long write_socket_time = 0;

long long write_log_time = 0;

struct timespec total_start_time, total_end_time;

long long total_processing_time = 0;

clock_gettime(CLOCK_MONOTONIC, &total_start_time);

size_t max_len = BUFSIZE - 1; // 留出字符串结束符的空间

// 构造HTTP响应头

int bytes_written = snprintf(buffer, max_len,

"HTTP/1.1 200 OK\nServer: nweb/%d.0\nContent-Length: %ld\nConnection: "

"close\nContent-Type: %s\n\n",

VERSION, len, filetype);

// 检查是否截断

if (bytes_written >= max_len) {

// 日志记录或错误处理

logger(ERROR, "HTTP header too large", "Truncated", fd);

}

// 发送响应头

write(fd, buffer, strlen(buffer));

// 记录响应头日志

clock_gettime(CLOCK_MONOTONIC, &start_time);

logger(LOG, "Header", buffer, fd);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_log_time += calculate_time_diff(start_time, end_time);

clock_gettime(CLOCK_MONOTONIC, &start_time);

write(fd, buffer, strlen(buffer));

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_socket_time += calculate_time_diff(start_time, end_time);

// 发送文件内容

clock_gettime(CLOCK_MONOTONIC, &start_time);

write(fd, content, len);

clock_gettime(CLOCK_MONOTONIC, &end_time);

write_socket_time += calculate_time_diff(start_time, end_time);

clock_gettime(CLOCK_MONOTONIC, &total_end_time);

total_processing_time += calculate_time_diff(total_start_time, total_end_time);

pthread_mutex_lock(&stats.mutex);

stats.total_write_socket_time += write_socket_time;

stats.total_write_log_time += write_log_time;

stats.total_processing_time += total_processing_time;

stats.child_count++;

pthread_mutex_unlock(&stats.mutex);

close(fd);

free(msg);

}

// 清理资源函数

void cleanup_resources() {

server_running=0;

pthread_join(monitor_thread,NULL);

waitThreadPool(read_msg_pool);

waitThreadPool(read_file_pool);

waitThreadPool(send_msg_pool);

destroyThreadPool(read_msg_pool);

destroyThreadPool(read_file_pool);

destroyThreadPool(send_msg_pool);

free(read_msg_monitor.threads);

free(read_file_monitor.threads);

free(send_msg_monitor.threads);

pthread_mutex_destroy(&stats.mutex);

pthread_mutex_destroy(&read_msg_monitor.mutex);

pthread_mutex_destroy(&read_file_monitor.mutex);

pthread_mutex_destroy(&send_msg_monitor.mutex);

pthread_mutex_destroy(&send_msg_monitor.mutex);

}

/* 主函数

* 参数:

* argc - 参数个数

* argv - 参数数组([端口号] [根目录])

*/

// 信号处理函数(处理Ctrl+C)

void sigint_handler(int sig) {

printf("\nReceived SIGINT (Ctrl+C), shutting down...\n");

server_running = 0; // 停止主循环

//等待监控线程结束

pthread_join(monitor_thread,NULL);

// 等待线程池中的所有任务完成

waitThreadPool(read_msg_pool); // 确保所有任务已处理完毕

waitThreadPool(read_file_pool);

waitThreadPool(send_msg_pool);

pthread_mutex_lock(&stats.mutex);

int total_requests = stats.child_count; // 获取总请求数

if (total_requests == 0) {

printf("No requests processed.\n");

pthread_mutex_unlock(&stats.mutex);

cleanup_resources();

exit(0);

}

// 计算各项总耗时和平均值(注意单位为微秒)

long long total_processing = stats.total_processing_time;

long long avg_processing = total_processing / total_requests;

long long avg_read_socket = (stats.total_read_socket_time) / total_requests;

long long avg_write_socket = (stats.total_write_socket_time) / total_requests;

long long avg_read_file = (stats.total_read_file_time) / total_requests;

long long avg_write_log = (stats.total_write_log_time) / total_requests;

// 输出统计结果

printf("\n===== 服务器性能统计 =====\n");

printf("总处理请求数: %d\n", total_requests);

printf("总处理时间: %lld μs\n", total_processing);

printf("平均请求处理时间: %lld μs/请求\n", avg_processing);

printf("------------------------\n");

printf("Socket读取平均耗时: %lld μs/请求\n", avg_read_socket);

printf("Socket写入平均耗时: %lld μs/请求\n", avg_write_socket);

printf("文件读取平均耗时: %lld μs/请求\n", avg_read_file);

printf("日志写入平均耗时: %lld μs/请求\n", avg_write_log);

printf("==========================\n");

pthread_mutex_unlock(&stats.mutex);

// 清理资源(线程/共享内存等)

cleanup_resources();

exit(0);

}

int main(int argc, char **argv) {

int port, listenfd, socketfd, hit;

socklen_t length;

static struct sockaddr_in cli_addr; // 客户端地址

static struct sockaddr_in serv_addr; // 服务器地址

pthread_mutex_init(&stats.mutex,NULL);

pthread_mutex_init(&io_stats_lock,NULL);

init_threadpool_monitor(&read_msg_monitor,20);

init_threadpool_monitor(&read_file_monitor,50);

init_threadpool_monitor(&send_msg_monitor,20);

read_msg_pool=initThreadPool(20,&read_msg_monitor);

read_file_pool=initThreadPool(50,&read_file_monitor);

send_msg_pool=initThreadPool(20,&send_msg_monitor);

if(pthread_create(&monitor_thread,NULL,performance_monitor,NULL)!=0)

{

logger(ERROR,"Failed to create performance monitor thread","",0);

return 1;

}

if(!(threads=malloc(max_thread*sizeof(pthread_t)))){

perror("内存分配失败");

return 1;

}

if (!read_msg_pool||!read_file_pool||!send_msg_pool) {

perror("Failed to initialize thread pool");

return 1;

}

//注册SIGINT处理函数

signal(SIGINT,sigint_handler);

// 参数验证

if (argc < 3 || argc > 3 || !strcmp(argv[1], "-?")) {

printf("用法:nweb 端口号 根目录\n");

exit(0);

}

// 禁止使用系统关键目录

if (!strncmp(argv[2], "/", 2) || !strncmp(argv[2], "/etc", 5) || !strncmp(argv[2], "/bin", 5) ||

!strncmp(argv[2], "/lib", 5) || !strncmp(argv[2], "/tmp", 5) ||

!strncmp(argv[2], "/usr", 5) || !strncmp(argv[2], "/dev", 5) ||

!strncmp(argv[2], "/sbin", 6)) {

printf("错误:禁止使用系统目录:%s\n", argv[2]);

exit(3);

}

// 切换工作目录

if (chdir(argv[2]) == -1) {

printf("错误:无法切换到目录:%s\n", argv[2]);

exit(4);

}

logger(LOG, "webserver启动", argv[1], getpid());

// 创建监听套接字

if ((listenfd = socket(AF_INET, SOCK_STREAM, 0)) < 0)

logger(ERROR, "socket系统调用失败", "", 0);

// 设置端口号

port = atoi(argv[1]);

if (port < 0 || port > 60000) logger(ERROR, "非法端口号(有效范围1-60000)", argv[1], 0);

// 配置服务器地址

serv_addr.sin_family = AF_INET;

serv_addr.sin_addr.s_addr = htonl(INADDR_ANY); // 监听所有接口

serv_addr.sin_port = htons(port);

// 绑定端口

if (bind(listenfd, (struct sockaddr *)&serv_addr, sizeof(serv_addr)) < 0)

logger(ERROR, "bind系统调用失败", "", 0);

// 开始监听

if (listen(listenfd, 64) < 0) logger(ERROR, "listen系统调用失败", "", 0);

// 主服务循环

for (hit = 1;; hit++) {

length = sizeof(cli_addr);

// 接受新连接(使用 socketfd 而非 connfd)

if ((socketfd = accept(listenfd, (struct sockaddr *)&cli_addr, &length)) < 0)

logger(ERROR, "accept系统调用失败", "", 0);

// 创建任务参数(使用 thread_data 而非 TaskArgs)

thread_data *args = (thread_data *)malloc(sizeof(thread_data));

if (!args) {

close(socketfd); // 修正为 socketfd

continue;

}

args->fd = socketfd; // 修正为 socketfd

// 获取线程索引(使用互斥锁保护)

pthread_mutex_lock(&stats.mutex);

args->thread_idx = stats.child_count++;

pthread_mutex_unlock(&stats.mutex);

// 创建任务并提交到线程池

task *new_task = (task *)malloc(sizeof(task));

if (!new_task) {

free(args);

close(socketfd); // 修正为 socketfd

continue;

}

new_task->function = (void (*)(void*))readMsgThread; // 显式类型转换

new_task->arg = args;

addTaskToThreadPool(read_msg_pool, new_task);

printf("Added task for FD %d to thread pool\n", socketfd);

}

return 0;

}我的线程能够正常运行,然后服务器性能监控运行之后,线程就运行不了了,请问为什么,我怀疑是锁的原因

最新发布

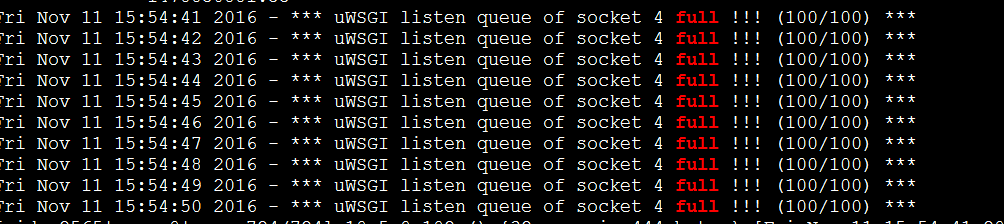

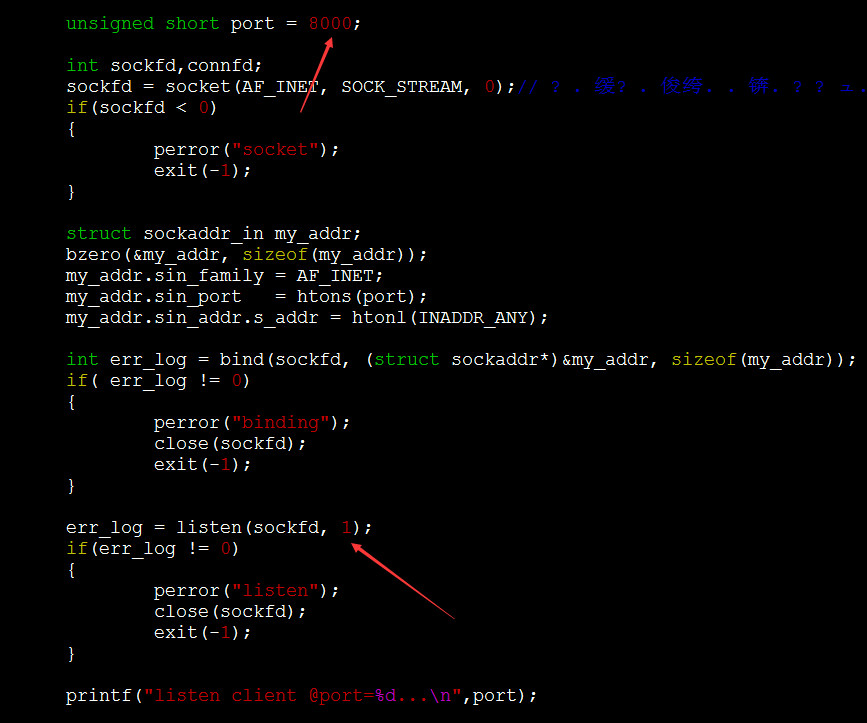

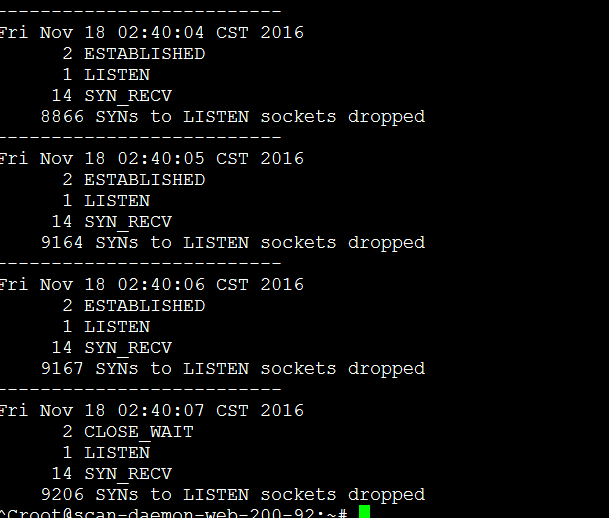

本文针对线上服务器偶尔出现的 uWSGI listen queue full 的问题进行了深入分析。通过对现象的复现及 TCP 状态跟踪,最终定位到部分 API 处理速度慢是根本原因。并给出了增加 listen 队列长度、使用 UNIX Domain Socket 和增加负载均衡等解决方案。

本文针对线上服务器偶尔出现的 uWSGI listen queue full 的问题进行了深入分析。通过对现象的复现及 TCP 状态跟踪,最终定位到部分 API 处理速度慢是根本原因。并给出了增加 listen 队列长度、使用 UNIX Domain Socket 和增加负载均衡等解决方案。

2186

2186

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?