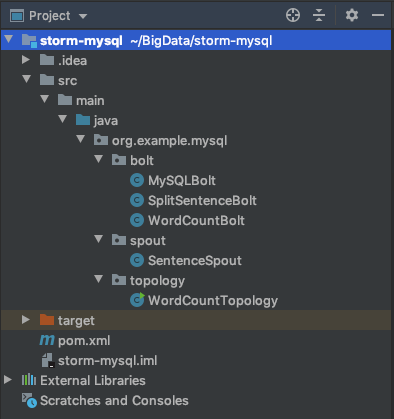

本示例用于演示将Bolt中的数据保存到MySQL数据库中。

1. pom.xml

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-jdbc</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.19</version>

</dependency>

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-core</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.12</version>

</dependency>

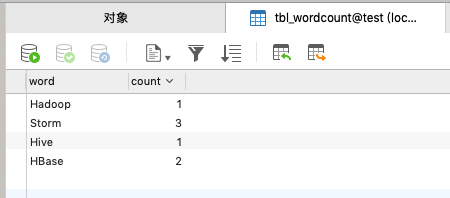

2. test.tbl_wordcount

CREATE TABLE `tbl_wordcount` (

`word` varchar(255) DEFAULT NULL,

`count` bigint(20) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

3. SentenceSpout

public class SentenceSpout extends BaseRichSpout {

private SpoutOutputCollector collector;

private List<String> sentenceList = Arrays.asList(

"Hadoop,Storm,Hive,HBase",

"Storm,HBase,Storm"

);

private Integer index = 0;

@Override

public void open(Map<String, Object> conf, TopologyContext context, SpoutOutputCollector collector) {

this.collector = collector;

}

/**

* Storm将会循环调用该方法

*/

@Override

public void nextTuple() {

if (index < sentenceList.size()) {

final String sentence = sentenceList.get(index);

// 发射时需要指定 消息id

collector.emit(new Values(sentence), index);

index++;

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("sentence"));

}

}

4. SplitSentenceBolt

/**

* 将句子分隔成单词

*/

@Slf4j

public class SplitSentenceBolt extends BaseRichBolt {

private OutputCollector collector;

@Override

public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

@Override

public void execute(Tuple input) {

try {

String sentence = input.getStringByField("sentence");

String[] words = sentence.split(",");

// 将每个单词流向到下一个Bolt

for (String word : words) {

// 发射时携带发射过来的input

collector.emit(input, new Values(word));

}

// 处理成功了给当前tuple做一个成功的标记,调用上游的ack方法

collector.ack(input);

} catch (Exception e) {

log.error("SplitSentenceBolt#execute exception", e);

// 异常做一个失败的标记,调用上游的fail方法

collector.fail(input);

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

5. WordCountBolt

public class WordCountBolt extends BaseRichBolt {

private OutputCollector collector;

private Map<String, Long> wordCountMap = null;

/**

* 大部分示例变量通常在prepare中进行实例化

* @param topoConf

* @param context

* @param collector

*/

@Override

public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

this.wordCountMap = new HashMap<>();

}

@Override

public void execute(Tuple input) {

String word = input.getStringByField("word");

Long count = wordCountMap.get(word);

if (count == null) {

count = 0L;

}

count++;

wordCountMap.put(word, count);

collector.emit(new Values(word, count));

collector.ack(input);

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word", "count"));

}

}

6. MySQLBolt

public class MySQLBolt extends BaseRichBolt {

private JdbcClient jdbcClient;

@Override

public void prepare(Map<String, Object> map, TopologyContext topologyContext, OutputCollector outputCollector) {

Map hikariConfigMap = new HashMap();

hikariConfigMap.put("dataSourceClassName","com.mysql.cj.jdbc.MysqlDataSource");

hikariConfigMap.put("dataSource.url", "jdbc:mysql://localhost/test?useUnicode=true&characterEncoding=UTF-8");

hikariConfigMap.put("dataSource.user","root");

hikariConfigMap.put("dataSource.password","root123");

ConnectionProvider connectionProvider = new HikariCPConnectionProvider(hikariConfigMap);

connectionProvider.prepare();

jdbcClient = new JdbcClient(connectionProvider, 30);

}

@Override

public void execute(Tuple tuple) {

String word = tuple.getStringByField("word");

Long count = tuple.getLongByField("count");

List list = new ArrayList();

list.add(new Column("word", word, Types.VARCHAR));

List<List> select = jdbcClient.select("select word from tbl_wordcount where word = ?", list);

long num = select.stream().count();

if (num >= 1) {

jdbcClient.executeSql("update tbl_wordcount set count = "+count+" where word = '"+word+"'");

} else {

jdbcClient.executeSql("insert into tbl_wordcount values('" + word +"'," + count + ")");

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {

}

}

7. WordCountTopology

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.StormSubmitter;

import org.apache.storm.generated.StormTopology;

import org.apache.storm.topology.TopologyBuilder;

import org.example.mysql.bolt.MySQLBolt;

import org.example.mysql.bolt.SplitSentenceBolt;

import org.example.mysql.bolt.WordCountBolt;

import org.example.mysql.spout.SentenceSpout;

public class WordCountTopology {

public static void main(String[] args) throws Exception {

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("spout", new SentenceSpout());

builder.setBolt("split-bolt", new SplitSentenceBolt()).shuffleGrouping("spout");

builder.setBolt("word-count-bolt", new WordCountBolt()).shuffleGrouping("split-bolt");

builder.setBolt("jdbc-bolt", new MySQLBolt()).globalGrouping("word-count-bolt");

StormTopology topology = builder.createTopology();

// 提交拓扑

Config config = new Config();

config.setDebug(true);

if (args == null || args.length == 0) {

// 本地模式

config.setDebug(true);

LocalCluster cluster = new LocalCluster();

cluster.submitTopology("WordCountTopology", config, topology);

} else {

// 集群模式

config.setNumWorkers(2);

StormSubmitter.submitTopology(args[0],config,builder.createTopology());

}

}

}

6229

6229

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?