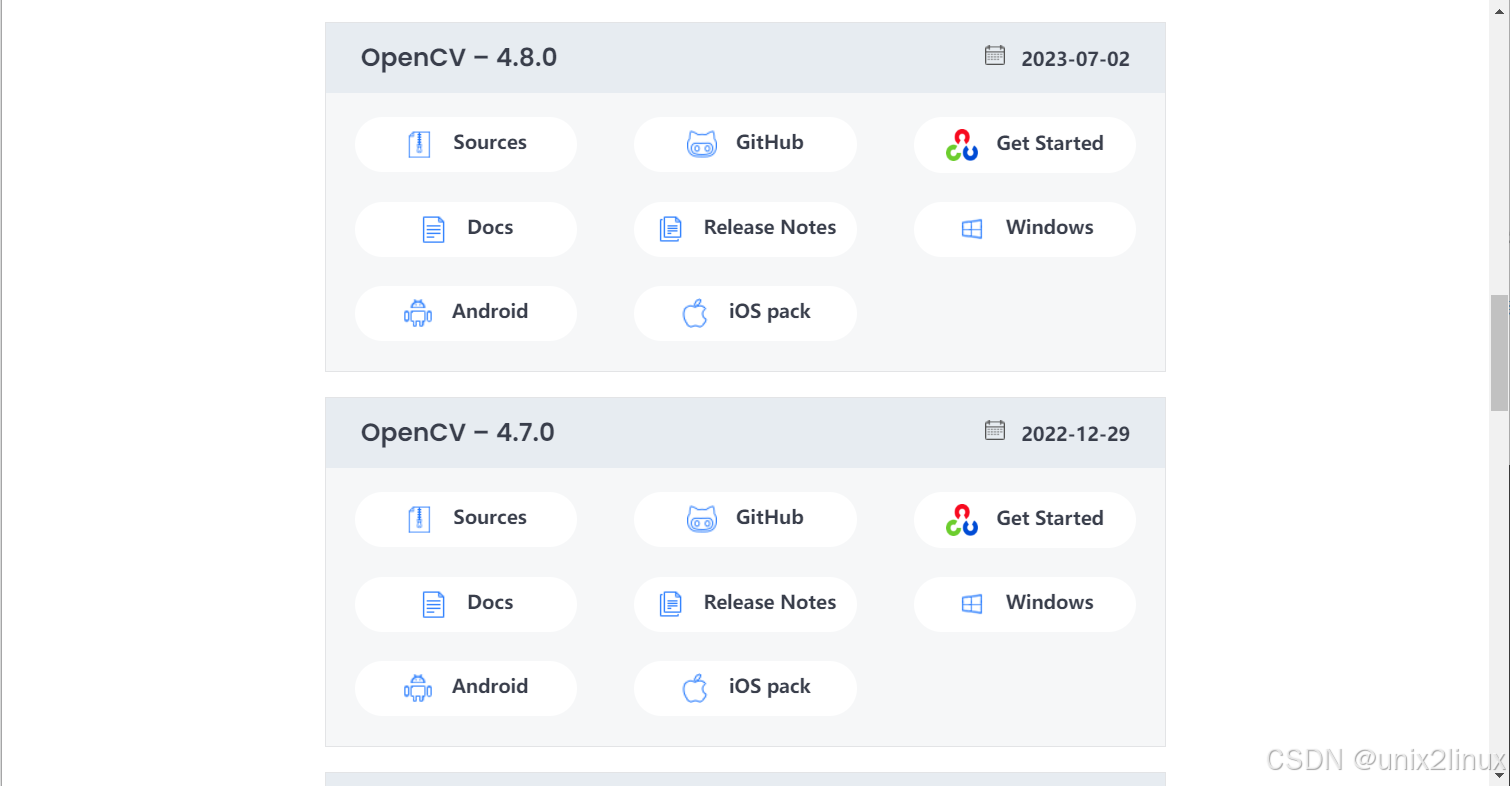

OpenCV-4.8.0

https://opencv.org/releases/

(alpha) D:\Deploy>wget https://cyfuture.dl.sourceforge.net/project/opencvlibrary/4.8.0/opencv-4.8.0-windows.exe?viasf=1

--2025-02-02 13:40:22-- https://cyfuture.dl.sourceforge.net/project/opencvlibrary/4.8.0/opencv-4.8.0-windows.exe?viasf=1

Resolving cyfuture.dl.sourceforge.net (cyfuture.dl.sourceforge.net)... 49.50.119.27

Connecting to cyfuture.dl.sourceforge.net (cyfuture.dl.sourceforge.net)|49.50.119.27|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 176191149 (168M) [application/octet-stream]

Saving to: 'opencv-4.8.0-windows.exe@viasf=1'

opencv-4.8.0-windows.exe@vias 100%[=================================================>] 168.03M 113KB/s in 19m 58s

2025-02-02 14:00:22 (144 KB/s) - 'opencv-4.8.0-windows.exe@viasf=1' saved [176191149/176191149]

include & lib & path

D:\Deploy\opencv-4.8.0\build\include

└─ opencv2

D:\Deploy\opencv-4.8.0\build\x64\vc16\lib

│ opencv_world480.lib

│ opencv_world480d.lib

└─ ...

D:\Deploy\opencv-4.8.0\build\x64\vc16\bin

│ opencv_world480.dll

│ opencv_world480d.dll

└─ ...

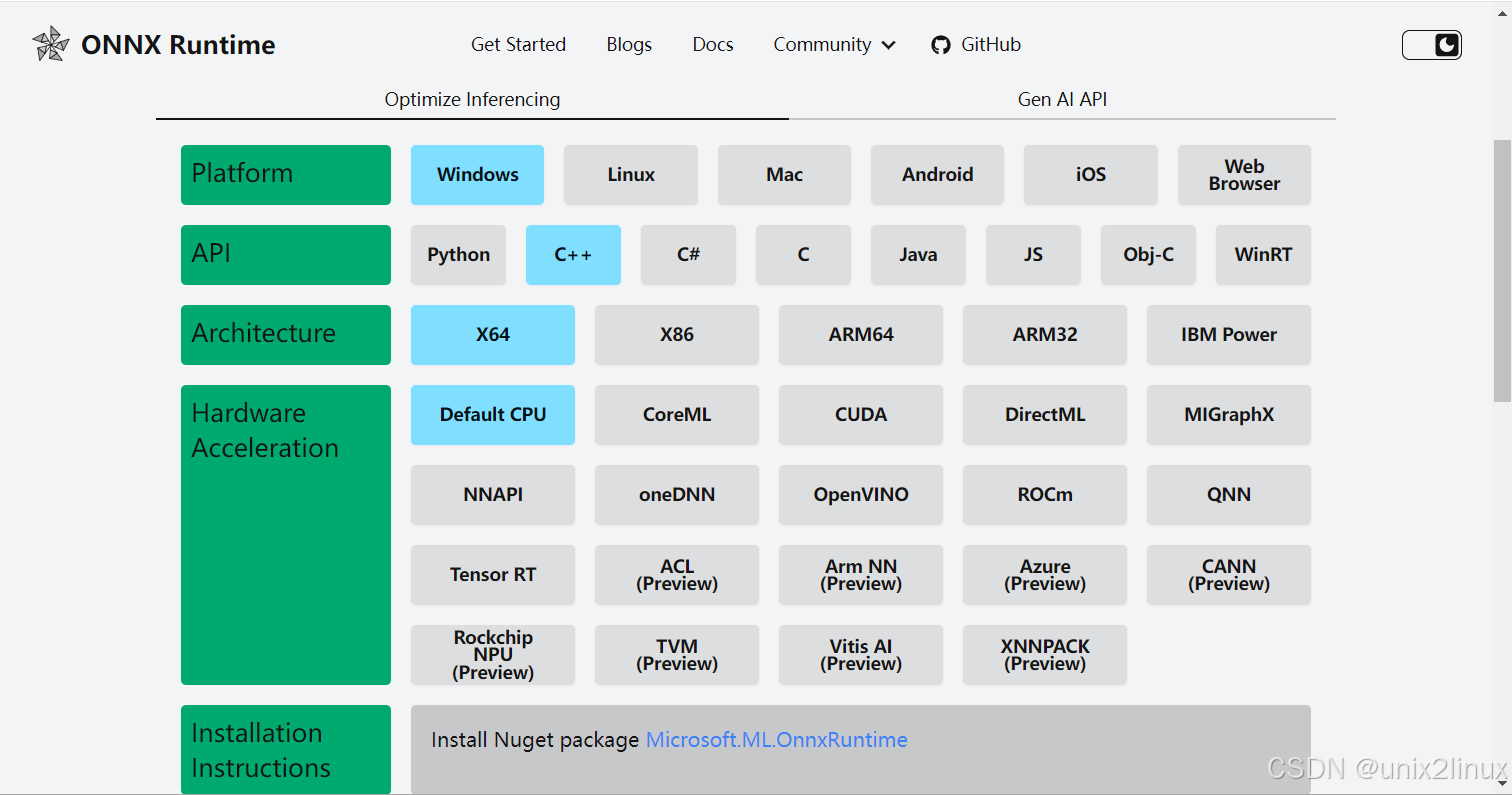

ONNX Runtime 1.20.1

https://onnxruntime.ai/getting-started

⇒ [ Install Nuget package Microsoft.ML.OnnxRuntime ]

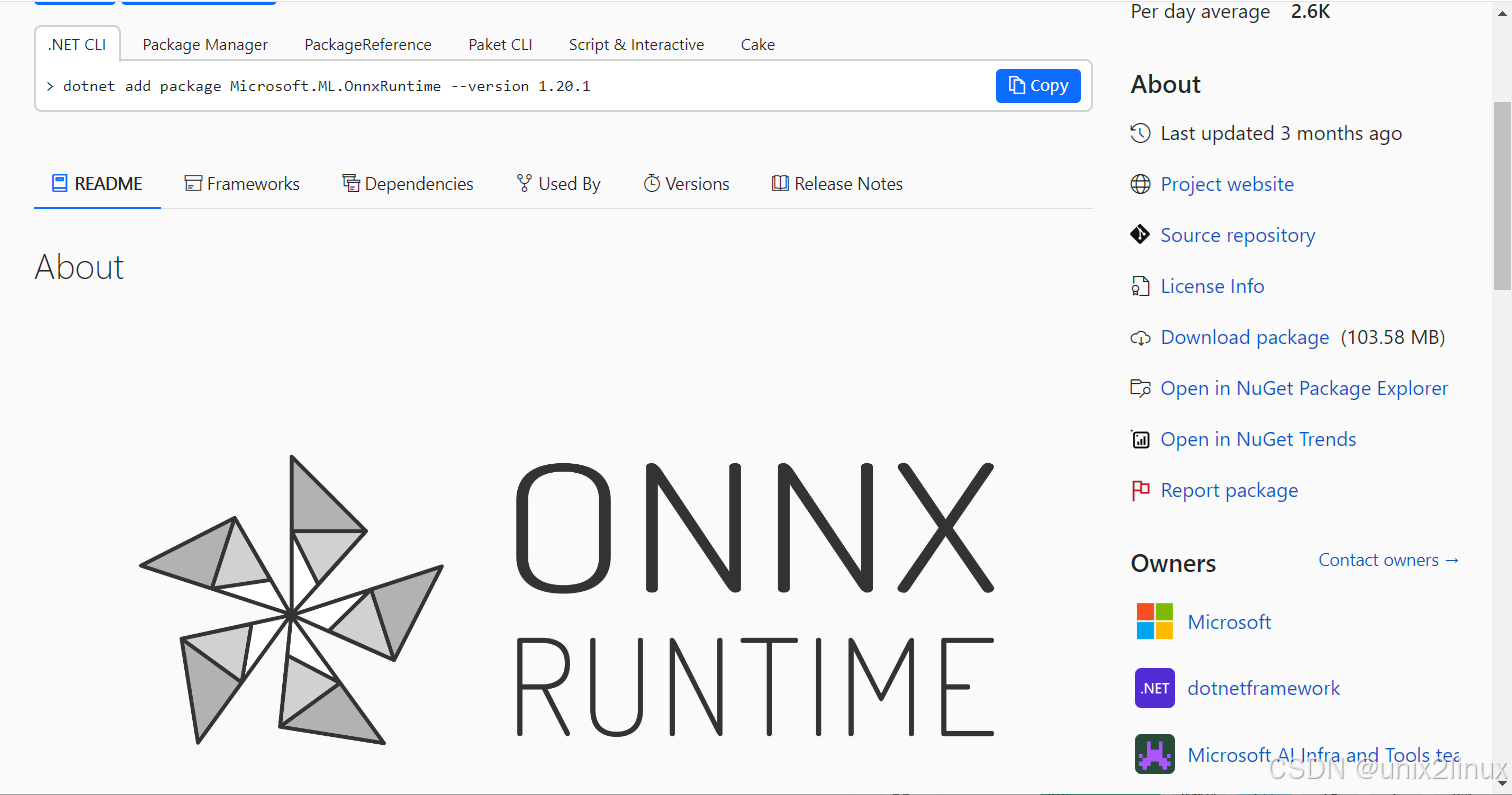

https://www.nuget.org/packages/Microsoft.ML.OnnxRuntime

⇒ [ Download package (103.58 MB) ]

include & lib & path

D:\Deploy\microsoft.ml.onnxruntime.1.20.1\build\native\include

D:\Deploy\microsoft.ml.onnxruntime.1.20.1\runtimes\win-x64\native

onnxruntime.lib;onnxruntime_providers_shared.lib

PATH=D:\Deploy\microsoft.ml.onnxruntime.1.20.1\runtimes\win-x64\native;%PATH%

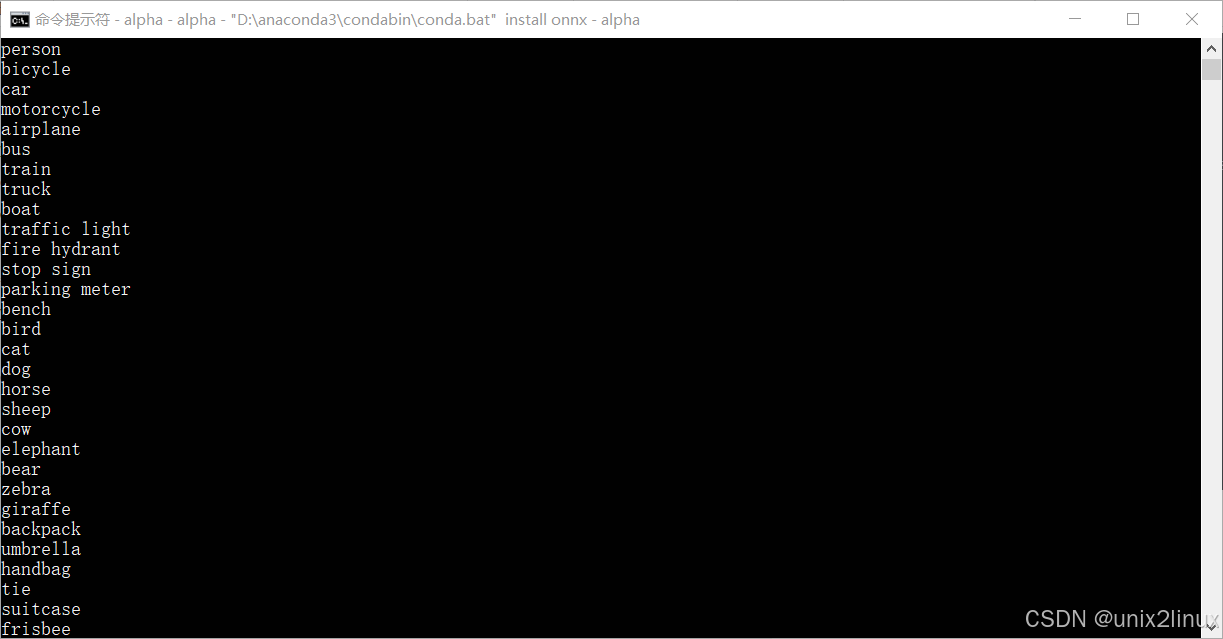

Export classes from YOLOv5s to txt

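import torch

checkFile = 'yolov5s.pt'

def listClasses(modelFile=checkFile):
    # weights_only=False unpickles the whole checkpoint, so only load files you trust.
    model = torch.load(modelFile, weights_only=False, map_location='cpu')
    for key in model:
        node = model[key]
        subkey = 'names'
        if hasattr(node, subkey):
            names = node.names
            # names may be a list or an {index: name} dict, depending on the YOLOv5 version.
            for one in (names.values() if isinstance(names, dict) else names):
                print(one)

if __name__ == '__main__':
    # Prints one class name per line; redirect stdout to a file to produce yolov5s.txt.
    listClasses('yolov5s.pt')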

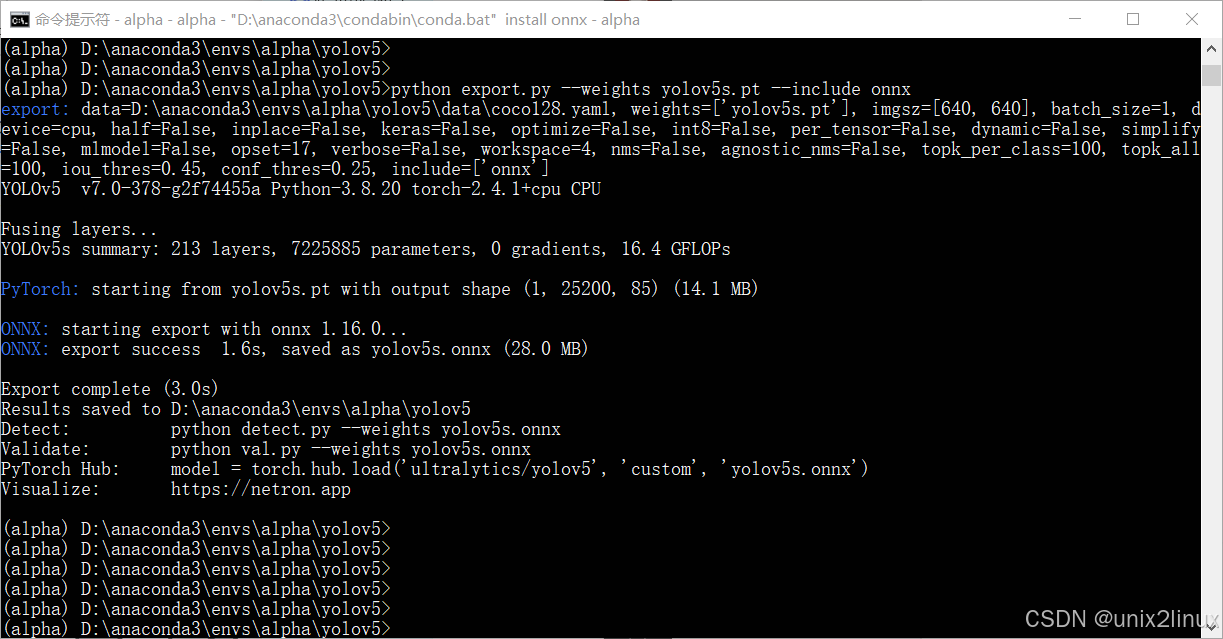

Export model from YOLOv5s to ONNX

python export.py --weights yolov5s.pt --include onnx

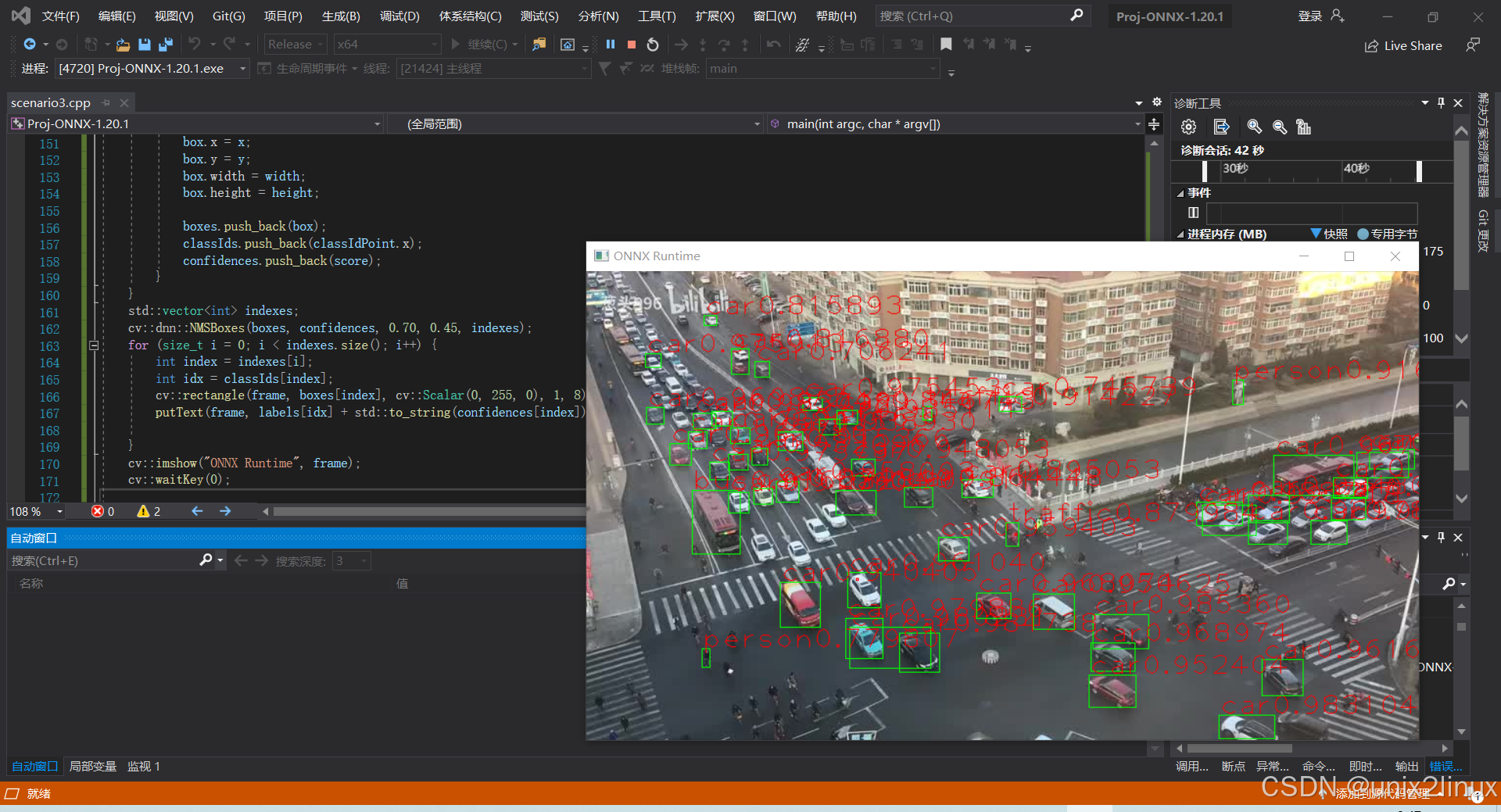

scenario3.cpp

#include <onnxruntime_cxx_api.h>
#include <opencv2/opencv.hpp>
#include <algorithm>
#include <array>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

using namespace std; // every identifier of the C++ standard library is defined in the namespace std
using namespace cv;

// Read one class name per line, skipping empty lines.
std::vector<std::string> readClassNames(const std::string& labels_txt_file)
{
    std::vector<std::string> classNames;
    std::ifstream fp(labels_txt_file);
    if (!fp.is_open()) {
        std::cerr << "could not open file: " << labels_txt_file << std::endl;
        exit(-1);
    }
    std::string name;
    // Loop on getline itself so that a failed read never appends a stale line.
    while (std::getline(fp, name)) {
        if (!name.empty())
            classNames.push_back(name);
    }
    return classNames;
}

int main(int argc, char* argv[]) {
    Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "runtime");
    Ort::SessionOptions session_options;
    std::wstring model_path = L"D:\\Deploy\\scenario\\models\\yolov5s.onnx";

    // Use 4 threads to parallelize execution within a single operator
    session_options.SetIntraOpNumThreads(4);
    // Enable all available graph optimizations
    session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);
    Ort::Session session(env, model_path.c_str(), session_options);

    // Load the labels
    std::string label_path = "D:\\Deploy\\scenario\\models\\yolov5s.txt";
    std::vector<std::string> labels = readClassNames(label_path);

    // Load the image
    std::string image_path = "D:\\Deploy\\scenario\\images\\1734613232.139.0.jpg";
    cv::Mat frame = cv::imread(image_path);
    if (frame.empty()) {
        std::cerr << "Error loading image!" << std::endl;
        return -1;
    }

    // Query the input node names and the NxCxHxW input shape
    std::vector<std::string> input_node_names;
    size_t numInputNodes = session.GetInputCount();
    Ort::AllocatorWithDefaultOptions allocator;
    int input_w = 0;
    int input_h = 0;
    int batch_size = 0;
    int channel = 0;
    int w = frame.cols;
    int h = frame.rows;
    int _max = std::max(h, w);
    for (size_t i = 0; i < numInputNodes; i++) {
        auto input_name = session.GetInputNameAllocated(i, allocator);
        input_node_names.push_back(input_name.get());
        Ort::TypeInfo input_type_info = session.GetInputTypeInfo(i);
        auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();
        auto input_dims = input_tensor_info.GetShape();
        input_w = static_cast<int>(input_dims[3]);
        input_h = static_cast<int>(input_dims[2]);
        channel = static_cast<int>(input_dims[1]);
        batch_size = static_cast<int>(input_dims[0]);
        std::cout << "input format: NxCxHxW = " << input_dims[0] << "x" << input_dims[1] << "x" << input_dims[2] << "x" << input_dims[3] << std::endl;
    }

    // Pad the frame to a square (top-left aligned) so the resize keeps the aspect ratio
    cv::Mat image = cv::Mat::zeros(cv::Size(_max, _max), CV_8UC3);
    cv::Rect roi(0, 0, w, h);
    frame.copyTo(image(roi));

    // Scale to [0, 1], resize to the model input size and swap BGR -> RGB
    cv::Mat blob = cv::dnn::blobFromImage(image, 1 / 255.0, cv::Size(input_w, input_h), cv::Scalar(0, 0, 0), true, false);
    size_t tpixels = input_h * input_w * channel;
    std::array<int64_t, 4> input_shape_info{ batch_size, channel, input_h, input_w };
    auto allocator_info = Ort::MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU);
    Ort::Value input_tensor_ = Ort::Value::CreateTensor<float>(allocator_info, blob.ptr<float>(), tpixels, input_shape_info.data(), input_shape_info.size());

    // Query the output node names and the output shape (rows x columns)
    size_t numOutputNodes = session.GetOutputCount();
    std::vector<std::string> output_node_names;
    int output_h = 0;
    int output_w = 0;
    for (size_t i = 0; i < numOutputNodes; i++) {
        Ort::TypeInfo output_type_info = session.GetOutputTypeInfo(i);
        auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();
        auto output_dims = output_tensor_info.GetShape();
        output_h = static_cast<int>(output_dims[1]);
        output_w = static_cast<int>(output_dims[2]);
        auto out_name = session.GetOutputNameAllocated(i, allocator);
        output_node_names.push_back(out_name.get());
        std::cout << "output format : HxW = " << output_dims[1] << "x" << output_dims[2] << std::endl;
    }

    const std::array<const char*, 1> inputNames = { input_node_names[0].c_str() };
    const std::array<const char*, 1> outNames = { output_node_names[0].c_str() };
    std::vector<Ort::Value> ort_outputs;
    try {
        ort_outputs = session.Run(Ort::RunOptions{ nullptr }, inputNames.data(), &input_tensor_, inputNames.size(), outNames.data(), outNames.size());
    }
    catch (const std::exception& e) {
        // Without outputs there is nothing to decode, so stop here.
        std::cerr << e.what() << std::endl;
        return -1;
    }

    // Factors that map model-input coordinates back to the padded image
    float x_factor = image.cols / static_cast<float>(input_w);
    float y_factor = image.rows / static_cast<float>(input_h);

    // Each row is [cx, cy, w, h, objectness, class scores...]
    float* pdata = ort_outputs[0].GetTensorMutableData<float>();
    cv::Mat dout(output_h, output_w, CV_32F, pdata);
    std::vector<cv::Rect> boxes;
    std::vector<int> classIds;
    std::vector<float> confidences;
    int nLabelSize = static_cast<int>(labels.size());
    for (int i = 0; i < dout.rows; i++) {
        cv::Mat classes_scores = dout.row(i).colRange(5, nLabelSize + 5);
        cv::Point classIdPoint;
        double score;
        minMaxLoc(classes_scores, 0, &score, 0, &classIdPoint);
        float conf = dout.at<float>(i, 4);
        // Box (objectness) confidence
        if (conf < 0.15) {
            continue;
        }
        // Class confidence check
        if (score > 0.35) {
            float cx = dout.at<float>(i, 0);
            float cy = dout.at<float>(i, 1);
            float ow = dout.at<float>(i, 2);
            float oh = dout.at<float>(i, 3);
            int x = static_cast<int>((cx - 0.5 * ow) * x_factor);
            int y = static_cast<int>((cy - 0.5 * oh) * y_factor);
            int width = static_cast<int>(ow * x_factor);
            int height = static_cast<int>(oh * y_factor);
            boxes.push_back(cv::Rect(x, y, width, height));
            classIds.push_back(classIdPoint.x);
            confidences.push_back(static_cast<float>(score));
        }
    }

    // Non-maximum suppression: score threshold 0.70, IoU threshold 0.45
    std::vector<int> indexes;
    cv::dnn::NMSBoxes(boxes, confidences, 0.70, 0.45, indexes);
    for (size_t i = 0; i < indexes.size(); i++) {
        int index = indexes[i];
        int idx = classIds[index];
        cv::rectangle(frame, boxes[index], cv::Scalar(0, 255, 0), 1, 8);
        putText(frame, labels[idx] + std::to_string(confidences[index]), cv::Point(boxes[index].tl().x, boxes[index].tl().y), cv::FONT_HERSHEY_PLAIN, 2.0, cv::Scalar(0, 0, 255), 1, 3);
    }
    cv::imshow("YOLOv5+ONNXRUNTIME", frame);
    cv::waitKey(0);

    // session and session_options free themselves when they go out of scope;
    // calling release() would only give up ownership without freeing anything.
    return 0;
}
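The post-processing in scenario3.cpp does two things: it turns each output row into a box in image coordinates, and it then lets `cv::dnn::NMSBoxes` drop overlapping boxes. The following is a dependency-free sketch of both steps, meant only to illustrate the idea. `Box`, `Detection`, `decodeRow`, `iou` and `greedyNms` are hypothetical names introduced here, the row layout `[cx, cy, w, h, objectness, class scores...]` is assumed from the listing, and OpenCV's actual NMS implementation may differ in its details.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical plain-struct stand-ins for cv::Rect and a detection record.
struct Box { float x, y, w, h; };  // top-left corner plus size, in pixels
struct Detection { Box box; int classId; float score; };

// Decode one output row laid out as [cx, cy, w, h, objectness, class scores...].
// xFactor/yFactor map model-input pixels back to the padded source image.
// Returns false when the row fails either threshold.
bool decodeRow(const float* row, int numClasses, float xFactor, float yFactor,
               float objThreshold, float clsThreshold, Detection& out)
{
    if (row[4] < objThreshold) return false;
    const float* scores = row + 5;
    const float* best = std::max_element(scores, scores + numClasses);
    if (*best <= clsThreshold) return false;
    // Convert center/size to top-left/size and rescale.
    out.box = { (row[0] - 0.5f * row[2]) * xFactor,
                (row[1] - 0.5f * row[3]) * yFactor,
                row[2] * xFactor,
                row[3] * yFactor };
    out.classId = static_cast<int>(best - scores);
    out.score = *best;
    return true;
}

// Intersection over union of two boxes.
float iou(const Box& a, const Box& b)
{
    float x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    float x2 = std::min(a.x + a.w, b.x + b.w);
    float y2 = std::min(a.y + a.h, b.y + b.h);
    float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: visit detections by descending score and keep one only if it
// overlaps no already-kept detection by more than iouThreshold.
std::vector<std::size_t> greedyNms(const std::vector<Detection>& dets, float iouThreshold)
{
    std::vector<std::size_t> order(dets.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&](std::size_t a, std::size_t b) { return dets[a].score > dets[b].score; });
    std::vector<std::size_t> keep;
    for (std::size_t idx : order) {
        bool suppressed = false;
        for (std::size_t k : keep) {
            if (iou(dets[idx].box, dets[k].box) > iouThreshold) { suppressed = true; break; }
        }
        if (!suppressed) keep.push_back(idx);
    }
    return keep;
}
```

With a 640x640 model input and a 1280x1280 padded image, both factors are 2, so a row with center (320, 320) and size 100x50 maps to a 200x100 box whose top-left corner is (540, 590).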

var args

#include <fstream>
#include <iostream>
#include <string>
#include <locale>
#include <codecvt> // deprecated since C++17

// Values filled in by processArgs() from the -m, -c and -i options
std::string sysMode, sysClass, sysInput;

void blankLine()
{
    std::cout << " " << std::endl;
}

// UTF-8 std::string -> std::wstring
std::wstring string2wstring(const std::string& str)
{
    std::wstring_convert<std::codecvt_utf8_utf16<wchar_t>> conv;
    return conv.from_bytes(str);
}

// std::wstring -> UTF-8 std::string
std::string wstring2string(const std::wstring& wstr)
{
    std::wstring_convert<std::codecvt_utf8<wchar_t>> myconv;
    return myconv.to_bytes(wstr);
}

// C string -> std::string
std::string chars2string(const char* pChars)
{
    return std::string(pChars);
}

// UTF-8 C string -> std::wstring
std::wstring chars2wstring(const char* pChars)
{
    std::wstring_convert<std::codecvt_utf8<wchar_t>> conv;
    return conv.from_bytes(pChars);
}

// getopt() and optarg are POSIX facilities. The listing does not show where they are
// declared, so on Windows a getopt port has to be included alongside the headers above.
void processArgs(int argc, char* argv[])
{
    int opt;
    blankLine();
    while ((opt = getopt(argc, argv, "m:c:i:")) != -1)
    {
        switch (opt)
        {
        case 'm': // model file (.onnx)
            sysMode = chars2string(optarg);
            std::cout << "-m " << sysMode << std::endl;
            break;
        case 'c': // class-name file (.txt)
            sysClass = chars2string(optarg);
            std::cout << "-c " << sysClass << std::endl;
            break;
        case 'i': // input image
            sysInput = chars2string(optarg);
            std::cout << "-i " << sysInput << std::endl;
            break;
        default:
            std::cerr << "UNKNOWN : " << static_cast<char>(opt) << std::endl;
            break;
        }
    }
    blankLine();
}
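`getopt` is not part of the C++ standard library and MSVC does not ship it, so the listing above presumably depends on a third-party port. If that dependency is unwanted, the same `-m`, `-c`, `-i` options can be read with a few lines of standard C++. This is a minimal sketch under that assumption: `parseArgs` is a hypothetical helper, not something from the original project, and it accepts only the separated `-x value` form.

```cpp
#include <map>
#include <stdexcept>
#include <string>

// Hypothetical helper: parse "-m value -c value -i value" style arguments
// into a map keyed by the option letter.
// Throws std::invalid_argument on an unknown option or a missing value.
std::map<char, std::string> parseArgs(int argc, const char* const argv[],
                                      const std::string& allowed = "mci")
{
    std::map<char, std::string> options;
    for (int i = 1; i < argc; ++i) {  // argv[0] is the program name
        std::string arg = argv[i];
        bool known = arg.size() == 2 && arg[0] == '-' &&
                     allowed.find(arg[1]) != std::string::npos;
        if (!known)
            throw std::invalid_argument("unknown option: " + arg);
        if (i + 1 >= argc)
            throw std::invalid_argument("missing value for " + arg);
        options[arg[1]] = argv[++i];  // consume the value that follows the option
    }
    return options;
}
```

From `main`, `auto opts = parseArgs(argc, argv);` followed by `opts['m']`, `opts['c']` and `opts['i']` yields the same three strings that `processArgs` stores in `sysMode`, `sysClass` and `sysInput`.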

onnx.cmd

@REM First configuration (fireworksv1); overridden by the yolov5s values set below.
@SET PREDICT_MODE="D:\Deploy\scenario\models\fireworksv1.onnx"
@SET PREDICT_CLASS="D:\Deploy\scenario\models\fireworksv1.txt"
@SET PREDICT_INPUT="D:\Deploy\scenario\videos\demo_00301.jpg"

@REM Second configuration (yolov5s); these are the values that take effect.
@SET PREDICT_MODE="D:\Deploy\scenario\models\yolov5s.onnx"
@SET PREDICT_CLASS="D:\Deploy\scenario\models\yolov5s.txt"
@SET PREDICT_INPUT="D:\Deploy\scenario\images\1734613232.139.0.jpg"

@Proj-ONNX-1.20.1.exe -m %PREDICT_MODE% -c %PREDICT_CLASS% -i %PREDICT_INPUT%
