I. Testing OpenVINO's bundled demos

cd /app/intel/openvino/deployment_tools/inference_engine/samples

sh build_samples.sh

This builds several samples under /app/inference_engine_samples_build. Here we test object_detection_sample_ssd:

cd /app/inference_engine_samples_build/intel64/Release

./object_detection_sample_ssd -i /app/openvino_test/dog.jpg -m /app/openvino_test/ssd_mobilenet_v2_coco_2018_03_29/FP32/frozen_inference_graph.xml -d CPU -l /app/inference_engine_samples_build/intel64/Release/lib/libcpu_extension.so

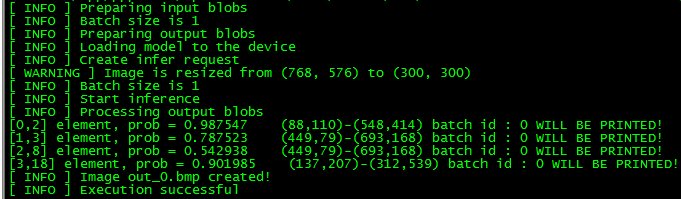

得到以下结果
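To make the sample's output easier to interpret, here is a minimal sketch of how SSD detection results are commonly decoded. It assumes the usual SSD output layout of N rows with 7 floats each (image_id, label, confidence, x_min, y_min, x_max, y_max), with coordinates normalized to [0, 1] and a negative image_id marking the end of the valid rows. The names `Detection` and `decodeDetections` and the 0.5 threshold are hypothetical and are not taken from the sample's source.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical container for one decoded box, in pixel coordinates.
struct Detection {
    int label;
    float confidence;
    int xmin, ymin, xmax, ymax;
};

// Decodes a flat SSD-style output buffer (assumed layout: 7 floats per row).
// Rows below `threshold` are skipped; a negative image_id stops the scan.
std::vector<Detection> decodeDetections(const std::vector<float>& output,
                                        int imageWidth, int imageHeight,
                                        float threshold = 0.5f) {
    assert(output.size() % 7 == 0);
    std::vector<Detection> result;
    for (std::size_t i = 0; i + 7 <= output.size(); i += 7) {
        const float* d = &output[i];
        if (d[0] < 0.0f) break;         // end-of-detections marker
        if (d[2] < threshold) continue; // low-confidence row
        result.push_back({static_cast<int>(d[1]), d[2],
                          static_cast<int>(d[3] * imageWidth),
                          static_cast<int>(d[4] * imageHeight),
                          static_cast<int>(d[5] * imageWidth),
                          static_cast<int>(d[6] * imageHeight)});
    }
    return result;
}
```

In a real program the float buffer would come from the network's output blob, and the image size from the input picture; both are left to the caller here.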

The following error may appear when running the sample:

./object_detection_sample_ssd: error while loading shared libraries: libformat_reader.so: cannot open shared object file: No such file or directory

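To fix it, add the samples' library directory to the loader search path. Open ~/.bashrc:

vi ~/.bashrc

Append the following line at the end, then run source ~/.bashrc (or open a new shell) so that it takes effect:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/app/inference_engine_samples_build/intel64/Release/lib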

II. Testing your own project

As an example, we test the ssd_mobilenetV2 model.

1. Edit ssd_mobilenetV2_openvino.cpp as follows:

#include <inference_engine.hpp>

#include <reshape_ssd_extension.hpp>

#include <ext_list.hpp>

#include <chrono>

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace InferenceEngine;

using namespace std;

template <typename T>

void matU8ToBlob(const cv::Mat& orig_image, InferenceEngine::Blob::Ptr& blob, int batchIndex = 0) {

InferenceEngine::SizeVector blobSize = blob->getTensorDesc().getDims();

const size_t width = blobSize[3];

const size_t height = blobSize[2];

const size_t channels = blobSize[1];

T* blob_data = blob->buffer().as<T*>();
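The listing stops where the function obtains the blob's data pointer. As a rough illustration of the layout conversion such a function has to perform, the sketch below copies an interleaved 8-bit image (HWC order, which is how cv::Mat stores pixels) into a planar NCHW buffer. It is written against plain std::vector so that it stands alone. The name `interleavedToPlanar` is hypothetical, and the sketch assumes the image has already been resized to the network's input width and height.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of OpenVINO): copies one interleaved
// 8-bit image in HWC order into slot `batchIndex` of a planar NCHW
// buffer, converting each byte to T.
template <typename T>
void interleavedToPlanar(const std::vector<std::uint8_t>& hwc,
                         std::size_t height, std::size_t width,
                         std::size_t channels,
                         std::vector<T>& nchw,
                         std::size_t batchIndex = 0) {
    const std::size_t imageSize = channels * height * width;
    assert(hwc.size() == imageSize);
    assert(nchw.size() >= (batchIndex + 1) * imageSize);

    // Start of this image's slot inside the batch.
    T* dst = nchw.data() + batchIndex * imageSize;

    for (std::size_t c = 0; c < channels; ++c) {
        for (std::size_t h = 0; h < height; ++h) {
            for (std::size_t w = 0; w < width; ++w) {
                // Source: pixel-major (HWC). Destination: channel-major (CHW).
                dst[c * height * width + h * width + w] =
                    static_cast<T>(hwc[(h * width + w) * channels + c]);
            }
        }
    }
}
```

With a real blob, `width`, `height`, and `channels` would come from `blobSize[3]`, `blobSize[2]`, and `blobSize[1]` as in the listing, and the destination would be `blob_data` rather than a vector.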
