AAAI 2020

EEMEFN: Low-Light Image Enhancement via Edge-Enhanced Multi-Exposure Fusion Network

Minfeng Zhu1,2∗, Pingbo Pan2,3†, Wei Chen1‡, Yi Yang2

1 State Key Lab of CAD&CG, Zhejiang University

2 The ReLER Lab, University of Technology Sydney

3 Baidu Research

minfeng_zhu@zju.edu.cn, chenwei@cad.zju.edu.cn, pingbo.pan@student.uts.edu.au, yi.yang@uts.edu.au

Abstract

This work focuses on extremely low-light image enhancement, which aims to improve image brightness and reveal hidden information in dark areas. Recently, image enhancement approaches have made impressive progress. However, existing methods still suffer from three main problems: (1) low-light images are usually high-contrast, and existing methods may fail to recover image details in extremely dark or bright areas; (2) current methods cannot precisely correct the color of low-light images; (3) when object edges are unclear, the pixel-wise loss may treat pixels of different objects equally and produce blurry images.

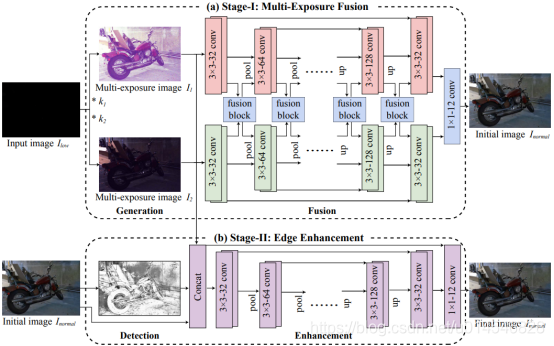

In this paper, we propose a two-stage method called Edge-Enhanced Multi-Exposure Fusion Network (EEMEFN) to enhance extremely low-light images. In the first stage, we employ a multi-exposure fusion module to address the high-contrast and color-bias issues. We synthesize a set of images with different exposure times from a single image and construct an accurate normal-light image by combining well-exposed areas under different illumination conditions. Thus, it can produce realistic initial images with correct color from extremely noisy low-light images. In the second stage, we introduce an edge enhancement module to refine the initial images with the help of edge information. Therefore, our method can reconstruct high-quality images with sharp edges when minimizing the pixel-wise loss.

Experiments on the See-in-the-Dark dataset indicate that our EEMEFN approach achieves state-of-the-art performance.

Method

- Stage-I: Multi-Exposure Fusion

The multi-exposure fusion (MEF) module is introduced to fuse images with different exposure ratios into one high-quality initial image. As shown in Figure 1 (a), the MEF module includes two main steps: generation and fusion.

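In the generation step, a set of multi-exposure images under different illumination conditions is produced by scaling the pixel values with different amplification ratios:

$I_i = \mathrm{Clip}(I_{low} \cdot k_i)$, where $\mathrm{Clip}(x) = \min(x, 1)$ and $\{k_1, k_2, \ldots, k_N\}$ are the exposure ratios.

The synthesized images can be well exposed in regions that are under-exposed in the original image.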
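The generation step can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the function name `synthesize_exposures`, the toy 2x2 image, and the ratios `[1, 4, 8]` are assumptions chosen for the example, and the input is assumed to be normalized to [0, 1].

```python
import numpy as np

def synthesize_exposures(i_low, ratios):
    """Return one scaled and clipped copy of i_low per exposure ratio."""
    i_low = np.asarray(i_low, dtype=np.float32)
    # I_i = Clip(I_low * k_i), with Clip(x) = min(x, 1)
    return [np.minimum(i_low * k, 1.0) for k in ratios]

# Hypothetical 2x2 single-channel image, normalized to [0, 1]
i_low = np.array([[0.01, 0.05],
                  [0.10, 0.50]], dtype=np.float32)

# Hypothetical exposure ratios; the notes do not list the actual values
exposures = synthesize_exposures(i_low, ratios=[1, 4, 8])
```

With larger ratios the dark pixels move into a usable range while the already bright pixel saturates at 1, which is why several ratios are needed to cover a high-contrast scene.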

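In the fusion step, partial information from the synthesized images is fused into an initial image with well-exposed content. In particular, fusion blocks are proposed to share the image features obtained from different convolutional layers, so that the valuable information in the synthesized images is fully exploited.

$I_{normal} = \mathrm{MEN}(I_0, I_1, \ldots, I_N)$

Details of the MEN model: each $I_i$ is transformed by a U-Net, and at every stage of each U-Net the features pass through a fusion block for feature fusion. The outputs of the U-Nets then go through a 1x1 convolution, which fuses the branches into the preliminary enhanced image $I_{normal}$.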
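The final 1x1 convolution that merges the branches can be illustrated with NumPy, since a 1x1 convolution is a per-pixel linear map over channels. This sketch covers only that last step; the U-Nets and the fusion blocks are not reproduced. The function name `fuse_1x1`, the shapes, and the averaging weights are assumptions for illustration; a trained network would learn its own weights.

```python
import numpy as np

def fuse_1x1(branch_outputs, weight, bias):
    """Fuse per-branch outputs with a 1x1 convolution.

    branch_outputs: list of N arrays, each of shape (C, H, W)
    weight: array of shape (C_out, N * C)
    bias: array of shape (C_out,)
    """
    # Concatenate the branches along the channel axis: (N * C, H, W)
    stacked = np.concatenate(branch_outputs, axis=0)
    # Apply the same linear map over channels at every pixel
    fused = np.einsum("oc,chw->ohw", weight, stacked)
    return fused + bias[:, None, None]

# Hypothetical setup: 3 branches, 3 channels each, 4x4 pixels
rng = np.random.default_rng(0)
branches = [rng.random((3, 4, 4)) for _ in range(3)]

# Illustrative weights that average the branches channel by channel
weight = np.tile(np.eye(3), (1, 3)) / 3.0  # shape (3, 9)
bias = np.zeros(3)

i_normal = fuse_1x1(branches, weight, bias)
```

Averaging is used here only because its result is easy to check by hand; learned weights would let the network favor whichever branch is best exposed for a given channel.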

- Stage-II: Edge Enhancement

The edge enhancement (EE) module aims to enhance the initial image generated by the MEF module. As shown in Figure 1 (b), the EE module consists of two main steps: detection and enhancement.

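In the detection step, the edge map is generated from the initial image rather than from the input image. The input image and the multi-exposure images are extremely noisy, which makes edge extraction very difficult. The MEF module effectively removes the noise and produces a clean normal-light image, from which an accurate edge map can be obtained. Edge detection follows the method of (Liu et al. 2017).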

Liu, Y.; Cheng, M.-M.; Hu, X.; Wang, K.; and Bai, X. 2017. Richer convolutional features for edge detection. In CVPR.

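In the enhancement step, the EE module enhances the initial image by exploiting the edge information. With the help of the predicted edge map, the EE module can generate smoother object surfaces with consistent color and recover rich textures and sharp edges.

The final output is trained in a supervised manner with an L1 loss.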
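For reference, the L1 loss is the absolute difference between the prediction and the ground truth. The sketch below assumes the loss is averaged over all pixels; the notes do not state the reduction, and the function name `l1_loss` and the toy arrays are hypothetical.

```python
import numpy as np

def l1_loss(pred, target):
    """Mean absolute error between two arrays of the same shape."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.mean(np.abs(pred - target)))

# Hypothetical 2x2 prediction and ground truth
pred = np.array([[0.2, 0.8], [0.5, 1.0]])
target = np.array([[0.0, 1.0], [0.5, 0.5]])

loss = l1_loss(pred, target)  # (0.2 + 0.2 + 0.0 + 0.5) / 4
```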

Dataset: See-in-the-Dark dataset
