楔子

使用sqoop将RDBMS数据导入到HBase当中

1 配置sqoop-env.sh

主要配置如下

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/opt/module/hadoop250

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/opt/module/hadoop250

#set the path to where bin/hbase is available

export HBASE_HOME=/opt/module/hbase0986

#Set the path to where bin/hive is available

export HIVE_HOME=/opt/module/hive013

#Set the path for where zookeper config dir is

export ZOOCFGDIR=/opt/module/zookeeper345/conf

export ZOOKEEPER_HOME=/opt/module/zookeeper345

2MySQL数据

CREATE DATABASE /*!32312 IF NOT EXISTS*/`life` /*!40100 DEFAULT CHARACTER SET utf8 */;

USE `life`;

/*Table structure for table `k_s_fam` */

DROP TABLE IF EXISTS `k_s_fam`;

CREATE TABLE `k_s_fam` (

`id` int(11) NOT NULL DEFAULT '0',

`name` varchar(25) DEFAULT NULL,

`birthN` int(11) DEFAULT NULL,

`birthG` int(11) DEFAULT NULL,

`rela` varchar(40) DEFAULT '友',

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

/*Data for the table `k_s_fam` */

insert into `k_s_fam`(`id`,`name`,`birthN`,`birthG`,`rela`) values

(141,'修',0,603,'友'),

(143,'刘',511,622,'友'),

(144,'李',0,727,'友'),

(145,'邱',0,916,'友'),

(146,'刘',1006,1115,'友'),

(147,'陈',0,1208,'友'),

(148,'党',0,1209,'友'),

(149,'王',0,1229,'友'),

(150,'郭',619,0,'友'),

(151,'孙',709,0,'友'),

(152,'邢',1121,113,'友'),

(153,'弓',123,0,'友'),

(154,'王',918,0,'友'),

(155,'高',214,0,'友'),

(156,'南',206,0,'友'),

(157,'李',0,100,'10月多那会'),

(158,'马',528,711,'友'),

(159,'陈',506,603,'友'),

(160,'林',619,810,'友');

3 执行sqoop导入数据操作

hbase中不存在的表 导入的过程中会自动创建

bin/sqoop import \

--connect jdbc:mysql://192.168.48.3:3306/life \

--username root \

--password 123456 \

--table k_s_fam \

--columns "id,name,birthN,birthG,rela" \

--column-family "info" \

--hbase-create-table \

--hbase-row-key "id" \

--hbase-table "hbase_fam" \

--num-mappers 1 \

--split-by id

sqoop 执行部分过程

[grq@hadoop sqoop145]$ bin/sqoop import \

> --connect jdbc:mysql://192.168.48.3:3306/life \

> --username root \

> --password 123456 \

> --table k_s_fam \

> --columns "id,name,birthN,birthG,rela" \

> --column-family "info" \

> --hbase-create-table \

> --hbase-row-key "id" \

> --hbase-table "hbase_fam" \

> --num-mappers 1 \

> --split-by id

Warning: /opt/module/sqoop145/bin/../../hcatalog does not exist! HCatalog jobs will fail.

………………

# 省略 部分内容

………………

18/10/29 09:19:06 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=158346

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=0

HDFS: Number of read operations=1

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=8602

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=8602

Total vcore-seconds taken by all map tasks=8602

Total megabyte-seconds taken by all map tasks=8808448

Map-Reduce Framework

Map input records=12

Map output records=12

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=118

CPU time spent (ms)=1780

Physical memory (bytes) snapshot=106479616

Virtual memory (bytes) snapshot=2066251776

Total committed heap usage (bytes)=30474240

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

18/10/29 09:19:06 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 31.8101 seconds (0 bytes/sec)

18/10/29 09:19:06 INFO mapreduce.ImportJobBase: Retrieved 12 records.

[grq@hadoop sqoop145]$

4 在hive中创建hbase外部表关联hbase表

此操作可以不需要,只是为了方便观察数据,而采取的

CREATE EXTERNAL TABLE relevance_hbase_fam(

id int,

name string,

birthN int,

birthG int,

rela string)

STORED BY

'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

WITH SERDEPROPERTIES ("hbase.columns.mapping" =

":key,info:name,info:birthN,info:birthG,info:rela")

TBLPROPERTIES ("hbase.table.name" = "hbase_fam");

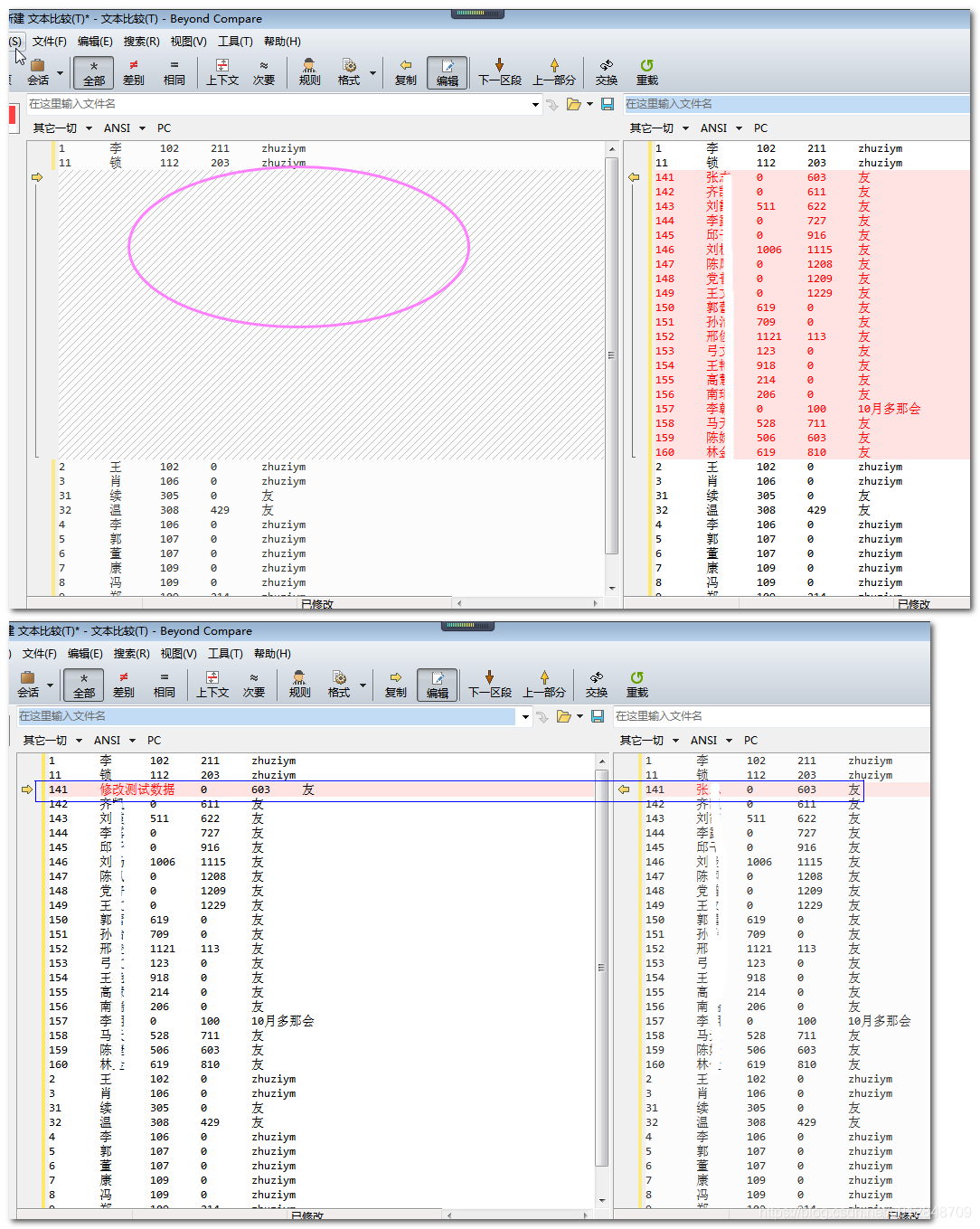

5 多次导入不同的数据测试

hive查询结果

hive (default)> select * from relevance_hbase_fam;

OK

relevance_hbase_fam.id relevance_hbase_fam.name relevance_hbase_fam.birthn relevance_hbase_fam.birthg relevance_hbase_fam.rela

1 李 102 211 zhuziym

11 锁 112 203 zhuziym

2 王 102 0 zhuziym

3 肖 106 0 zhuziym

31 续 305 0 友

32 温 308 429 友

4 李 106 0 zhuziym

5 郭 107 0 zhuziym

6 董 107 0 zhuziym

7 康 109 0 zhuziym

8 冯 109 0 zhuziym

9 郑 109 214 zhuziym

Time taken: 0.371 seconds, Fetched: 12 row(s)

hive (default)> select * from relevance_hbase_fam;

1:相同的数据导入2次,hbase中不会出现重复数据

2:2次先后导入不同的数据,hbase都会保留这些数据

3:导入rowkey相同的数据,其他字段不同,会根据rowkey在hbase中保留最新导入的数据

本文介绍如何使用SQOOP将MySQL数据库中的数据导入HBase,包括配置环境变量、创建MySQL表、执行SQOOP导入命令及在Hive中创建HBase外部表进行数据验证的过程。

本文介绍如何使用SQOOP将MySQL数据库中的数据导入HBase,包括配置环境变量、创建MySQL表、执行SQOOP导入命令及在Hive中创建HBase外部表进行数据验证的过程。

2957

2957

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?