1. Add the dependency

<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka_2.11</artifactId>
    <version>0.9.0.1</version>
</dependency>

2. Common Kafka configuration class

public class KafkaProperties {

    public static final String ZK = "127.0.0.1:2181";

    public static final String BROKER = "127.0.0.1:9092";

    public static final String TOPIC = "steven";

    public static final String GROUP_ID = "test_group";
}
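Constants like these are usually turned into a `java.util.Properties` object before being handed to a Kafka client. The following is a minimal sketch of that step, using the property keys of the old Scala producer that this post relies on. The class `ProducerSettings` and its method `forBroker` are hypothetical helpers introduced for illustration; they are not part of Kafka.

```java
import java.util.Properties;

// Hypothetical helper (not part of Kafka): keeps the producer settings in one place.
final class ProducerSettings {

    private ProducerSettings() {
    }

    // Builds the properties for the old Scala producer from a broker address and an acks level.
    static Properties forBroker(String broker, String acks) {
        if (broker == null || broker.isEmpty()) {
            throw new IllegalArgumentException("broker must not be empty");
        }
        Properties properties = new Properties();
        properties.put("metadata.broker.list", broker);
        properties.put("serializer.class", "kafka.serializer.StringEncoder");
        properties.put("request.required.acks", acks);
        return properties;
    }

    public static void main(String[] args) {
        Properties properties = forBroker("127.0.0.1:9092", "1");
        System.out.println(properties.getProperty("metadata.broker.list"));
    }
}
```

Building the properties in one helper makes it easier to switch the acks level between environments without touching the sending code.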

3. Producer code example

import kafka.javaapi.producer.Producer;
import kafka.producer.KeyedMessage;
import kafka.producer.ProducerConfig;

import java.util.Properties;

public class KafkaProducer extends Thread {

    // Broker address to connect to
    private String broker;

    // Topic to publish to
    private String topic;

    // Client used to send messages
    private Producer<Integer, String> producer;

    public KafkaProducer(String broker, String topic) {
        this.broker = broker;
        this.topic = topic;

        Properties properties = new Properties();
        properties.put("metadata.broker.list", broker);
        properties.put("serializer.class", "kafka.serializer.StringEncoder");
        // Acknowledgement level, to guard against data loss:
        // use 1 in production when requirements are not strict, -1 when they are.
        properties.put("request.required.acks", "1");

        producer = new Producer<Integer, String>(new ProducerConfig(properties));
    }

    @Override
    public void run() {
        // Sequence number of the next message
        int messageNo = 1;

        while (true) {
            String message = "message_" + messageNo;
            producer.send(new KeyedMessage<Integer, String>(topic, message));
            System.out.println("Sent: " + message);
            messageNo++;

            // Send one message every two seconds
            try {
                Thread.sleep(2000);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
}
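A send loop written as `while (true)` runs until the JVM exits. The following is a minimal sketch of an interrupt-aware variant that can be stopped from another thread. It uses only the standard library: the class name `StoppableSender` is hypothetical, and the `Consumer<String>` sink is an assumed stand-in for the call to `producer.send(...)`, so the sketch runs without a broker.

```java
import java.util.function.Consumer;

// Hypothetical sketch: a periodic send loop that exits when the thread is interrupted.
final class StoppableSender extends Thread {

    // Stands in for producer.send(...); any callback that accepts the message text.
    private final Consumer<String> sink;
    private final long pauseMillis;

    StoppableSender(Consumer<String> sink, long pauseMillis) {
        this.sink = sink;
        this.pauseMillis = pauseMillis;
    }

    @Override
    public void run() {
        int messageNo = 1;
        while (!Thread.currentThread().isInterrupted()) {
            sink.accept("message_" + messageNo);
            messageNo++;
            try {
                Thread.sleep(pauseMillis);
            } catch (InterruptedException e) {
                // sleep() clears the interrupt flag; restore it so the loop condition exits.
                Thread.currentThread().interrupt();
            }
        }
    }

    public static void main(String[] args) throws InterruptedException {
        StoppableSender sender = new StoppableSender(m -> System.out.println("Sent: " + m), 200L);
        sender.start();
        Thread.sleep(500L);
        sender.interrupt(); // request shutdown
        sender.join();      // wait for the loop to finish
    }
}
```

In a real producer, the same shutdown path would also be a natural place to close the Kafka client.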

4. Consumer code example

import kafka.consumer.Consumer;
import kafka.consumer.ConsumerConfig;
import kafka.consumer.ConsumerIterator;
import kafka.consumer.KafkaStream;
import kafka.javaapi.consumer.ConsumerConnector;

import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

public class KafkaConsumer extends Thread {

    private String topic;

    public KafkaConsumer(String topic) {
        this.topic = topic;
    }

    private ConsumerConnector createConnector() {
        Properties properties = new Properties();
        properties.put("zookeeper.connect", KafkaProperties.ZK);
        properties.put("group.id", KafkaProperties.GROUP_ID);
        return Consumer.createJavaConsumerConnector(new ConsumerConfig(properties));
    }

    @Override
    public void run() {
        ConsumerConnector consumer = createConnector();

        // Request one stream for our topic
        Map<String, Integer> topicCountMap = new HashMap<String, Integer>();
        topicCountMap.put(topic, 1);

        // Key: topic name
        // Value: the list of data streams for that topic
        Map<String, List<KafkaStream<byte[], byte[]>>> messageStream = consumer.createMessageStreams(topicCountMap);

        // Take the single stream we requested and read each message as it arrives
        KafkaStream<byte[], byte[]> stream = messageStream.get(topic).get(0);
        ConsumerIterator<byte[], byte[]> iterator = stream.iterator();

        while (iterator.hasNext()) {
            // Decode the raw payload with an explicit charset rather than the platform default
            String message = new String(iterator.next().message(), StandardCharsets.UTF_8);
            System.out.println("rec: " + message);
        }
    }
}

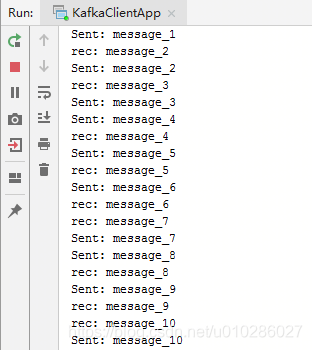
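The consumer receives each payload as a raw `byte[]`, so the bytes have to be decoded into a `String` with the same charset the producer used to encode them. The following is a minimal sketch of that decoding step, assuming the producer encodes text as UTF-8 (to my knowledge the default for `kafka.serializer.StringEncoder`). The class `MessageDecoder` is a hypothetical helper, not part of Kafka.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper (not part of Kafka): decodes a message payload as UTF-8 text.
final class MessageDecoder {

    private MessageDecoder() {
    }

    // Naming the charset avoids depending on the JVM's platform default encoding.
    static String decode(byte[] payload) {
        return new String(payload, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] payload = "message_1".getBytes(StandardCharsets.UTF_8);
        System.out.println("rec: " + decode(payload));
    }
}
```

Calling `new String(bytes)` without a charset falls back to the platform default, which can garble non-ASCII text when producer and consumer run on differently configured machines.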

5. Test class

public class KafkaClientApp {

    public static void main(String[] args) {
        new KafkaProducer(KafkaProperties.BROKER, KafkaProperties.TOPIC).start();
        new KafkaConsumer(KafkaProperties.TOPIC).start();
    }
}

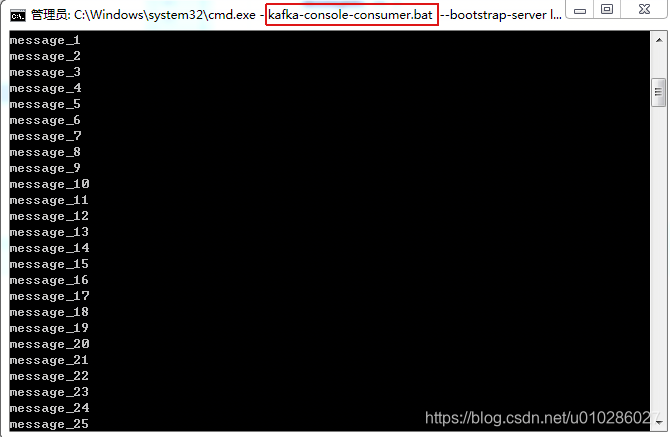

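This article walks through using Apache Kafka for messaging: adding the dependency, defining the configuration parameters, writing the producer and consumer, and running the test class.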
